Tool Learning with Large Language Models: A Survey (2405.17935v3)

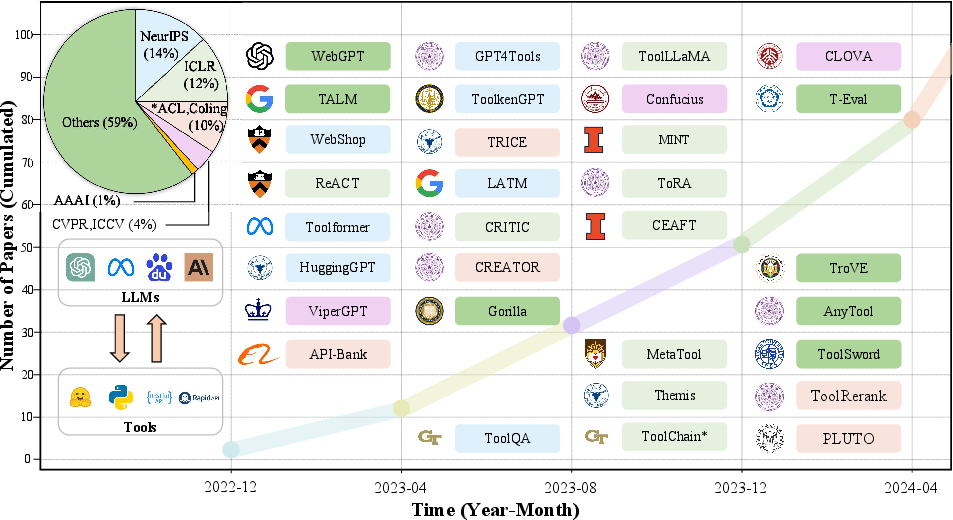

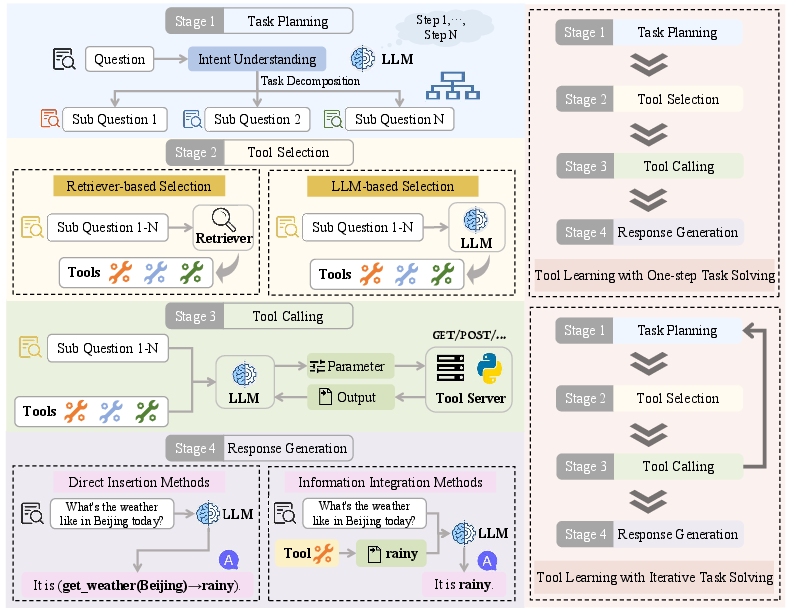

Abstract: Recently, tool learning with large language models (LLMs) has emerged as a promising paradigm for augmenting the capabilities of LLMs to tackle highly complex problems. Despite growing attention and rapid advancements in this field, the existing literature remains fragmented and lacks systematic organization, posing barriers to entry for newcomers. This gap motivates us to conduct a comprehensive survey of existing work on tool learning with LLMs. In this survey, we review the literature from two primary aspects: (1) why tool learning is beneficial and (2) how tool learning is implemented, enabling a comprehensive understanding of tool learning with LLMs. We first explore the "why" by reviewing both the benefits of tool integration and the inherent benefits of the tool learning paradigm from six specific aspects. In terms of the "how", we systematically review the literature according to a taxonomy of four key stages in the tool learning workflow: task planning, tool selection, tool calling, and response generation. Additionally, we provide a detailed summary of existing benchmarks and evaluation methods, categorizing them according to their relevance to the different stages. Finally, we discuss current challenges and outline potential future directions, aiming to inspire both researchers and industrial developers to further explore this emerging and promising area. We also maintain a GitHub repository that continually tracks relevant papers and resources in this rising area at https://github.com/quchangle1/LLM-Tool-Survey.
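To make the four-stage workflow (task planning, tool selection, tool calling, response generation) concrete, here is a minimal toy sketch. It is not taken from the survey: the tools (`calculator`, `weather`), the `Tool` record, the " and "-splitting planner, the keyword-overlap selector, and the per-tool argument extractors are all hypothetical stand-ins for steps that an LLM would perform in a real system.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Tool:
    name: str
    description: str
    # Hypothetical stand-in for LLM argument filling from a tool schema.
    extract_arg: Callable[[str], str]
    func: Callable[[str], str]


def calculator(expression: str) -> str:
    # Toy tool: only handles "a + b" on integers (no eval, for safety).
    left, right = expression.split("+")
    return str(int(left) + int(right))


def weather(city: str) -> str:
    # Toy tool: canned answer; a real tool would call an external API.
    return f"sunny in {city}"


TOOLS = [
    Tool("calculator", "add two integers",
         lambda s: s.split("add", 1)[1].strip(), calculator),
    Tool("weather", "current weather for a city",
         lambda s: s.rsplit(" in ", 1)[1].strip(), weather),
]


def plan(query: str) -> list[str]:
    # Stage 1, task planning: decompose the query into subtasks.
    # A real system would prompt the LLM; here we just split on " and ".
    return [part.strip() for part in query.split(" and ")]


def select_tool(subtask: str, tools: list[Tool]) -> Tool:
    # Stage 2, tool selection: pick the tool whose description shares the
    # most words with the subtask (a crude stand-in for a retriever).
    words = set(subtask.lower().split())
    return max(tools, key=lambda t: len(words & set(t.description.lower().split())))


def call_tool(tool: Tool, subtask: str) -> str:
    # Stage 3, tool calling: fill in the argument and execute the tool.
    return tool.func(tool.extract_arg(subtask))


def respond(query: str, results: list[str]) -> str:
    # Stage 4, response generation: an LLM would normally write the answer;
    # here we simply join the tool outputs.
    return f"Answer to '{query}': " + "; ".join(results)


def tool_learning_pipeline(query: str, tools: list[Tool]) -> str:
    results = [call_tool(select_tool(sub, tools), sub) for sub in plan(query)]
    return respond(query, results)


print(tool_learning_pipeline("add 2 + 3 and weather in Paris", TOOLS))
# prints: Answer to 'add 2 + 3 and weather in Paris': 5; sunny in Paris
```

Keeping each stage as a separate function mirrors the taxonomy: any single stage (for example, swapping the keyword selector for a dense retriever) can be replaced without touching the others.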

