Trajectory Anomaly Detection with Language Models

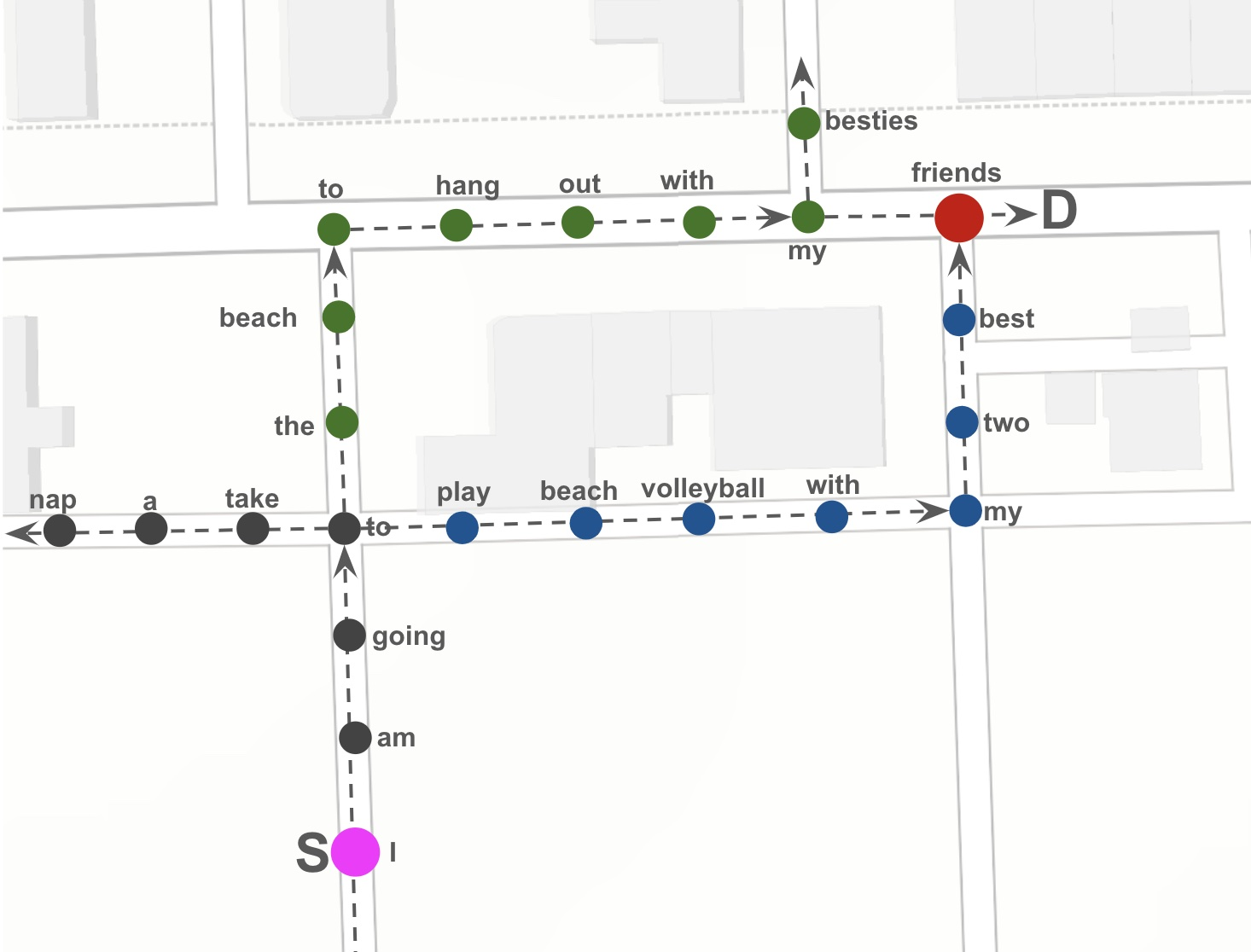

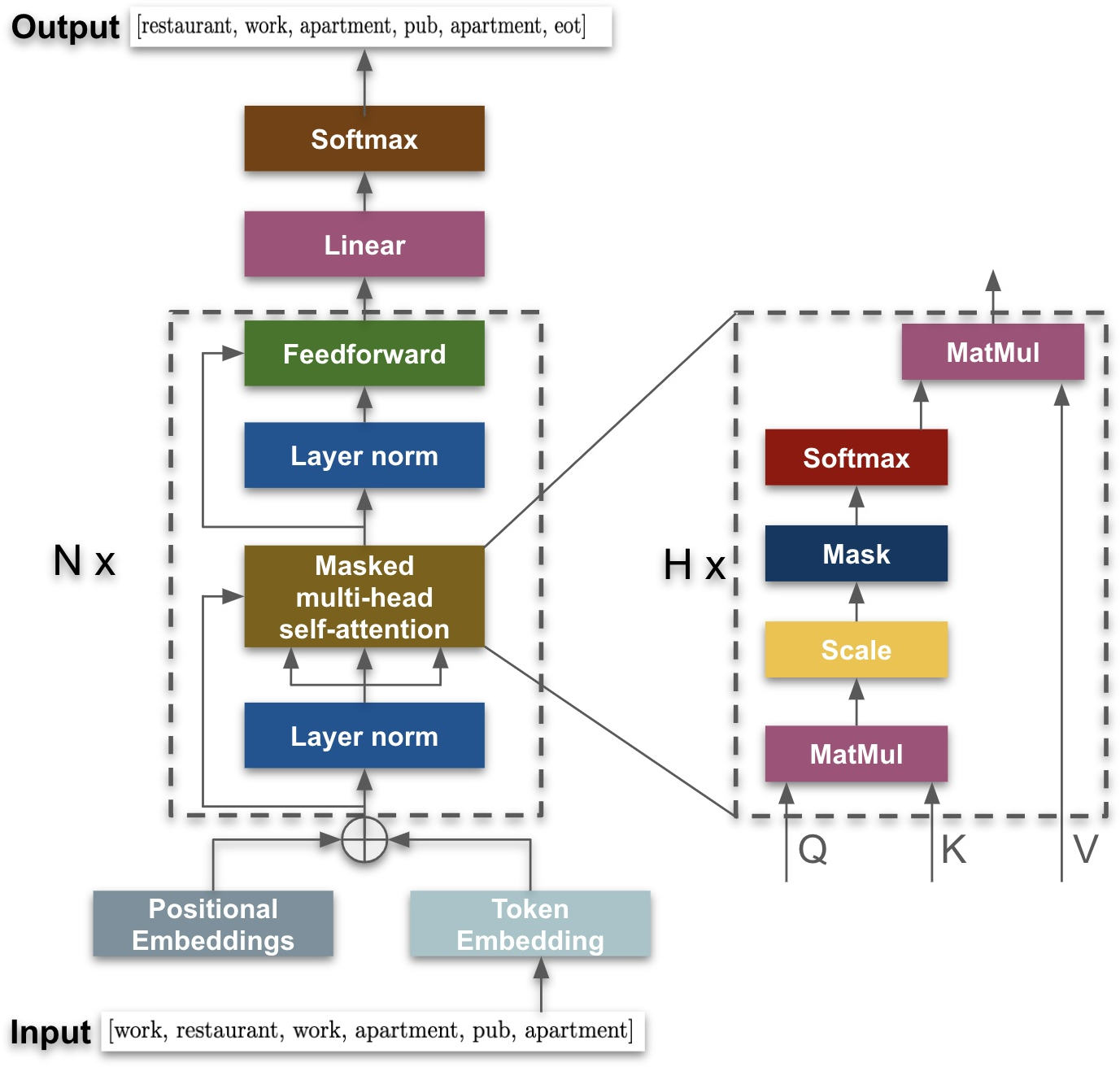

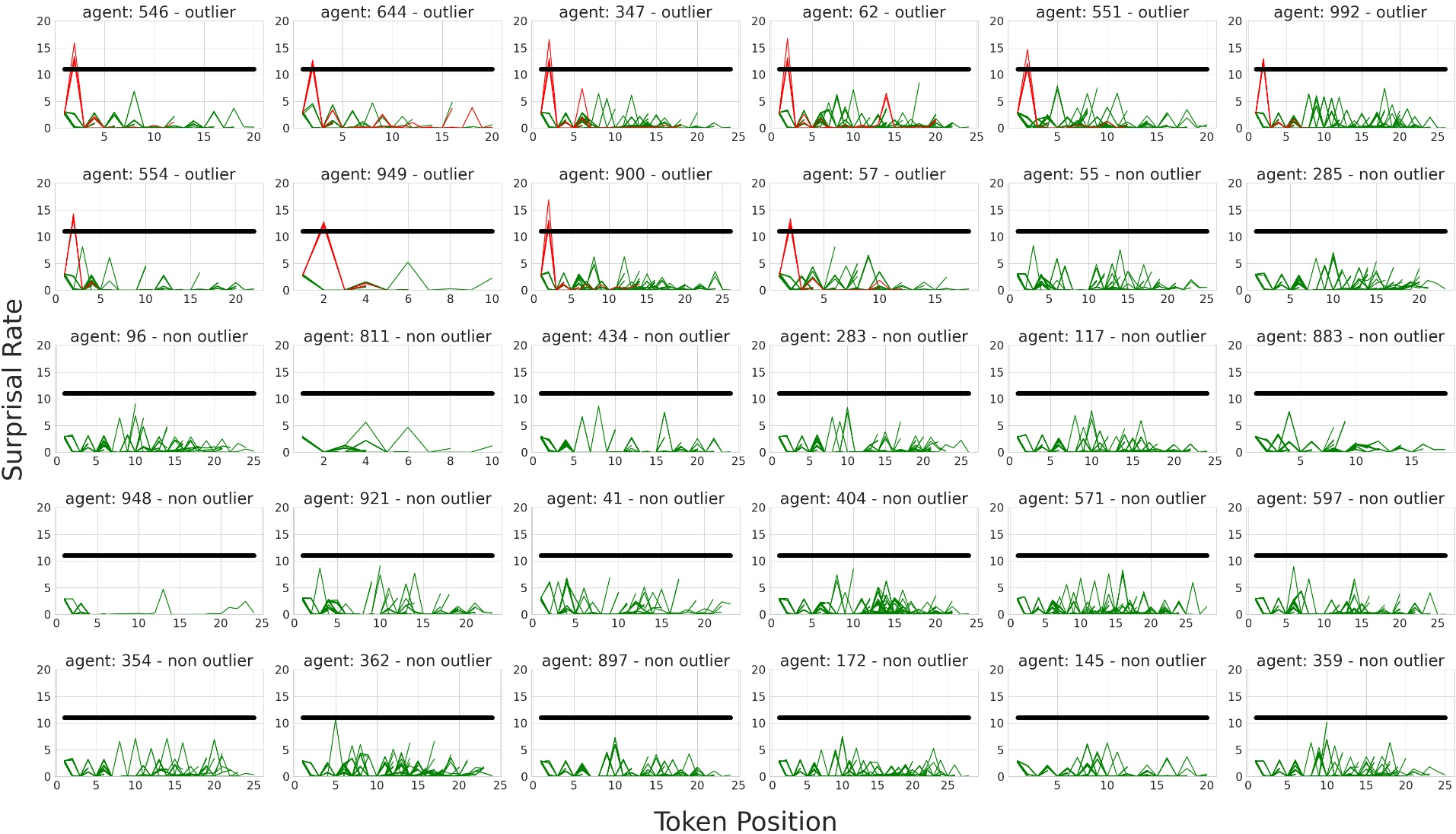

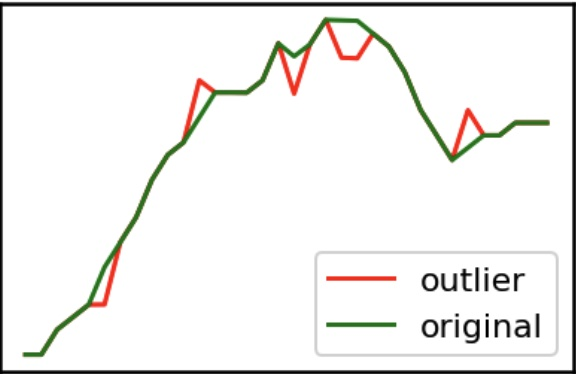

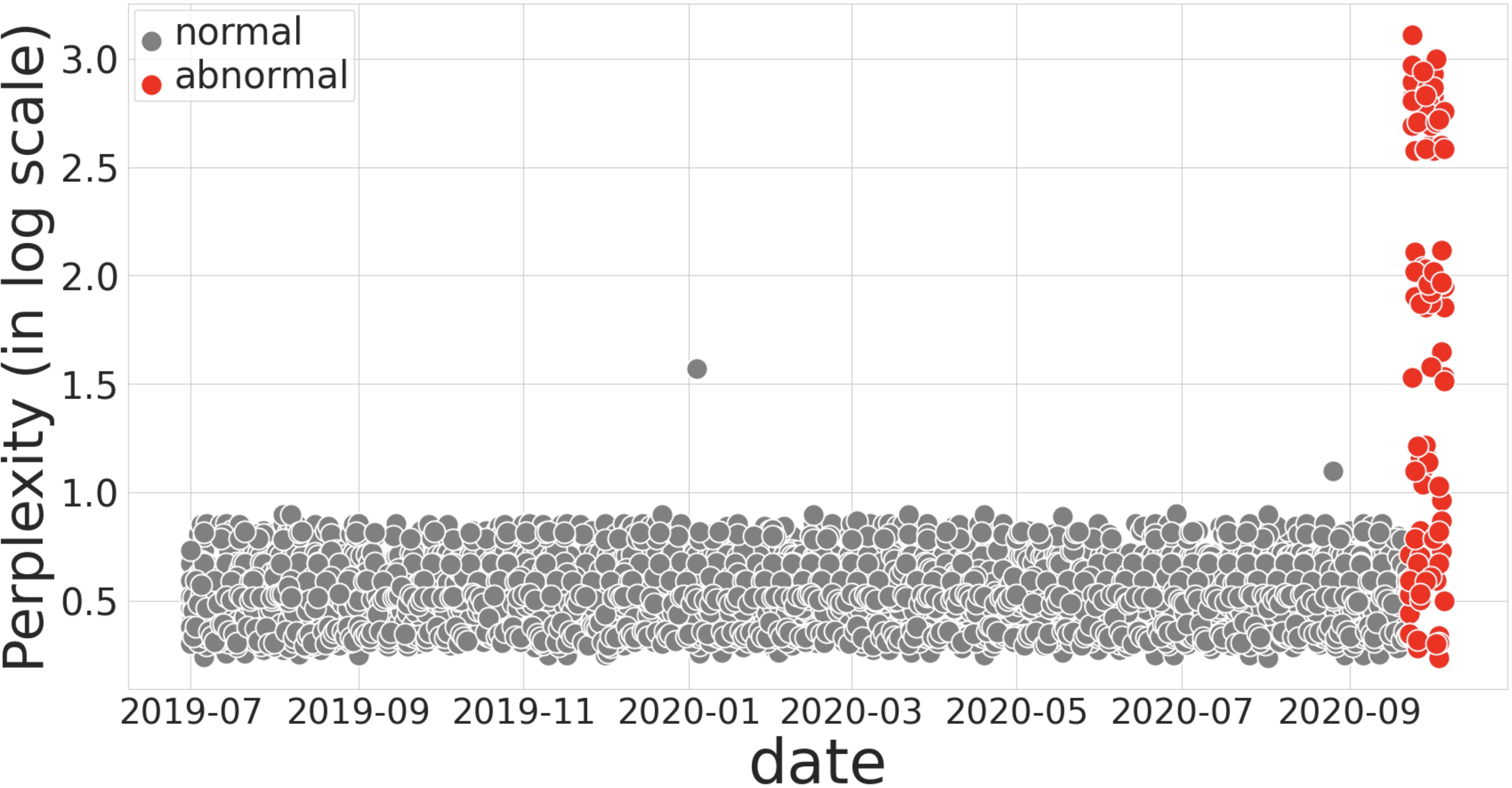

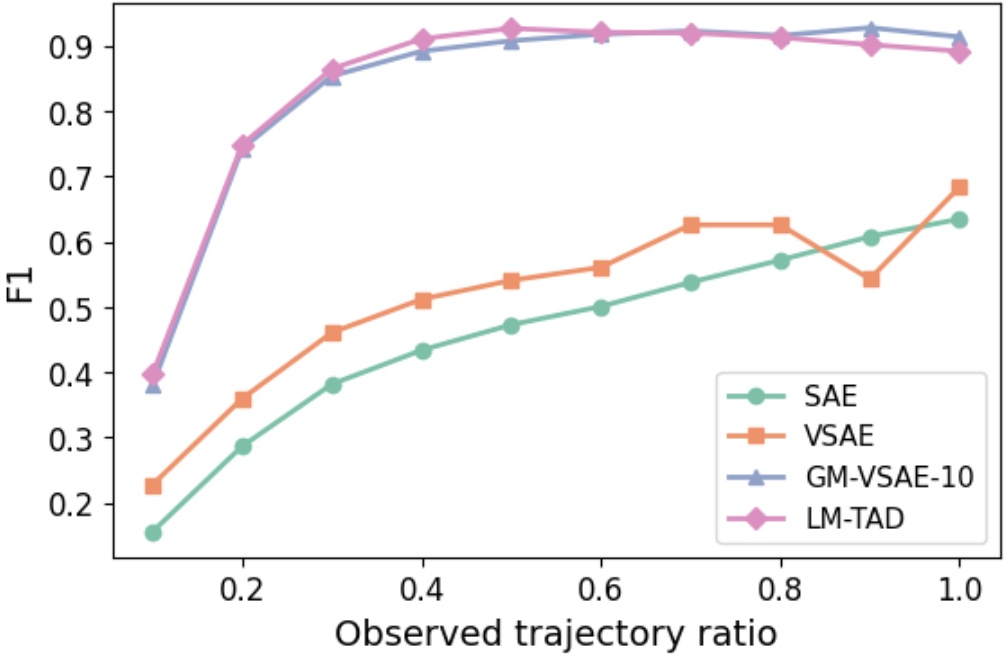

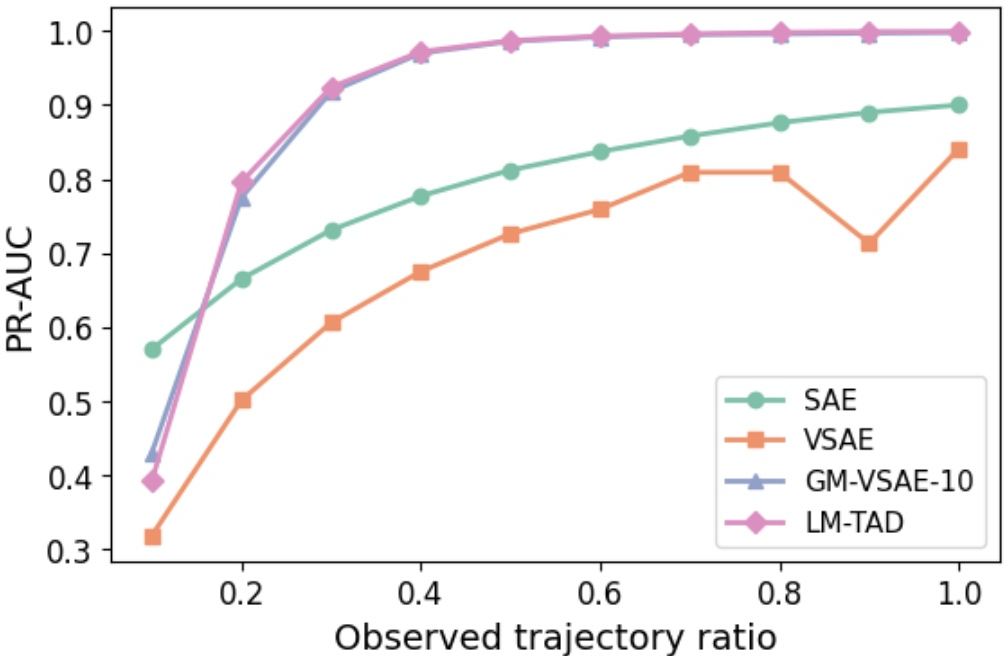

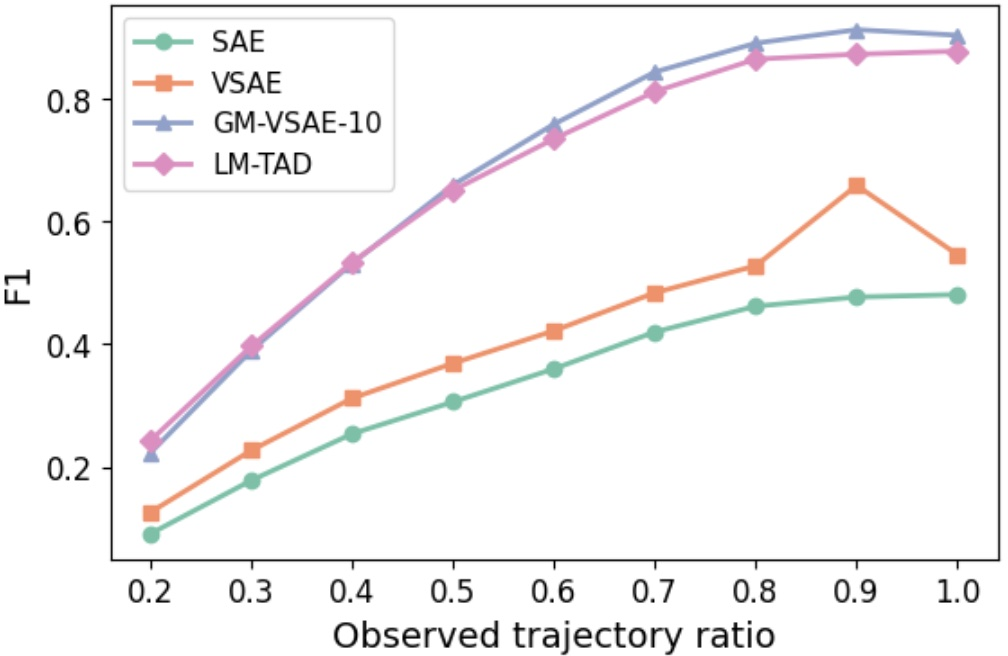

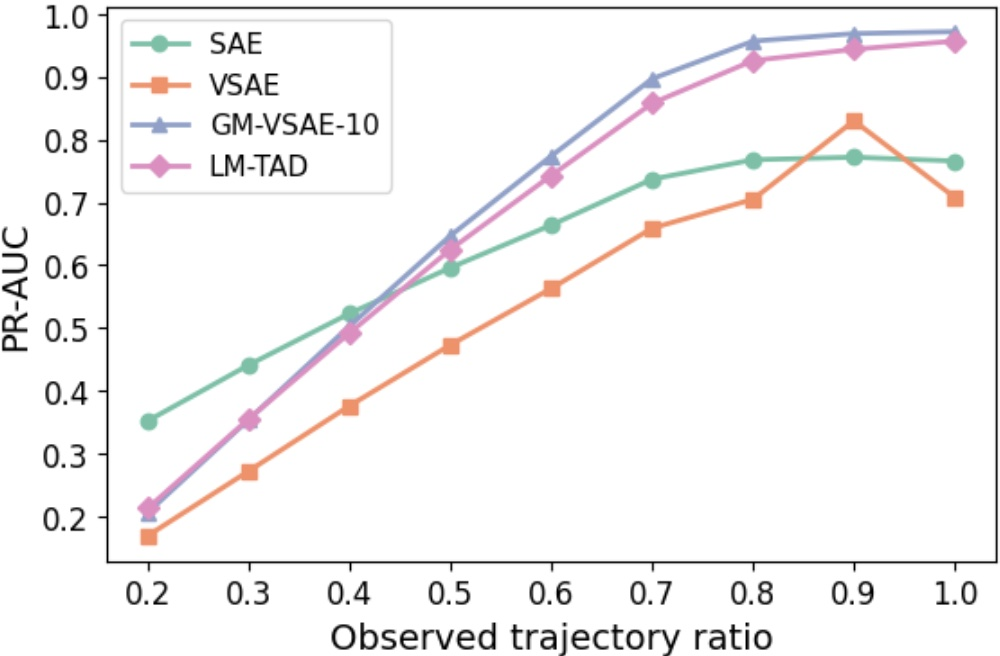

Abstract: This paper presents LM-TAD, an approach to trajectory anomaly detection based on an autoregressive causal-attention model. The method exploits a parallel between sentences and trajectories: both are ordered sequences whose elements must cohere according to external rules and context. By treating trajectories as sequences of tokens, the model learns a probability distribution over trajectories. This lets it identify anomalous trajectories and the specific locations within them that are unlikely under that distribution. We add user-specific tokens so that the model captures individual behavior patterns and can detect anomalies relative to a given user's context. Experiments on synthetic and real-world datasets show that LM-TAD is effective. It outperforms existing methods on the Pattern of Life (PoL) dataset, where it detects user-contextual anomalies, and it achieves competitive results on the Porto taxi dataset. We also introduce perplexity and surprisal rate as metrics for detecting outlier trajectories and pinpointing the anomalous locations within them. LM-TAD supports several trajectory representations, including GPS coordinates, staypoints, and activity types, so it can handle diverse trajectory data. Finally, the approach suits online trajectory anomaly detection: caching the key-value states of the attention mechanism avoids recomputing them for each new point, which substantially reduces latency.
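To make the scoring idea concrete, here is a minimal sketch of perplexity- and surprisal-based detection under stated assumptions. It is not the authors' implementation. The model is assumed to expose a hypothetical `NextTokenProbs` callable that returns p(next token | prefix). The prefix starts with a user-specific token, followed by the locations seen so far. A small hand-made bigram table (`TOY_BIGRAM`, made-up numbers) stands in for the trained causal-attention model. Per-location surprisal is taken as -log p(x_t | user, x_<t), and perplexity as the exponential of the mean surprisal. The paper's exact "surprisal rate" definition may differ. The names `detect`, `ppl_threshold`, and `surprisal_threshold` are illustrative, not from the source.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

# Hypothetical interface standing in for the trained causal-attention model:
# given a prefix (user token followed by the locations seen so far), return
# p(next token | prefix) as a dict. Tokens missing from the dict get probability 0.
NextTokenProbs = Callable[[Sequence[str]], Dict[str, float]]


def surprisal_scores(tokens: Sequence[str], model: NextTokenProbs,
                     user_token: str, eps: float = 1e-12) -> List[float]:
    """Per-location surprisal -log p(x_t | user, x_<t), in nats."""
    prefix: List[str] = [user_token]  # user-specific token conditions every step
    scores: List[float] = []
    for tok in tokens:
        p = model(prefix).get(tok, 0.0)
        scores.append(-math.log(max(p, eps)))  # clamp to avoid log(0)
        prefix.append(tok)
    return scores


def perplexity(scores: Sequence[float]) -> float:
    """exp of the mean surprisal; higher means less likely under the model."""
    if not scores:
        raise ValueError("empty trajectory")
    return math.exp(sum(scores) / len(scores))


def detect(tokens: Sequence[str], model: NextTokenProbs, user_token: str,
           ppl_threshold: float, surprisal_threshold: float
           ) -> Tuple[bool, float, List[Tuple[int, str]]]:
    """Flag the whole trajectory by perplexity and list high-surprisal locations."""
    scores = surprisal_scores(tokens, model, user_token)
    ppl = perplexity(scores)
    hotspots = [(i, tok) for i, (tok, s) in enumerate(zip(tokens, scores))
                if s > surprisal_threshold]
    return ppl > ppl_threshold, ppl, hotspots


# Toy bigram table (made-up numbers) used only to exercise the functions;
# it conditions on the last token of the prefix only.
TOY_BIGRAM: Dict[str, Dict[str, float]] = {
    "user_A": {"home": 0.9, "work": 0.1},
    "home": {"work": 0.8, "gym": 0.15, "bar": 0.05},
    "work": {"home": 0.7, "gym": 0.3},
    "gym": {"home": 1.0},
    "bar": {"home": 1.0},
}


def toy_model(prefix: Sequence[str]) -> Dict[str, float]:
    return TOY_BIGRAM.get(prefix[-1], {})


if __name__ == "__main__":
    for traj in (["home", "work", "home"], ["home", "bar", "home"]):
        print(traj, detect(traj, toy_model, "user_A", 2.0, 2.0))
```

With these toy numbers, `["home", "bar", "home"]` has a perplexity of about 2.81 and its second location (`"bar"`, probability 0.05) is the only one above the surprisal threshold. `["home", "work", "home"]` stays at about 1.26 and is not flagged. In an online setting, the prefix grows by one token per new point. The sketch calls `model(prefix)` from scratch each time, which is exactly the repeated work that caching the attention key-value states is meant to avoid in a transformer. The thresholds here are arbitrary and would in practice have to be chosen, for example, on held-out data.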
