- The paper introduces RHYTHM, a framework that integrates hierarchical temporal tokenization with frozen LLMs for improved human mobility prediction.

- It employs spatio-temporal feature encoding and semantic context integration, achieving a 2.4% gain in Accuracy@1 and a 1.0% gain in Accuracy@5 over the strongest competing approach.

- The approach reduces computational complexity and supports scalability, enabling efficient deployment on large urban mobility datasets.

RHYTHM: Efficient Mobility Prediction with LLMs

The paper introduces RHYTHM (Reasoning with Hierarchical Temporal Tokenization for Human Mobility), a novel framework that leverages LLMs for human mobility prediction by integrating multi-scale temporal tokenization with the reasoning capabilities of pretrained LLMs. It addresses the limitations of existing approaches by decomposing trajectories into meaningful segments, tokenizing them into discrete representations, and enriching each token with pre-computed prompt embeddings derived from a frozen LLM. Evaluation across three real-world datasets demonstrates enhanced accuracy and computational efficiency compared to state-of-the-art methods.

Methodological Innovations

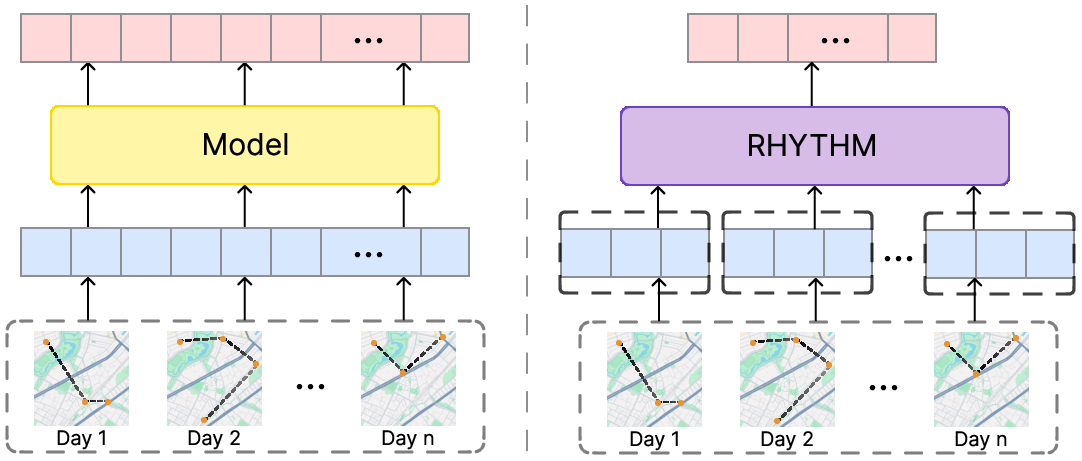

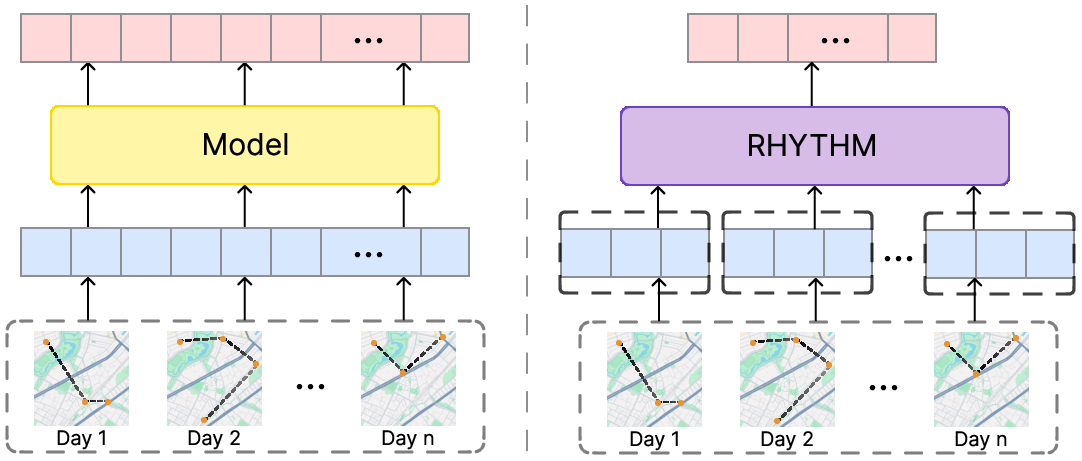

RHYTHM's architecture (Figure 1) integrates spatio-temporal feature encoding, temporal tokenization, semantic context integration, and cross-representational mobility prediction. For spatio-temporal feature encoding, the framework uses trainable embeddings to capture the periodic nature of human mobility, concatenating time-of-day and day-of-week information. Spatial representation combines location-specific categorical embeddings with transformed geographic coordinates. Temporal tokenization then divides the embedded representation into distinct segments, employing intra-segment attention for local patterns and inter-segment attention for long-range dependencies.
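The encoding and tokenization steps described above can be illustrated with a minimal NumPy sketch. It is not the paper's implementation. The embedding sizes, the 48-slot day, the additive fusion of temporal and spatial features, the mean pooling, and the weight-free attention are all assumptions made for illustration, and the randomly initialised tables stand in for embeddings that would be trained in practice.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: 48 half-hour slots per day, 7 days, 100 candidate locations.
NUM_SLOTS, NUM_DAYS, NUM_LOCATIONS = 48, 7, 100
tables = {
    "tod": rng.normal(size=(NUM_SLOTS, 8)),       # time-of-day embeddings
    "dow": rng.normal(size=(NUM_DAYS, 8)),        # day-of-week embeddings
    "loc": rng.normal(size=(NUM_LOCATIONS, 12)),  # categorical location embeddings
}
coord_proj = rng.normal(size=(2, 4))              # linear map for (x, y) coordinates


def encode_steps(tod, dow, loc, coords):
    """Return one feature vector per time step, shape (T, 16)."""
    temporal = np.concatenate([tables["tod"][tod], tables["dow"][dow]], axis=-1)
    spatial = np.concatenate([tables["loc"][loc], coords @ coord_proj], axis=-1)
    # Assumption: temporal and spatial features are fused by addition.
    return temporal + spatial


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(x):
    """Scaled dot-product self-attention without learned weights; x is (..., n, d)."""
    d = x.shape[-1]
    scores = x @ np.swapaxes(x, -1, -2) / np.sqrt(d)
    return softmax(scores, axis=-1) @ x


def tokenize_trajectory(embedded, segment_len):
    """Turn (T, d) step features into (N, d) segment tokens."""
    T, d = embedded.shape
    if T % segment_len != 0:
        raise ValueError("T must be a multiple of segment_len")
    segments = embedded.reshape(T // segment_len, segment_len, d)
    local = self_attention(segments)   # intra-segment attention: local patterns
    tokens = local.mean(axis=1)        # assumption: mean-pool each segment
    return self_attention(tokens)      # inter-segment attention: long-range links


# One week of half-hourly observations for a single synthetic user.
T = 336
steps = np.arange(T)
embedded = encode_steps(
    tod=steps % NUM_SLOTS,
    dow=(steps // NUM_SLOTS) % NUM_DAYS,
    loc=rng.integers(0, NUM_LOCATIONS, size=T),
    coords=rng.uniform(size=(T, 2)),
)
segment_tokens = tokenize_trajectory(embedded, segment_len=48)
print(embedded.shape, segment_tokens.shape)  # (336, 16) (7, 16)
```

With daily segments, the 336 time steps collapse into 7 tokens, which is the sequence the downstream attention layers would operate on.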

Figure 2: Motivation for RHYTHM. By partitioning trajectories into discrete tokens instead of a continuous stream, RHYTHM more effectively captures recurring mobility patterns.

Semantic context integration is achieved via a hierarchical prompting mechanism, with structured descriptions generated for historical segments and task-specific prompts constructed for future timestamps. These prompts are processed through frozen pre-trained LLMs, and semantic embeddings are pre-computed offline to minimize computational overhead. Finally, a learnable projection head transforms the fused spatio-temporal and semantic signals into location-specific scores, enabling mobility predictions.
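A rough sketch of the offline pre-computation idea follows. The prompt templates, the function names, and `toy_encoder` are hypothetical. In particular, `toy_encoder` is a hash-based stand-in for a frozen LLM, used only so the example runs without model weights, and the paper's actual prompts are not reproduced here.

```python
import hashlib
from typing import Callable, Dict, List, Sequence

DAY_NAMES = ["Monday", "Tuesday", "Wednesday", "Thursday",
             "Friday", "Saturday", "Sunday"]


def describe_segment(day_of_week: int, location_ids: Sequence[int]) -> str:
    """Hypothetical template describing one historical segment."""
    # dict.fromkeys removes repeated locations while keeping first-visit order.
    visited = ", ".join(str(loc) for loc in dict.fromkeys(location_ids))
    return f"On {DAY_NAMES[day_of_week]}, the user visited locations {visited}."


def task_prompt(day_of_week: int, horizon: int) -> str:
    """Hypothetical task-specific prompt for the future timestamps."""
    return (f"Predict the user's locations for the next {horizon} "
            f"time steps on {DAY_NAMES[day_of_week]}.")


def toy_encoder(text: str, dim: int = 8) -> List[float]:
    """Stand-in for a frozen LLM: a deterministic vector derived from a hash."""
    digest = hashlib.sha256(text.encode("utf-8")).digest()
    return [byte / 255.0 for byte in digest[:dim]]


def precompute_embeddings(
    prompts: Sequence[str],
    encoder: Callable[[str], List[float]],
) -> Dict[str, List[float]]:
    """Encode each distinct prompt once so training never calls the encoder."""
    cache: Dict[str, List[float]] = {}
    for prompt in prompts:
        if prompt not in cache:
            cache[prompt] = encoder(prompt)
    return cache


history = [(0, [12, 12, 40, 7]), (1, [12, 40, 40, 7])]
prompts = [describe_segment(day, locs) for day, locs in history]
prompts.append(task_prompt(2, horizon=48))
cache = precompute_embeddings(prompts, toy_encoder)
print(prompts[0])  # On Monday, the user visited locations 12, 40, 7.
```

Because the encoder is frozen, the cached vectors never change, so the cache can be built once and reused across epochs.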

Experimental Validation

The methodology is empirically validated using three urban mobility datasets from Kumamoto, Sapporo, and Hiroshima, obtained from YJMob100K. RHYTHM is benchmarked against established baselines, including LSTM, DeepMove, PatchTST, iTransformer, TimeLLM, PMT, ST-MoE-BERT, CMHSA, COLA, and Mobility-LLM. Performance is measured using Accuracy@k and Mean Reciprocal Rank (MRR), complemented by Dynamic Time Warping (DTW) and BLEU for trajectory-level evaluation.
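For reference, Accuracy@k and MRR can be computed from per-location scores as in the sketch below. The function names and the toy scores are illustrative, and ties are resolved optimistically (only strictly higher scores push the target down), which may differ from the paper's evaluation code.

```python
from typing import Sequence


def rank_of_target(scores: Sequence[float], target: int) -> int:
    """1-based rank of the true location when sorted by descending score."""
    target_score = scores[target]
    return 1 + sum(1 for s in scores if s > target_score)


def accuracy_at_k(all_scores: Sequence[Sequence[float]],
                  targets: Sequence[int], k: int) -> float:
    """Fraction of predictions whose true location is among the top k."""
    hits = sum(rank_of_target(s, t) <= k for s, t in zip(all_scores, targets))
    return hits / len(targets)


def mean_reciprocal_rank(all_scores: Sequence[Sequence[float]],
                         targets: Sequence[int]) -> float:
    """Average of 1 / rank of the true location."""
    total = sum(1.0 / rank_of_target(s, t) for s, t in zip(all_scores, targets))
    return total / len(targets)


# Three predictions over three candidate locations.
scores = [
    [0.1, 0.7, 0.2],  # true location 1 is ranked 1st
    [0.5, 0.3, 0.2],  # true location 1 is ranked 2nd
    [0.2, 0.3, 0.5],  # true location 0 is ranked 3rd
]
targets = [1, 1, 0]

acc1 = accuracy_at_k(scores, targets, k=1)    # 1/3
acc2 = accuracy_at_k(scores, targets, k=2)    # 2/3
mrr = mean_reciprocal_rank(scores, targets)   # (1 + 1/2 + 1/3) / 3
print(acc1, acc2, mrr)
```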

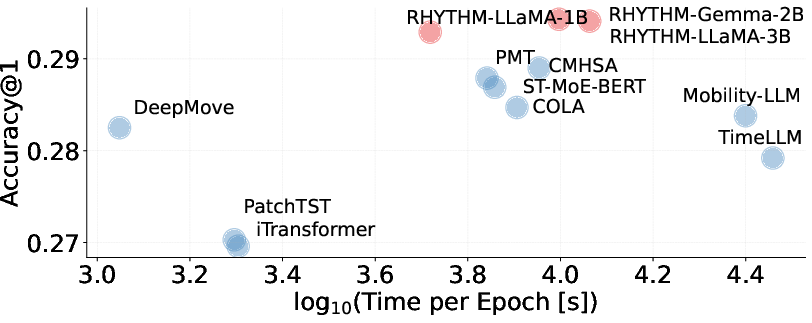

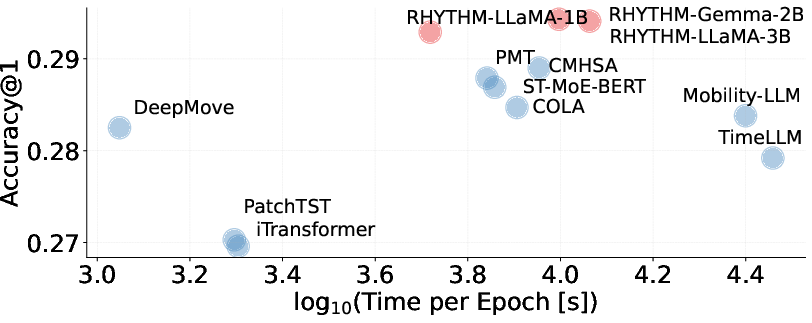

The results demonstrate that RHYTHM consistently surpasses baseline methods, achieving superior performance across most criteria and cities. In particular, RHYTHM delivers a 2.4% gain in Accuracy@1 and 1.0% improvement in Accuracy@5 relative to the strongest competing approach. Further analyses evaluate spatial accuracy, temporal patterns, computational efficiency, model scaling, and component-wise contributions.

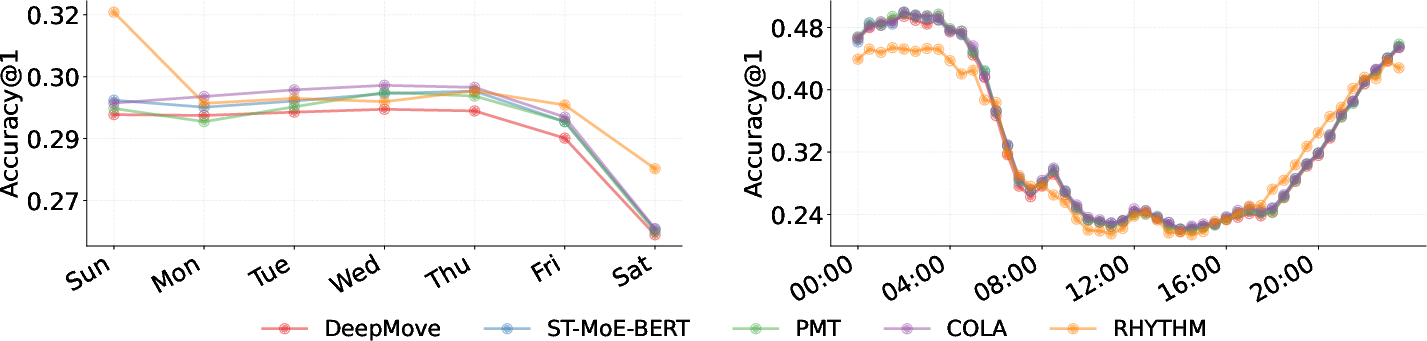

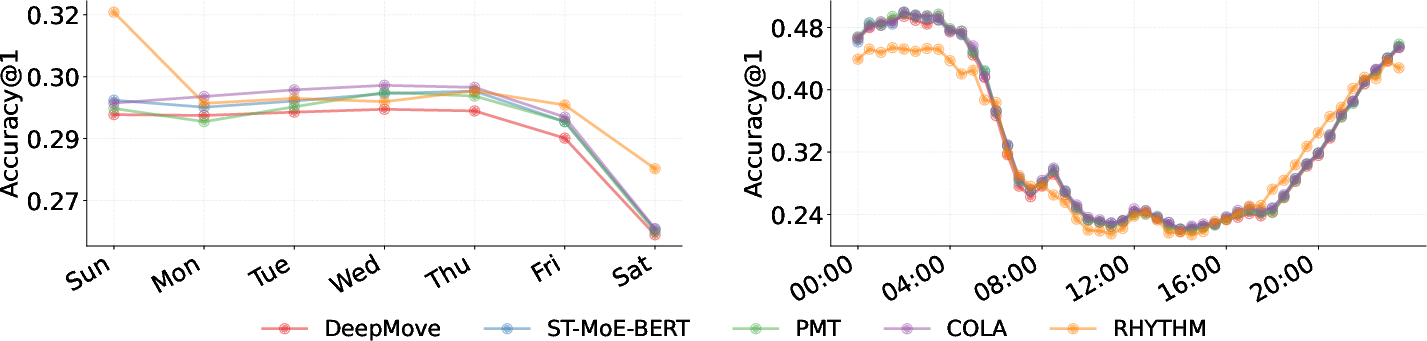

Figure 3: Temporal performance patterns of RHYTHM and baselines on Sapporo data showing weekly (left) and daily (right) variations. The results demonstrate systematic performance fluctuations across both diurnal and weekly cycles.

Efficiency and Scalability

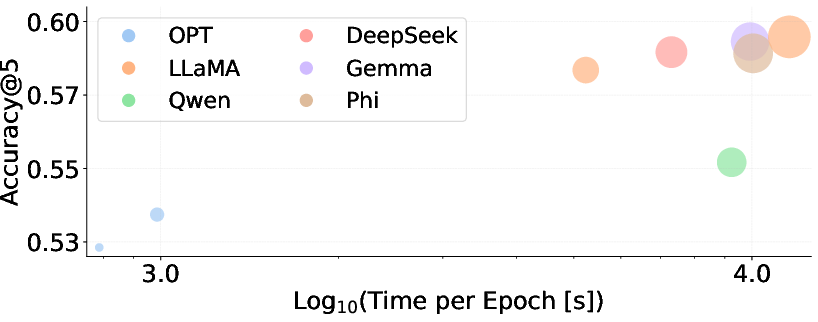

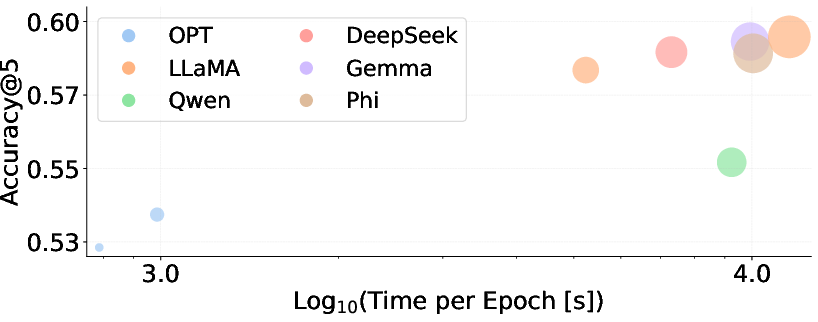

The paper emphasizes RHYTHM's architectural design for computational efficiency and parameter utilization. Pre-computation of semantic representations, combined with temporal segmentation, reduces attention complexity from $O((T+H)^2)$ to $O((N+H)^2)$, where $T$ is the number of historical time steps, $N$ the number of segments they are grouped into, and $H$ the prediction horizon. Keeping the LLM parameters frozen accelerates convergence and reduces the memory footprint. These optimizations enable RHYTHM to handle extensive trajectory sequences without compromising prediction accuracy, making it suitable for resource-constrained environments and large-scale mobility applications. Figure 4 shows computational performance across different LLM backbones under identical experimental settings.
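To give a sense of scale for the complexity reduction, the sketch below counts pairwise attention interactions before and after segmentation. The sizes are assumptions chosen for illustration (one week of half-hour steps, daily segments, a one-day horizon), not the paper's reported configuration, and the count ignores the cost of attention inside each segment.

```python
def attention_pairs(num_tokens: int) -> int:
    """Number of query-key pairs in full self-attention over num_tokens."""
    return num_tokens ** 2


def segmentation_savings(T: int, H: int, segment_len: int):
    """Compare (T + H)^2 against (N + H)^2 with N = T / segment_len."""
    if T % segment_len != 0:
        raise ValueError("T must be a multiple of segment_len")
    N = T // segment_len
    full = attention_pairs(T + H)
    segmented = attention_pairs(N + H)
    return N, full, segmented, full / segmented


# Assumed sizes: T = 7 days x 48 slots, segments of one day, H = 48 future slots.
N, full, segmented, ratio = segmentation_savings(T=336, H=48, segment_len=48)
print(N, full, segmented)  # 7 147456 3025
print(round(ratio, 1))     # 48.7
```

Under these assumed sizes the sequence-level attention handles roughly 49 times fewer pairs, and the gap widens as the history grows while the segment length stays fixed.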

Figure 4: Computational efficiency versus predictive accuracy trade-offs for RHYTHM and baseline approaches on the Sapporo dataset.

Implications and Future Directions

The research demonstrates the potential of LLMs as contextual reasoning modules in mobility prediction while maintaining computational tractability. The framework's modular design facilitates adaptation across different pre-trained LLMs without architectural modifications. The work opens avenues for enhancing the robustness and transferability of foundation models within spatio-temporal contexts. Future efforts could focus on developing sophisticated fine-tuning strategies, leveraging parameter-efficient adaptation and model compression techniques to enhance performance while minimizing computational requirements. Further exploration of autoregressive formulations for temporal sequences and mitigation of inherent biases within training datasets are also identified as valuable directions for future research.

Conclusion

RHYTHM presents a novel architecture for mobility prediction that encodes spatio-temporal relationships through temporal segmentation and utilizes semantic representations to model periodic behaviors. By incorporating frozen pre-trained LLMs as contextual reasoning modules, RHYTHM captures the underlying decision dynamics, particularly for non-routine trajectories, while maintaining computational tractability.