LoGAH: Predicting 774-Million-Parameter Transformers using Graph HyperNetworks with 1/100 Parameters (2405.16287v1)

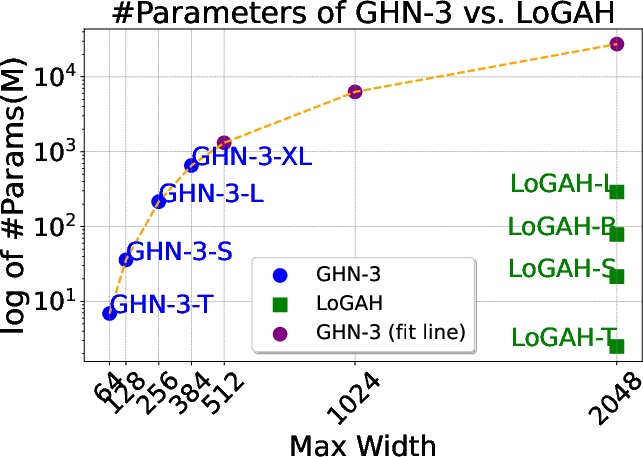

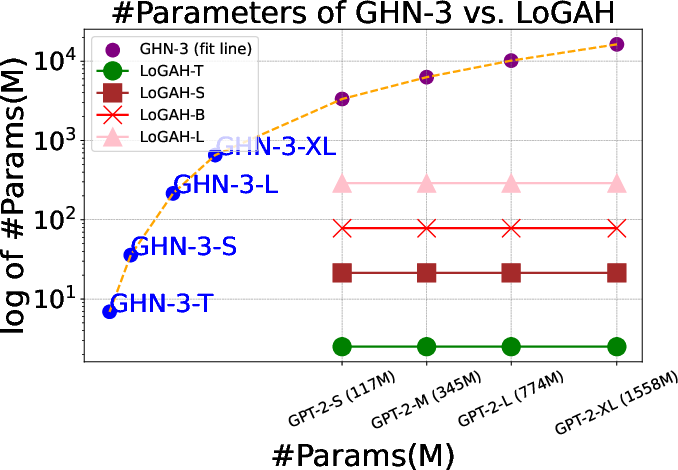

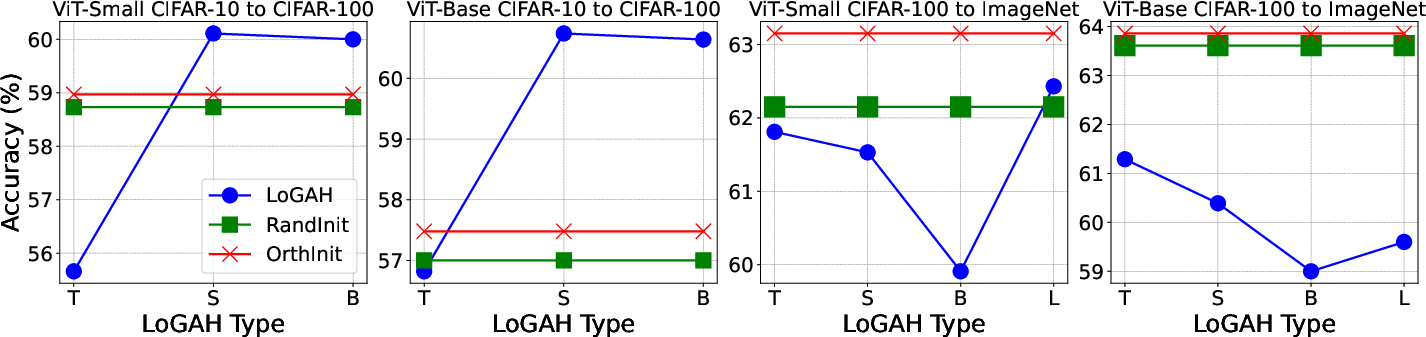

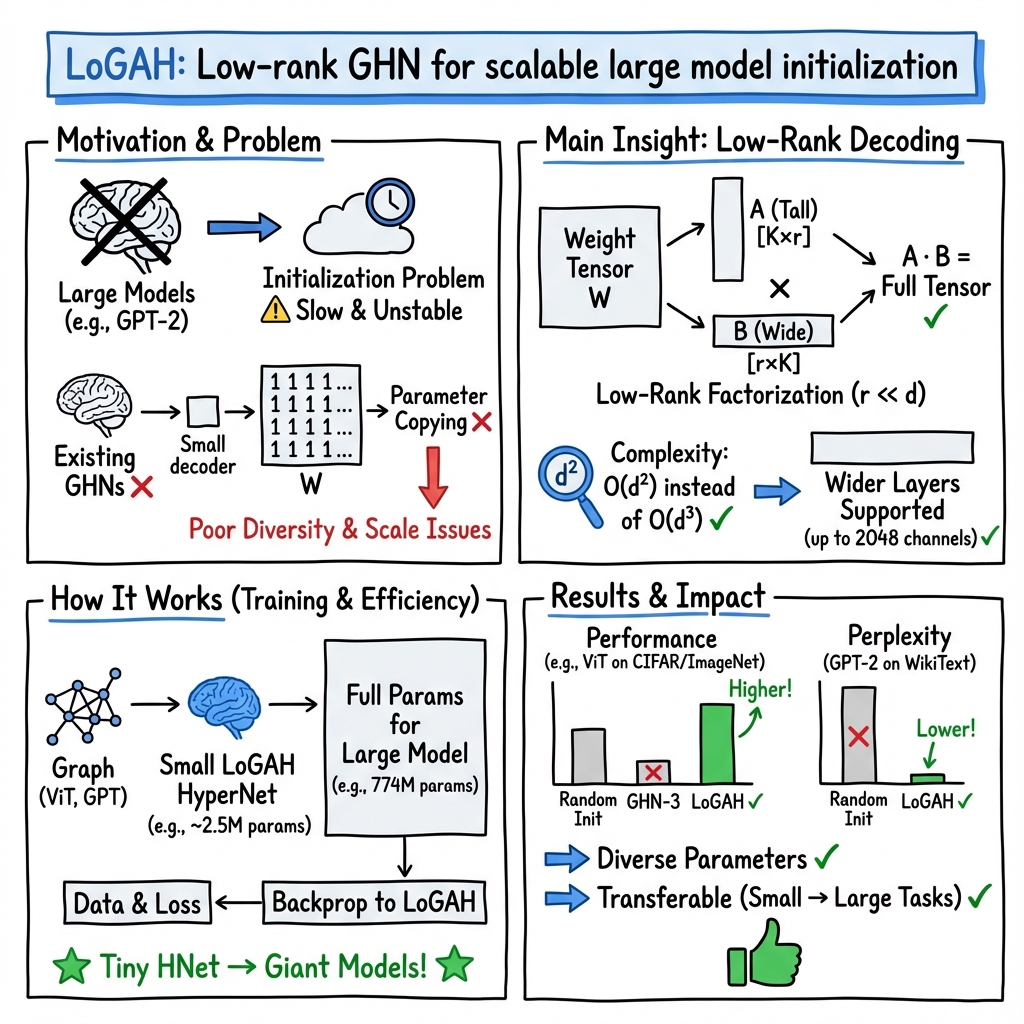

Abstract: A good initialization of deep learning models is essential since it can help them converge better and faster. However, pretraining large models is unaffordable for many researchers, which makes a desired prediction for initial parameters more necessary nowadays. Graph HyperNetworks (GHNs), one approach to predicting model parameters, have recently shown strong performance in initializing large vision models. Unfortunately, predicting parameters of very wide networks relies on copying small chunks of parameters multiple times and requires an extremely large number of parameters to support full prediction, which greatly hinders its adoption in practice. To address this limitation, we propose LoGAH (Low-rank GrAph Hypernetworks), a GHN with a low-rank parameter decoder that expands to significantly wider networks without requiring as excessive increase of parameters as in previous attempts. LoGAH allows us to predict the parameters of 774-million large neural networks in a memory-efficient manner. We show that vision and LLMs (i.e., ViT and GPT-2) initialized with LoGAH achieve better performance than those initialized randomly or using existing hypernetworks. Furthermore, we show promising transfer learning results w.r.t. training LoGAH on small datasets and using the predicted parameters to initialize for larger tasks. We provide the codes in https://github.com/Blackzxy/LoGAH .

- Masked autoencoders are scalable vision learners, 2021.

- BERT: Pre-training of deep bidirectional transformers for language understanding. In Jill Burstein, Christy Doran, and Thamar Solorio, editors, Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), pages 4171–4186, Minneapolis, Minnesota, June 2019. Association for Computational Linguistics. doi: 10.18653/v1/N19-1423. URL https://aclanthology.org/N19-1423.

- Improving language understanding by generative pre-training. 2018.

- Llama 2: Open foundation and fine-tuned chat models, 2023.

- AI@Meta. Llama 3 model card. 2024. URL https://github.com/meta-llama/llama3/blob/main/MODEL_CARD.md.

- An image is worth 16x16 words: Transformers for image recognition at scale, 2021.

- Scaling vision transformers to 22 billion parameters. In International Conference on Machine Learning, pages 7480–7512. PMLR, 2023.

- The computational limits of deep learning, 2022.

- Scaling vision transformers, 2022.

- Attention is all you need, 2023.

- Neocognitron: A neural network model for a mechanism of visual pattern recognition. IEEE transactions on systems, man, and cybernetics, (5):826–834, 1983.

- Imagenet large scale visual recognition challenge. International journal of computer vision, 115:211–252, 2015.

- The pile: An 800gb dataset of diverse text for language modeling. arXiv preprint arXiv:2101.00027, 2020.

- Graph hypernetworks for neural architecture search. arXiv preprint arXiv:1810.05749, 2018.

- Parameter prediction for unseen deep architectures, 2021.

- Can we scale transformers to predict parameters of diverse imagenet models?, 2023.

- Graph hypernetworks for neural architecture search, 2020.

- Language models are unsupervised multitask learners. OpenAI blog, 1(8):9, 2019.

- Do transformers really perform bad for graph representation?, 2021.

- Lora: Low-rank adaptation of large language models. arXiv preprint arXiv:2106.09685, 2021.

- Learning multiple layers of features from tiny images. 2009.

- Pointer sentinel mixture models, 2016.

- Exact solutions to the nonlinear dynamics of learning in deep linear neural networks, 2014.

- Openwebtext corpus. http://Skylion007.github.io/OpenWebTextCorpus, 2019.

- Huggingface’s transformers: State-of-the-art natural language processing. arXiv preprint arXiv:1910.03771, 2019.

- Zero: Memory optimizations toward training trillion parameter models, 2020.

- Delving deep into rectifiers: Surpassing human-level performance on imagenet classification, 2015.

- A survey of knowledge-intensive nlp with pre-trained language models, 2022.

- Threats to pre-trained language models: Survey and taxonomy, 2022.

- Language models are few-shot learners, 2020.

- End-to-end object detection with transformers, 2020.

- Generative pretraining from pixels. In Hal Daumé III and Aarti Singh, editors, Proceedings of the 37th International Conference on Machine Learning, volume 119 of Proceedings of Machine Learning Research, pages 1691–1703. PMLR, 13–18 Jul 2020. URL https://proceedings.mlr.press/v119/chen20s.html.

- Hypernetworks, 2016.

- Hyperseg: Patch-wise hypernetwork for real-time semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pages 4061–4070, June 2021.

- Fast and flexible multi-task classification using conditional neural adaptive processes, 2020.

- Task-adaptive neural process for user cold-start recommendation. In Proceedings of the Web Conference 2021, WWW ’21, page 1306–1316, New York, NY, USA, 2021. Association for Computing Machinery. ISBN 9781450383127. doi: 10.1145/3442381.3449908. URL https://doi.org/10.1145/3442381.3449908.

- Hypertransformer: Model generation for supervised and semi-supervised few-shot learning, 2022.

- General-purpose in-context learning by meta-learning transformers, 2024.

- Generating interpretable networks using hypernetworks. arXiv preprint arXiv:2312.03051, 2023.

- Metainit: Initializing learning by learning to initialize. Advances in Neural Information Processing Systems, 32, 2019.

- Towards theoretically inspired neural initialization optimization. Advances in Neural Information Processing Systems, 35:18983–18995, 2022.

- Gradmax: Growing neural networks using gradient information. arXiv preprint arXiv:2201.05125, 2022.

- Learning to grow pretrained models for efficient transformer training. arXiv preprint arXiv:2303.00980, 2023.

- Mamba: Linear-time sequence modeling with selective state spaces. arXiv preprint arXiv:2312.00752, 2023.

- Kunihiko Fukushima. Cognitron: A self-organizing multilayered neural network. Biological cybernetics, 20(3-4):121–136, 1975.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Collections

Sign up for free to add this paper to one or more collections.