Deep Extrinsic Manifold Representation for Vision Tasks

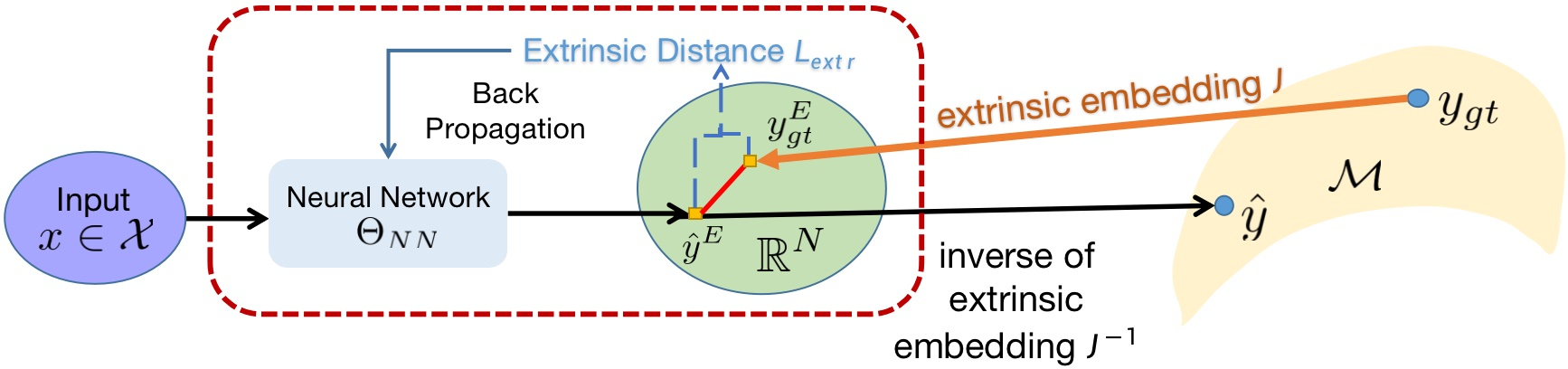

Abstract: Non-Euclidean data is frequently encountered across many fields, yet little literature addresses the fundamental challenge of training neural networks whose outputs are manifold representations. In this context, we introduce a technique named Deep Extrinsic Manifold Representation (DEMR) for visual tasks. DEMR incorporates an extrinsic manifold embedding into deep neural networks so that they generate manifold representations. Rather than directly optimizing the complex geodesic loss, DEMR optimizes the computation graph within the embedded Euclidean space, which makes it adaptable to various architectural requirements. We support the proposed concept with analysis on two types of manifolds, $SE(3)$ and its associated quotient manifolds, providing theoretical assurances regarding feasibility, asymptotic properties, and generalization capability. The experimental results show that DEMR adapts effectively to point cloud alignment, producing outputs in $SE(3)$, and to illumination subspace learning, producing outputs on the Grassmann manifold.
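The core extrinsic idea (regress in the ambient Euclidean space, then map the raw output back onto the manifold) can be illustrated with a short NumPy sketch. It assumes $SE(3)$ is embedded as the top $3 \times 4$ block of a homogeneous matrix, flattened to $\mathbb{R}^{12}$, and the Grassmann manifold $Gr(k, n)$ as the set of rank-$k$ orthogonal projectors $UU^\top$. The projections used here (SVD for the rotation block, top-$k$ eigenvectors for the Grassmannian) are standard nearest-point maps in the Frobenius norm; the loss is assumed to be a plain squared Euclidean distance on the raw output. All function names are hypothetical and not taken from any DEMR reference code.

```python
import numpy as np

# Hypothetical helper names: a sketch of extrinsic embedding/projection,
# not the DEMR reference implementation.


def project_to_so3(m: np.ndarray) -> np.ndarray:
    """Nearest rotation (Frobenius norm) to a 3x3 matrix, via SVD."""
    u, _, vt = np.linalg.svd(m)
    # Flip the last singular direction if needed so that det(R) = +1.
    d = 1.0 if np.linalg.det(u @ vt) >= 0 else -1.0
    return u @ np.diag([1.0, 1.0, d]) @ vt


def embed_se3(t: np.ndarray) -> np.ndarray:
    """Embed a 4x4 rigid transform as a flat vector in R^12 (top 3 rows)."""
    return t[:3, :].reshape(-1)


def project_to_se3(x: np.ndarray) -> np.ndarray:
    """Map a raw 12-dim network output to a valid 4x4 rigid transform."""
    a = np.asarray(x, dtype=float).reshape(3, 4)
    t = np.eye(4)
    t[:3, :3] = project_to_so3(a[:, :3])
    t[:3, 3] = a[:, 3]  # translation already lives in R^3; kept unchanged
    return t


def embed_grassmann(basis: np.ndarray) -> np.ndarray:
    """Embed span(basis) as the orthogonal projector Q Q^T."""
    q, _ = np.linalg.qr(basis)  # orthonormalize the n x k basis
    return q @ q.T


def project_to_grassmann(a: np.ndarray, k: int) -> np.ndarray:
    """Nearest rank-k orthogonal projector to a square matrix."""
    sym = 0.5 * (a + a.T)  # only the symmetric part affects the distance
    _, v = np.linalg.eigh(sym)  # eigenvalues come back in ascending order
    top = v[:, -k:]  # eigenvectors of the k largest eigenvalues
    return top @ top.T


def extrinsic_loss(pred: np.ndarray, target_embedded: np.ndarray) -> float:
    """Squared Euclidean loss computed in the ambient space."""
    diff = np.ravel(pred) - np.ravel(target_embedded)
    return float(np.sum(diff ** 2))


if __name__ == "__main__":
    rng = np.random.default_rng(0)

    # SE(3): pretend a network produced a noisy 12-dim output.
    gt = np.eye(4)
    gt[:3, 3] = [0.1, -0.2, 0.3]
    raw = embed_se3(gt) + 0.05 * rng.standard_normal(12)
    print("SE(3) ambient loss:", extrinsic_loss(raw, embed_se3(gt)))
    r = project_to_se3(raw)[:3, :3]
    print("orthonormal:", np.allclose(r.T @ r, np.eye(3)),
          "det:", round(float(np.linalg.det(r)), 6))

    # Gr(2, 5): noisy prediction of a 2-dim subspace of R^5.
    p_gt = embed_grassmann(rng.standard_normal((5, 2)))
    raw_p = p_gt + 0.05 * rng.standard_normal((5, 5))
    p = project_to_grassmann(raw_p, k=2)
    print("idempotent:", np.allclose(p @ p, p),
          "trace:", round(float(np.trace(p)), 6))
```

In this sketch the training signal is an ordinary squared Euclidean distance, so any backbone can be optimized with standard gradient descent; the non-linear projection is only needed to turn the raw ambient output into a valid element of $SE(3)$ or $Gr(k, n)$.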

- Discrete geodesic regression in shape space. In International Workshop on Energy Minimization Methods in Computer Vision and Pattern Recognition, pp. 108–122. Springer, 2013.

- Learning implicit brain mri manifolds with deep learning. In Medical Imaging 2018: Image Processing, volume 10574, pp. 105741L. International Society for Optics and Photonics, 2018.

- Large sample theory of intrinsic and extrinsic sample means on manifolds. The Annals of Statistics, 31(1):1–29, 2003.

- Extrinsic analysis on manifolds is computationally faster than intrinsic analysis with applications to quality control by machine vision. Applied Stochastic Models in Business and Industry, 28(3):222–235, 2012.

- Boumal, N. An introduction to optimization on smooth manifolds. Available online, May, 3, 2020.

- Continuous-discrete extended kalman filter on matrix lie groups using concentrated gaussian distributions. Journal of Mathematical Imaging and Vision, 51:209–228, 2015.

- Geometric deep learning: going beyond euclidean data. IEEE Signal Processing Magazine, 34(4):18–42, 2017.

- Geometric deep learning: Grids, groups, graphs, geodesics, and gauges. arXiv preprint arXiv:2104.13478, 2021.

- Se3-nets: Learning rigid body motion using deep neural networks. In 2017 IEEE International Conference on Robotics and Automation (ICRA), pp. 173–180. IEEE, 2017.

- Deeper in data science: Geometric deep learning. PROCEEDINGS BOOKS, pp. 21, 2021.

- A comprehensive survey on geometric deep learning. IEEE Access, 8:35929–35949, 2020.

- Projective manifold gradient layer for deep rotation regression. arXiv preprint arXiv:2110.11657, 2021.

- Regression models on riemannian symmetric spaces. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 79(2):463–482, 2017.

- Intrinsic and extrinsic deep learning on manifolds. arXiv preprint arXiv:2302.08606, 2023.

- Fletcher, P. T. Geodesic regression and the theory of least squares on riemannian manifolds. International journal of computer vision, 105(2):171–185, 2013.

- Fletcher, T. Geodesic regression on riemannian manifolds. In Proceedings of the Third International Workshop on Mathematical Foundations of Computational Anatomy-Geometrical and Statistical Methods for Modelling Biological Shape Variability, pp. 75–86, 2011.

- From few to many: Illumination cone models for face recognition under variable lighting and pose. IEEE Trans. Pattern Anal. Mach. Intelligence, 23(6):643–660, 2001.

- Polynomial regression on riemannian manifolds. In European conference on computer vision, pp. 1–14. Springer, 2012.

- Detecting brain state changes by geometric deep learning of functional dynamics on riemannian manifold. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 543–552. Springer, 2021.

- Disconnected manifold learning for generative adversarial networks. Advances in Neural Information Processing Systems, 31, 2018.

- Lee, H. Robust extrinsic regression analysis for manifold valued data. arXiv preprint arXiv:2101.11872, 2021.

- An analysis of svd for deep rotation estimation. arXiv preprint arXiv:2006.14616, 2020.

- Extrinsic local regression on manifold-valued data. Journal of the American Statistical Association, 112(519):1261–1273, 2017.

- Learning invariant riemannian geometric representations using deep nets. In Proceedings of the IEEE International Conference on Computer Vision Workshops, pp. 1329–1338, 2017.

- Manifold learning benefits gans. arXiv preprint arXiv:2112.12618, 2021.

- Pointnet: Deep learning on point sets for 3d classification and segmentation. In Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 652–660, 2017.

- Understanding the limitations of cnn-based absolute camera pose regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 3302–3312, 2019.

- Intrinsic regression models for manifold-valued data. In International Conference on Medical Image Computing and Computer-Assisted Intervention, pp. 192–199. Springer, 2009.

- Robust geodesic regression. International Journal of Computer Vision, pp. 1–26, 2022.

- Nonparametric regression between general riemannian manifolds. SIAM Journal on Imaging Sciences, 3(3):527–563, 2010.

- Nested grassmannians for dimensionality reduction with applications. Machine Learning for Biomedical Imaging, 1(IPMI 2021 special issue):1–10, 2022.

- Zhang, Y. Bayesian geodesic regression on riemannian manifolds. arXiv preprint arXiv:2009.05108, 2020.

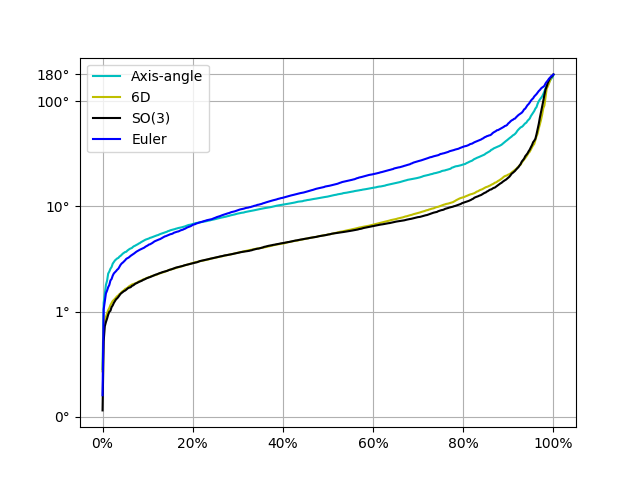

- On the continuity of rotation representations in neural networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 5745–5753, 2019.
