Gemma: Expanding the Horizon of Open Models with Gemini's Technological Insights

Overview of Gemma Models

Google DeepMind's Gemma models are a family of lightweight open models built on the research and technology behind the Gemini models. They combine current practice in architecture, training, and safety procedures, and are intended for a range of text-based applications. The design balances computational efficiency with responsible AI development, and the models are evaluated on language understanding, reasoning, and safety across diverse text-based tasks.

Model Architectures and Parameters

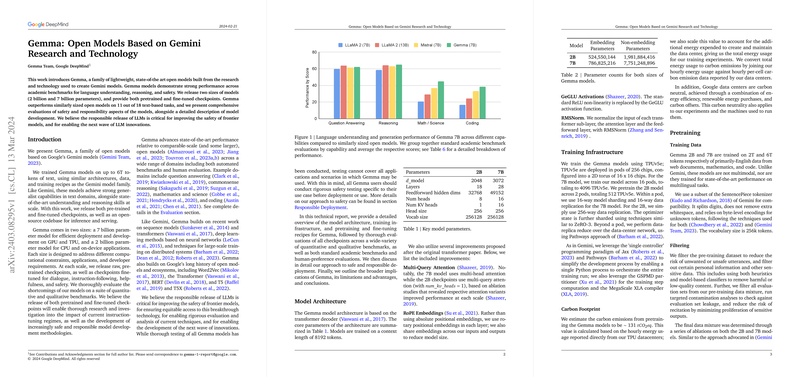

Gemma models use a transformer decoder architecture with several modifications aimed at performance and efficiency: rotary positional embeddings, GeGLU activation functions, and RMSNorm normalization. The attention variant depends on model size: the 2-billion-parameter model uses multi-query attention, while the 7-billion-parameter model uses multi-head attention. The 2-billion-parameter model targets CPU and on-device applications, and the larger 7-billion-parameter model is designed for GPU and TPU deployment. Both sizes share a vocabulary of 256k tokens.
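As a rough illustration of three of the components named above, the sketch below implements RMSNorm, the GeGLU gate, and a rotary positional embedding on plain Python lists. These are textbook formulations, not the released Gemma code. The function names, the `eps` and `base` defaults, the tanh approximation of GELU, and the adjacent-pair rotation layout are all assumptions made for this example, and the actual implementation may differ in such details.

```python
import math


def rms_norm(x, weight, eps=1e-6):
    """Divide x by its root-mean-square, then apply a per-dimension weight."""
    mean_sq = sum(v * v for v in x) / len(x)
    inv_rms = 1.0 / math.sqrt(mean_sq + eps)
    return [v * inv_rms * w for v, w in zip(x, weight)]


def gelu(v):
    """Tanh approximation of the GELU activation (an assumed variant)."""
    inner = math.sqrt(2.0 / math.pi) * (v + 0.044715 * v ** 3)
    return 0.5 * v * (1.0 + math.tanh(inner))


def geglu(gate, up):
    """GeGLU gating: GELU(gate) multiplied elementwise with a linear branch.

    In a full feed-forward layer, `gate` and `up` would be two separate
    linear projections of the same input; here they are passed in directly.
    """
    return [gelu(g) * u for g, u in zip(gate, up)]


def rotary_embed(x, position, base=10000.0):
    """Rotate each pair (x[i], x[i+1]) by an angle proportional to position.

    Lower-index pairs rotate quickly and higher-index pairs rotate slowly,
    so the dot product of two rotated vectors depends only on the
    difference between their positions.
    """
    d = len(x)  # must be even
    out = list(x)
    for i in range(0, d, 2):
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out[i] = x[i] * c - x[i + 1] * s
        out[i + 1] = x[i] * s + x[i + 1] * c
    return out
```

A quick way to see why rotary embeddings encode relative position: rotating a query at position 3 and a key at position 1 yields the same dot product as rotating them at positions 5 and 3, because only the offset of 2 enters the result.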

Training Procedures and Data Utilization

Gemma is trained on Google's TPU hardware. The models are pretrained on large corpora of web documents, mathematical content, and code, then fine-tuned with supervised learning and reinforcement learning from human feedback. This staged training is intended to align model outputs closely with human preferences, improving both the utility and the safety of the models.
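The text does not say how human preferences are turned into a training signal. One widely used formulation, assumed here purely for illustration, is a pairwise Bradley-Terry loss for a reward model: given scalar rewards for a preferred and a rejected response, the loss is the negative log-probability that the preferred one wins. The function name and its arguments are hypothetical.

```python
import math


def preference_loss(reward_chosen, reward_rejected):
    """Negative log-likelihood that the chosen response beats the rejected one.

    Under a Bradley-Terry model, P(chosen wins) = sigmoid(margin), where
    margin = reward_chosen - reward_rejected. The loss -log(sigmoid(margin))
    equals softplus(-margin), computed in a form that avoids overflow.
    """
    margin = reward_chosen - reward_rejected
    if margin > 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))
```

The loss shrinks as the reward model scores the preferred response further above the rejected one, and grows roughly linearly when the ranking is inverted.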

Evaluations and Benchmarks

Evaluations across a range of benchmark tasks show Gemma performing strongly against open models of similar size, and in some cases against larger ones. The benchmarks cover domains such as question answering, commonsense reasoning, and coding. Gemma's results are particularly strong on mathematics and coding benchmarks, indicating capability in both generalist tasks and specialized domains.

Safety Measures and Responsible Deployment

Google DeepMind has carried out safety assessments and applied several layers of mitigation to address risks associated with Gemma's deployment. These include extensive filtering of training data, adherence to structured development protocols, and a commitment to ongoing evaluation and refinement to guard against both unintentional and malicious misuse of the technology.

Future Directions and Conclusion

Gemma is a notable addition to the landscape of open AI models, combining methodological advances with a commitment to ethical AI principles. By releasing both pretrained and fine-tuned models, the Gemma project invites exploration and development within the research community, with the aim of supporting further progress in AI capabilities. While acknowledging the models' limitations and the need for further research, the release is presented as a deliberate step toward broadening access to AI research and enabling new AI applications.