Gemma 2: Enhancing Small Open LLMs with Advanced Techniques

The paper "Gemma 2: Improving Open LLMs at a Practical Size" by the Gemma Team at Google DeepMind details the advancements made in the development of the Gemma 2 family of LLMs. These models range in scale from 2 billion to 27 billion parameters, aspiring to deliver high performance while maintaining a practical size. The primary focus of this work is the application of several advanced techniques to improve model architecture and training, substantially augmenting the performance of smaller models without proportionally increasing their size.

Model Architecture and Training Innovations

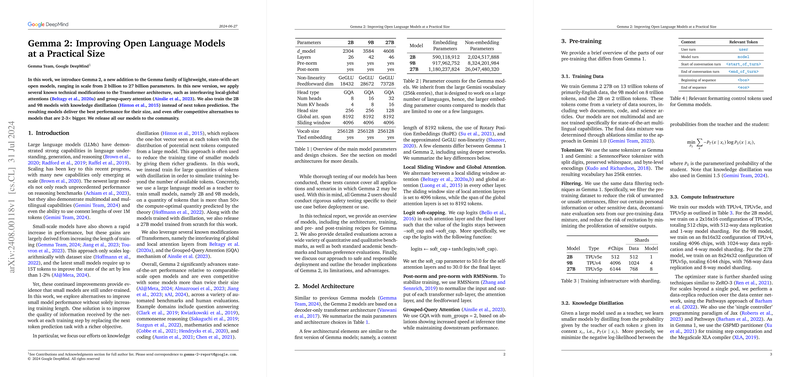

The Gemma 2 models build on the transformer architecture and incorporate enhancements such as interleaving local-global attentions and group-query attention (GQA). These modifications are crucial for balancing computational efficiency and model performance.

Key Innovations:

- Interleaving Local-Global Attentions: This technique alternates between local sliding window attention and global attention, facilitating both detailed local interactions and broader context awareness. This is achieved with a sliding window size of 4096 tokens and a global attention span of 8192 tokens.

- Grouped-Query Attention (GQA): GQA improves inference speed and maintains downstream performance by reducing the number of active parameters during attention computation.

- Knowledge Distillation: Instead of conventional next-token prediction, the smaller 2B and 9B models are trained using knowledge distillation. Here, a larger teacher model’s probability distribution is used to provide richer training signals, simulating an extended training regime.

- Training Data Efficiency: The 2B, 9B, and 27B models are trained on 2 trillion, 8 trillion, and 13 trillion tokens, respectively. This dataset includes various sources like web documents, code, and science articles, ensuring a broad and robust training corpus.

Architecture-Specific Enhancements

The paper discusses additional architectural decisions that contribute to the superior performance of Gemma 2 models:

- Logit Soft-Capping: Applied to limit the outputs of each attention layer, preventing extreme values which could destabilize training.

- RMSNorm for Normalization: RMSNorm is used for stabilizing the training process by normalizing the inputs and outputs of each transformer sub-layer.

- Deeper Networks: For models like the 9B and 27B, deeper architectures were found to marginally outperform wider networks, justifying the switch to increased depth within parameter constraints.

Performance Evaluation

The Gemma 2 models undergo rigorous evaluation across a plethora of benchmarks:

- Automated Benchmarks: Results on benchmarks like MMLU, GSM8K, ARC-c, HellaSwag, and others demonstrate that Gemma 2 models significantly outperform previous iterations and are competitive with larger models. The 27B model, for instance, achieves competitive performance metrics against the larger LLaMA-3 70B model while being considerably smaller.

- Human Evaluations: The instruction-tuned Gemma 2 models also show marked improvements in human preference evaluations and safety assessments. These models exhibit low violation rates across several safety metrics and maintain robust performance under adversarial conditions.

Ablations and Insights

The paper provides insightful ablations examining the impact of various architectural and training choices:

- Knowledge Distillation vs. Training from Scratch: Distilled models significantly outperform those trained from scratch, even when trained on an equivalent amount of data.

- Scaling Effects: Distillation continues to benefit larger models, indicating the scalable advantages of the technique.

- Attention Mechanisms: Switching to GQA from traditional multi-head attention provides inference speed benefits with minimal performance trade-offs.

Implications and Future Directions

The findings from Gemma 2 have broad implications for the development of efficient LLMs:

- Practical Scaling: Enhancements like interleaved attention mechanisms and knowledge distillation allow smaller models to achieve performance levels previously reserved for much larger models, democratizing access to advanced language understanding capabilities.

- Efficiency in Training and Inference: The adoption of techniques like GQA and logit soft-capping ensure that models remain computationally feasible during both training and deployment, making them accessible for a wider range of applications and environments.

The successful implementation of these techniques in Gemma 2 opens up avenues for further research into efficient and scalable model training. Future endeavors could involve the exploration of more advanced training signals, adaptive learning mechanisms, and further optimizations in attention mechanisms to push the envelope of performance versus practicality in LLM development.

In conclusion, Gemma 2 represents a significant step forward in the quest for high-performance yet practical LLMs. The advancements presented not only enhance the current state of small-scale models but also set the stage for future innovations in the field.