Accelerating Multimodal Foundation Models: A Deep Dive into LLaVA-Gemma

Introduction

The LLaVA-Gemma suite is a set of compact multimodal foundation models (MMFMs) built on the Gemma family of LLMs. Trained within the established LLaVA framework, it yields small vision-language models (VLMs) that aim to be both efficient and effective. Unlike earlier LLaVA iterations, the suite is built on Gemma-2B and Gemma-7B, which makes it possible to study the trade-off between computational cost and the depth of visual and linguistic understanding. The work also takes advantage of the Gemma models' large token set to investigate how it affects multimodal performance.

Methods

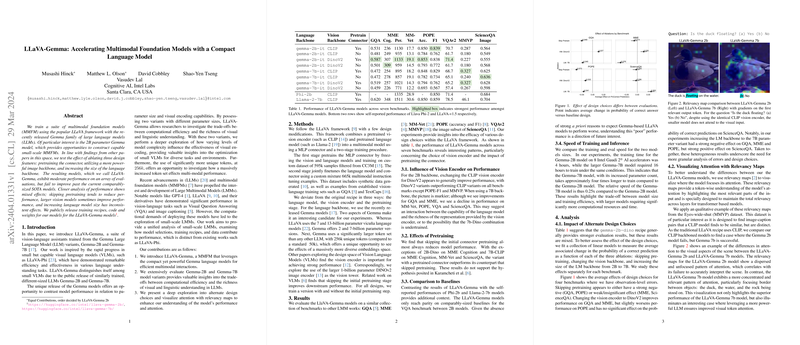

The methodology centers on three design choices within the LLaVA framework: the LLM, the vision encoder, and the initial pretraining phase. The two Gemma models differ in parameter count and come with a large token set, which allows the effect of model scale to be analyzed. The paper also compares two vision encoders (CLIP and DINOv2) and tests whether pretraining the connector is necessary. Together, these ablations give a more detailed picture of how MMFMs can be optimized.
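The three design choices can be pictured as a small ablation grid. The sketch below is only an illustration: the `AblationConfig` class, the run-name format, and the idea of enumerating every combination are assumptions made here, not the paper's actual configuration code, and the paper does not necessarily report every cell of the grid.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class AblationConfig:
    """One hypothetical run: which LLM, which vision encoder, pretrain or not."""
    llm: str
    vision_encoder: str
    pretrain_connector: bool

    def name(self) -> str:
        # Hypothetical naming scheme, used only to label runs in this sketch.
        stage = "pt" if self.pretrain_connector else "nopt"
        return f"llava-{self.llm}-{self.vision_encoder}-{stage}"


# The options named in the text above.
LLMS = ("gemma-2b", "gemma-7b")
VISION_ENCODERS = ("clip", "dinov2")
PRETRAIN_OPTIONS = (True, False)


def build_grid() -> list:
    """Enumerate every combination of the three design choices."""
    return [
        AblationConfig(llm, enc, pt)
        for llm, enc, pt in product(LLMS, VISION_ENCODERS, PRETRAIN_OPTIONS)
    ]


if __name__ == "__main__":
    for cfg in build_grid():
        print(cfg.name())
```

Two LLMs, two encoders, and two pretraining settings give eight possible configurations, which is why even a small study of this kind needs a careful comparison across runs.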

Results

The benchmark results for LLaVA-Gemma raise several points. First, the effect of the vision encoder on performance suggests an interaction between LLM capability and vision encoder complexity. Second, the connector pretraining phase proves to be important, which challenges earlier claims in the literature that skipping this stage could improve performance. Finally, comparisons with baseline models show areas where LLaVA-Gemma does not outperform existing state-of-the-art (SOTA) models, a result that calls for further study of model configurations and training methods.
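To make the connector pretraining question concrete, the sketch below assumes the usual two-stage LLaVA recipe: a first stage that updates only the connector while the vision encoder and LLM stay frozen, followed by a second stage that updates the connector and the LLM. The stage names and the `training_schedule` helper are hypothetical and are not taken from the paper's code.

```python
from dataclasses import dataclass

# The three parts of a LLaVA-style model.
COMPONENTS = ("vision_encoder", "connector", "llm")


@dataclass(frozen=True)
class Stage:
    name: str
    trainable: frozenset  # components whose weights are updated in this stage


# Assumed stages of the standard LLaVA recipe (names are illustrative).
PRETRAIN = Stage("connector_pretraining", frozenset({"connector"}))
FINETUNE = Stage("instruction_finetuning", frozenset({"connector", "llm"}))


def training_schedule(pretrain_connector: bool) -> list:
    """Return the ordered stages, with or without the pretraining stage."""
    stages = [FINETUNE]
    if pretrain_connector:
        stages.insert(0, PRETRAIN)
    return stages


def frozen_components(stage: Stage) -> list:
    """List the components that are kept fixed during a stage."""
    return [c for c in COMPONENTS if c not in stage.trainable]


if __name__ == "__main__":
    for flag in (True, False):
        names = [s.name for s in training_schedule(flag)]
        print(f"pretrain_connector={flag}: {names}")
```

Under this assumption, skipping pretraining means the connector enters fine-tuning from its initial weights, which is the setting the results above compare against.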

Analysis

The paper goes beyond headline performance metrics, examining the effects of alternative design choices and using relevancy maps to visualize model attention. The analysis shows that a given design change can help on some evaluations and hurt on others, which illustrates how difficult MMFM optimization is. The relevancy maps are especially useful: they show where each model focuses in the image and so reveal how model variations differ in their handling of visual cues.
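As an illustration of what a relevancy map is as a data structure, the sketch below takes one score per image patch and arranges the scores into a normalized 2D grid. It does not compute relevancy itself, and it is not the paper's method; the function names, the min-max normalization, and the example scores are assumptions chosen for clarity.

```python
def to_heatmap(scores, grid_h, grid_w):
    """Reshape flat per-patch scores into a grid_h x grid_w map scaled to [0, 1]."""
    if len(scores) != grid_h * grid_w:
        raise ValueError("expected one score per patch")
    lo, hi = min(scores), max(scores)
    span = hi - lo
    # Min-max normalization; a constant map becomes all zeros.
    norm = [(s - lo) / span if span > 0 else 0.0 for s in scores]
    return [norm[r * grid_w:(r + 1) * grid_w] for r in range(grid_h)]


def top_patch(heatmap):
    """Return (row, col) of the first patch with the highest value."""
    best_pos, best_val = (0, 0), heatmap[0][0]
    for r, row in enumerate(heatmap):
        for c, value in enumerate(row):
            if value > best_val:
                best_pos, best_val = (r, c), value
    return best_pos


if __name__ == "__main__":
    # Made-up scores for a 2 x 2 patch grid, in row-major order.
    example = [1.0, 3.0, 2.0, 5.0]
    heatmap = to_heatmap(example, 2, 2)
    print(heatmap)             # [[0.0, 0.5], [0.25, 1.0]]
    print(top_patch(heatmap))  # (1, 1)
```

Overlaying such a grid on the input image is what lets a reader compare the focal points of two model variants side by side.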

Discussion

LLaVA-Gemma advances our understanding of small-scale MMFMs. By analyzing both parameter count and token set size, it highlights the balance between model size and comprehension ability. The paper argues for a careful approach to MMFM training and optimization, one that examines each design choice and its varied effects on performance. In doing so, LLaVA-Gemma contributes to the academic discussion of MMFM efficiency and effectiveness and gives practitioners concrete insights to build on.

In summary, LLaVA-Gemma's study of small-scale VLMs within the MMFM paradigm is a useful resource for both theoretical inquiry and practical application. Its examination of design choices and their varied effects across benchmarks, together with its use of relevancy maps, points to directions for future research and model improvements. As the field evolves, these insights are likely to inform the development of more capable and efficient multimodal models.