Introduction to Gemini Models

Gemini is a family of multimodal models developed at Google that can understand and process images, audio, video, and text. The models perform well on complex reasoning tasks, which makes them suitable for a wide range of applications. Gemini comes in three sizes, Ultra, Pro, and Nano, each optimized for a different use case, from sophisticated reasoning at the large end to compact deployment at the small end.

Benchmark Performance

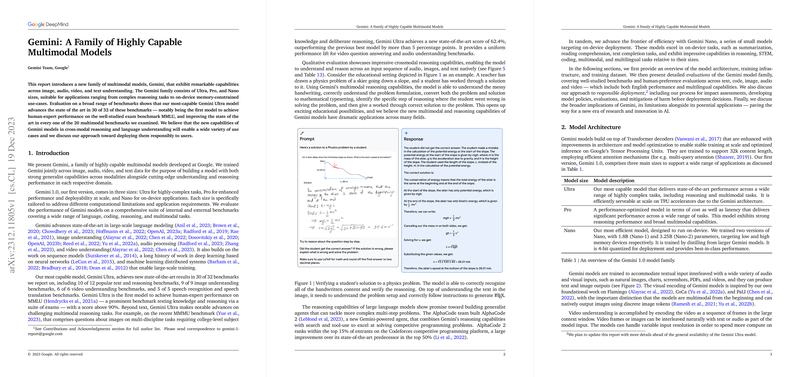

The Gemini model family has been evaluated on a broad set of benchmarks. Gemini Ultra, the most capable variant, advances the state of the art on 30 of 32 benchmarks spanning text and reasoning, image understanding, video understanding, and speech recognition and translation. Notably, Gemini Ultra is the first model to reach human-expert performance on the MMLU benchmark, and it also substantially improves results on challenging multimodal reasoning tasks such as the MMMU benchmark.

Model Architecture and Training

Gemini models are built on Transformer decoders. They support long context lengths and use efficient attention mechanisms such as multi-query attention, in which all query heads share a single set of keys and values. The models are trained to handle text interleaved with audio and visual inputs, including images, charts, videos, and audio signals. Training runs on Google's latest Tensor Processing Units (TPUs), which allows large-scale training across multiple data centers.
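
To make the multi-query attention idea concrete, here is a minimal NumPy sketch of a single causal self-attention layer in which several query heads share one key projection and one value projection. It illustrates the general technique only and is not Gemini's implementation, which the text above does not describe; the function name, weight names, and all dimensions are hypothetical choices for the example.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row maximum for numerical stability before exponentiating.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def multi_query_attention(x, w_q, w_k, w_v, w_o, num_heads):
    """Causal multi-query self-attention for one sequence (illustrative only).

    x:   (seq_len, d_model) input activations
    w_q: (d_model, num_heads * d_head) one query projection per head
    w_k: (d_model, d_head) single key projection shared by all heads
    w_v: (d_model, d_head) single value projection shared by all heads
    w_o: (num_heads * d_head, d_model) output projection
    """
    seq_len = x.shape[0]
    d_head = w_k.shape[1]

    q = (x @ w_q).reshape(seq_len, num_heads, d_head)  # (T, H, D)
    k = x @ w_k                                        # (T, D), shared
    v = x @ w_v                                        # (T, D), shared

    # Every head scores its own queries against the same keys.
    scores = np.einsum("thd,sd->hts", q, k) / np.sqrt(d_head)  # (H, T, T)

    # Decoder-style causal mask: position t may not attend to positions s > t.
    future = np.triu(np.ones((seq_len, seq_len), dtype=bool), k=1)
    scores = np.where(future, -np.inf, scores)

    weights = softmax(scores, axis=-1)                 # (H, T, T)
    out = np.einsum("hts,sd->thd", weights, v)         # (T, H, D)
    return out.reshape(seq_len, num_heads * d_head) @ w_o


# Hypothetical toy sizes, chosen only so the example runs quickly.
rng = np.random.default_rng(0)
seq_len, d_model, num_heads, d_head = 5, 16, 4, 8
x = rng.normal(size=(seq_len, d_model))
w_q = rng.normal(size=(d_model, num_heads * d_head))
w_k = rng.normal(size=(d_model, d_head))
w_v = rng.normal(size=(d_model, d_head))
w_o = rng.normal(size=(num_heads * d_head, d_model))

y = multi_query_attention(x, w_q, w_k, w_v, w_o, num_heads)
print(y.shape)  # (5, 16)
```

Because the keys and values are shared, a decoder that caches them during generation stores one `(seq_len, d_head)` array for each instead of one per head, which is the main reason the technique is considered efficient for long contexts.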

Potential Applications and Responsible Deployment

These models have strong implications for cross-modal reasoning and understanding, with potential impact on educational tools, interactive systems, and creative work. Deploying them requires strict adherence to responsible AI practices. To that end, procedures have been put in place that include comprehensive impact assessments, model policies, careful evaluations, and targeted mitigations against potential harms. The discussion also highlights the balance between making the models more helpful and keeping them safe, particularly with respect to factuality and adherence to content policies.

The introduction of the Gemini models marks a notable milestone in AI, with the potential to catalyze future research and innovation. Although their capabilities are strong, the models still hallucinate and still struggle with some complex reasoning tasks, which underlines the need for continued progress in the field.