Efficient and Transparent Small LLMs: Introducing MobiLlama

Context and Motivation

The field of natural language processing (NLP) has advanced rapidly with the development of large language models (LLMs), which are characterized by very large parameter counts and strong performance on complex language tasks. Despite these capabilities, deploying LLMs is hindered by their substantial computational and memory requirements. This makes them impractical where resources are limited, as in on-device processing, and in settings with stringent privacy, security, or energy-efficiency constraints. To address these concerns, this paper introduces MobiLlama, a fully transparent, efficient, open-source small language model (SLM) with 0.5 billion parameters, designed specifically for resource-constrained environments.

Related Work

Historically, the field has built ever larger models to improve performance on NLP tasks. Although effective, this trend carries high computational costs and limits model transparency. Recent work on SLMs has begun to explore how far models can be downsized without significantly sacrificing capability, with a focus on efficiency and on deployment to less capable hardware. However, few SLMs are fully open source, which limits broader research and their application in diverse environments.

Proposed Methodology

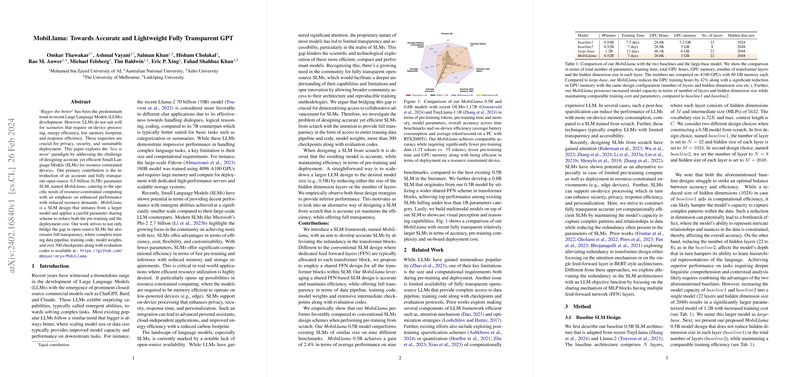

To reduce redundancy and computational demand without compromising performance, MobiLlama shares a Feed Forward Network (FFN) across its transformer blocks. This design substantially reduces the parameter count while retaining the model's effectiveness across a wide range of NLP tasks. The training data, architecture details, and comprehensive evaluation metrics are all released to ensure transparency and reproducibility, in line with the need for open research in this domain.
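The weight-sharing idea can be illustrated with a toy forward pass. The sketch below is not MobiLlama's implementation: it assumes a LLaMA-style gated (SwiGLU) FFN, replaces attention with a hypothetical per-block linear "mixer", and omits normalization layers. What it shows is the structural point: the FFN is constructed once and every block holds a reference to that same object, while each block keeps its own non-FFN weights.

```python
import math
import random


def linear(weight, x):
    # weight is a list of rows (out_dim x in_dim); x is a vector of length in_dim.
    return [sum(w * xi for w, xi in zip(row, x)) for row in weight]


def random_matrix(rng, rows, cols):
    scale = 1.0 / math.sqrt(cols)
    return [[rng.uniform(-scale, scale) for _ in range(cols)] for _ in range(rows)]


class SwiGLUFFN:
    """Assumed LLaMA-style gated FFN: down(silu(gate(x)) * up(x))."""

    def __init__(self, rng, d_model, d_ff):
        self.gate = random_matrix(rng, d_ff, d_model)
        self.up = random_matrix(rng, d_ff, d_model)
        self.down = random_matrix(rng, d_model, d_ff)

    def __call__(self, x):
        g = linear(self.gate, x)
        u = linear(self.up, x)
        h = [gi / (1.0 + math.exp(-gi)) * ui for gi, ui in zip(g, u)]  # SiLU(g) * u
        return linear(self.down, h)


class Block:
    def __init__(self, rng, d_model, ffn):
        # Hypothetical stand-in for attention: a per-block linear mix, NOT real attention.
        self.mix = random_matrix(rng, d_model, d_model)
        self.ffn = ffn  # a reference to the shared FFN, not a copy

    def __call__(self, x):
        x = [a + b for a, b in zip(x, linear(self.mix, x))]  # residual around the mixer
        return [a + b for a, b in zip(x, self.ffn(x))]       # residual around the shared FFN


def build_stack(n_layers, d_model, d_ff, seed=0):
    rng = random.Random(seed)
    shared_ffn = SwiGLUFFN(rng, d_model, d_ff)  # created exactly once...
    return [Block(rng, d_model, shared_ffn) for _ in range(n_layers)]  # ...reused by every block


def forward(blocks, x):
    for block in blocks:
        x = block(x)
    return x


blocks = build_stack(n_layers=4, d_model=8, d_ff=16)
out = forward(blocks, [0.1] * 8)
print(len(out), all(b.ffn is blocks[0].ffn for b in blocks))  # prints: 8 True
```

Because the FFN weights exist only once, adding blocks in this design adds only the per-block (here, mixer) weights; in a real framework the shared module would also receive gradient contributions from every block during training.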

Key Contributions

- Design Efficiency: MobiLlama shares FFN layers across transformer blocks, substantially reducing the parameter count while maintaining competitive performance across various benchmarks.

- Transparency and Accessibility: The entire training pipeline, including code, data, and checkpoints, is made available, fostering an open research environment.

- Benchmarking Performance: MobiLlama outperforms existing SLMs in its parameter class across nine distinct benchmarks, showcasing the effectiveness of the model in diverse NLP tasks.

Implementation Details

MobiLlama's architecture is configured to balance model depth against width, aiming for strong performance without an excessive increase in parameters or computational demand. The model is pre-trained on the Amber dataset, which draws on a broad range of text sources, to support a comprehensive representation of language.
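The effect of FFN sharing on the parameter budget can be checked with simple arithmetic. The sketch below assumes a LLaMA-style block (four attention projections, a three-matrix SwiGLU FFN, two RMSNorm weight vectors, no biases) and an illustrative configuration of 22 layers, hidden size 2048, FFN size 5632, and a 32,000-token vocabulary with untied input and output embeddings. These values are assumptions chosen for the example, not figures stated in this text.

```python
def param_count(n_layers, d_model, d_ff, vocab_size, share_ffn):
    """Rough LLaMA-style parameter count (assumed layout: no biases, untied embeddings)."""
    attn = 4 * d_model * d_model          # Q, K, V and output projections
    ffn = 3 * d_model * d_ff              # SwiGLU: gate, up and down projections
    norms = 2 * d_model                   # two RMSNorm weight vectors per block
    embed = 2 * vocab_size * d_model      # input embedding + untied LM head
    final_norm = d_model
    n_ffn = 1 if share_ffn else n_layers  # one FFN for the whole stack vs one per block
    return n_layers * (attn + norms) + n_ffn * ffn + embed + final_norm


# Assumed configuration, for illustration only.
cfg = dict(n_layers=22, d_model=2048, d_ff=5632, vocab_size=32000)

dedicated = param_count(**cfg, share_ffn=False)
shared = param_count(**cfg, share_ffn=True)
# Cost of making the shared-FFN model one layer deeper.
extra_layer = param_count(**{**cfg, "n_layers": 23}, share_ffn=True) - shared

print(f"dedicated FFNs: {dedicated:,}")    # 1,261,529,088
print(f"shared FFN:     {shared:,}")       # 534,865,920
print(f"one more layer: {extra_layer:,}")  # 16,781,312
```

Under these assumptions, sharing removes 21 of the 22 FFN copies, and each additional layer in the shared design costs only its attention and normalization weights. This is one way to see why depth becomes comparatively cheap once the FFN is shared.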

Evaluation and Results

Evaluations against existing models and baselines show that MobiLlama performs better, particularly on tasks requiring complex language comprehension and generation. The model is also efficient to deploy, with lower energy consumption and smaller memory requirements on resource-constrained devices than larger counterparts.
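A back-of-the-envelope calculation shows why parameter count dominates the memory picture on small devices. The sketch below counts only the bytes needed to store the weights at a given precision; it ignores activations, the KV cache, and runtime overhead. The 7B comparison point and the int8/int4 precisions are hypothetical choices for illustration and are not taken from this text.

```python
def weight_memory_gib(n_params, bits_per_param):
    """Approximate memory needed just to hold the weights, in GiB (2**30 bytes)."""
    return n_params * bits_per_param / 8 / 2**30


# Hypothetical comparison: a 0.5B-parameter SLM vs a 7B-parameter LLM.
for name, n_params in [("0.5B SLM", 0.5e9), ("7B LLM", 7e9)]:
    for label, bits in [("fp16", 16), ("int8", 8), ("int4", 4)]:
        print(f"{name:9s} {label}: {weight_memory_gib(n_params, bits):6.2f} GiB")
```

At 16-bit precision the 0.5B model's weights need a little under 1 GiB, while the 7B model needs roughly 14 times as much, before any runtime memory is counted.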

Future Directions

MobiLlama is a step toward more practical and deployable SLMs. Future work may further optimize the shared FFN design, extend the model to more diverse tasks, and continue to improve its understanding and generation capabilities. Addressing potential biases and improving the model's fairness and robustness are also vital areas for ongoing research.

Conclusion

MobiLlama demonstrates that efficient, effective, and fully transparent SLMs are feasible. By pursuing models that are computationally economical as well as accessible and open to extensive research, MobiLlama contributes to the democratization and advancement of NLP and invites further exploration of SLMs suited to a broader range of applications.

Acknowledgements

The development and evaluation of MobiLlama were facilitated by significant computational resources and collaborative efforts, highlighting the collective progress toward more sustainable and inclusive AI research.