Small LLMs: Survey, Measurements, and Insights

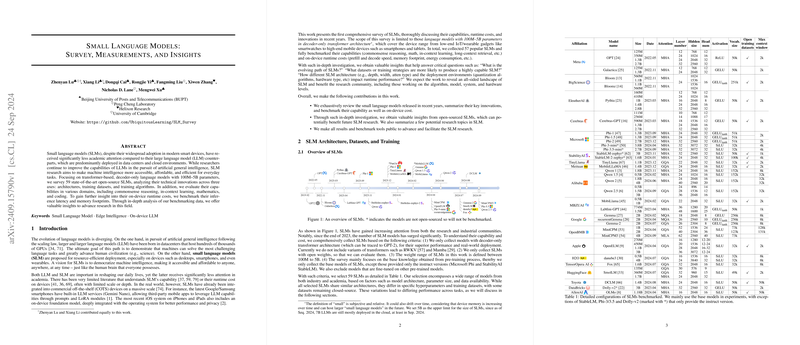

The paper "Small LLMs: Survey, Measurements, and Insights" authored by Zhenyan Lu et al. provides a comprehensive survey and systematic analysis of small LLMs (SLMs) with 100M–5B parameters. This work stands out for its extensive benchmarking of 59 state-of-the-art open-source SLMs across various domains, including commonsense reasoning, in-context learning, mathematics, and coding. The primary areas of focus in the survey include model architectures, training datasets, training algorithms, and their on-device runtime performance. Here, we detail the key findings and their broader implications.

Model Architecture

Most SLMs use the same decoder-only transformer architecture as larger LLMs. The paper identifies several clear trends in their architectural choices:

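- Attention Mechanisms: Grouped-Query Attention (GQA) is gaining traction over traditional Multi-Head Attention (MHA). GQA shares each key/value head across a group of query heads, which shrinks the KV cache and reduces memory traffic during decoding.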

- Feed-Forward Networks (FFNs): Gated FFNs are replacing standard FFNs because they perform better.

- Activation Functions: SiLU has become the dominant activation function, replacing earlier preferences for ReLU and GELU.

- Normalization Technique: RMSNorm is now preferred over LayerNorm, contributing to better computational efficiency.

- Vocabulary Size: There is an increasing trend toward larger vocabularies, with recent models often exceeding 50K tokens.
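The gated FFN, SiLU, and RMSNorm trends listed above can be illustrated with a minimal pure-Python sketch. It is not code from the paper or from any specific model. The weight matrices are hypothetical identity projections, and there is no batching. The gated FFN follows the SwiGLU form, in which a SiLU-activated "gate" projection multiplies an "up" projection element-wise.

```python
import math


def silu(x: float) -> float:
    # SiLU (also called swish): x * sigmoid(x). It is written in two branches
    # so that math.exp never receives a large positive argument and overflows.
    if x >= 0:
        return x / (1.0 + math.exp(-x))
    e = math.exp(x)
    return x * e / (1.0 + e)


def rms_norm(x: list[float], weight: list[float], eps: float = 1e-6) -> list[float]:
    # RMSNorm divides by the root-mean-square of the vector. Unlike LayerNorm it
    # does not subtract the mean and has no bias, so it needs fewer operations.
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [w * v / rms for v, w in zip(x, weight)]


def matvec(m: list[list[float]], v: list[float]) -> list[float]:
    # Multiply a (rows x cols) matrix, stored as a list of rows, by a vector.
    return [sum(a * b for a, b in zip(row, v)) for row in m]


def gated_ffn(x, w_gate, w_up, w_down):
    # Gated FFN (SwiGLU form): down(silu(gate(x)) * up(x)).
    # A standard FFN would compute down(act(up(x))) with no separate gate branch.
    gate = [silu(g) for g in matvec(w_gate, x)]
    up = matvec(w_up, x)
    return matvec(w_down, [g * u for g, u in zip(gate, up)])


# Toy usage with identity projections (hidden size = model size = 2).
identity = [[1.0, 0.0], [0.0, 1.0]]
h = rms_norm([3.0, 4.0], weight=[1.0, 1.0])
y = gated_ffn(h, identity, identity, identity)
```

The gated form needs three weight matrices instead of two. Models typically compensate by shrinking the FFN's hidden width.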

These architectural innovations not only enhance the capability of SLMs but also significantly affect their runtime performance, which is crucial for deployment in resource-constrained environments.
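The runtime effect of GQA can be estimated directly from the KV-cache size. The sketch below uses the standard formula: one key and one value vector per KV head, per layer, per token. It also shows how query heads map onto shared KV heads. The model configuration (24 layers, 16 query heads of dimension 64, an fp16 cache, 2,048 tokens) is hypothetical and does not describe any model from the paper.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, bytes_per_elem: int = 2, batch: int = 1) -> int:
    # Factor 2: a key and a value vector are cached per KV head, per layer, per token.
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_elem * batch


def kv_head_for_query(q_head: int, n_q_heads: int, n_kv_heads: int) -> int:
    # In GQA, consecutive query heads form groups that read the same KV head.
    # MHA is the special case n_kv_heads == n_q_heads (group size 1).
    assert n_q_heads % n_kv_heads == 0, "query heads must split evenly into groups"
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size


# Hypothetical SLM config: 24 layers, 16 query heads of dim 64, fp16 cache, 2048 tokens.
mha = kv_cache_bytes(24, n_kv_heads=16, head_dim=64, seq_len=2048)
gqa = kv_cache_bytes(24, n_kv_heads=4, head_dim=64, seq_len=2048)
print(mha / 2**20, gqa / 2**20)  # → 192.0 48.0 (MiB)
```

With 4 KV heads instead of 16, the cache shrinks by 4×. This matters on devices where memory capacity and bandwidth, not compute, are the bottleneck.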

Training Datasets

The quality and scale of pre-training data play a pivotal role in SLM performance. The survey identifies twelve pre-training datasets that are frequently used to train these models. Two of them, DCLM and FineWeb-Edu, stand out for the strong performance of the models trained on them. Both use model-based filtering: a trained classifier scores documents, and only high-scoring ones are kept. The paper also highlights a trend of "over-training" SLMs, where models see far more tokens than the roughly 20 tokens per parameter that the Chinchilla scaling law identifies as compute-optimal. Because SLMs are deployed on resource-constrained devices, spending extra training compute to make a small model more capable is worthwhile.
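The degree of over-training can be quantified by comparing a model's token budget with the Chinchilla rule of thumb. The sketch below uses two widely cited approximations rather than figures from the paper: about 20 tokens per parameter for compute-optimal training, and about 6 FLOPs per parameter per training token. The 1B-parameter, 2T-token model is hypothetical.

```python
CHINCHILLA_TOKENS_PER_PARAM = 20  # rule of thumb from Hoffmann et al. (2022)


def chinchilla_optimal_tokens(n_params: float) -> float:
    # Approximate compute-optimal number of training tokens for a given model size.
    return CHINCHILLA_TOKENS_PER_PARAM * n_params


def overtraining_factor(n_params: float, n_tokens: float) -> float:
    # Values above 1 mean the model saw more tokens than the compute-optimal budget.
    return n_tokens / chinchilla_optimal_tokens(n_params)


def training_flops(n_params: float, n_tokens: float) -> float:
    # Common approximation: about 6 FLOPs per parameter per training token
    # (forward plus backward pass).
    return 6 * n_params * n_tokens


# Hypothetical SLM: 1B parameters trained on 2T tokens.
n, d = 1e9, 2e12
print(chinchilla_optimal_tokens(n))  # → 20000000000.0 (20B tokens)
print(overtraining_factor(n, d))     # → 100.0
print(training_flops(n, d))          # → 1.2e+22
```

A factor of 100 means that training compute was spent well beyond the point that minimizes loss for that compute. The payoff is a model that stays small, and therefore cheap to run, at inference time.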

Training Algorithms

Innovative training algorithms further optimize SLM performance:

- Maximal Update Parameterization (μP): Applied in models such as Cerebras-GPT, μP improves training stability and lets hyperparameters tuned on a small proxy model transfer to larger models.

- Knowledge Distillation: Models such as LaMini-GPT and Gemma-2 use this approach to transfer knowledge from larger teacher models, improving their performance for a given size.

- Two-Stage Pre-training Strategy: Used by MiniCPM, this strategy trains on large-scale, coarser-quality data in the first phase and introduces high-quality data in the annealing phase, when the learning rate decays.
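To make the knowledge-distillation bullet above concrete, here is a minimal sketch of the generic Hinton-style distillation loss for a single token position. It mixes a KL term toward the teacher's temperature-softened distribution with ordinary cross-entropy on the ground-truth label. This is not the actual training code of LaMini-GPT or Gemma-2, whose recipes may differ (for instance, by distilling through teacher-generated text rather than teacher logits). The temperature, the weight `alpha`, and the toy logits are hypothetical.

```python
import math


def log_softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    # Numerically stable log-softmax: subtract the max before exponentiating.
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    log_sum = m + math.log(sum(math.exp(z - m) for z in scaled))
    return [z - log_sum for z in scaled]


def kl_divergence(log_p: list[float], log_q: list[float]) -> float:
    # KL(p || q) = sum_i p_i * (log p_i - log q_i), computed from log-probabilities.
    return sum(math.exp(lp) * (lp - lq) for lp, lq in zip(log_p, log_q))


def distillation_loss(student_logits, teacher_logits, label,
                      temperature: float = 2.0, alpha: float = 0.5) -> float:
    # Soft-target term: match the teacher's temperature-softened distribution.
    # Multiplying by T^2 keeps its gradient scale comparable across temperatures.
    soft = kl_divergence(log_softmax(teacher_logits, temperature),
                         log_softmax(student_logits, temperature)) * temperature ** 2
    # Hard-target term: ordinary cross-entropy on the ground-truth token.
    hard = -log_softmax(student_logits)[label]
    return alpha * soft + (1 - alpha) * hard


# Toy 4-token vocabulary: the loss pulls the student toward the teacher's distribution.
teacher = [2.0, 1.0, 0.1, -1.0]
student = [0.5, 0.4, 0.3, 0.2]
loss = distillation_loss(student, teacher, label=0)
```

The soft term carries the teacher's relative preferences among wrong answers. A one-hot label cannot supply that signal.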

Capabilities and Performance Gains

The paper documents significant performance gains in SLMs from 2022 to 2024 across domains such as commonsense reasoning, problem-solving, and mathematics. The Phi family, trained on closed-source datasets, shows particularly strong improvements, suggesting that SLMs are closing the gap with their larger counterparts. The survey also shows that, although performance generally correlates with model size, architectural choices and high-quality datasets can let smaller models outperform larger ones on certain tasks.

In-Context Learning

SLMs show varying degrees of in-context learning ability: adding demonstration examples to the prompt improves their performance on some tasks. The paper finds that larger models tend to have stronger in-context learning abilities. Nevertheless, the benefit varies significantly across tasks and models.
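In-context learning works by placing demonstrations in the prompt ahead of the real query. The sketch below builds zero-shot and k-shot prompts. The "Input:/Output:" template, the instruction line, and the sentiment examples are hypothetical; the paper's evaluation prompts may be formatted differently. Measuring the in-context learning gain amounts to comparing a model's accuracy on k-shot prompts against its zero-shot accuracy.

```python
def build_few_shot_prompt(demos: list[tuple[str, str]], query: str,
                          instruction: str = "") -> str:
    # Each demonstration is an (input, output) pair placed before the real query.
    parts = [instruction] if instruction else []
    for x, y in demos:
        parts.append(f"Input: {x}\nOutput: {y}")
    # The model is expected to continue the text after the final "Output:".
    parts.append(f"Input: {query}\nOutput:")
    return "\n\n".join(parts)


demos = [("The movie was wonderful.", "positive"),
         ("I want my money back.", "negative")]
zero_shot = build_few_shot_prompt([], "A delightful surprise.")
two_shot = build_few_shot_prompt(demos, "A delightful surprise.",
                                 instruction="Classify the sentiment.")
print(two_shot)
```

Every demonstration lengthens the prompt and therefore the prefill cost. This is one reason the in-context learning trade-off matters more on devices.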

Runtime Costs and Latency

The paper provides a thorough evaluation of runtime costs on devices, focusing on both latency and memory usage:

- Inference Latency: Latency splits into a prefill phase, which processes the whole prompt in parallel, and a decode phase, which generates output tokens one at a time. Prefill latency is more sensitive to model architecture.

- Quantization: Quantization substantially reduces inference latency and memory footprint. However, regular bit widths (e.g., 4-bit) tend to be more effective than irregular ones (e.g., 3-bit).

- Hardware Variations: Performance differs significantly between GPU and CPU implementations, with GPUs generally faster on highly parallel workloads such as the prefill phase.
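The runtime observations above can be illustrated with a crude roofline-style estimate. Prefill is modeled as compute-bound, at about 2 FLOPs per parameter per prompt token. Decode at batch size 1 is modeled as bandwidth-bound, because every step streams all weights from memory. This is a simplified lower-bound model, not the paper's methodology. It ignores attention FLOPs, KV-cache traffic, dequantization overhead (where irregular widths such as 3-bit may pay extra), and kernel efficiency. The device throughputs and the 1.5B model size are hypothetical. Both processors are assumed to share one memory bandwidth, as on many mobile SoCs with unified memory.

```python
from dataclasses import dataclass


@dataclass
class Device:
    name: str
    flops_per_s: float   # sustained compute throughput (hypothetical)
    bytes_per_s: float   # sustained memory bandwidth (hypothetical)


def weight_bytes(n_params: float, bits_per_weight: float) -> float:
    # Memory needed just to hold the weights at a given precision.
    return n_params * bits_per_weight / 8


def prefill_seconds(n_params: float, prompt_tokens: int, dev: Device) -> float:
    # Prefill pushes every prompt token through the model at once; with roughly
    # 2 FLOPs per parameter per token it tends to be compute-bound.
    return 2 * n_params * prompt_tokens / dev.flops_per_s


def decode_seconds_per_token(n_params: float, bits_per_weight: float,
                             dev: Device) -> float:
    # Decode emits one token per step; at batch size 1 each step must read all
    # weights from memory, so it tends to be bandwidth-bound. Fewer bits per
    # weight means fewer bytes to stream.
    return weight_bytes(n_params, bits_per_weight) / dev.bytes_per_s


cpu = Device("phone CPU", flops_per_s=1e11, bytes_per_s=5e10)
gpu = Device("phone GPU", flops_per_s=2e12, bytes_per_s=5e10)
n = 1.5e9  # hypothetical 1.5B-parameter SLM
for dev in (cpu, gpu):
    print(dev.name, "prefill(256 tok) s:", prefill_seconds(n, 256, dev))
for bits in (16, 8, 4):
    print(bits, "bit: weights GB", weight_bytes(n, bits) / 1e9,
          "decode s/token", decode_seconds_per_token(n, bits, cpu))
```

In this simplified model, the GPU's higher compute throughput shows up only in prefill. Quantization helps mainly through the memory-bound decode term and the smaller footprint.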

Conclusions and Future Directions

The paper concludes with multiple key insights and potential future research directions for SLMs:

- Co-Design with Hardware: Future research should focus on extreme co-design and optimization of SLM architecture with specific device hardware to maximize efficiency.

- High-Quality Synthetic Datasets: Further research is needed on standardizing and improving synthetic data curation for pre-training SLMs.

- Deployment-Aware Chinchilla Law: Developing a deployment-aware scaling law that considers the resource constraints of target devices could significantly optimize SLMs.

- Continual On-Device Learning: Exploring efficient on-device learning methods, such as zeroth-order optimization, could improve personalization without significant resource overhead.

- Device-Cloud Collaboration: Efficient strategies for SLM and LLM collaboration could balance privacy concerns with computational efficiency.

- Fair Benchmarking: There is a need for comprehensive and standardized benchmarks specifically tailored to the tasks SLMs are likely to encounter on devices.

- Sparse SLMs: Sparse architectures and runtime-level sparsity in SLMs remain underexplored and present opportunities for innovation.
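Zeroth-order optimization, mentioned above as a route to on-device learning, estimates gradients from forward passes alone, so no activations need to be stored for backpropagation. The sketch below implements an SPSA-style step, the estimator used by MeZO. It perturbs the parameters along a random Gaussian direction, evaluates the loss twice, and steps along that direction. The toy quadratic loss, learning rate, and perturbation size are hypothetical stand-ins for a real model and its training loss.

```python
import random


def zo_step(params: list[float], loss_fn, rng: random.Random,
            lr: float = 0.01, eps: float = 1e-3) -> list[float]:
    # One SPSA-style zeroth-order step: two forward passes, no backpropagation.
    z = [rng.gauss(0.0, 1.0) for _ in params]           # random direction
    plus = [p + eps * zi for p, zi in zip(params, z)]
    minus = [p - eps * zi for p, zi in zip(params, z)]
    # Central finite difference estimates the directional derivative along z.
    projected_grad = (loss_fn(plus) - loss_fn(minus)) / (2 * eps)
    # Step against that estimate along z. (MeZO avoids storing z by regenerating
    # it from a saved random seed; this sketch keeps it in a list for clarity.)
    return [p - lr * projected_grad * zi for p, zi in zip(params, z)]


target = [1.0, -2.0, 3.0]


def loss(p: list[float]) -> float:
    # Toy quadratic standing in for a model's training loss.
    return sum((pi - ti) ** 2 for pi, ti in zip(p, target))


rng = random.Random(0)
params = [0.0, 0.0, 0.0]
initial = loss(params)
for _ in range(500):
    params = zo_step(params, loss, rng)
print(initial, loss(params))
```

The trade-off is noisier updates, so training typically needs more steps than backpropagation does. In exchange, peak memory stays close to that of inference.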

In summary, Lu et al. provide valuable insights that advance the study of SLMs. Their detailed analysis of architectures, datasets, training methods, performance, and runtime costs lays a foundation for future research on making SLMs more efficient and capable in real-world deployments.