OpenELM: A Comprehensive Open Source LLM Framework

Introduction

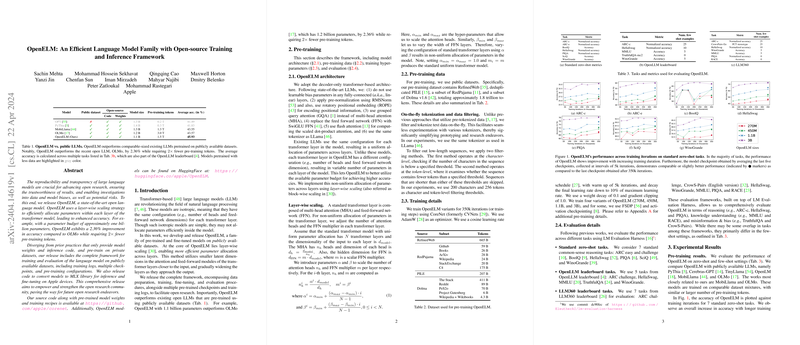

OpenELM is a family of open, transformer-based LLMs that allocates parameters non-uniformly across layers using a layer-wise scaling strategy. This allocation improves accuracy for a given parameter budget and lets the model reach competitive results with fewer pre-training tokens than comparable open models.

Model Architecture and Training Approach

OpenELM's architecture departs from the usual practice of giving every transformer layer the same configuration. Instead, it uses a layer-wise scaling strategy that varies the width of each layer:

- Each layer can have a different number of attention heads and a different feed-forward dimension, so model capacity is not spread uniformly across depth.

- Layers near the input are narrower and layers near the output are wider, so more of the parameter budget goes to the later layers.
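The layer-wise scaling idea above can be sketched in a few lines of Python. This is a minimal illustration that assumes the per-layer width is linearly interpolated between a minimum and a maximum; the function name `layerwise_scaling`, the `alpha` and `beta` ranges, and the example dimensions are hypothetical values chosen for illustration, not OpenELM's released configuration.

```python
from dataclasses import dataclass


@dataclass
class LayerConfig:
    num_heads: int  # attention heads in this layer
    ffn_dim: int    # hidden width of this layer's feed-forward block


def layerwise_scaling(num_layers, model_dim, head_dim,
                      alpha=(0.5, 1.0), beta=(0.5, 4.0)):
    """Interpolate per-layer widths from the first layer to the last.

    alpha scales the attention width (and so the head count); beta is the
    feed-forward multiplier. Both ranges are illustrative assumptions.
    """
    configs = []
    for i in range(num_layers):
        # t runs from 0.0 at the first layer to 1.0 at the last layer.
        t = i / (num_layers - 1) if num_layers > 1 else 0.0
        a = alpha[0] + (alpha[1] - alpha[0]) * t
        b = beta[0] + (beta[1] - beta[0]) * t
        num_heads = max(1, round(a * model_dim / head_dim))
        ffn_dim = round(b * model_dim)
        configs.append(LayerConfig(num_heads, ffn_dim))
    return configs


configs = layerwise_scaling(num_layers=4, model_dim=512, head_dim=64)
for i, cfg in enumerate(configs):
    print(f"layer {i}: heads={cfg.num_heads}, ffn_dim={cfg.ffn_dim}")
```

With these assumed settings the head count and feed-forward width both increase with depth, which is the pattern the two bullets describe. A uniform transformer corresponds to the special case where the minimum and maximum of each range are equal.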

OpenELM was pre-trained on about 1.5 trillion tokens drawn from a combination of publicly available datasets, significantly fewer than models of comparable complexity typically require.

Key Results and Benchmarks

OpenELM achieves notable efficiency and performance improvements compared to existing models like OLMo and MobiLlama:

- With about 1.1 billion parameters, OpenELM scores 2.36% higher average accuracy than the comparably sized OLMo while using half as many pre-training tokens.

- On common NLP benchmarks, OpenELM outperforms comparably sized models pre-trained on public datasets in both zero-shot and few-shot settings.

OpenELM’s comprehensive release, including training logs and model weights, facilitates greater transparency and reproducibility in AI research, which is often hampered by proprietary practices.

Implications and Future Directions

The introduction of OpenELM signals a shift towards more accessible and efficient AI research tools. Because it is open source and performs well for its size, OpenELM is a useful resource for researchers who want to develop more effective and efficient NLP models. Future research could explore further optimizations in parameter scaling and allocation to improve efficiency. The community might also develop faster RMSNorm implementations that better handle the computing demands of models like OpenELM.

Performance Analysis

Despite its higher accuracy, OpenELM is slower at inference than comparable models that use LayerNorm. This finding has prompted ongoing work on how the choice and implementation of normalization affect throughput, and it points to a clear area for optimization. Future updates could address the bottleneck by adjusting or optimizing the RMSNorm implementation to close the speed gap with LayerNorm-based models.
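To make the two normalization schemes concrete, here is a minimal pure-Python sketch of each, applied to a single vector. It is an illustration under simplifying assumptions, not OpenELM's code: the learnable gain and bias that real layers carry are omitted, and the function names and `eps` value are chosen for this example.

```python
import math


def layer_norm(x, eps=1e-5):
    """LayerNorm: subtract the mean, then divide by the standard deviation."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]


def rms_norm(x, eps=1e-5):
    """RMSNorm: divide by the root mean square, with no mean subtraction."""
    rms = math.sqrt(sum(v * v for v in x) / len(x) + eps)
    return [v / rms for v in x]


x = [1.0, 2.0, 3.0, 4.0]
print("LayerNorm:", layer_norm(x))
print("RMSNorm:  ", rms_norm(x))
```

RMSNorm does strictly less arithmetic than LayerNorm here, since it skips the mean subtraction. That suggests the slowdown described above is more likely a matter of how the operation is implemented on the hardware than of the formula itself.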

Conclusion

OpenELM represents a significant advance in the design and deployment of LLMs. By using parameters more efficiently and reducing pre-training data requirements, OpenELM achieves strong results among models trained on public data and furthers the democratization of AI research, empowering a broader community to contribute to and build on this work.