Introduction

Large language models (LLMs) have gained significant traction for their impressive language generation abilities. However, they often produce factual errors and hallucinations, which exposes the limits of the knowledge stored in their parameters. Retrieval-Augmented Generation (RAG) offers a practical supplement by conditioning generation on external documents, but its efficacy depends critically on the relevance and accuracy of those documents. Current approaches may indiscriminately incorporate irrelevant retrieved content, which undermines the robustness of generation.

Corrective Strategies for Robust Generation

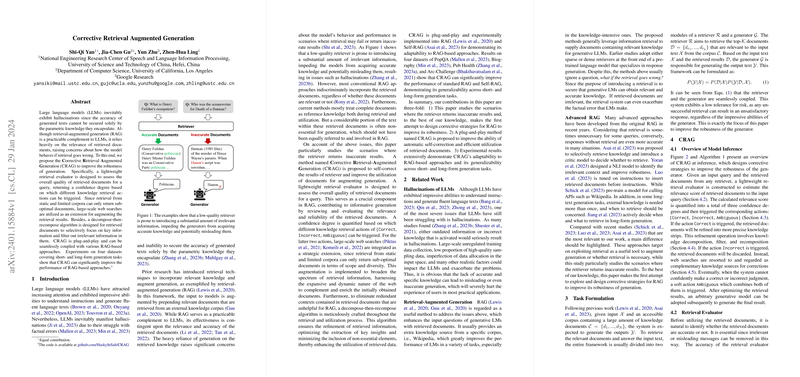

The paper introduces Corrective Retrieval Augmented Generation (CRAG), a method designed to make RAG systems more robust. CRAG adds a lightweight retrieval evaluator that estimates how relevant the retrieved documents are to a query and, based on that estimate, triggers one of three actions: Correct, Incorrect, or Ambiguous. How the documents are then used depends on which action is triggered. For retrieved documents, a decompose-then-recompose algorithm selectively focuses on key information and filters out irrelevant content.
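The action-selection and refinement steps can be sketched in Python as below. This is a minimal illustration under stated assumptions, not the paper's implementation: `overlap_score` is a hypothetical word-overlap heuristic standing in for the retrieval evaluator, the threshold values are arbitrary, and both the selection rule (Correct if any document clears an upper threshold, Incorrect if every document falls below a lower threshold, Ambiguous otherwise) and the sentence-level split inside `decompose_then_recompose` are assumptions chosen for illustration.

```python
import re
from enum import Enum
from typing import Callable, List


class Action(Enum):
    CORRECT = "correct"
    INCORRECT = "incorrect"
    AMBIGUOUS = "ambiguous"


def overlap_score(query: str, text: str) -> float:
    """Hypothetical stand-in for the retrieval evaluator: the fraction of
    query words that also appear in the text (a value in [0, 1])."""
    q = set(re.findall(r"\w+", query.lower()))
    t = set(re.findall(r"\w+", text.lower()))
    return len(q & t) / len(q) if q else 0.0


def select_action(scores: List[float], upper: float, lower: float) -> Action:
    """Map per-document relevance scores to one corrective action (assumed rule)."""
    if any(s > upper for s in scores):
        return Action.CORRECT      # at least one document looks clearly relevant
    if all(s < lower for s in scores):
        return Action.INCORRECT    # every document looks irrelevant
    return Action.AMBIGUOUS        # the evidence is mixed


def decompose_then_recompose(
    query: str,
    documents: List[str],
    score: Callable[[str, str], float],
    keep_threshold: float,
) -> str:
    """Split documents into sentence strips, keep the relevant strips, rejoin them."""
    kept: List[str] = []
    for doc in documents:
        # Decompose: naive sentence split on ., ! or ? followed by whitespace
        strips = [s.strip() for s in re.split(r"(?<=[.!?])\s+", doc) if s.strip()]
        # Filter: drop strips the scorer rates below the threshold
        kept.extend(s for s in strips if score(query, s) >= keep_threshold)
    # Recompose: join the surviving strips in their original order
    return " ".join(kept)


if __name__ == "__main__":
    query = "capital of France"
    docs = ["Paris is the capital of France. It has many museums.", "Bananas are yellow."]
    scores = [overlap_score(query, d) for d in docs]
    print(select_action(scores, upper=0.6, lower=0.2))
    print(decompose_then_recompose(query, docs, overlap_score, keep_threshold=0.5))
```

In this toy run the first document scores 1.0 and the second 0.0, so the Correct action is chosen and only the sentence "Paris is the capital of France." survives refinement. A real evaluator would replace `overlap_score` without changing the surrounding control flow.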

Web Search Integration and Experimentation

A distinctive aspect of CRAG is its use of large-scale web search when retrieval is judged Incorrect. Because retrieval from a static, limited corpus can return only suboptimal documents, drawing on the web broadens the scope and variety of information available to the model and supplies external knowledge to correct the initial corpus results. CRAG is plug-and-play and can be combined with existing RAG-based approaches. Experiments on four datasets spanning short- and long-form generation tasks show that it improves performance and generalizes across these tasks.
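The action-dependent choice of knowledge source, and the plug-and-play wrapping around an arbitrary generator, can be sketched as follows. The components `refine`, `web_search`, and `generator` are hypothetical interfaces passed in as plain callables (no real search API is assumed), and actions are given as strings so the sketch stands alone. Only the routing of Incorrect to web search comes from the text above; treating Ambiguous as a combination of internal and web knowledge is an assumption of this sketch.

```python
from typing import Callable, List

# Hypothetical interfaces for the pluggable components
Refiner = Callable[[str, List[str]], str]   # (query, docs) -> refined text
Searcher = Callable[[str], List[str]]        # query -> web result snippets
Generator = Callable[[str, str], str]        # (query, context) -> answer


def corrective_context(
    action: str, query: str, retrieved: List[str], refine: Refiner, web_search: Searcher
) -> str:
    """Select the knowledge passed to the generator for a given action."""
    if action == "correct":
        # Trust the corpus: keep only the refined retrieved documents
        return refine(query, retrieved)
    if action == "incorrect":
        # Discard the corpus results and fall back to web search
        return refine(query, web_search(query))
    if action == "ambiguous":
        # Assumption in this sketch: combine both sources
        internal = refine(query, retrieved)
        external = refine(query, web_search(query))
        return "\n".join(part for part in (internal, external) if part)
    raise ValueError(f"unknown action: {action!r}")


def crag_generate(
    query: str,
    action: str,
    retrieved: List[str],
    refine: Refiner,
    web_search: Searcher,
    generator: Generator,
) -> str:
    """Plug-and-play wrapper: any generator that accepts (query, context) works."""
    context = corrective_context(action, query, retrieved, refine, web_search)
    return generator(query, context)


if __name__ == "__main__":
    def fake_search(q: str) -> List[str]:
        # Stub standing in for a real web search backend
        return [f"Web snippet about {q}."]

    def keep_all(q: str, docs: List[str]) -> str:
        # Stub refiner that keeps every document unchanged
        return " ".join(docs)

    def echo(q: str, ctx: str) -> str:
        # Stub generator that just shows what context it received
        return f"Q: {q} | context: {ctx}"

    for action in ("correct", "incorrect", "ambiguous"):
        print(crag_generate("CRAG", action, ["Stale corpus passage."], keep_all, fake_search, echo))
```

Because the generator is only a callable, the same wrapper could in principle sit in front of different RAG-based generators, which is one way to read the plug-and-play claim.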

Conclusion and Contribution

CRAG's design for self-correcting retrieval and making better use of retrieved documents is a notable step toward addressing the pitfalls of RAG. The retrieval evaluator is central: it helps keep misleading information out of the generator's input and determines which corrective action to take based on its assessment of the documents. CRAG shows significant improvements over standard RAG and Self-RAG, suggesting broad applicability wherever the reliability of retrieved information is questionable. The experiments also show that CRAG adapts to different RAG-based methods and generalizes across both short- and long-form generation tasks.