Benchmarking LLMs in Retrieval-Augmented Generation: An Analysis of Key Abilities

Introduction

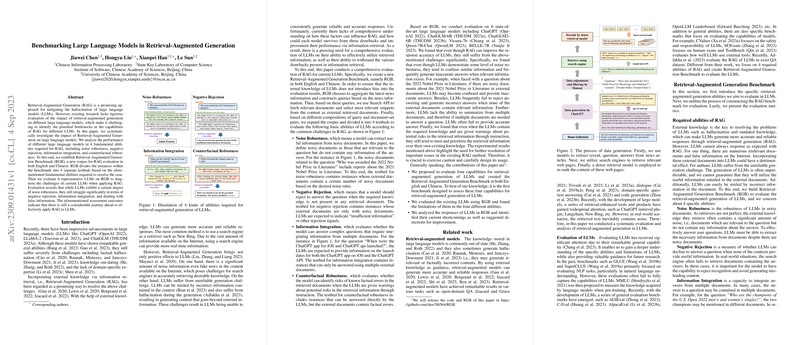

Retrieval-Augmented Generation (RAG) is a significant step toward addressing well-known limitations of LLMs, notably hallucination, outdated knowledge, and a lack of domain-specific expertise. Despite the promise of integrating external knowledge through retrieval, it remains unclear how effectively and reliably LLMs use the information they retrieve. This paper systematically examines the impact of RAG on LLMs, focusing on four critical abilities: noise robustness, negative rejection, information integration, and counterfactual robustness. To evaluate these abilities, the paper introduces the Retrieval-Augmented Generation Benchmark (RGB), which includes both English and Chinese testbeds.

Critical Abilities of RAG in LLMs

In RAG, a model consults external information to support its responses. To use that external knowledge effectively, however, the model needs several specific capabilities:

- Noise Robustness: The ability of an LLM to sift useful information from noisy, irrelevant details in retrieved documents.

- Negative Rejection: The model's capacity to withhold responses when reliable information is absent in the retrieved documents.

- Information Integration: Competence in synthesizing answers from multiple documents, especially for complex queries requiring cross-referencing of information.

- Counterfactual Robustness: The ability to identify and disregard incorrect facts in the external knowledge, particularly when the model has been warned in advance that the retrieved information may contain inaccuracies.
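To make the first two abilities concrete, the following is a minimal sketch of how a retrieved document set with a controlled share of noise might be assembled. It is an illustration under stated assumptions, not the benchmark's own code: the function `build_document_set`, its parameters, and the placeholder documents are all hypothetical. Setting the noise share to 1.0 yields a set with no useful document at all, which is the situation negative rejection is concerned with.

```python
import random


def build_document_set(positive_docs, noise_docs, total, noise_ratio, seed=0):
    """Sample `total` documents, a `noise_ratio` fraction of which are noise.

    Hypothetical helper for illustration only; all names are assumptions.
    """
    if not 0.0 <= noise_ratio <= 1.0:
        raise ValueError("noise_ratio must be between 0 and 1")
    n_noise = round(total * noise_ratio)
    n_positive = total - n_noise
    if n_noise > len(noise_docs) or n_positive > len(positive_docs):
        raise ValueError("not enough documents to sample from")
    rng = random.Random(seed)  # fixed seed keeps the set reproducible
    docs = rng.sample(positive_docs, n_positive) + rng.sample(noise_docs, n_noise)
    rng.shuffle(docs)  # do not reveal document type through position
    return docs


# Placeholder documents standing in for real retrieved passages.
positive = [f"positive-{i}" for i in range(5)]
noise = [f"noise-{i}" for i in range(5)]

# Noise robustness: some useful documents mixed with noise.
mixed = build_document_set(positive, noise, total=5, noise_ratio=0.4)

# Negative rejection: every document is noise, so the model should decline.
noise_only = build_document_set(positive, noise, total=5, noise_ratio=1.0)
```

Shuffling after sampling is a design choice made here so that a model cannot infer which documents are useful from their order.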

The Retrieval-Augmented Generation Benchmark (RGB)

RGB is a new benchmark designed to assess these capabilities in LLMs. It is built from recent news so that its questions remain relevant and challenging for current models. The benchmark comprises four testbeds, each tailored to evaluate one of the critical RAG abilities defined above. Together, they give a fine-grained picture of how LLMs perform under RAG and expose their strengths and shortcomings across both languages and all four tasks.

Evaluation Findings

The evaluation of six leading LLMs on RGB shows where current models fall short. The models exhibited varied levels of noise robustness, but performance declined markedly as the noise ratio increased. Negative rejection proved a substantial challenge: models struggled to withhold a response when every retrieved document was noise. In information integration tasks, models showed limited capability, suggesting a need for better reasoning and synthesis over multiple documents. Counterfactual robustness was particularly difficult: models were often misled by incorrect retrieved information even when their internal knowledge contradicted it.
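Findings of this kind rest on simple per-response checks. The sketch below shows one way such checks could be computed; it assumes a case-insensitive substring match for correctness and a fixed refusal phrase for rejection. Both rules, along with the names `REFUSAL_MARKER`, `contains_answer`, `is_refusal`, `accuracy`, and `rejection_rate`, are assumptions made for illustration and may differ from the scoring the paper actually uses.

```python
# Hypothetical phrase a model is instructed to emit when it cannot answer.
REFUSAL_MARKER = "insufficient information"


def contains_answer(response, answers):
    """True if any acceptable answer appears in the response (case-insensitive)."""
    text = response.lower()
    return any(answer.lower() in text for answer in answers)


def is_refusal(response):
    """True if the response contains the assumed refusal phrase."""
    return REFUSAL_MARKER in response.lower()


def accuracy(responses, gold_answers):
    """Fraction of responses that contain an acceptable answer."""
    if not responses:
        return 0.0
    hits = sum(contains_answer(r, a) for r, a in zip(responses, gold_answers))
    return hits / len(responses)


def rejection_rate(responses):
    """Fraction of responses that decline to answer."""
    if not responses:
        return 0.0
    return sum(is_refusal(r) for r in responses) / len(responses)
```

For example, `accuracy` would be applied to responses generated from mixed document sets, while `rejection_rate` would be applied to responses generated from noise-only sets, where declining is the desired behavior.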

Discussion

The results of evaluating LLMs on RGB point to a clear need for targeted improvements in RAG methods. LLMs have remarkable potential, but their current use of RAG reveals critical weaknesses, specifically in handling noisy information, integrating multiple pieces of information, and detecting factual inaccuracies. Addressing these weaknesses is essential for making LLMs reliable across diverse applications.

Future Directions

The findings advocate for a more nuanced approach to developing and refining RAG techniques in LLMs. Future research may focus on:

- Enhancing models' comprehension and contextual discrimination to improve noise robustness and negative rejection rates.

- Developing advanced reasoning algorithms for better information integration from disparate sources.

- Implementing robust fact-checking mechanisms within LLMs to bolster counterfactual robustness.

Progress in these directions would help realize the full potential of RAG, enabling LLMs to give more accurate, reliable, and contextually aware responses.

Conclusion

The evaluation conducted with RGB shows that although RAG is a promising route to advancing LLM capabilities, substantial hurdles remain. By systematically breaking down the abilities that effective RAG requires, the paper provides a foundation for future work on LLM development. As the field evolves, focused effort on the challenges outlined here will be key to realizing the potential of retrieval-augmented generation in LLMs.