Summary of RQ-RAG: Learning to Refine Queries for Retrieval Augmented Generation

The paper introduces RQ-RAG, a framework that improves how LLMs generate accurate responses to complex queries. Despite rapid progress, LLMs often produce inaccurate or "hallucinated" responses, partly because their knowledge comes from training data that stays fixed after training. The paper addresses this limitation by building on Retrieval-Augmented Generation (RAG), a method that feeds external documents into response generation to supply up-to-date, contextually relevant information.

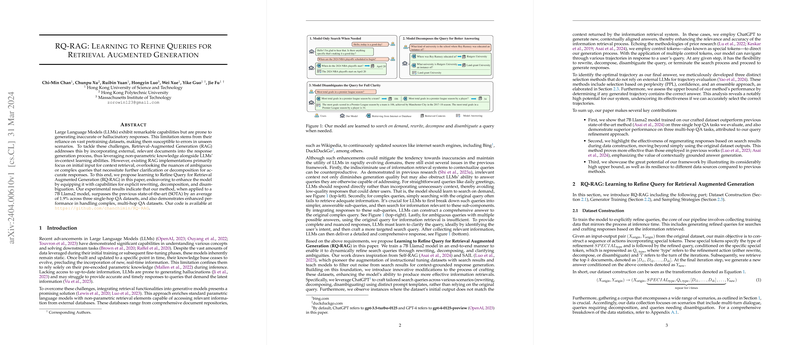

The paper's principal contribution is adding query refinement to the retrieval process. Traditional RAG systems retrieve using only the initial input query. RQ-RAG instead trains the model to explicitly rewrite, decompose, and disambiguate queries. This refinement makes the retrieved contexts more relevant and improves performance on both single-hop and multi-hop question answering.
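The refinement idea can be pictured as a small branching loop: each refinement action yields one or more new queries, each query triggers a retrieval, and the answer is generated from everything retrieved along that branch. This is a minimal sketch, not the paper's implementation. In RQ-RAG a single fine-tuned model generates both the refined queries and the answer, and it may chain several refinement steps. Here `refine`, `retrieve`, and `respond` are hypothetical callables, the toy stand-ins are invented, and each branch applies exactly one action to the original question.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

# The three refinement actions named in the paper; encoding them as plain strings is an assumption.
ACTIONS = ("rewrite", "decompose", "disambiguate")


@dataclass
class Trajectory:
    question: str
    action: str
    # (refined_query, retrieved_docs) pairs; "decompose" can produce several.
    steps: List[Tuple[str, List[str]]] = field(default_factory=list)
    answer: str = ""


def expand(question: str,
           refine: Callable[[str, str], List[str]],
           retrieve: Callable[[str], List[str]],
           respond: Callable[[str, List[str]], str]) -> List[Trajectory]:
    """Branch once per refinement action and produce one answered trajectory per branch."""
    trajectories = []
    for action in ACTIONS:
        traj = Trajectory(question, action)
        for q in refine(action, question):
            traj.steps.append((q, retrieve(q)))
        # Answer from all documents retrieved along this branch.
        docs = [d for _, ds in traj.steps for d in ds]
        traj.answer = respond(question, docs)
        trajectories.append(traj)
    return trajectories


# Hypothetical toy stand-ins for the fine-tuned model and the search engine.
def toy_refine(action: str, q: str) -> List[str]:
    if action == "decompose":
        return [f"{q} [sub-question 1]", f"{q} [sub-question 2]"]
    return [f"{action}: {q}"]


def toy_retrieve(q: str) -> List[str]:
    return [f"doc for <{q}>"]


def toy_respond(question: str, docs: List[str]) -> str:
    return f"answer using {len(docs)} docs"


trajs = expand("Who directed the film?", toy_refine, toy_retrieve, toy_respond)
for t in trajs:
    print(t.action, "->", t.answer)
```

Keeping every branch rather than committing to one action is what later makes trajectory selection necessary.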

Key Contributions

- Enhanced Query Refinement: RQ-RAG enables models to explicitly rewrite, decompose, and disambiguate queries. Applied to a 7B Llama2 model, RQ-RAG surpasses the previous state of the art by an average of 1.9% across three single-hop QA datasets. It also performs strongly on complex, multi-hop QA tasks.

- Data Curation: The paper introduces a data curation pipeline that uses ChatGPT to generate training data automatically. The pipeline refines the search queries for each example and regenerates responses so that they align with the retrieved contexts. The resulting dataset includes complex and ambiguous queries, which helps the model learn nuanced query interpretation and response generation.

- Sampling Strategies: The paper proposes three strategies for choosing among the generated trajectories: perplexity-based, confidence-based, and ensemble-based selection. These strategies pick the final output without relying on an external LLM. The paper also reports the system's upper bound, in which a question counts as correct if any trajectory reaches the correct answer, showing how much a better selection method could gain across diverse query types.
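The three selection strategies can be sketched as scoring rules over finished trajectories. This is a minimal sketch under stated assumptions. Each candidate is assumed to carry per-token log-probabilities for the whole trajectory and for its final answer. Confidence is taken as the geometric-mean probability of the answer tokens. The ensemble is implemented as a confidence-weighted vote over distinct answers. The paper's exact formulas may differ, and the `Candidate` type and all function names are hypothetical.

```python
import math
from collections import defaultdict
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    answer: str
    token_logprobs: List[float]   # log-probs of every generated token in the trajectory
    answer_logprobs: List[float]  # log-probs of the final-answer tokens only


def perplexity(logprobs: List[float]) -> float:
    # exp of the mean negative log-likelihood per token.
    return math.exp(-sum(logprobs) / len(logprobs))


def answer_confidence(c: Candidate) -> float:
    # Geometric-mean probability of the answer tokens (an assumed normalization).
    return math.exp(sum(c.answer_logprobs) / len(c.answer_logprobs))


def select_by_ppl(cands: List[Candidate]) -> str:
    # Lowest perplexity over the whole trajectory wins.
    return min(cands, key=lambda c: perplexity(c.token_logprobs)).answer


def select_by_confidence(cands: List[Candidate]) -> str:
    # Most confident final answer wins.
    return max(cands, key=answer_confidence).answer


def select_by_ensemble(cands: List[Candidate]) -> str:
    # Sum confidence per distinct answer; return the answer with the largest total.
    totals = defaultdict(float)
    for c in cands:
        totals[c.answer] += answer_confidence(c)
    return max(totals, key=totals.get)


# Made-up log-probabilities for three trajectories of one question.
cands = [
    Candidate("Paris", [-0.2, -0.3, -0.1], [-0.1]),
    Candidate("Lyon", [-0.05, -0.05, -0.05], [-0.9]),
    Candidate("Paris", [-0.6, -0.4, -0.5], [-0.4]),
]
print(select_by_ppl(cands), select_by_confidence(cands), select_by_ensemble(cands))
```

In this toy example the perplexity rule picks "Lyon" because that trajectory is the most fluent overall, while the confidence and ensemble rules pick "Paris". Disagreements like this are why the choice of selection strategy matters.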

Empirical Evaluation and Results

The empirical evaluation shows RQ-RAG outperforming both standalone LLMs and existing retrieval-augmented systems. The reported upper bound is notably high across varied and complex queries, suggesting that the generated trajectories often contain a correct answer even when the selection strategy does not choose it.
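The gap between the upper bound and the selected-answer accuracy can be made concrete with a short calculation. As we read the paper, a question counts toward the upper bound if any of its trajectories yields the correct answer, while reported accuracy depends on the single trajectory a selection strategy picks. The sketch assumes exact string matching against one gold answer per question and uses made-up toy data. The function names are hypothetical.

```python
from typing import Callable, List


def upper_bound(trajectory_answers: List[List[str]], gold: List[str]) -> float:
    """Fraction of questions where at least one trajectory produced the gold answer."""
    hits = sum(any(a == g for a in answers) for answers, g in zip(trajectory_answers, gold))
    return hits / len(gold)


def selected_accuracy(trajectory_answers: List[List[str]], gold: List[str],
                      select: Callable[[List[str]], str]) -> float:
    """Fraction of questions where the answer chosen by `select` matches the gold answer."""
    hits = sum(select(answers) == g for answers, g in zip(trajectory_answers, gold))
    return hits / len(gold)


# Made-up data: one list of trajectory answers per question.
answers = [["A", "B"], ["C", "C"], ["D", "E"]]
gold = ["B", "C", "F"]

ub = upper_bound(answers, gold)                                 # 2 of 3 questions
acc = selected_accuracy(answers, gold, lambda cands: cands[0])  # 1 of 3 questions
print(f"upper bound={ub:.2f}, selected accuracy={acc:.2f}")
```

Any selection rule can only recover questions already counted in the upper bound, so the upper bound caps what better selection can achieve.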

The paper also examines the effect of context regeneration and robustness to the retrieval data source. Training on answers regenerated from the retrieved contexts, rather than on the original dataset answers, yields a notable improvement. In addition, performance varies little when different data sources are used for retrieval at inference time, indicating that the model is robust to external retrieval conditions.

Implications and Future Directions

RQ-RAG is relevant to real-world LLM applications that require dynamic, contextually relevant information retrieval. By explicitly handling query ambiguity and complexity, it provides a template for later methods that combine generative capabilities with retrieval.

Promising future directions include improving trajectory selection, for example with more capable LLM-based scoring, and integrating denoising techniques into context retrieval for additional performance gains. More broadly, RQ-RAG's framework can serve as a foundation for retrieval-augmented systems that must handle evolving information needs across varied domains.