MatSynth: A Modern PBR Materials Dataset (2401.06056v3)

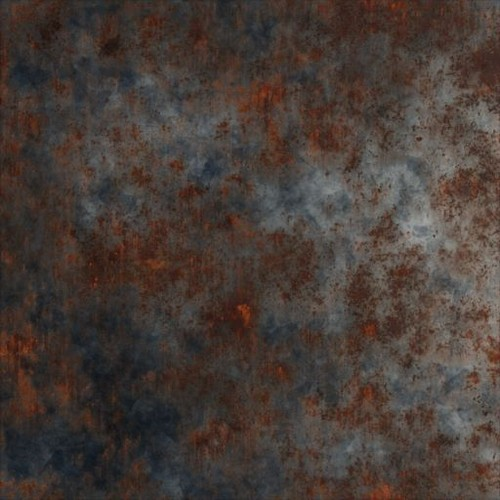

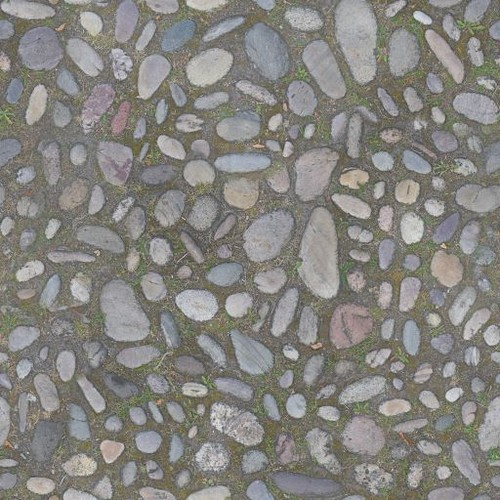

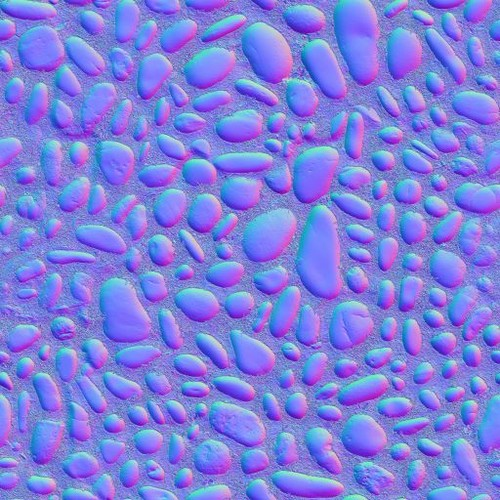

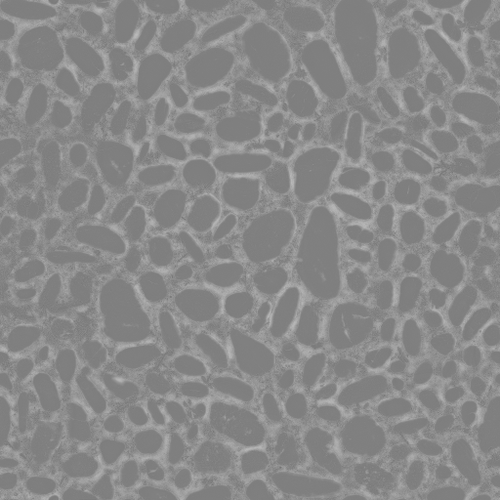

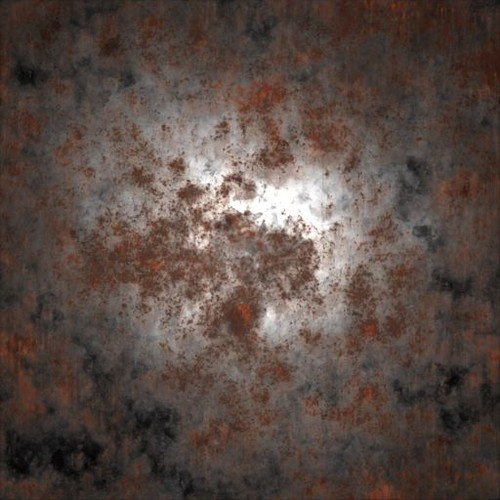

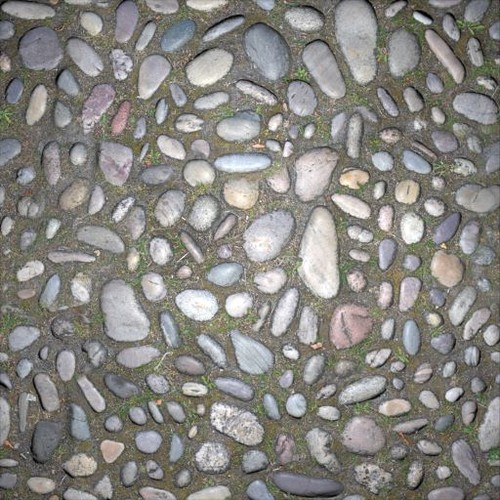

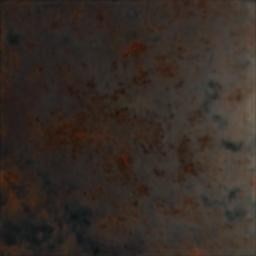

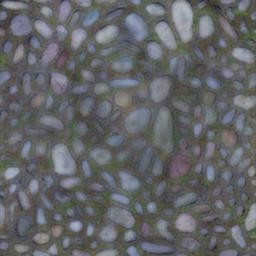

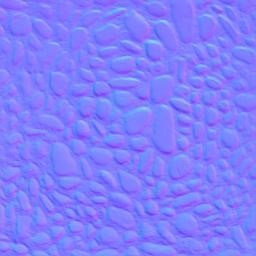

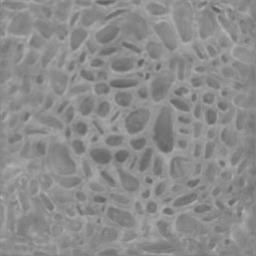

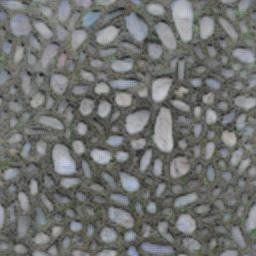

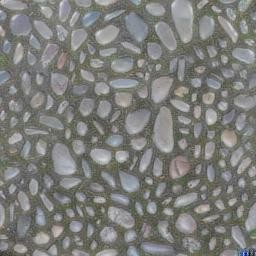

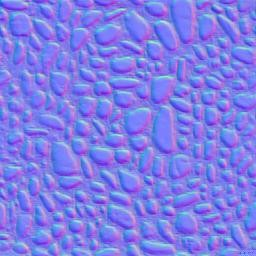

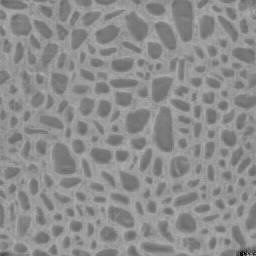

Abstract: We introduce MatSynth, a dataset of 4,000+ CC0 ultra-high-resolution PBR materials. Materials are crucial components of virtual relightable assets, defining how light interacts with the surface of geometries. Given their importance, significant research effort has been dedicated to their representation, creation and acquisition. However, over the past 6 years, most research in material acquisition or generation has relied either on the same unique dataset or on a huge company-owned library of procedural materials. With this dataset we propose a significantly larger, more diverse, and higher-resolution set of materials than was previously publicly available. We carefully discuss the data collection process and demonstrate the benefits of this dataset for material acquisition and generation applications. The complete dataset further contains metadata on each material's origin, license, category, tags, creation method and, when available, description and physical size, as well as 3M+ renderings of the augmented materials at 1K resolution under various environment lighting conditions. The MatSynth dataset is released through the project page at: https://www.gvecchio.com/matsynth.
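The per-material metadata described in the abstract can be pictured as one record per material, with the "when available" entries as optional fields. The following is a minimal Python sketch under that assumption; the class `MaterialMetadata`, its field names, the helper functions, and the toy records are all hypothetical and do not reflect MatSynth's actual schema or file format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class MaterialMetadata:
    """Hypothetical per-material record; field names are illustrative, not MatSynth's schema."""
    name: str
    origin: str                      # where the material was collected from
    license: str                     # e.g. "CC0"
    category: str
    creation_method: str
    tags: List[str] = field(default_factory=list)
    description: Optional[str] = None       # present only when available
    physical_size: Optional[float] = None   # present only when available; unit is an assumption


def filter_by_category(records: List[MaterialMetadata], category: str) -> List[MaterialMetadata]:
    """Keep only the records whose category matches exactly."""
    return [r for r in records if r.category == category]


def with_physical_size(records: List[MaterialMetadata]) -> List[MaterialMetadata]:
    """Keep only the records that report a physical size."""
    return [r for r in records if r.physical_size is not None]


if __name__ == "__main__":
    # Toy, made-up records for illustration only.
    records = [
        MaterialMetadata("material_a", "source_1", "CC0", "Wood", "method_x", ["planks"]),
        MaterialMetadata("material_b", "source_2", "CC0", "Metal", "method_y", physical_size=2.0),
    ]
    print([r.name for r in filter_by_category(records, "Wood")])  # ['material_a']
    print([r.name for r in with_physical_size(records)])          # ['material_b']
```

Making description and physical size `Optional` with a `None` default keeps incomplete records valid while letting downstream code explicitly filter for materials that carry the extra information.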
