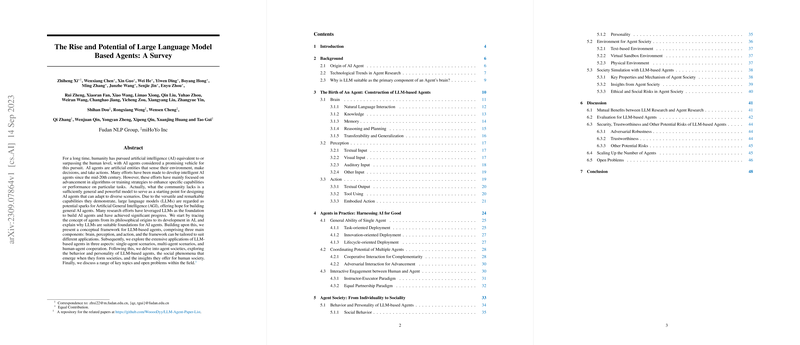

A Survey on the Rise and Potential of LLM Based Agents

The paper "The Rise and Potential of Large Language Model Based Agents: A Survey" by Xi et al. surveys the rapidly growing field of LLM-based agents. It traces the concept of an agent from its philosophical origins to its current use in AI, then examines how such agents are structured and where they are applied.

Structural Overview and Functional Components

The authors argue that the community's long-standing focus on refining algorithms and training strategies has led to a fragmented approach to developing AI agents. They propose that LLMs, with their versatile capabilities, are a promising foundation for building adaptable agents. Their framework has three core components:

- Brain: The cognitive core, which simulates human cognitive functions such as reasoning, memory, planning, and generalization. The LLM handles natural language interaction, holds broad knowledge, and works with memory modules, giving the agent general problem-solving ability. Methods such as Chain-of-Thought (CoT), Zero-shot-CoT, and Reflexion are cited as evidence of LLMs' reasoning and planning abilities.

- Perception: Extends the agent's input beyond text to multimodal information, including visual and auditory data. Integrating encoders for these modalities, together with transfer learning techniques, enriches the agent's understanding of its environment, which matters for tasks that require nuanced interaction and real-time response.

- Action: Covers textual output, tool use, and embodied action, letting agents interact with and adapt to the real world. Approaches such as Toolformer (tool use) and SayCan (embodied action) show how agents can operate beyond purely textual output.
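The three components above can be read as a perceive, plan, act loop. The following is a minimal sketch of that loop, not the survey's implementation: the names `Observation`, `Brain`, `perceive`, `act`, `run_agent`, and the `calculator` tool are all hypothetical, and a hard-coded rule stands in for the LLM so the example runs without any model or API.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Observation:
    """Output of the perception step: raw input normalised to text."""
    modality: str
    content: str


def perceive(raw: str, modality: str = "text") -> Observation:
    # Hypothetical perception step. A real agent would run an image or
    # audio encoder here; this sketch only trims whitespace.
    return Observation(modality=modality, content=raw.strip())


@dataclass
class Brain:
    """Stand-in for the LLM: keeps a memory and decides the next action."""
    memory: List[str] = field(default_factory=list)

    def plan(self, obs: Observation) -> Dict[str, str]:
        self.memory.append(obs.content)
        # Toy rule in place of LLM reasoning: inputs starting with "calc:"
        # are routed to a tool, everything else is answered in text.
        if obs.content.startswith("calc:"):
            return {"type": "tool", "name": "calculator",
                    "input": obs.content[len("calc:"):]}
        return {"type": "text", "input": f"Noted: {obs.content}"}


def calculator(expression: str) -> str:
    # Minimal tool: sums whitespace-separated integers.
    return str(sum(int(token) for token in expression.split()))


# Registry of tools available to the action step (assumed for illustration).
TOOLS: Dict[str, Callable[[str], str]] = {"calculator": calculator}


def act(decision: Dict[str, str]) -> str:
    # Action step: either call a tool or emit text.
    if decision["type"] == "tool":
        return TOOLS[decision["name"]](decision["input"])
    return decision["input"]


def run_agent(brain: Brain, raw_input: str) -> str:
    # One pass through perception -> brain -> action.
    return act(brain.plan(perceive(raw_input)))
```

Calling `run_agent(Brain(), "calc: 2 3 4")` routes the input through the tool branch, while any other string takes the text branch. Keeping the three steps as separate functions mirrors the framework's separation of concerns: swapping the toy rule in `Brain.plan` for a model call would not change `perceive` or `act`.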

Pragmatic Applications

The paper catalogues the settings in which LLM-based agents have proven useful:

- Task-Oriented Deployment: In scenarios ranging from web navigation to daily life tasks, LLM-based agents raise productivity by automating routine work and offering specialized assistance, for example with online transactions and household chores. Studies such as WebGPT and InterAct illustrate how agents handle complex, dynamic environments.

- Innovation-Oriented Deployment: By integrating domain-specific tools and drawing on extensive knowledge repositories, LLM-based agents can support scientific research and innovation. Projects such as ChemCrow and Voyager point to the potential of agents to explore and contribute to cutting-edge fields autonomously.

- Lifecycle-Oriented Deployment: Agents such as Voyager exhibit lifelong learning, improving continuously through interaction with simulated environments. This is a step toward autonomous adaptability and long-term usefulness.

Multi-Agent Systems and Societal Implications

The survey examines the dynamics of multi-agent systems under collaborative (e.g., CAMEL, ChatDev) and competitive (e.g., ChatEval, contextual debates) paradigms. These interactions can improve efficiency and also give rise to emergent social behaviors. By studying agent societies in controlled environments, the authors draw insights into coordinated behavior, economic policy, and ethical decision-making.
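The collaborative paradigm can be pictured as agents taking turns on a shared message channel. The sketch below is an illustration under stated assumptions, not the CAMEL or ChatDev implementation: `converse`, `instructor`, and `coder` are hypothetical names, and each agent is a plain function standing in for an LLM call.

```python
from typing import Callable, List, Tuple

# A policy maps the last message an agent received to its reply.
Policy = Callable[[str], str]


def converse(
    first: Tuple[str, Policy],
    second: Tuple[str, Policy],
    opening: str,
    turns: int,
) -> List[Tuple[str, str]]:
    """Alternate between two named agents and return the transcript."""
    transcript: List[Tuple[str, str]] = []
    speakers = [first, second]
    message = opening
    for turn in range(turns):
        name, policy = speakers[turn % 2]
        message = policy(message)  # each agent replies to the previous message
        transcript.append((name, message))
    return transcript


def instructor(last: str) -> str:
    # Hypothetical role: issues the task, then approves whatever comes back.
    if last == "start":
        return "Task: write a function that reverses a string."
    return "Approved: " + last


def coder(last: str) -> str:
    # Hypothetical role: always answers with the same solution.
    return "def reverse(s): return s[::-1]"


if __name__ == "__main__":
    for name, message in converse(
        ("instructor", instructor), ("coder", coder), "start", turns=3
    ):
        print(f"{name}: {message}")
```

A competitive setup could reuse the same loop with two policies that argue opposing positions and a third function that judges the transcript; that variant is left out here to keep the sketch short.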

Discussion and Forward-Looking Perspectives

Despite their demonstrated potential, LLM-based agents face inherent risks and open challenges. The authors emphasize critical issues such as adversarial robustness, trustworthiness, and ethical implications:

- Adversarial Robustness: The authors examine vulnerabilities to adversarial examples across modalities and suggest robust training techniques and human-in-the-loop supervision as safeguards against malicious exploitation.

- Trustworthiness: The authors address biases, hallucinations, and the need for calibrated predictions, and highlight methods such as external knowledge integration and process supervision to improve credibility.

- Ethical and Social Risks: Given risks ranging from misuse to unemployment and societal disruption, LLM-based agents require stringent regulatory standards and moral safeguards to keep them aligned with human values.
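To make "calibrated predictions" from the trustworthiness item concrete: a model is well calibrated when its stated confidence matches how often it is right. One common way to measure the gap is expected calibration error (ECE). The choice of ECE is an assumption made here for illustration and is not attributed to the survey; the function name and the binning scheme are likewise illustrative.

```python
from typing import List, Tuple


def expected_calibration_error(
    confidences: List[float],
    correct: List[bool],
    n_bins: int = 10,
) -> float:
    """Weighted average gap between confidence and accuracy per bin."""
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    total = len(confidences)
    if total == 0:
        return 0.0

    # Group predictions into equal-width confidence bins over [0, 1].
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[index].append((conf, ok))

    ece = 0.0
    for bucket in bins:
        if not bucket:
            continue
        avg_conf = sum(conf for conf, _ in bucket) / len(bucket)
        accuracy = sum(1 for _, ok in bucket if ok) / len(bucket)
        # Each bin contributes in proportion to how many predictions it holds.
        ece += (len(bucket) / total) * abs(avg_conf - accuracy)
    return ece
```

For example, four answers each given with confidence 0.9, of which three are correct, fall into a single bin with accuracy 0.75, so the error is the gap between 0.9 and 0.75. A value near zero means stated confidence can be taken at face value.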

Open Problems and Future Directions

The paper closes by discussing future developments and unresolved questions: whether LLM-based agents are a viable path to AGI, how agents will move from virtual to physical environments, the concept of "Agent as a Service," and how multi-agent systems can be scaled up. By addressing collective intelligence and the potential of these agents to evolve autonomously, the authors lay out a roadmap for future research and practical implementation.

Conclusion

The survey covers both the capabilities and the broad range of applications of LLM-based agents. It highlights their potential to change AI research and practical deployment while cautioning about ethical and security concerns, and it serves as a foundational reference for researchers who want to build on LLM-based agents.