LLM-Based Agents for Software Engineering: A Survey

Introduction

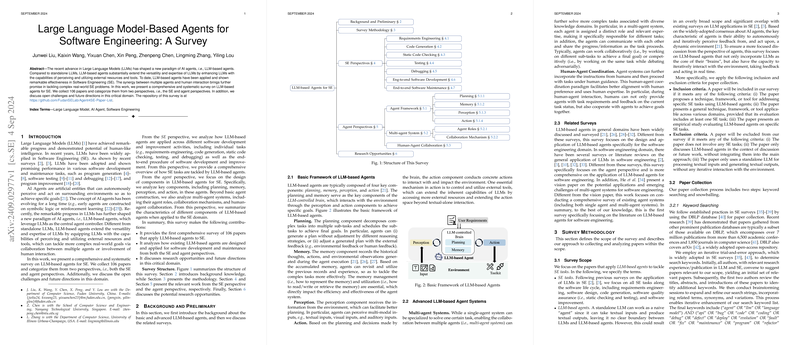

The rapid advancement of LLMs has given rise to a new paradigm in AI: LLM-based agents. Unlike standalone LLMs, these agents can perceive and use external resources and tools, which significantly broadens what an LLM can do. This paper surveys LLM-based agents applied to Software Engineering (SE), categorizing 106 collected papers from both the SE and the agent perspectives. It also identifies current challenges and suggests future research directions.

SE Perspective

From the SE perspective, the paper analyzes how LLM-based agents are utilized across various phases of the software life cycle, including requirements engineering, code generation, static code checking, testing, debugging, and end-to-end software development and maintenance.

Requirements Engineering (RE)

LLM-based agents have demonstrated their utility in automating multiple phases of RE, such as elicitation, specification, and verification. For instance, Elicitron dynamically generates requirements by simulating user interactions, while SpecGen creates Java Modeling Language (JML) specifications that are validated through OpenJML. Multi-agent frameworks like MARE cover several RE stages at once, including requirement elicitation, modeling, and verification.

Code Generation

LLM-based agents extend beyond standalone LLMs by incorporating planning and iterative refinement mechanisms to generate more accurate code. Planning strategies such as Chain-of-Thought (CoT) decompose a task into sub-tasks so that each can be solved in turn. Iterative feedback from tools, models, or humans is then used to refine the generated code. Agents like CodeCoT and CodePlan dynamically adapt their strategies based on hybrid feedback that combines model and tool feedback.
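The refinement loop described above can be sketched in a few lines of Python. This is a minimal illustration, not the method of any surveyed agent: `stub_model` is a hypothetical stand-in for an LLM call, and the "tool" is simply in-process execution of the candidate code against a test snippet.

```python
from typing import Callable, Optional, Tuple


def run_tests(code: str, tests: str) -> Optional[str]:
    """Tool feedback: execute code plus tests, return an error message or None."""
    namespace: dict = {}
    try:
        exec(code, namespace)
        exec(tests, namespace)
    except Exception as exc:  # covers AssertionError and SyntaxError as well
        return f"{type(exc).__name__}: {exc}"
    return None


def refine(
    generate: Callable[[str, Optional[str]], str],
    task: str,
    tests: str,
    max_rounds: int = 3,
) -> Tuple[Optional[str], int]:
    """Ask the generator for code, feeding back the last error until tests pass."""
    feedback: Optional[str] = None
    for round_no in range(1, max_rounds + 1):
        code = generate(task, feedback)
        feedback = run_tests(code, tests)
        if feedback is None:
            return code, round_no
    return None, max_rounds


def stub_model(task: str, feedback: Optional[str]) -> str:
    # Hypothetical LLM stand-in: the first attempt is buggy,
    # the second one is corrected once feedback is available.
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


TESTS = "assert add(2, 3) == 5, 'add(2, 3) should be 5'"
code, rounds = refine(stub_model, "write add(a, b)", TESTS)
```

With the stub above, the first candidate fails the assertion and the second one passes, so the loop stops after two rounds. A real agent would replace `stub_model` with a model call and would normally run the candidate in a sandbox rather than with `exec`.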

Static Code Checking

Static code checking benefits from multi-agent collaboration and the integration of static analysis tools. For instance, ART leverages tool libraries to enhance LLMs for static bug detection. IRIS and LLIFT combine traditional static analysis with LLM agents to pinpoint vulnerabilities and bugs. These agents dynamically navigate code repositories and validate static anomalies reported by tools.

Testing

In software testing, agents generate unit and system-level tests iteratively, refining them to minimize errors and maximize coverage. For example, TestPilot refines tests by analyzing error messages iteratively, while CoverUp focuses on generating high-coverage tests. System-level testing agents like KernelGPT and WhiteFox incorporate code parsers and dynamic execution tools to validate tests on OS kernels and compilers, respectively.
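To make the idea of coverage feedback concrete, here is a small sketch that keeps only those candidate test inputs that execute previously uncovered lines. It is an assumption-laden toy, not the pipeline of CoverUp or TestPilot: the candidate inputs stand in for LLM-generated tests, `classify` is a made-up function under test, and line coverage is collected with the standard `sys.settrace` hook.

```python
import sys
from typing import Any, Callable, List, Set


def covered_lines(func: Callable[[Any], Any], value: Any) -> Set[int]:
    """Return the source line numbers of func executed for one input."""
    code = func.__code__
    lines: Set[int] = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # do not trace other functions
        if event == "line":
            lines.add(frame.f_lineno)
        return tracer

    sys.settrace(tracer)
    try:
        func(value)
    finally:
        sys.settrace(None)
    return lines


def select_tests(func: Callable[[Any], Any], candidates: List[Any]) -> List[Any]:
    """Greedily keep the inputs that add new line coverage."""
    covered: Set[int] = set()
    kept: List[Any] = []
    for value in candidates:
        new = covered_lines(func, value) - covered
        if new:
            kept.append(value)
            covered |= new
    return kept


def classify(n: int) -> str:
    # Hypothetical function under test.
    if n < 0:
        return "negative"
    if n == 0:
        return "zero"
    return "positive"


kept = select_tests(classify, [5, 7, -1, 0, -3])
```

Here `7` and `-3` are discarded because they exercise the same lines as `5` and `-1`. In an agent setting, the set of still-uncovered lines would be reported back to the model as a prompt for the next round of test generation.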

Debugging

Existing LLM-based agents like RepairAgent and AutoSD employ iterative refinement for program repair, incorporating compilation and execution feedback. Fault localization is simplified by integrating tools with spectrum-based methodologies, as in AUTOFL. Unified debugging approaches further combine fault localization and program repair; for example, FixAgent uses inter-agent collaboration to enhance debugging capabilities.
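As background on what a spectrum-based technique computes, the following sketch ranks lines by the Ochiai suspiciousness score, suspiciousness(s) = ef(s) / sqrt(F * (ef(s) + ep(s))), where ef and ep count the failing and passing tests that cover line s and F is the total number of failing tests. The source does not say which formula any particular agent uses; Ochiai is chosen here only because it is a widely known example, and the coverage data is invented for illustration.

```python
import math
from typing import Dict, List, Set


def ochiai_scores(coverage: Dict[str, Set[int]], failed: Set[str]) -> Dict[int, float]:
    """Compute the Ochiai score per line from per-test line coverage."""
    total_failed = len(failed)
    all_lines: Set[int] = set().union(*coverage.values())
    scores: Dict[int, float] = {}
    for line in all_lines:
        # ef: failing tests covering the line, ep: passing tests covering it
        ef = sum(1 for t, cov in coverage.items() if line in cov and t in failed)
        ep = sum(1 for t, cov in coverage.items() if line in cov and t not in failed)
        denom = math.sqrt(total_failed * (ef + ep))
        scores[line] = ef / denom if denom else 0.0
    return scores


def rank_lines(scores: Dict[int, float]) -> List[int]:
    """Most suspicious line first; ties broken by line number."""
    return sorted(scores, key=lambda line: (-scores[line], line))


# Illustrative data: test name -> set of executed line numbers.
coverage = {"t1": {1, 2, 4}, "t2": {1, 2}, "t3": {1, 3}}
failed = {"t1"}

scores = ochiai_scores(coverage, failed)
ranking = rank_lines(scores)
```

Line 4 is executed only by the failing test and therefore ranks first, while line 3 is never executed by a failing test and scores zero. An agent can pass such a ranking to the model as a starting point for inspecting the code.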

End-to-end Development and Maintenance

Agents facilitate complete software development and maintenance processes, following process models such as the waterfall model. Systems such as MetaGPT and AgileCoder simulate real-world development teams, incorporating multiple specialized roles like coders, testers, and managers. These agents dynamically collaborate, allocate tasks, and refine outputs iteratively.

Agent Perspective

From the agent perspective, the paper organizes the design of existing LLM-based agents around four key components: planning, memory, perception, and action.

Planning

Planning involves structuring and scheduling task execution. Some agents adopt single-path planning, generating one linear task sequence, while others, such as MapCoder, implement multi-path strategies that explore several candidate solutions. The representation of plans ranges from natural language to semi-structured formats.
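The difference between the two planning styles can be illustrated with a toy sketch. Everything here is hypothetical: plans are plain lists of natural-language steps, and `execute` is a placeholder for whatever an agent would do to carry out and check a plan. It is not a description of how MapCoder works internally.

```python
from typing import Callable, List, Optional

Plan = List[str]  # a plan as an ordered list of natural-language steps


def single_path(plan: Plan, execute: Callable[[Plan], bool]) -> Optional[Plan]:
    """Commit to one linear plan; report failure if it does not work out."""
    return plan if execute(plan) else None


def multi_path(plans: List[Plan], execute: Callable[[Plan], bool]) -> Optional[Plan]:
    """Try several candidate plans and return the first one that succeeds."""
    for plan in plans:
        if execute(plan):
            return plan
    return None


def execute(plan: Plan) -> bool:
    # Toy executor: a plan "works" only if it handles the empty-input case.
    return "handle empty input" in plan


plans = [
    ["parse input", "compute result"],
    ["parse input", "handle empty input", "compute result"],
]

single_result = single_path(plans[0], execute)
multi_result = multi_path(plans, execute)
```

The single-path run fails because its only plan misses a case, whereas the multi-path run recovers by falling back to the second candidate. The trade-off is cost: every additional path means more model calls and more executions.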

Memory

Effective memory management is crucial in SE tasks requiring iterative refinement. Memory types include short-term (e.g., action-observation sequences) and long-term (e.g., distilled task trajectories). Shared and specific memory mechanisms help agents retain context and historical information, vital for coherent task execution.
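One possible way to model the two memory types is shown below. This is a sketch under stated assumptions: the class name `AgentMemory`, the window size of three, and the dictionary layout of a distilled trajectory are all illustrative choices, not taken from any surveyed system.

```python
from collections import deque
from dataclasses import dataclass, field
from typing import Deque, Dict, List, Tuple


@dataclass
class AgentMemory:
    # Short-term memory: a bounded window of recent (action, observation) pairs.
    short_term: Deque[Tuple[str, str]] = field(default_factory=lambda: deque(maxlen=3))
    # Long-term memory: distilled summaries of finished tasks.
    long_term: List[Dict[str, object]] = field(default_factory=list)

    def observe(self, action: str, observation: str) -> None:
        """Record one step; the oldest step is dropped when the window is full."""
        self.short_term.append((action, observation))

    def distill(self, task: str, outcome: str) -> None:
        """Compress the current trajectory into a long-term record and reset."""
        steps = [action for action, _ in self.short_term]
        self.long_term.append({"task": task, "steps": steps, "outcome": outcome})
        self.short_term.clear()


memory = AgentMemory()
memory.observe("read file", "found parse()")
memory.observe("run tests", "1 failure")
memory.observe("edit parse()", "patched")
memory.observe("run tests", "all passed")
window_size = len(memory.short_term)
memory.distill(task="fix parser bug", outcome="success")
```

After four observations the window holds only the three most recent steps, and `distill` moves their action names into long-term memory before clearing the window. A shared memory could be modeled by handing the same `AgentMemory` instance to several agents.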

Perception

Agents primarily rely on textual input perception, aligning with the text-rich nature of SE activities. Some agents also incorporate visual input for GUI tasks, utilizing image recognition models.

Action

The action component leverages external tools to extend agent capabilities beyond text generation. Tools include search engines, static analysis tools, testing frameworks, and dynamic instrumentation tools, which facilitate comprehensive SE task automation.
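A common way to expose tools to an agent is a registry that maps tool names to callables, so that a model's textual choice of tool can be dispatched to real code. The sketch below assumes such a design; the registry, the `tool` decorator, and the two example tools are hypothetical and use only Python's standard `ast` module as a stand-in for a static analysis tool.

```python
import ast
from typing import Callable, Dict

TOOLS: Dict[str, Callable[[str], str]] = {}


def tool(name: str) -> Callable[[Callable[[str], str]], Callable[[str], str]]:
    """Decorator that registers a function under a tool name."""
    def register(fn: Callable[[str], str]) -> Callable[[str], str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("syntax_check")
def syntax_check(source: str) -> str:
    """Report whether the given Python source parses."""
    try:
        ast.parse(source)
    except SyntaxError as exc:
        return f"syntax error at line {exc.lineno}: {exc.msg}"
    return "ok"


@tool("count_functions")
def count_functions(source: str) -> str:
    """Count function definitions in the given Python source."""
    tree = ast.parse(source)
    return str(sum(isinstance(node, ast.FunctionDef) for node in ast.walk(tree)))


def act(tool_name: str, argument: str) -> str:
    """Dispatch an action chosen by the agent; the result becomes its observation."""
    if tool_name not in TOOLS:
        return f"unknown tool: {tool_name}"
    return TOOLS[tool_name](argument)


observation = act("count_functions", "def f(): pass\ndef g(): pass")
```

Returning every result as a string keeps the observation easy to feed back into a text prompt, and the explicit "unknown tool" reply gives the model a chance to correct a hallucinated tool name.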

Future Directions

The survey highlights several open challenges and future research directions:

- Evaluation Metrics and Benchmarks: Developing comprehensive, fine-grained metrics and realistic benchmarks is critical for meaningful evaluations.

- Human-Agent Collaboration: Extending human participation across the software life cycle and designing effective interaction mechanisms are key areas for future exploration.

- Perception Modality: Broadening the range of perception modalities can improve agent flexibility and adaptability.

- Expanding SE Tasks: Developing agents tailored to underexplored SE tasks like design and verification can enhance their utility.

- Training Specialized LLMs: Incorporating diverse software lifecycle data into LLM training can create more robust models for SE agents.

- Integrating SE Expertise: Leveraging domain-specific SE techniques and methodologies can improve the efficiency and effectiveness of agent systems.

Conclusion

This survey provides a comprehensive analysis of the current landscape of LLM-based agents for SE. The paper explores the utilization of these agents across various SE activities and discusses the design of their core components. By addressing open challenges and outlining future research directions, this survey offers a roadmap for advancing the development and application of LLM-based agents in software engineering.