Overview of MAmmoTH: Building Math Generalist Models through Hybrid Instruction Tuning

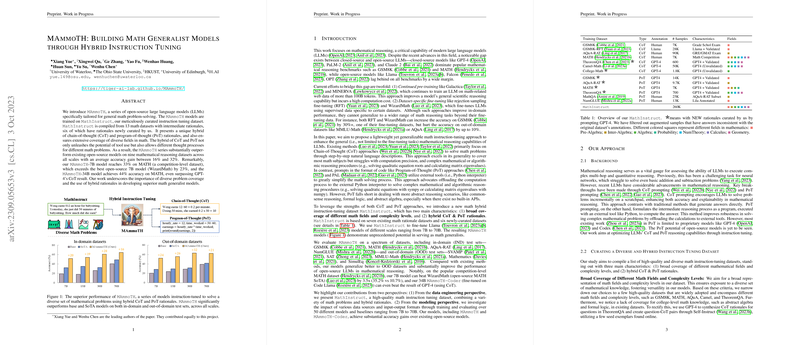

The paper presents "MAmmoTH," a series of open-source LLMs specialized for mathematical problem solving through hybrid instruction tuning. The models are trained on "MathInstruct," an instruction-tuning dataset that combines Chain-of-Thought (CoT) and Program-of-Thought (PoT) rationales across a broad range of mathematical subjects. The authors report substantial performance improvements over existing open-source models on a range of mathematical reasoning benchmarks.

Core Contributions

- Hybrid Instruction Tuning Dataset: MathInstruct

The MathInstruct dataset is a central contribution, covering diverse mathematical fields and difficulty levels. It is compiled from 13 math datasets with intermediate rationales, six of which have rationales newly curated by the authors. The hybrid of rationale types aims to leverage the strengths of both CoT, which works through a problem step by step in natural language, and PoT, which delegates calculation-heavy steps to external tools such as a Python interpreter.
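The difference between the two rationale types can be illustrated with a minimal sketch. The record layout, field names, and both problems below are hypothetical and are not taken from MathInstruct; the `run_pot` helper is likewise an assumption about how a PoT rationale might be executed to obtain an answer.

```python
import contextlib
import io

# Hypothetical training records; the field names are illustrative only
# and do not reflect the actual MathInstruct schema.
cot_example = {
    "instruction": "A store sells pens at 3 dollars each. How much do 17 pens cost?",
    "rationale_type": "CoT",
    # The rationale is natural-language reasoning that ends in the answer.
    "output": "Each pen costs 3 dollars. 17 pens cost 17 * 3 = 51 dollars. The answer is 51.",
}

pot_example = {
    "instruction": "What is the sum of the squares of the first 50 positive integers?",
    "rationale_type": "PoT",
    # The rationale is a program; the interpreter does the arithmetic.
    "output": "total = sum(n * n for n in range(1, 51))\nprint(total)",
}


def run_pot(program: str) -> str:
    """Execute a PoT rationale and return whatever it prints.

    Assumption: the program is trusted. Real pipelines would sandbox
    model-generated code and enforce a timeout.
    """
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(program, {})
    return buffer.getvalue().strip()


pot_answer = run_pot(pot_example["output"])
print(pot_answer)
```

In the CoT record the model must carry out the arithmetic itself, while in the PoT record the final number comes from running the generated program, which is why PoT suits calculation-heavy problems.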

- Strong Empirical Performance

The paper reports that MAmmoTH models achieve an average accuracy gain of between 16% and 32% across nine mathematical reasoning datasets at various model scales. Notably, the MAmmoTH-7B model reaches 33% accuracy on MATH, a competition-level dataset, exceeding the best comparable open-source 7B model, WizardMath, by 23%. Further evaluation shows that the MAmmoTH-34B model surpasses even GPT-4's CoT result on MATH.

- Evaluation and Baselines

The evaluation setup uses both in-domain (IND) and out-of-domain (OOD) test sets, covering datasets such as GSM8K, MATH, AQuA-RAT, NumGLUE, and others. By outperforming prior open-source models across these evaluations, and GPT-4's CoT result on MATH at the 34B scale, the MAmmoTH series sets a new reference point for open-source LLMs in mathematical problem solving.
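One way to summarize results under an IND/OOD setup is to compute accuracy per dataset and then average within each split. The sketch below assumes exact string matching on the final answer and uses made-up toy records; the `held_out_set` name, the split assignment, and the `accuracy_by_split` helper are all hypothetical, and the paper's own answer-matching procedure may differ.

```python
from collections import defaultdict


def accuracy_by_split(records, split_of):
    """Return (per-dataset accuracy, per-split mean of dataset accuracies).

    records:  iterable of (dataset_name, predicted_answer, gold_answer)
    split_of: mapping from dataset_name to a split label such as "IND" or "OOD"
    """
    correct = defaultdict(int)
    total = defaultdict(int)
    for dataset, predicted, gold in records:
        total[dataset] += 1
        # Assumption: exact match after trimming whitespace.
        correct[dataset] += int(predicted.strip() == gold.strip())

    per_dataset = {name: correct[name] / total[name] for name in total}

    # Macro-average: every dataset counts equally within its split.
    grouped = defaultdict(list)
    for name, acc in per_dataset.items():
        grouped[split_of[name]].append(acc)
    per_split = {split: sum(accs) / len(accs) for split, accs in grouped.items()}
    return per_dataset, per_split


# Toy data for illustration only; these are not results from the paper.
toy_records = [
    ("GSM8K", "51", "51"),
    ("GSM8K", "12", "15"),
    ("MATH", "42925", "42925"),
    ("held_out_set", "7", "7"),
    ("held_out_set", "3", "4"),
    ("held_out_set", "9", "9"),
    ("held_out_set", "1", "1"),
]
toy_splits = {"GSM8K": "IND", "MATH": "IND", "held_out_set": "OOD"}

per_dataset, per_split = accuracy_by_split(toy_records, toy_splits)
print(per_dataset)
print(per_split)
```

Averaging per dataset first keeps a large test set from dominating the split-level number, which matters when the datasets differ widely in size.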

- Data Engineering and Implications for Future LLM Development

The construction of MathInstruct demonstrates the importance of diverse problem sources in building robust, generalist LLMs. Combining the two rationale types gives the model two complementary ways to tackle mathematical problems, which suits the varied nature of such tasks. The paper suggests that drawing training data from diverse sources improves the model's ability to generalize, an insight that could inform future approaches to training LLMs for domain-specific tasks.

Implications and Future Directions

The work on MAmmoTH opens several avenues for future exploration. Hybrid instruction tuning is a promising direction for developing LLMs in domains that require both precise computation and complex multi-hop reasoning. Future research might extend the scope to additional branches of mathematics or adapt the approach to other scientific fields that require reasoning and calculation. There is also potential in examining how CoT and PoT rationales complement each other on other complex reasoning challenges.

While the hybrid models demonstrate strong adaptability and accuracy across a variety of mathematical reasoning tasks, the paper also acknowledges that broader domain coverage and the incorporation of theorem-proving tasks would further enhance LLM capabilities. Because models trained under this framework show marked improvement over existing baselines, the MAmmoTH series provides a foundation for further work on mathematical AI models.

In summary, the paper presents MAmmoTH as a significant step in developing LLMs for mathematical reasoning, surpassing many existing models in accuracy and offering insights that could inform later work on specialized LLMs.