Insightful Overview of MuMath-Code: A Dual Approach to Enhancing Mathematical Reasoning in LLMs

The paper "MuMath-Code: Combining Tool-Use LLMs with Multi-perspective Data Augmentation for Mathematical Reasoning" presents an approach that integrates LLM tool use with data augmentation to improve mathematical reasoning. It treats the synthesis of code-nested solutions and multi-perspective data augmentation as complementary methods for raising the mathematical performance of open-source LLMs, specifically Llama-2 models.

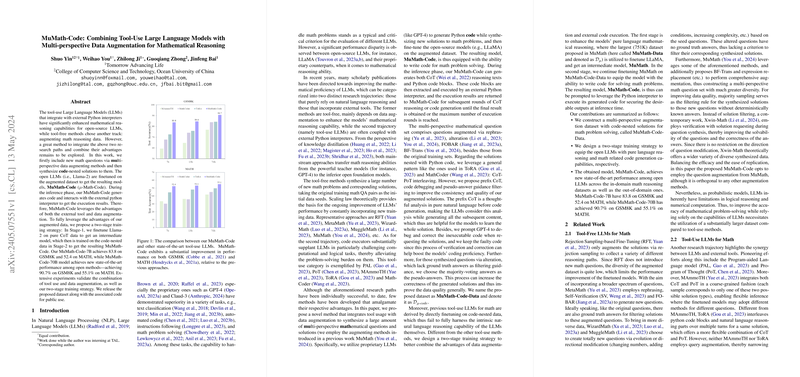

The authors introduce MuMath-Code, a framework that combines external tool interaction with augmented data for mathematical problem-solving. The model is trained in two stages: the first focuses on mathematical reasoning in pure natural language, and the second on code-based interaction with a Python interpreter. The aim is to strengthen open-source models in mathematical reasoning, an area traditionally dominated by proprietary models such as GPT-4.
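
The ordering of the two stages can be sketched as a toy pipeline. This is only an illustration, not the authors' training code: `Checkpoint`, `fine_tune`, and the dataset names are hypothetical placeholders, and the sketch assumes that the second stage continues from the checkpoint produced by the first.

```python
from dataclasses import dataclass, field


@dataclass
class Checkpoint:
    """Toy stand-in for model weights; records which datasets shaped it."""
    base: str
    trained_on: list = field(default_factory=list)


def fine_tune(ckpt: Checkpoint, dataset_name: str) -> Checkpoint:
    """Hypothetical placeholder for one supervised fine-tuning run."""
    # A real run would update weights; here we only record the lineage.
    return Checkpoint(ckpt.base, ckpt.trained_on + [dataset_name])


def two_stage_training(base_model: str) -> Checkpoint:
    ckpt = Checkpoint(base_model)
    # Stage 1: pure natural-language (CoT) math reasoning data.
    ckpt = fine_tune(ckpt, "augmented_cot_data")
    # Stage 2 (assumed): continue from the stage-1 checkpoint on
    # code-nested data that involves a Python interpreter.
    ckpt = fine_tune(ckpt, "code_nested_data")
    return ckpt


final = two_stage_training("llama-2-7b")
print(final.trained_on)
```

The point of the sketch is the sequencing: language-only reasoning data comes first, and tool-use data is applied to the resulting model rather than to the base model.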

Key Contributions and Methodology

- Data Augmentation via Multi-perspective Methods:

  - The authors use multi-perspective methods to generate new mathematical questions. These include rephrasing, FOBAR, BF-Trans, and expression replacement, which together increase the diversity of the training data.

- Tool-Use with Code Synthesis:

  - Synthesizing code-nested solutions lets the model offload computations and logic checks that are error-prone in pure natural-language reasoning. Python code is interleaved with reasoning steps so that the computational parts of a solution are executed by an external interpreter.

- Two-Stage Training Strategy:

  - Stage-1 fine-tunes Llama-2 models on an augmented pure CoT (chain-of-thought) dataset to strengthen intrinsic language-based reasoning.

  - Stage-2 shifts focus to training on data that involves code execution for problem-solving, equipping the models with the ability to interact with tools.
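
To make the idea of a code-nested solution concrete, here is a minimal sketch of how reasoning text and Python snippets might be interleaved and executed. It is not the paper's implementation: the `SOLUTION` steps, the `run_code_nested` helper, and the word problem are made up for illustration, and the steps are fixed in advance, whereas in an actual tool-use setup the model would generate each step after seeing the previous interpreter output.

```python
import contextlib
import io

# Hypothetical code-nested solution: reasoning text interleaved with code.
SOLUTION = [
    ("text", "Each box holds 12 pencils and there are 7 boxes."),
    ("code", "total = 12 * 7\nprint(total)"),
    ("text", "Giving away 15 pencils leaves the remainder."),
    ("code", "left = total - 15\nprint(left)"),
]


def run_code_nested(steps):
    """Execute code steps in order and return the transcript with outputs."""
    namespace = {}  # shared, so later snippets see earlier variables
    transcript = []
    for kind, body in steps:
        transcript.append((kind, body))
        if kind == "code":
            buffer = io.StringIO()
            with contextlib.redirect_stdout(buffer):
                exec(body, namespace)  # illustration only: no sandboxing
            transcript.append(("output", buffer.getvalue().strip()))
    return transcript


transcript = run_code_nested(SOLUTION)
for kind, body in transcript:
    print(f"[{kind}] {body}")
```

Sharing one namespace across snippets is a design choice in this sketch: it lets a later step reuse `total` from an earlier one, much like cells in a notebook. Any real deployment would need to run model-generated code in a sandbox rather than with a bare `exec`.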

Numerical Results and Implications

MuMath-Code achieves state-of-the-art results among open-source models. The MuMath-Code-7B model scores 83.8% on GSM8K and 52.4% on MATH, while the 70B version attains 90.7% on GSM8K and 55.1% on MATH. These results indicate that combining data augmentation with tool use is effective at improving mathematical reasoning in open-source LLMs.

Theoretical and Practical Implications

Theoretically, this integration of data augmentation and tool-use straddles the line between the intrinsic reasoning strengths of LLMs and the computational precision of external tools. It suggests a pathway towards hybrid models that can perform well on both natural language tasks and more structured problem-solving tasks like mathematics.

Practically, this development implies broader application potential for open-source LLMs, making them viable contenders against proprietary counterparts in fields requiring complex reasoning. It opens doors for further research into integrating various external tools with LLMs to handle domain-specific tasks, potentially leading to advancements in education, automated coding, and reasoning-intensive applications.

Future Research Directions

The results of MuMath-Code suggest several directions for future work. Research could refine the integration of LLMs with other specialized tools or domains, adapt the two-stage training mechanism to broader applications, or explore ways to automatically generate more complex and diverse training data. The effect of blending tool-free and tool-use methodologies in domains outside mathematics also warrants investigation.

Overall, this work shows that the mathematical reasoning of open-source LLMs can be improved by combining data augmentation with tool-use methodologies, and it offers a template for similar efforts in AI and machine learning.