OpenMathInstruct-1: Enhancing Mathematical Reasoning in LLMs with a Large-Scale Open Dataset

Overview of OpenMathInstruct-1

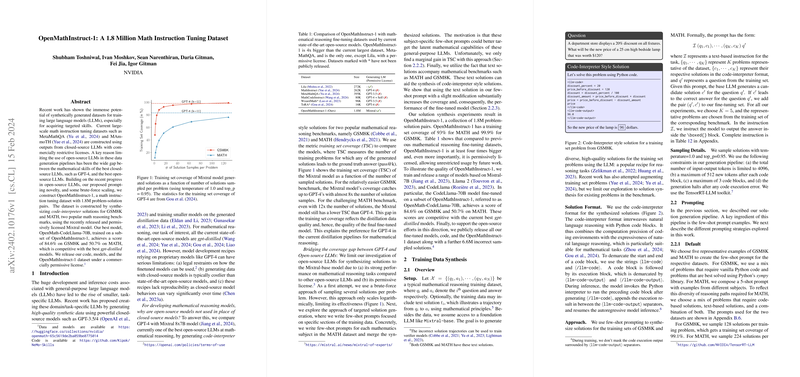

OpenMathInstruct-1 represents significant progress in mathematical reasoning for LLMs: it is a dataset of 1.8 million problem-solution pairs. It stands out not only for its size, which is at least four times larger than the largest existing datasets in this domain, but also for its open licensing, which permits unrestricted use and supports future research. It addresses notable limitations in the current landscape of LLM training for mathematical reasoning by using the recently released, permissively licensed Mixtral model for dataset generation.

Rationale Behind the Development

OpenMathInstruct-1 was motivated by the constraints that proprietary models impose on existing data generation pipelines for mathematical reasoning: legal restrictions on usage, higher generation costs, and poor reproducibility due to the opaque nature of closed-source models. The dataset was instead generated with the Mixtral model, with the aim of bridging the performance gap between open-source LLMs and their closed-source counterparts on mathematical tasks.

Methodology

The generation of OpenMathInstruct-1 combined brute-force scaling with new prompting strategies. Notably, a novel approach using masked text solutions significantly improved the efficiency of solution synthesis, yielding training set coverage of 99.9% for GSM8K and 93% for MATH. The OpenMath-CodeLlama-70B model fine-tuned on this data achieved results competitive with the leading GPT-distilled models on major mathematical reasoning benchmarks.
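To make the coverage figures concrete, here is a minimal sketch of how such a metric could be computed. It assumes that "training set coverage" means the fraction of training problems for which at least one sampled solution reaches the reference final answer. The function name, the dictionary layout, and the exact-string answer comparison are all illustrative assumptions, not the authors' actual pipeline.

```python
from typing import Dict, List


def training_set_coverage(
    sampled_answers: Dict[str, List[str]],
    reference_answers: Dict[str, str],
) -> float:
    """Return the fraction of problems with at least one matching sampled answer.

    Assumption: answers are compared as whitespace-stripped strings. A real
    pipeline would likely normalize numbers and expressions before comparing.
    """
    if not reference_answers:
        raise ValueError("reference_answers must not be empty")

    covered = 0
    for problem_id, reference in reference_answers.items():
        candidates = sampled_answers.get(problem_id, [])
        # A problem counts as covered if any sampled answer equals the reference.
        if any(answer.strip() == reference.strip() for answer in candidates):
            covered += 1
    return covered / len(reference_answers)


# Toy data (hypothetical): four problems, several sampled final answers each.
reference = {"p1": "42", "p2": "7", "p3": "3.5", "p4": "10"}
samples = {
    "p1": ["41", "42"],  # second sample is correct
    "p2": ["7"],         # correct
    "p3": ["3", "4"],    # no correct sample
    "p4": [],            # nothing sampled
}

coverage = training_set_coverage(samples, reference)
print(f"coverage = {coverage:.2%}")
```

Under this reading, sampling more solutions per problem can only keep coverage the same or raise it, which is why brute-force scaling and better prompting both help.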

Implications and Future Directions

The creation and public release of OpenMathInstruct-1 under a permissive license could have significant implications for AI research, particularly for improving the mathematical reasoning capabilities of LLMs. The dataset not only sets a new benchmark for the scale and accessibility of training data in this area but also exemplifies the potential for collaborative advancements in AI through open-source initiatives.

Additionally, the successful application of the Mixtral model for dataset generation exemplifies the narrowing performance gap between open-source and proprietary models in specialized tasks. This trend could encourage further contributions to open AI research, fostering an environment where advancements are not hindered by commercial restrictions.

The research also highlights the untapped potential of instruction-tuning data in LLM training. With OpenMathInstruct-1 now available, the AI research community is better equipped to explore novel training paradigms that could further refine the reasoning and problem-solving capabilities of LLMs.

Conclusion

OpenMathInstruct-1 represents a significant milestone in the quest for improving mathematical reasoning in LLMs. By providing an unprecedentedly large and openly accessible dataset, this research contribution not only elevates the capabilities of open-source models but also opens new avenues for collaborative research and development. As the AI field continues to evolve, resources like OpenMathInstruct-1 will be instrumental in shaping the future trajectory of intelligent systems capable of sophisticated reasoning and knowledge application.