Insights into "MathScale: Scaling Instruction Tuning for Mathematical Reasoning"

The paper "MathScale: Scaling Instruction Tuning for Mathematical Reasoning" presents a method for improving the mathematical reasoning of large language models (LLMs) by building instruction-tuning data at scale. It uses a frontier LLM, GPT-3.5, to generate high-quality mathematical reasoning data, which addresses the limited size of existing training datasets such as GSM8K and MATH.

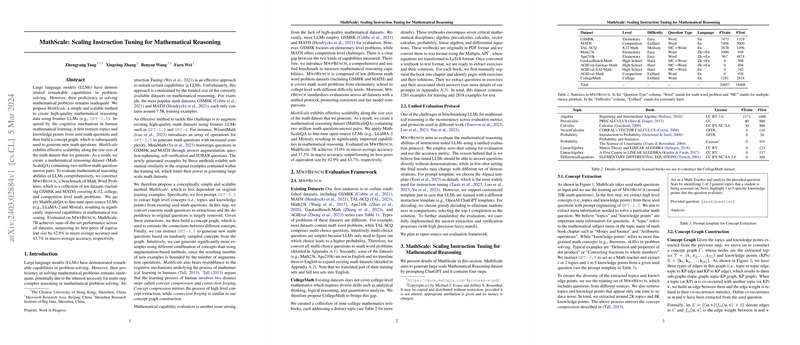

MathScale adopts a novel data generation pipeline inspired by cognitive mechanisms observed in human learners. It has three stages: extracting topics and concepts from seed questions, building a concept graph from them, and synthesizing new math questions from concept combinations sampled from that graph. Because new questions are composed from sampled concepts, the amount of generated data is not tied to the number of seed questions. The pipeline produced MathScaleQA, a dataset of two million question-answer pairs.
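The middle stages of the pipeline can be illustrated with a small Python sketch. It is not the authors' code and makes several assumptions: the seed concepts in `SEED_CONCEPTS` are made up, edges are weighted by how often two concepts co-occur in the same seed question, and new concept combinations are drawn with a weighted random walk, first over topics and then over knowledge points. The function names (`build_concept_graph`, `random_walk`, `sample_concepts`, `build_prompt`) and the prompt wording are hypothetical.

```python
import random
from collections import defaultdict
from itertools import combinations

# Hypothetical output of the extraction step: the topics and knowledge points
# (KPs) an LLM might return for each of three seed questions.
SEED_CONCEPTS = [
    {"topics": ["Fractions"], "kps": ["Common denominator", "Simplifying fractions"]},
    {"topics": ["Fractions", "Ratios"], "kps": ["Simplifying fractions", "Unit rate"]},
    {"topics": ["Linear equations"], "kps": ["Isolating a variable", "Unit rate"]},
]


def build_concept_graph(seed_concepts):
    """Undirected graph whose edge weights count co-occurrences within one seed question."""
    graph = defaultdict(lambda: defaultdict(int))
    for item in seed_concepts:
        # Tag each node so a topic and a KP with the same name stay distinct.
        nodes = [("topic", t) for t in item["topics"]] + [("kp", k) for k in item["kps"]]
        for a, b in combinations(nodes, 2):
            graph[a][b] += 1
            graph[b][a] += 1
    return graph


def random_walk(graph, start, steps, kind, rng):
    """Take up to `steps` hops from `start`, each to an unvisited neighbour of
    type `kind`, picked with probability proportional to edge weight."""
    path = [start]
    for _ in range(steps):
        current = path[-1]
        neighbours = [n for n in graph[current] if n[0] == kind and n not in path]
        if not neighbours:
            break  # dead end: return the shorter path
        weights = [graph[current][n] for n in neighbours]
        path.append(rng.choices(neighbours, weights=weights, k=1)[0])
    return path


def sample_concepts(graph, rng, n_topics=2, n_kps=2):
    """Walk over topics first, then hop from the last topic onto its KPs."""
    topic_nodes = sorted(n for n in graph if n[0] == "topic")
    topic_path = random_walk(graph, rng.choice(topic_nodes), n_topics - 1, "topic", rng)
    kp_path = random_walk(graph, topic_path[-1], n_kps, "kp", rng)[1:]  # drop the topic
    return [name for _, name in topic_path], [name for _, name in kp_path]


def build_prompt(topics, kps):
    # Hypothetical wording; the paper's actual generation prompt is not reproduced here.
    return (
        "Write a new math question with a step-by-step solution that combines the topics "
        f"{', '.join(topics)} and the knowledge points {', '.join(kps)}."
    )


if __name__ == "__main__":
    rng = random.Random(0)
    graph = build_concept_graph(SEED_CONCEPTS)
    topics, kps = sample_concepts(graph, rng)
    print(build_prompt(topics, kps))  # this prompt would then go to the generator LLM
```

Seeding `random.Random` makes the sampled combination reproducible. In a full pipeline, the prompt would be sent to the generator model (GPT-3.5 in the paper) and the returned question-answer pair added to the dataset; repeating the sampling many times is what lets the data volume grow independently of the seed set.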

The effectiveness of MathScale is evaluated on MWPBench, a benchmark of math word problems spanning K-12 to competition level, designed to allow consistent and fair comparisons between models. MathScale-7B, a model fine-tuned on MathScaleQA, outperforms its best peers of equivalent size by 42.9% in micro-average accuracy and 43.7% in macro-average accuracy, a substantial margin.
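Because MWPBench pools several datasets of different sizes, the micro- and macro-averages can diverge. The sketch below uses the standard definitions (micro: total correct over total questions; macro: unweighted mean of per-dataset accuracies), assuming per-dataset correct/total counts are available. `DatasetResult` and the numbers are hypothetical and are not results from the paper.

```python
from dataclasses import dataclass


@dataclass
class DatasetResult:
    name: str
    correct: int  # questions answered correctly
    total: int    # questions in the dataset


def micro_average(results):
    """Pool all questions: large datasets dominate the score."""
    return sum(r.correct for r in results) / sum(r.total for r in results)


def macro_average(results):
    """Mean of per-dataset accuracies: every dataset counts equally."""
    return sum(r.correct / r.total for r in results) / len(results)


# Hypothetical numbers for two benchmark components of very different size.
EXAMPLE_RESULTS = [
    DatasetResult("small_dataset", correct=60, total=100),
    DatasetResult("large_dataset", correct=100, total=400),
]

if __name__ == "__main__":
    print(f"micro: {micro_average(EXAMPLE_RESULTS):.3f}")
    print(f"macro: {macro_average(EXAMPLE_RESULTS):.3f}")
```

Here the micro-average is 160/500 = 0.32, while the macro-average is (0.60 + 0.25)/2 = 0.425: the small dataset's higher accuracy counts for more when every dataset gets equal weight. Reporting both keeps a single large component from dominating the comparison.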

A key component is the concept graph, motivated by the cognitive mechanisms of concept compression and connection forging described in Tall's theory of mathematical learning; this suggests parallels between the MathScale pipeline and effective human learning strategies. By extracting both "topics" and "knowledge points," MathScale generates a more diverse dataset, which is crucial for better model generalization.

The work focuses on natural-language reasoning and does not integrate program-based tool use of the kind employed by methods such as ToRA; combining the two is left for future work.

The results suggest that the approach scales well for building large mathematical datasets. Because it covers diverse mathematical concepts and turns raw seed questions into structured concept information, MathScale is a promising foundation for future research. Open directions include applying it to larger models such as LLaMA-2 70B, extending the dataset beyond two million examples, and integrating programming tools for more comprehensive reasoning.

In conclusion, MathScale exemplifies a structured approach to data augmentation for mathematical reasoning in LLMs. While it delivers substantial accuracy improvements, the research also invites further exploration of how cognitive and computational strategies can be combined into a more holistic instruction-tuning approach.