- The paper demonstrates that adversarial training increases model reliance on spurious features, thereby compromising natural distributional robustness.

- A theoretical analysis of linear regression on Gaussian data shows that adversarial training under ℓ1 or ℓ2 perturbations increases reliance on non-core (spurious) features, while under ℓ∞ perturbations the effect depends on feature scale.

- Empirical studies on benchmarks such as RIVAL10 and ImageNet variants confirm that adversarial training shifts model sensitivity toward spurious features and reduces performance under distribution shifts.

Explicit Tradeoffs between Adversarial and Natural Distributional Robustness

Introduction

The paper examines the relationship between adversarial robustness and natural distributional robustness in deep neural networks, focusing on how adversarial training influences a model's reliance on spurious features. Through theoretical and empirical analysis, it shows that adversarial training can increase this reliance and thereby reduce robustness to natural distribution shifts.

Theoretical Foundations

The paper begins with a theoretical analysis of linear regression on Gaussian data composed of core and spurious features. It shows that adversarial training with ℓ1- or ℓ2-bounded perturbations increases reliance on spurious features, whereas with ℓ∞-bounded perturbations this happens only if the spurious features have a larger scale than the core features. The adversarial loss encourages the model to distribute its reliance across more features, so it draws on spurious features to mitigate attacks.
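For a linear predictor under squared loss, the inner maximization of adversarial training has a well-known closed form that gives one way to see this mechanism (a standard identity offered as illustration, not necessarily the paper's own derivation). Writing r = y − w⊤x for the clean residual, Hölder's inequality bounds |w⊤δ| ≤ ‖δ‖_p ‖w‖_q ≤ ε‖w‖_q, and a perturbation aligned against the residual attains the bound:

```latex
\begin{aligned}
\max_{\lVert \delta \rVert_p \le \varepsilon} \bigl(y - w^\top (x + \delta)\bigr)^2
  &= \bigl(\lvert y - w^\top x \rvert + \varepsilon \lVert w \rVert_q\bigr)^2,
  \qquad \tfrac{1}{p} + \tfrac{1}{q} = 1, \\
\text{dual pairs:} &\quad
  p = 1 \Rightarrow q = \infty, \qquad
  p = 2 \Rightarrow q = 2, \qquad
  p = \infty \Rightarrow q = 1.
\end{aligned}
```

An ℓ1 or ℓ2 attacker therefore penalizes the max or Euclidean norm of the weights, and both norms shrink when the same predictive signal is spread over several correlated features, spurious ones included. An ℓ∞ attacker penalizes ‖w‖_1, which spreading same-sign weight does not reduce; shifting weight onto a feature only pays off when that feature has a larger scale, because it then needs a smaller coefficient to make the same contribution.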

Empirical Evidence

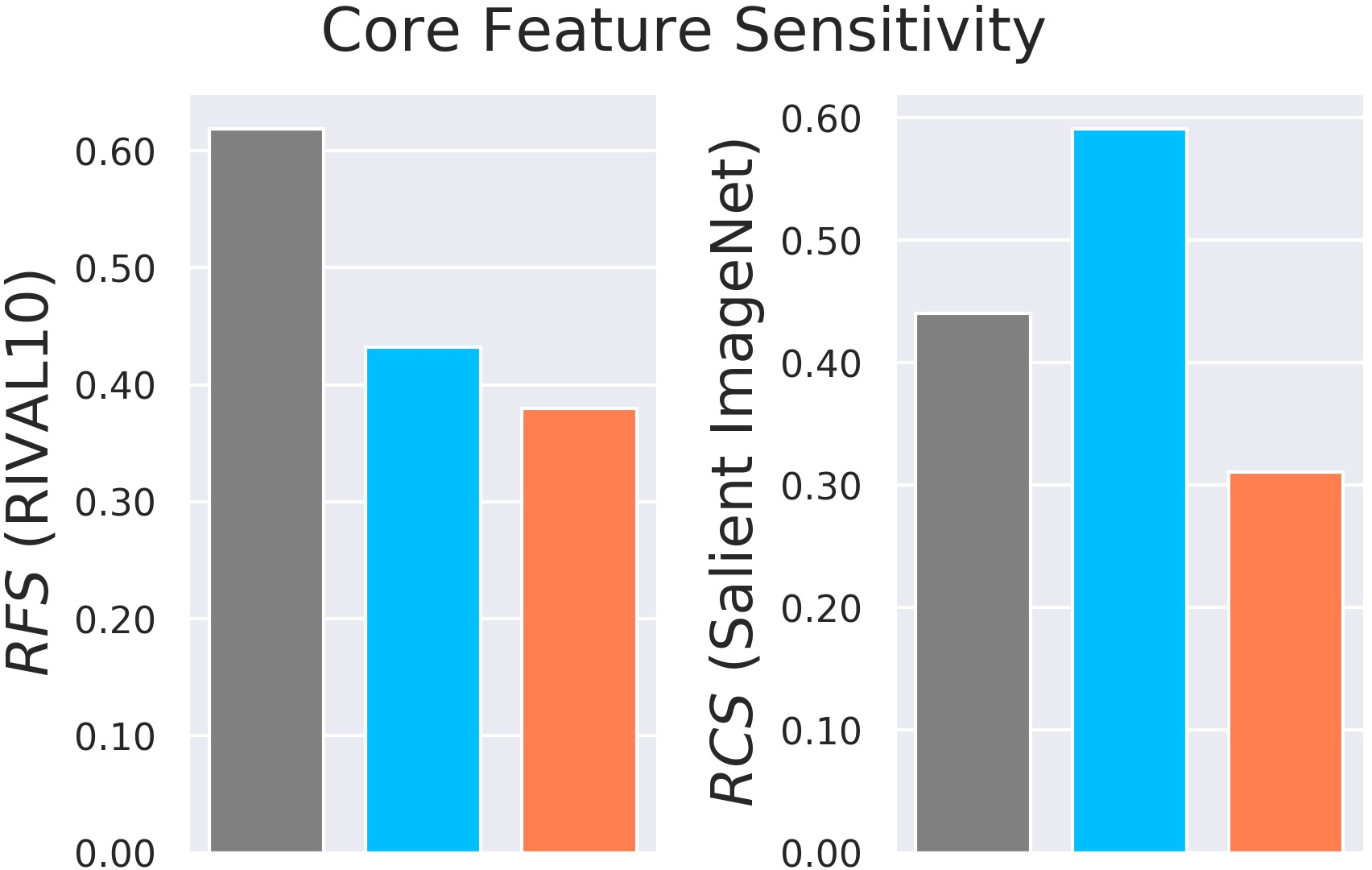

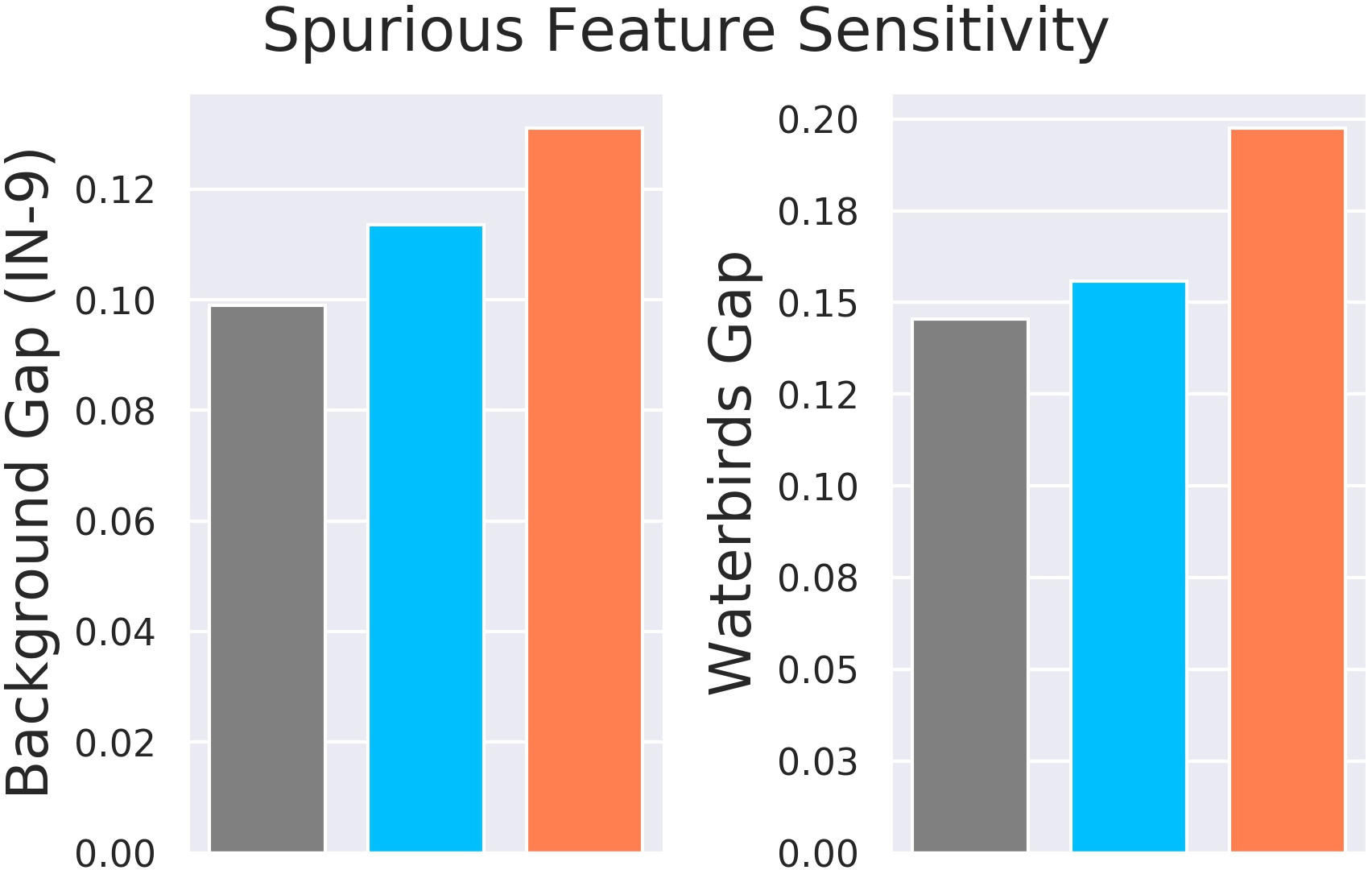

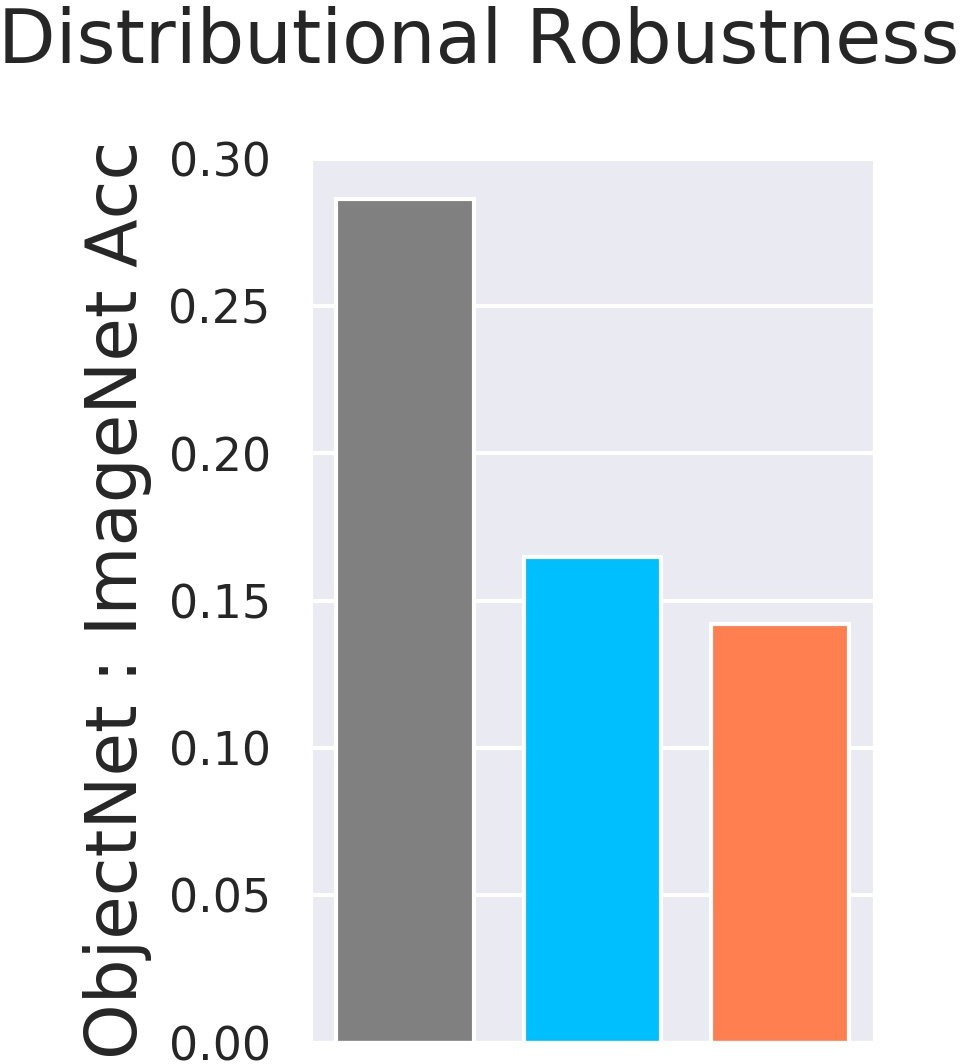

The paper runs extensive experiments on RIVAL10, Salient ImageNet-1M, ImageNet-9, Waterbirds, and ObjectNet to evaluate how adversarial training affects reliance on spurious features.

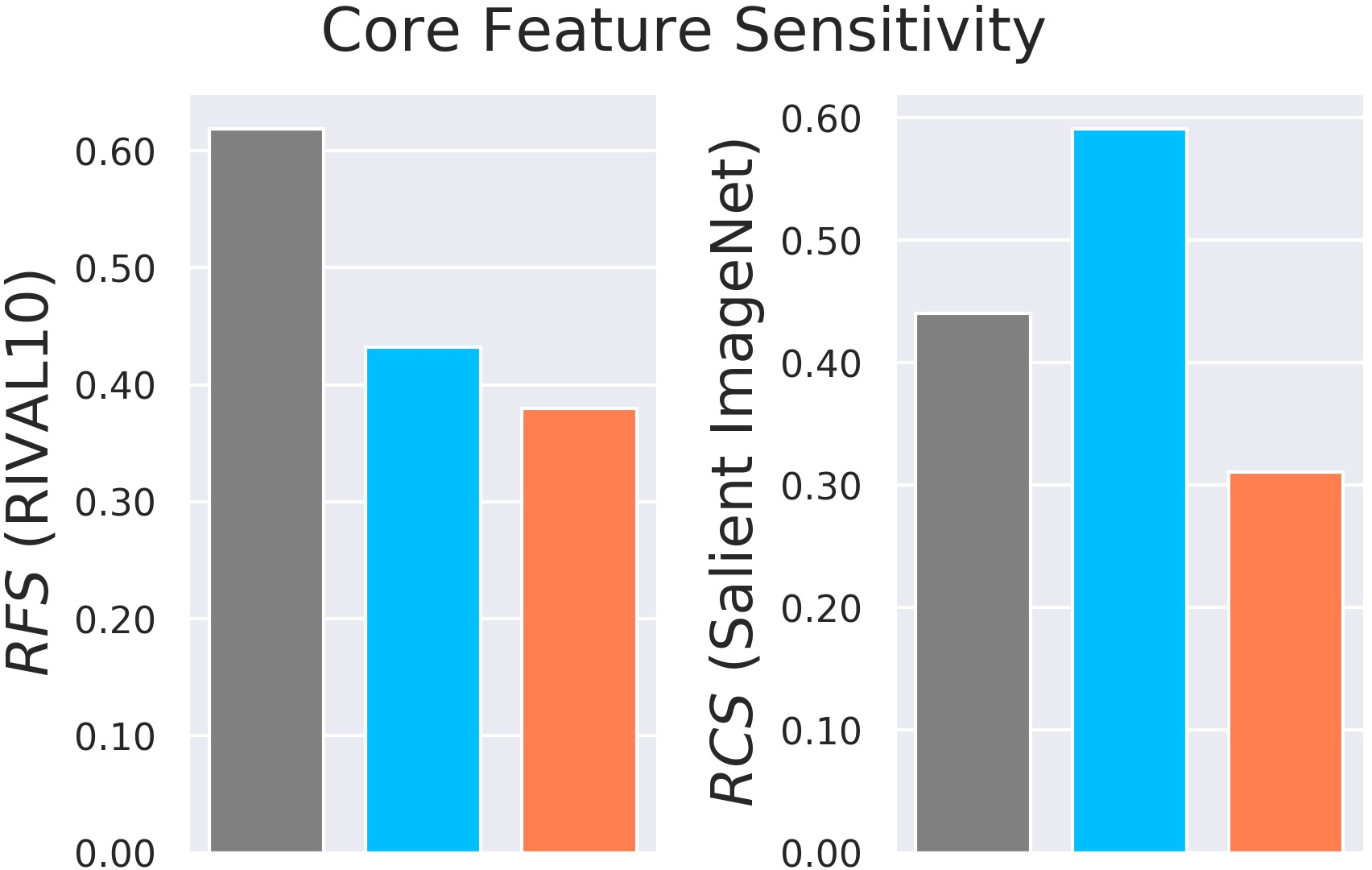

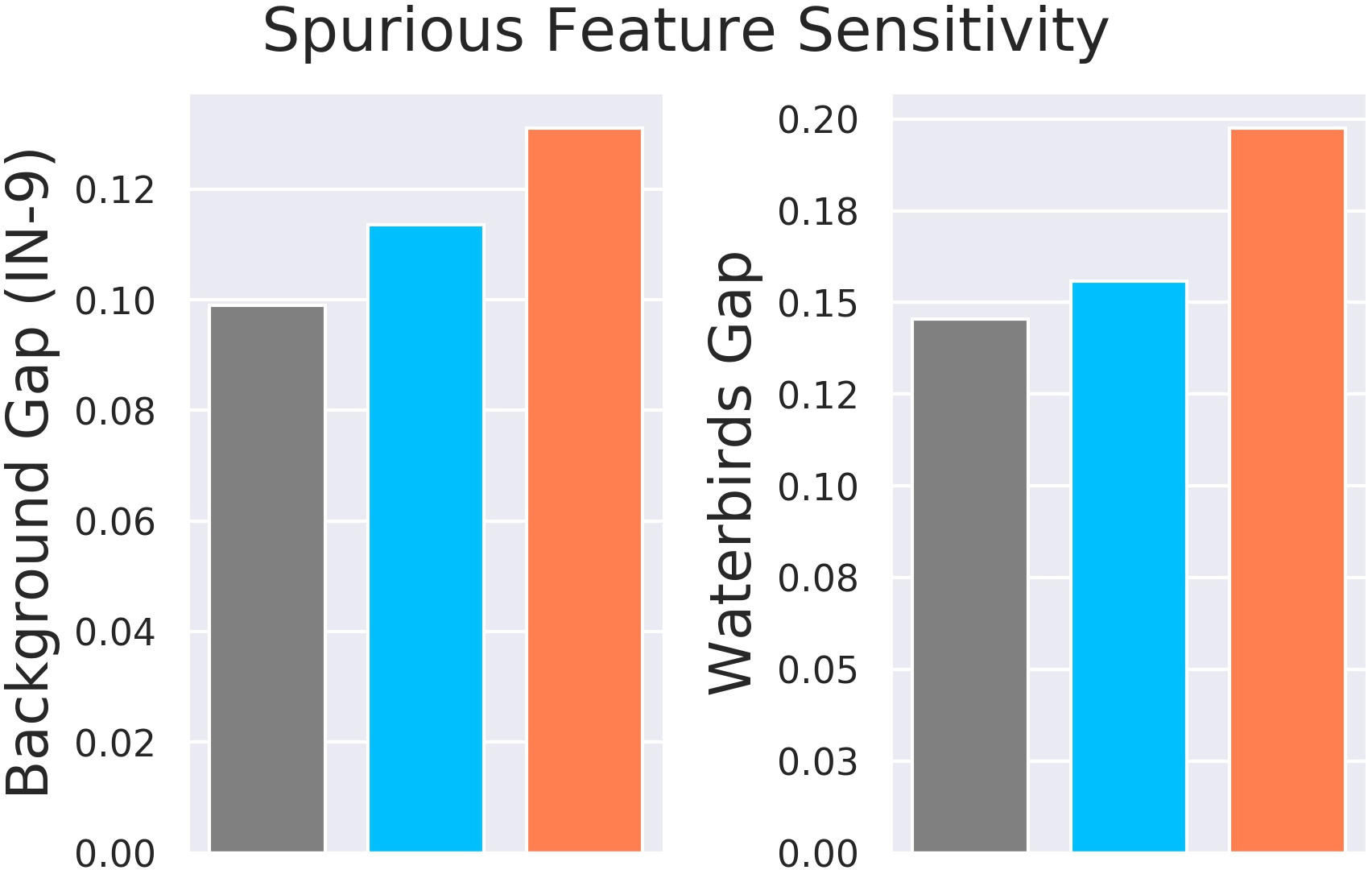

Figure 1: Snapshot of empirical evidence using the RIVAL10, Salient ImageNet-1M, ImageNet-9, Waterbirds, and ObjectNet benchmarks.

These experiments support the theoretical predictions: across a range of settings, adversarially trained models are more sensitive to spurious features than their standard counterparts.

Impact on Distributional Robustness

The paper highlights a critical trade-off: while adversarial training enhances adversarial robustness, it can reduce the model's ability to generalize under distribution shifts where spurious correlations are altered.

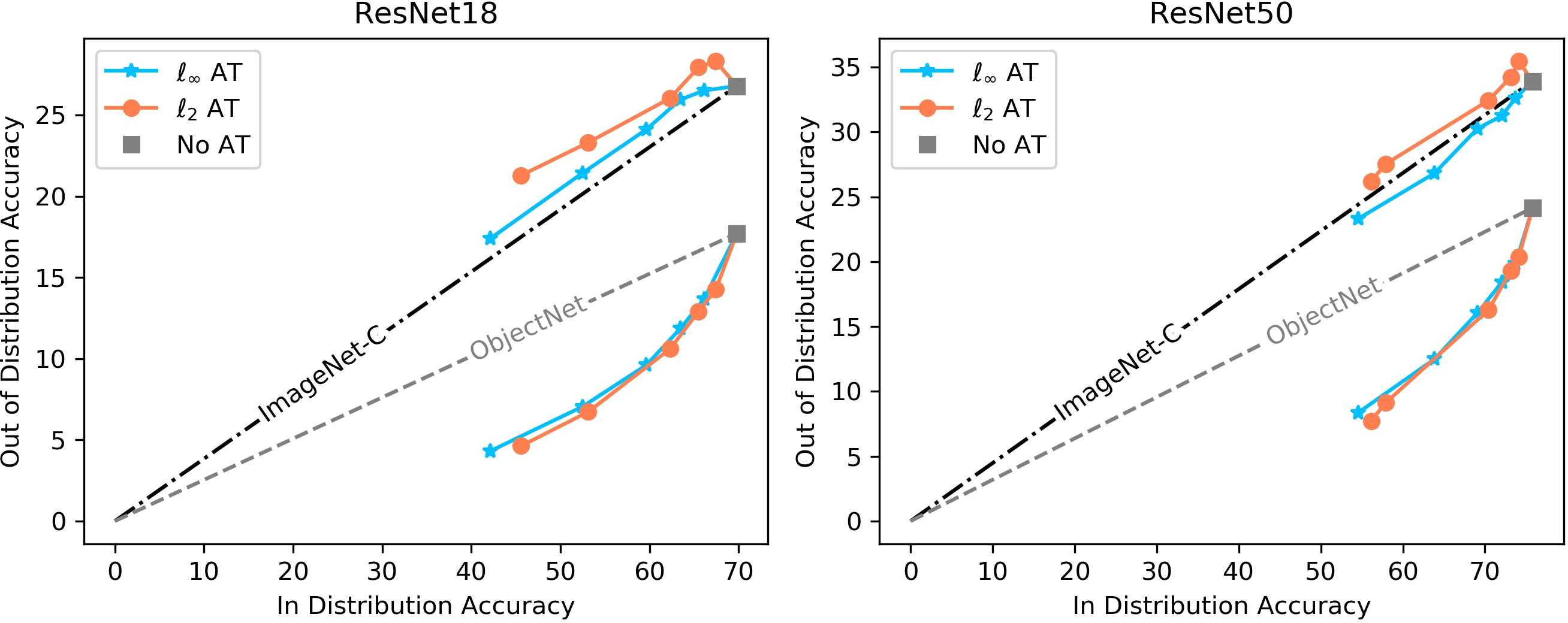

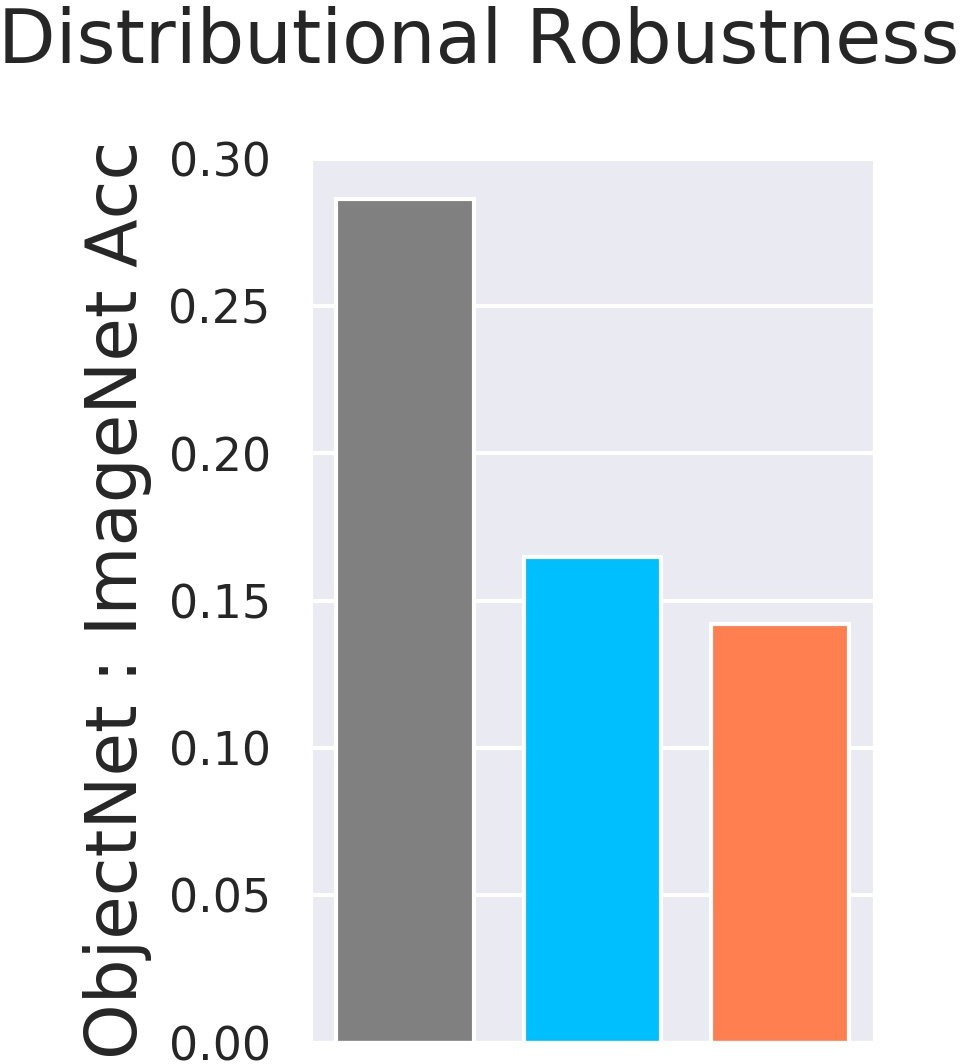

Figure 2: Out-of-distribution (OOD) accuracy vs. standard ImageNet accuracy for adversarially trained ResNets.

This finding shows that adversarially trained models are more vulnerable when natural context, such as the background, changes, and that their accuracy under such shifts can fall short of what their standard benchmark accuracy would suggest.
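To make this trade-off concrete, here is a minimal, self-contained sketch under illustrative assumptions: the two-feature Gaussian data model, the noise levels, the radius ε = 0.5, and the grid-search fitter (`fit`, `shift_penalty`, and the other helper names) are hypothetical choices, not the paper's experimental setup. It relies on the standard identity that, for a linear model, the worst-case squared loss under an ℓp perturbation of radius ε is (|r| + ε‖w‖_q)², where r is the clean residual and 1/p + 1/q = 1. After fitting each model, it measures how much the clean squared error grows when the spurious correlation is broken at test time.

```python
import math

# Hypothetical toy data model (an illustrative assumption, not the paper's setup):
#   x_c ~ N(0, 1)                                   core feature
#   y   = x_c + eta,          eta ~ N(0, SIGMA_ETA^2)  label depends on x_c only
#   x_s = scale * (x_c + n),  n ~ N(0, SIGMA_N^2)      spurious feature: correlated
#                                                      with y, no extra information
# Shifted test data: x_s = scale * (z + n) with z ~ N(0, 1) independent of x_c,
# so the spurious correlation is broken while the marginal of x_s is unchanged.
SIGMA_ETA = 0.5
SIGMA_N = 1.0
SQRT_2_OVER_PI = math.sqrt(2.0 / math.pi)


def dual_norm(a, b, q):
    """l_q norm of the weights (a, b); q = math.inf gives the max norm."""
    if q == math.inf:
        return max(abs(a), abs(b))
    return (abs(a) ** q + abs(b) ** q) ** (1.0 / q)


def residual_var(a, b, scale, shifted=False):
    """Variance of the zero-mean Gaussian residual r = y - a*x_c - b*x_s."""
    bs = b * scale
    core_coef = 1.0 - a - (0.0 if shifted else bs)  # net coefficient on x_c
    spur_var = bs ** 2 * (SIGMA_N ** 2 + (1.0 if shifted else 0.0))
    return core_coef ** 2 + spur_var + SIGMA_ETA ** 2


def adversarial_risk(a, b, eps, q, scale):
    """E[(|r| + eps * ||w||_q)^2], the expected worst-case squared loss under
    an l_p attack of radius eps with 1/p + 1/q = 1. For r ~ N(0, v):
    E[r^2] = v and E|r| = sqrt(2 v / pi)."""
    v = residual_var(a, b, scale)
    c = eps * dual_norm(a, b, q)
    return v + 2.0 * c * SQRT_2_OVER_PI * math.sqrt(v) + c ** 2


def fit(eps, q, scale=1.0, step=0.01, w_max=1.5):
    """Brute-force grid search over nonnegative (w_core, w_spurious).
    Adequate for this 2-D toy; real models would use gradient descent."""
    grid = [i * step for i in range(int(round(w_max / step)) + 1)]
    _, a, b = min(
        (adversarial_risk(wc, ws, eps, q, scale), wc, ws)
        for wc in grid
        for ws in grid
    )
    return a, b


def shift_penalty(a, b, scale=1.0):
    """Increase in clean MSE once the spurious correlation is broken."""
    return residual_var(a, b, scale, shifted=True) - residual_var(a, b, scale)


if __name__ == "__main__":
    # (name, eps, dual exponent q, spurious-feature scale)
    settings = [
        ("standard", 0.0, 2, 1.0),
        ("l1 attack (q=inf)", 0.5, math.inf, 1.0),
        ("l2 attack (q=2)", 0.5, 2, 1.0),
        ("linf attack (q=1)", 0.5, 1, 1.0),
        ("linf attack, scale 2", 0.5, 1, 2.0),
    ]
    for name, eps, q, scale in settings:
        a, b = fit(eps, q, scale)
        print(f"{name:22s} w_core={a:.2f} w_spurious={b:.2f} "
              f"shift_penalty={shift_penalty(a, b, scale):.3f}")
```

In this toy, standard training puts zero weight on the spurious feature and loses nothing under the shift; the ℓ1 and ℓ2 attacks move some weight onto the spurious feature and pay a positive shift penalty; and the ℓ∞ attack keeps the spurious weight at zero unless the spurious feature's scale is raised to 2. Grid search is used only to keep the example dependency-free.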

Sensitivity Evaluation

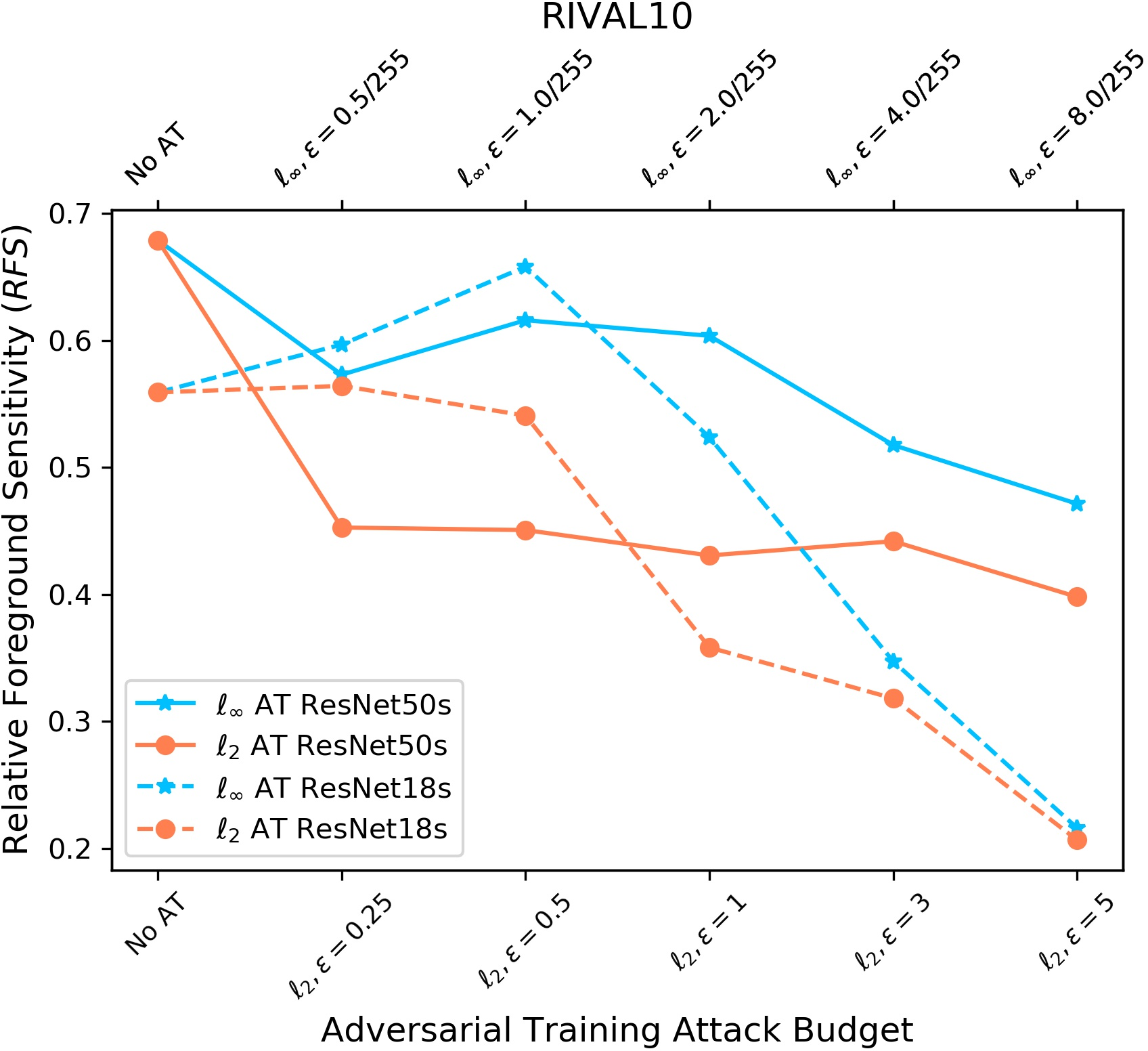

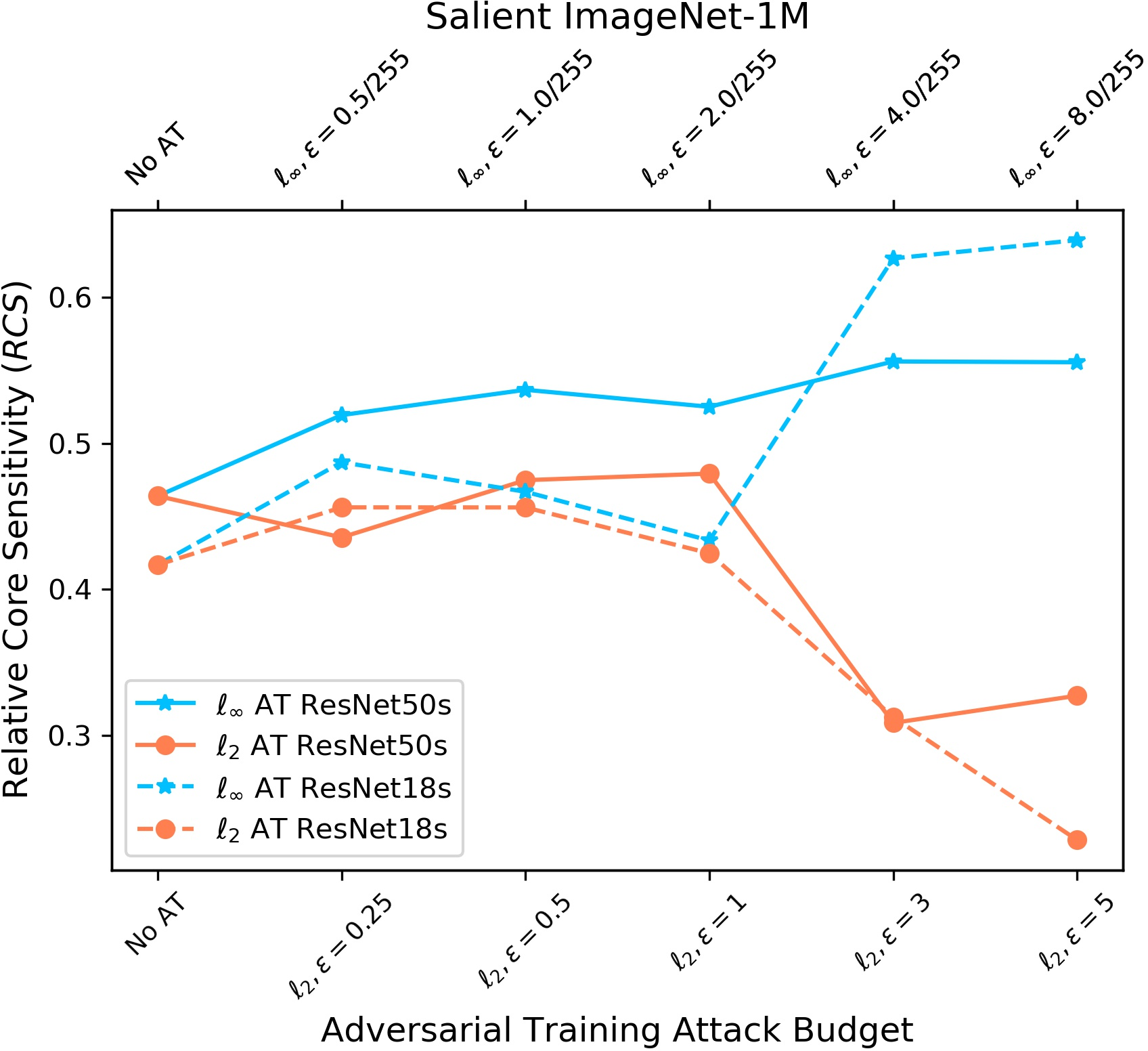

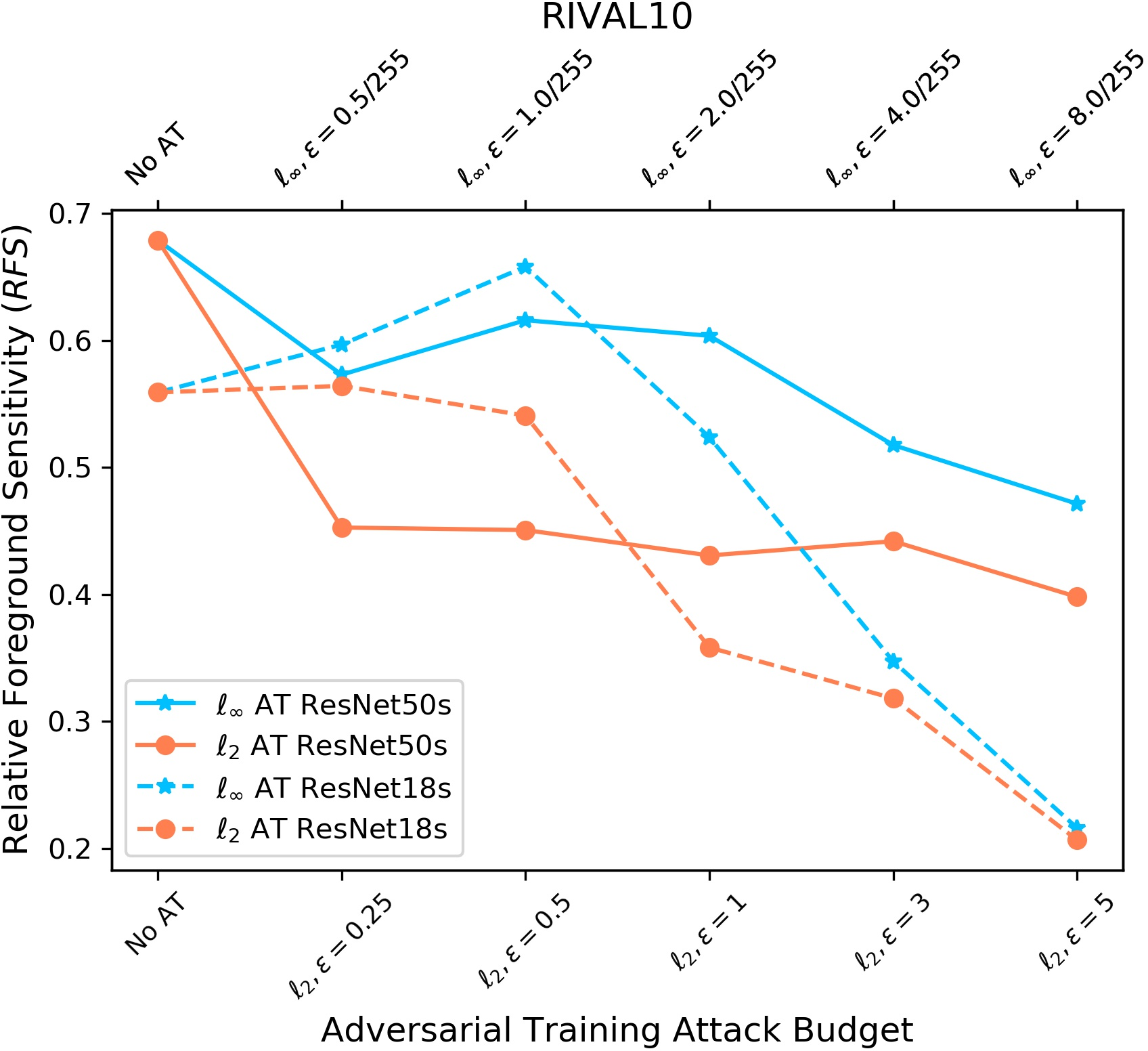

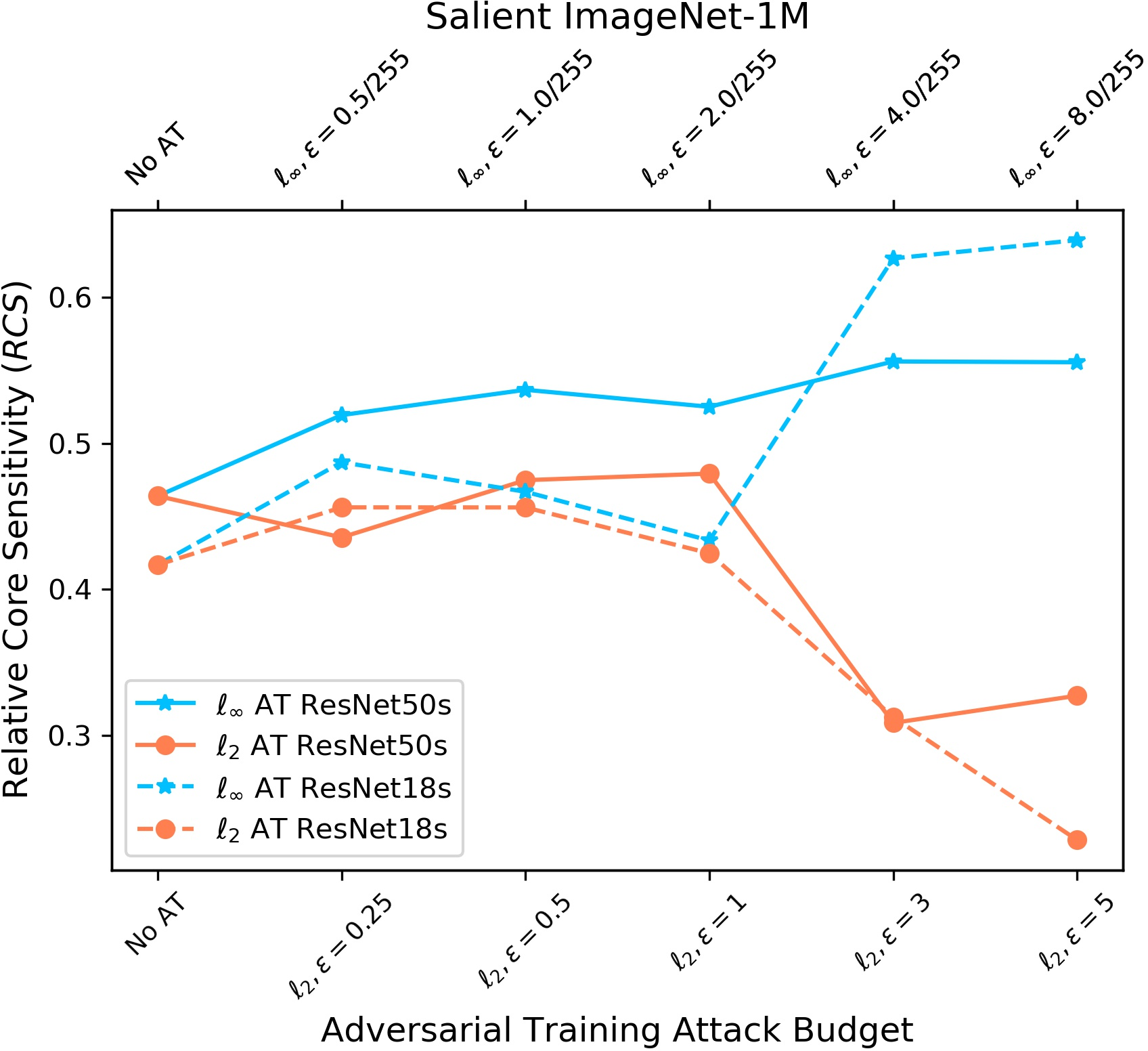

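The paper further measures sensitivity to core versus spurious features with two noise-based metrics, relative foreground sensitivity (RFS) and relative core sensitivity (RCS), which compare accuracy when noise corrupts the foreground or core region of an image against accuracy when it corrupts the background or spurious regions. Both metrics show that adversarial training shifts sensitivity away from core features.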

Figure 3: Noise-based evaluation of model sensitivity to foreground (RFS on RIVAL10) or core (RCS on Salient ImageNet-1M) regions.

This suggests that adversarial training can unintentionally prioritize robustness to adversarial perturbations at the cost of sensitivity to the core features a model should rely on.
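This summary does not spell out how RFS and RCS are computed. The sketch below assumes, without confirmation from the text above, a normalized-gap form: add Gaussian noise to the core (foreground) region, then to the spurious (background) region, and divide the accuracy gap by the largest gap attainable at the same mean accuracy ā, namely 2·min(ā, 1 − ā), so scores fall in [−1, 1] and positive values mean greater reliance on core features. The flat-list image representation, the helper names (`add_masked_noise`, `noised_accuracy`, `relative_sensitivity`), and the toy classifier are hypothetical.

```python
import random


def add_masked_noise(pixels, mask, sigma, rng):
    """Add N(0, sigma^2) noise where mask == 1 and clip to [0, 1].

    Images are flat lists of floats here, a stand-in for real image tensors.
    """
    return [
        min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) if m else p
        for p, m in zip(pixels, mask)
    ]


def noised_accuracy(model, dataset, sigma, region, seed=0):
    """Accuracy after corrupting the core region or its complement.

    dataset yields (pixels, core_mask, label); model maps pixels -> label.
    """
    if region not in ("core", "spurious"):
        raise ValueError("region must be 'core' or 'spurious'")
    rng = random.Random(seed)
    correct = 0
    for pixels, core_mask, label in dataset:
        mask = core_mask if region == "core" else [1 - m for m in core_mask]
        correct += int(model(add_masked_noise(pixels, mask, sigma, rng)) == label)
    return correct / len(dataset)


def relative_sensitivity(acc_core_noised, acc_spurious_noised):
    """ASSUMED RCS/RFS-style score in [-1, 1]; positive = more core-reliant.

    The gap (acc_spurious_noised - acc_core_noised) is divided by the largest
    gap attainable for the same mean accuracy, 2 * min(mean, 1 - mean).
    """
    mean_acc = (acc_core_noised + acc_spurious_noised) / 2.0
    max_gap = 2.0 * min(mean_acc, 1.0 - mean_acc)
    if max_gap == 0.0:
        return 0.0  # both accuracies are 0 or both are 1: no signal
    return (acc_spurious_noised - acc_core_noised) / max_gap


if __name__ == "__main__":
    # Toy check: a hypothetical classifier that reads only the two core pixels.
    def core_only_model(px):
        return int((px[0] + px[1]) / 2.0 > 0.5)

    data = [([float(y), float(y), 0.3, 0.7], [1, 1, 0, 0], y)
            for y in (0, 1) for _ in range(100)]
    acc_c = noised_accuracy(core_only_model, data, sigma=2.0, region="core")
    acc_s = noised_accuracy(core_only_model, data, sigma=2.0, region="spurious")
    print(acc_c, acc_s, relative_sensitivity(acc_c, acc_s))
```

A classifier that reads only the core pixels keeps full accuracy when the spurious region is noised, so under this assumed normalization it reaches the maximum score of 1; a model that leans on background pixels would score lower, which is the direction of change Figure 3 reports for adversarially trained models.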

Real-World Implications

The findings emphasize that while adversarial training protects models against crafted inputs, it should be applied with caution because it can weaken robustness to natural distribution shifts. Balancing the two poses a challenge for deploying models in real-world environments whose data distributions change over time.

Conclusion

This research examines two axes of robustness, adversarial and natural distributional, and reveals explicit trade-offs induced by adversarial training. Its insights call for evaluation strategies that cover all aspects of robustness before AI models are deployed in sensitive applications. Future work should explore strategies for balancing these competing robustness objectives.