- The paper presents ModelLight, which combines model-based RL with meta-learning to reduce the number of real-world interactions needed to train a traffic signal controller.

- The methodology uses an ensemble of LSTM models to capture dynamic traffic patterns and MAML to enable rapid adaptation across urban scenarios.

- Experiments on real-world datasets show marked reductions in travel times and improved computational efficiency compared to existing methods.

The paper "ModelLight: Model-Based Meta-Reinforcement Learning for Traffic Signal Control" (2111.08067) proposes an approach to traffic signal control that integrates Model-Based Reinforcement Learning (MBRL) with meta-learning. Traffic congestion is a major problem in urban environments, and effective signal control can substantially alleviate it, improving city mobility and economic productivity.

Introduction and Problem Definition

The authors identify traffic signal control as a critical lever for reducing congestion. Traditional approaches either rely on fixed timing schemes or require extensive re-optimization as traffic patterns change. Reinforcement learning (RL) offers an advantage over these methods by adapting control decisions to real-time data. However, model-free RL approaches have low sample efficiency: they require extensive interaction with the real environment, which is impractical for large-scale deployment.

ModelLight introduces a model-based meta-reinforcement learning framework aimed at improving data efficiency and reducing the dependency on real-world interactions. By learning an ensemble of models for road intersections and employing meta-learning, ModelLight enhances the adaptability and robustness of traffic signal control mechanisms.
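The data-efficiency argument rests on training the controller with transitions generated by learned models rather than by the real intersection. Below is a minimal sketch of that idea, assuming simple hand-written queue models in place of the paper's LSTMs and a sample-one-model-per-step rollout scheme (a common model-based RL choice, not necessarily ModelLight's exact procedure). All names (`make_toy_model`, `imagined_rollout`, `longest_queue_policy`) are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple

State = Tuple[float, ...]               # per-lane queue lengths (simplified state)
Model = Callable[[State, int], State]   # (state, action) -> predicted next state


def make_toy_model(arrival: float, discharge: float,
                   served: Sequence[Sequence[int]]) -> Model:
    """Hypothetical stand-in for one learned LSTM: every queue grows by `arrival`,
    and lanes served by the chosen phase shrink by `discharge` (never below 0)."""
    def model(state: State, action: int) -> State:
        return tuple(
            max(0.0, q + arrival - (discharge if lane in served[action] else 0.0))
            for lane, q in enumerate(state)
        )
    return model


def imagined_rollout(ensemble: List[Model], policy: Callable[[State], int],
                     start: State, horizon: int, rng: random.Random):
    """Generate synthetic (s, a, r, s') transitions without querying the real
    environment. One ensemble member is drawn at random per step, so no single
    model's bias drives the whole trajectory."""
    transitions = []
    state = start
    for _ in range(horizon):
        action = policy(state)
        next_state = rng.choice(ensemble)(state, action)
        reward = -sum(next_state)  # assumed queue-length penalty
        transitions.append((state, action, reward, next_state))
        state = next_state
    return transitions


# Usage: two lanes; phase 0 serves lane 0 and phase 1 serves lane 1.
served = [[0], [1]]
ensemble = [make_toy_model(a, 2.0, served) for a in (0.9, 1.0, 1.1)]


def longest_queue_policy(s: State) -> int:
    # Lane index doubles as phase index here because served[i] == [i].
    return max(range(len(s)), key=lambda i: s[i])


data = imagined_rollout(ensemble, longest_queue_policy, (3.0, 5.0),
                        horizon=4, rng=random.Random(0))
```

The slightly different arrival rates across ensemble members mimic the disagreement between independently trained models; a policy trained on such rollouts is less likely to exploit the quirks of any one model.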

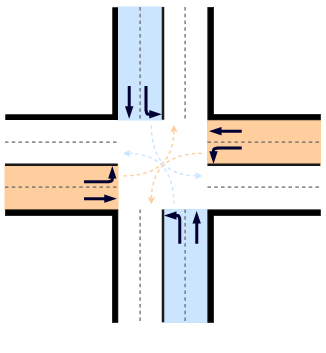

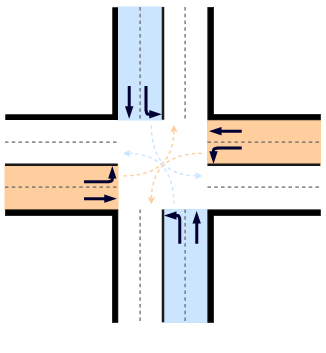

Figure 1: Standard intersection with 8 incoming lanes and primary phases. (a) shows the structure of the intersection while (b) shows the primary traffic phases of traffic flow. Right-hand turn lanes are typically not modelled in traffic flow models.
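The intersection in Figure 1 can be encoded as a small data structure. The sketch below assumes lanes are named by approach (N, S, E, W) and movement (T for through, L for left), and assumes the eight-phase set commonly used in prior traffic-signal-control work, in which each phase pairs two non-conflicting movements. The paper's own phase definitions are those shown in Figure 1(b); `LANES`, `PHASES`, and `phases_per_lane` are hypothetical names.

```python
from collections import Counter

# Assumed lane naming: approach + movement; right turns are omitted, as in the caption.
LANES = [d + m for d in "NSEW" for m in "TL"]  # 8 incoming lanes

# Assumed 8-phase convention: each phase gives green to two non-conflicting movements.
PHASES = [
    ("WT", "ET"), ("NT", "ST"),  # opposing through movements
    ("WL", "EL"), ("NL", "SL"),  # opposing left turns
    ("WT", "WL"), ("ET", "EL"),  # all movements from a single approach
    ("NT", "NL"), ("ST", "SL"),
]


def phases_per_lane() -> Counter:
    """Count how many phases give green to each lane."""
    return Counter(lane for phase in PHASES for lane in phase)
```

Under this convention every lane is served by exactly two phases, which is a quick sanity check that the phase table covers the intersection evenly.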

Methodology

Markov Decision Process for Traffic Signal Control

ModelLight formulates traffic signal control as a Markov Decision Process (MDP) defined by states, actions, transitions, and rewards. The state includes variables such as the number of waiting vehicles and the current signal phase, and an action selects the phase for the next period. The reward decreases as queue lengths grow, so maximizing it encourages minimal traffic delay.
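To make the MDP formulation concrete, here is a minimal Python sketch. The names (`TSCState`, `reward`, `longest_queue_action`) are hypothetical, the reward is assumed to be the negative total queue length (one simple way to make reward decrease with queue length), and the policy is a longest-queue heuristic used only as a placeholder, not ModelLight's learned policy.

```python
from dataclasses import dataclass
from typing import Sequence, Set, Tuple


@dataclass(frozen=True)
class TSCState:
    """Hypothetical MDP state for one intersection."""
    waiting: Tuple[int, ...]  # number of waiting vehicles on each incoming lane
    phase: int                # index of the currently active signal phase


def reward(next_state: TSCState) -> float:
    # Assumed reward: negative total queue length, so longer queues give lower reward.
    return -float(sum(next_state.waiting))


def longest_queue_action(state: TSCState, phases: Sequence[Set[int]]) -> int:
    # An action is the index of the phase to activate for the next period.
    # Placeholder heuristic: pick the phase whose served lanes hold the most vehicles.
    return max(range(len(phases)),
               key=lambda p: sum(state.waiting[lane] for lane in phases[p]))


# Usage: four lanes and two phases (lanes 0-1 versus lanes 2-3).
phases = [{0, 1}, {2, 3}]
s = TSCState(waiting=(2, 0, 3, 1), phase=0)
a = longest_queue_action(s, phases)  # 1: lanes 2-3 hold 4 vehicles versus 2
r = reward(s)                        # -6.0
```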

The framework employs LSTM models to capture dynamic traffic patterns, leveraging ensemble learning to mitigate model biases and compounding errors. Meta-learning techniques like MAML are integrated to optimize model initializations, facilitating rapid adaptation to new tasks and environments.
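MAML learns an initialization from which a single gradient step adapts well to a new task. The sketch below shows the inner and outer loops on a toy problem, assuming 1-D linear regression tasks with different slopes as stand-ins for different intersections, and a scalar linear model instead of an LSTM so that the second-order MAML term can be computed exactly. Function names and hyperparameters are hypothetical.

```python
import random
from typing import List, Tuple

Data = List[Tuple[float, float]]  # (x, y) pairs


def grad(w: float, data: Data) -> float:
    # d/dw of the mean squared error for the model y_hat = w * x
    return sum(2.0 * (w * x - y) * x for x, y in data) / len(data)


def hess(data: Data) -> float:
    # Second derivative of the MSE w.r.t. w (constant for a linear model)
    return sum(2.0 * x * x for x, _ in data) / len(data)


def sample_task(rng: random.Random) -> Tuple[Data, Data]:
    slope = rng.uniform(0.5, 1.5)  # each task: a different hypothetical "intersection"

    def draw(n: int) -> Data:
        xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        return [(x, slope * x) for x in xs]

    return draw(10), draw(10)  # (support set, query set)


def maml(meta_steps: int = 200, tasks_per_step: int = 5,
         alpha: float = 0.1, beta: float = 0.05, seed: int = 0) -> float:
    rng = random.Random(seed)
    w = 0.0  # meta-learned initialization
    for _ in range(meta_steps):
        meta_grad = 0.0
        for _ in range(tasks_per_step):
            support, query = sample_task(rng)
            w_adapted = w - alpha * grad(w, support)  # inner loop: one gradient step
            # Chain rule through the inner step (exact for this scalar model)
            meta_grad += grad(w_adapted, query) * (1.0 - alpha * hess(support))
        w -= beta * meta_grad / tasks_per_step        # outer loop: update the initialization
    return w
```

Because the task slopes are symmetric around 1.0, the learned initialization settles near the average slope, the point from which one adaptation step reaches any task fastest. In ModelLight the same two-level structure operates on neural network parameters rather than a single scalar.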

Experimental Evaluation

The paper evaluates ModelLight on real-world traffic datasets from several urban environments. Compared with state-of-the-art baselines such as MetaLight and MAML, ModelLight achieves lower travel times and better computational efficiency.

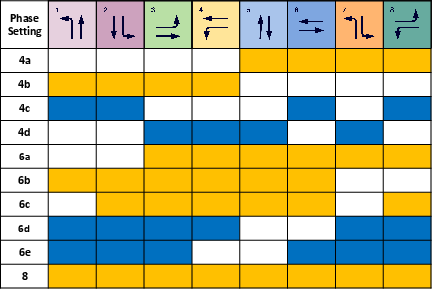

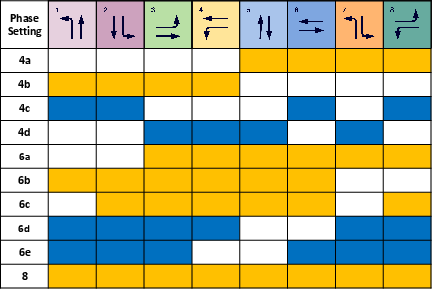

Figure 2: Ten different phase settings. Yellow represents phase setting 1 (PS1) and blue represents phase setting 2 (PS2).

Results indicate that ModelLight consistently achieves lower average travel times than competing methods, demonstrating improved learning efficiency across different traffic scenarios and intersection types. Notably, ModelLight substantially reduces the number of real-world interactions required, which addresses a key constraint on practical deployment.
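Average travel time, the headline metric above, is the mean time vehicles spend in the network. A minimal sketch of computing it from per-vehicle entry and exit times, assuming vehicles still in the network when the simulation ends are counted up to the end time; the function name and input format are hypothetical.

```python
from typing import Dict, Optional, Tuple


def average_travel_time(trips: Dict[str, Tuple[float, Optional[float]]],
                        sim_end: float) -> float:
    """Mean travel time in seconds. `trips` maps vehicle id -> (enter, leave);
    `leave` is None for vehicles that have not exited yet."""
    durations = []
    for enter, leave in trips.values():
        # Assumption: unfinished trips are measured up to the end of the simulation.
        end = leave if leave is not None else sim_end
        durations.append(end - enter)
    return sum(durations) / len(durations) if durations else 0.0


# Usage: durations are 100, 60, and 150 seconds.
att = average_travel_time({"v1": (0.0, 100.0), "v2": (10.0, 70.0),
                           "v3": (50.0, None)}, sim_end=200.0)
```

Counting unfinished trips matters: dropping them would reward a controller that leaves some vehicles stuck indefinitely.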

Conclusion

ModelLight's integration of MBRL and meta-learning offers a scalable, data-efficient approach to traffic signal control that adapts well to diverse urban scenarios. Future work may extend the approach to multi-intersection networks and explore transfer learning to further improve data efficiency. More broadly, the work illustrates how learning-based methods can improve the operation of urban infrastructure.