- The paper introduces Inductive Graph Reinforcement Learning (IG-RL), a scalable approach that uses graph convolutional networks to model traffic dynamics for signal control.

- It models intersections, lanes, and vehicles as graph nodes, enabling effective zero-shot transfer across diverse road networks.

- Experiments on synthetic road networks and the Manhattan road network show significant improvements over multi-agent reinforcement learning (MARL) and heuristic baselines.

Inductive Graph Reinforcement Learning for Traffic Signal Control

Introduction

This paper presents Inductive Graph Reinforcement Learning (IG-RL), an approach to adaptive traffic signal control (ATSC). The method targets the problem of managing traffic signals in urban environments, where a very large number of traffic signal controllers (TSCs) must be coordinated. By framing the ATSC task as a Markov decision process (MDP) and using graph convolutional networks (GCNs), IG-RL moves beyond heuristic-based approaches to provide a scalable solution that generalizes across road networks and traffic scenarios.

Figure 1: Model. We illustrate the computational graph corresponding to one of the connections a TSC observes at its intersection. One vehicle is located on the connection's inbound lane while two are located on its outbound lane.

Methodology

IG-RL uses GCNs to enable scalable, decentralized reinforcement learning. TSCs, lanes, vehicles, and connections are modeled as nodes in a graph that evolves as traffic moves, which lets IG-RL learn detailed representations of traffic dynamics. Traditional multi-agent reinforcement learning (MARL) suffers from nonstationarity and from agents that specialize to the intersections they were trained on. IG-RL instead shares a single policy across all TSCs, and that policy applies to different road network layouts without retraining. This makes zero-shot transfer to new road networks and traffic patterns possible.
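The shared-policy idea can be illustrated with a minimal sketch. It is not the paper's architecture: the embedding size, the mean pooling over connection embeddings, the two-action set (`keep`, `switch`), and every name below are assumptions chosen for illustration. The point is only that one parameter set can serve intersections with different numbers of connections.

```python
import random

DIM = 4  # illustrative embedding size (assumption)

def mean_pool(vectors):
    """Element-wise mean; returns zeros when there is nothing to aggregate."""
    if not vectors:
        return [0.0] * DIM
    return [sum(column) / len(vectors) for column in zip(*vectors)]

class SharedPolicy:
    """A single set of weights reused by every TSC (hypothetical, simplified)."""

    def __init__(self, seed=0):
        rng = random.Random(seed)
        # One weight vector per action; the action set itself is an assumption.
        self.weights = {
            action: [rng.uniform(-1, 1) for _ in range(DIM)]
            for action in ("keep", "switch")
        }

    def q_values(self, connection_embeddings):
        # Pool the connections a TSC observes into one fixed-size TSC embedding,
        # then score each action with a dot product.
        tsc_embedding = mean_pool(connection_embeddings)
        return {
            action: sum(w * x for w, x in zip(ws, tsc_embedding))
            for action, ws in self.weights.items()
        }

    def act(self, connection_embeddings):
        q = self.q_values(connection_embeddings)
        return max(q, key=q.get)

def random_intersection(n_connections, rng):
    """Stand-in for learned connection embeddings at one intersection."""
    return [[rng.random() for _ in range(DIM)] for _ in range(n_connections)]

rng = random.Random(1)
policy = SharedPolicy()
network = {
    "tsc_a": random_intersection(4, rng),
    "tsc_b": random_intersection(12, rng),
    "tsc_c": random_intersection(2, rng),
}
# The same policy object decides for every intersection, whatever its size.
actions = {name: policy.act(conns) for name, conns in network.items()}
print(actions)
```

Because the pooling step produces a fixed-size vector regardless of how many connections an intersection has, adding a new intersection requires no new parameters, which is the property that zero-shot transfer relies on.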

Graph-Based Representation

GCNs are what allow IG-RL to capture the spatiotemporal structure of traffic. Every vehicle and every lane is embedded as a graph node, and edges capture the changing interactions between them, so the model works from fine-grained, vehicle-level data. This granular representation lets the model adjust to changing traffic densities and to different intersection configurations, and it supports both inductive learning and parameter sharing.
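To make the role of vehicle nodes concrete, here is a small sketch of one generic mean-aggregation message-passing step. It is a simplification under stated assumptions, not the paper's layer: the two-dimensional features (read them as normalized speed and position), the identity weight matrix, and the function name `propagate` are all hypothetical.

```python
def propagate(node_feats, edges, weight):
    """One mean-aggregation message-passing step with shared weights.

    node_feats: dict mapping node id -> feature list
    edges:      list of (source, target) pairs
    weight:     square matrix (list of rows) applied to every node
    """
    dim = len(weight)
    incoming = {node: [] for node in node_feats}
    for src, dst in edges:
        incoming[dst].append(node_feats[src])

    updated = {}
    for node, own in node_feats.items():
        messages = incoming[node]
        if messages:
            aggregated = [sum(col) / len(messages) for col in zip(*messages)]
        else:
            aggregated = [0.0] * dim
        combined = [o + a for o, a in zip(own, aggregated)]
        # Linear transform followed by ReLU, identical for all nodes.
        updated[node] = [
            max(0.0, sum(w * c for w, c in zip(row, combined)))
            for row in weight
        ]
    return updated

identity = [[1.0, 0.0], [0.0, 1.0]]

# Two vehicles on one lane; features are hypothetical [speed, position].
feats = {"lane": [0.0, 0.0], "v1": [1.0, 0.2], "v2": [0.5, 0.8]}
edges = [("v1", "lane"), ("v2", "lane")]
two_vehicles = propagate(feats, edges, identity)

# A third vehicle enters the lane: the graph grows, the weights do not change.
feats["v3"] = [0.0, 0.5]
edges.append(("v3", "lane"))
three_vehicles = propagate(feats, edges, identity)

print(two_vehicles["lane"], three_vehicles["lane"])
```

The lane embedding summarizes however many vehicles are currently on the lane, so the same function handles light and heavy traffic without any change to its parameters.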

Figure 2: Four randomly generated road networks. Thickness indicates the number of lanes per direction (between 1 and 2 per direction for a maximum of 4 lanes per edge).

Experimental Evaluation

IG-RL was tested on both synthetic and real-world networks, including the challenging Manhattan road network with nearly 4,000 TSCs. The experiments show significant improvements over MARL and over heuristic approaches such as fixed-time control and greedy methods that favor high-speed traffic flow. On synthetic road networks, IG-RL outperformed all baselines and generalized robustly to road networks and traffic regimes not seen during training.

Synthetic Road Networks

The first experiments, on synthetic road networks, assessed IG-RL's ability to adapt to networks it was not trained on. IG-RL managed unseen intersections more effectively than specialized MARL agents, which highlights its stronger generalization.

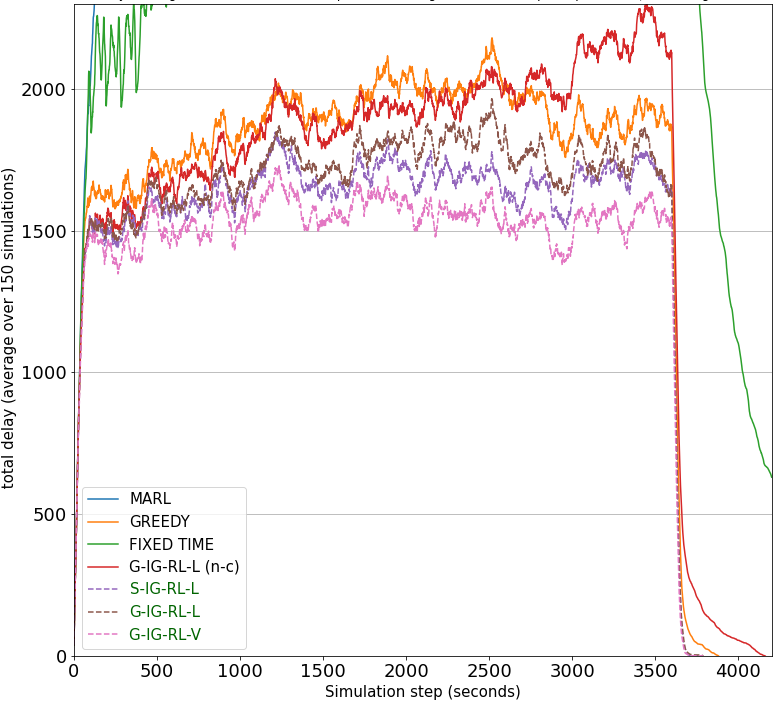

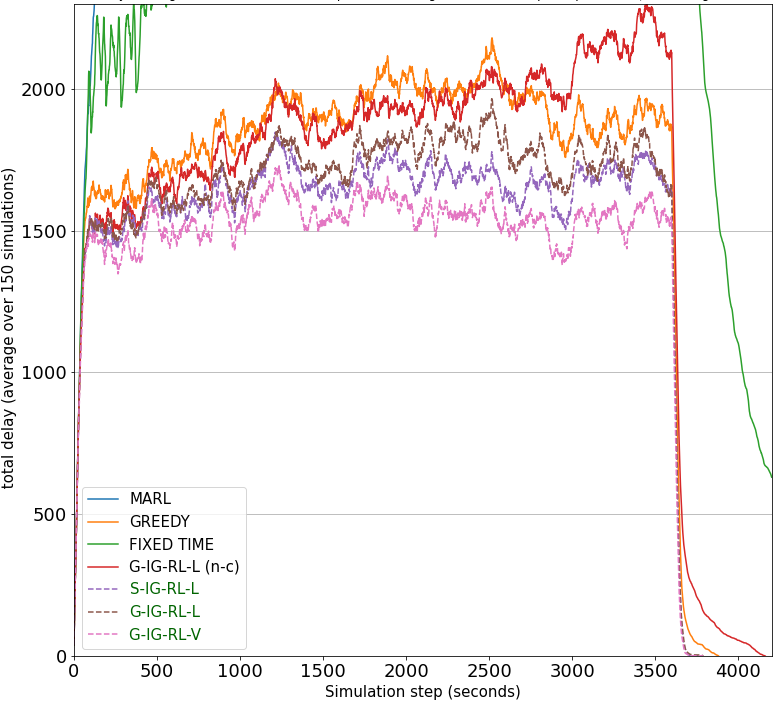

Figure 3: Trip Durations: Default Traffic Regime | Synthetic Road Networks.
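The figures in this evaluation report trip durations and total delay. This summary does not give the paper's exact definitions, so the sketch below uses one common convention as an assumption: a trip's delay is its duration minus the time the same trip would take in free-flowing traffic. The `Trip` record and its field names are hypothetical, and the sample numbers are made up purely to exercise the functions.

```python
from dataclasses import dataclass

@dataclass
class Trip:
    depart: float     # departure time in seconds
    arrive: float     # arrival time in seconds
    free_flow: float  # assumed-known travel time without stops or congestion

def trip_duration(trip):
    return trip.arrive - trip.depart

def trip_delay(trip):
    # Assumed definition: time lost relative to free-flow travel, never negative.
    return max(0.0, trip_duration(trip) - trip.free_flow)

def summarize(trips):
    durations = [trip_duration(t) for t in trips]
    return {
        "mean_duration": sum(durations) / len(durations),
        "total_delay": sum(trip_delay(t) for t in trips),
    }

# Illustrative values only, not results from the paper.
sample = [
    Trip(depart=0.0, arrive=120.0, free_flow=90.0),
    Trip(depart=10.0, arrive=100.0, free_flow=90.0),
    Trip(depart=20.0, arrive=200.0, free_flow=100.0),
]
summary = summarize(sample)
print(summary)
```

Under this convention, a controller that shortens waiting at intersections lowers both numbers, while a trip that already moves at free-flow speed contributes zero delay.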

Manhattan Road Network

IG-RL was transferred to the Manhattan network without additional training and scaled efficiently to manage it. This zero-shot adaptability is key to deploying RL-based traffic control in large-scale real-world settings.

Figure 4: Total Delay Evolution: Default Traffic Regime | Synthetic Road Networks. For clarity, this figure focuses on competitive approaches with lower delays (which stabilize early on).

Implications and Future Work

IG-RL is promising for real-world ATSC, where it could reduce congestion and improve traffic management efficiency in urban settings. Future research could extend the model to multi-modal transportation data, such as pedestrian and cyclist inputs, to model traffic more accurately. Exploring coordination between the decentralized MDPs, through deeper GCN layers or recurrent structures, could improve performance further.
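One reason deeper GCN layers relate to coordination is a general property of message passing: with K rounds, a node can only be influenced by nodes at most K hops away. The sketch below illustrates this on a hypothetical corridor of five intersections; the graph and the function name `receptive_field` are assumptions for illustration and are not taken from the paper.

```python
from collections import deque

def receptive_field(adjacency, start, num_layers):
    """Nodes whose features can reach `start` after `num_layers` rounds
    of message passing on an undirected graph (breadth-first search)."""
    seen = {start}
    frontier = deque([(start, 0)])
    while frontier:
        node, depth = frontier.popleft()
        if depth == num_layers:
            continue  # messages cannot travel further than num_layers hops
        for neighbor in adjacency[node]:
            if neighbor not in seen:
                seen.add(neighbor)
                frontier.append((neighbor, depth + 1))
    return seen

# Hypothetical corridor of intersections: A - B - C - D - E
corridor = {
    "A": ["B"],
    "B": ["A", "C"],
    "C": ["B", "D"],
    "D": ["C", "E"],
    "E": ["D"],
}

one_layer = receptive_field(corridor, "C", 1)
two_layers = receptive_field(corridor, "C", 2)
print(sorted(one_layer), sorted(two_layers))
```

With one layer, intersection C only sees its direct neighbors; with two, it sees the whole corridor. Deeper stacks therefore let a controller condition on traffic farther upstream, at the cost of more computation per decision.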

Conclusion

IG-RL marks a step forward in scalable traffic control, overcoming traditional MARL limitations with a decentralized, inductive approach that draws on the flexibility of GCNs. The transferable policy eases deployment across diverse urban road networks and improves adaptability to changing traffic patterns, which points toward more sustainable urban mobility. The study lays the groundwork for further applications of deep reinforcement learning to complex multi-agent systems such as traffic signal control.

Note: The supplementary material provides additional insights into algorithmic details and comprehensive experimental results.