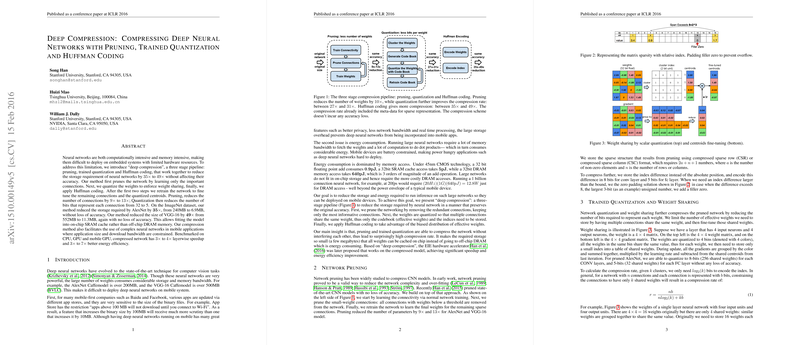

Deep Compression (Han et al., 2015) introduces a three-stage pipeline that greatly reduces the storage footprint of deep neural networks. This makes them practical to deploy on resource-constrained devices such as mobile phones and embedded systems. The core idea is to combine pruning, trained quantization with weight sharing, and Huffman coding to reach high compression rates without sacrificing model accuracy. The paper demonstrates compression factors of 35× on AlexNet and 49× on VGG-16.

The motivation behind Deep Compression stems from the observation that state-of-the-art neural networks are often computationally and memory-intensive. Large model sizes pose challenges for mobile application deployment (due to download size constraints) and energy efficiency (due to costly off-chip DRAM accesses). The paper highlights that DRAM access is orders of magnitude more energy-consuming than on-chip SRAM access or computation. Therefore, reducing model size such that it fits into on-chip caches is a primary goal.

Here are the practical implementation details and applications of each stage:

1. Network Pruning

Goal: Remove redundant connections (weights) that contribute little to the network's accuracy.

Method:

- Train the network: Train a dense network normally, or start from a pre-trained one, to learn which connections are important.

- Prune small weights: Identify and remove connections whose absolute weight values are below a predefined threshold. This threshold is typically chosen based on the desired sparsity level.

- Retrain the network: Fine-tune the weights of the remaining sparse connections. This step is crucial for recovering any accuracy lost to pruning.

Implementation:

- Pruning results in sparse weight matrices. These sparse structures must be stored efficiently. Common formats are Compressed Sparse Row (CSR) or Compressed Sparse Column (CSC).

- The paper proposes storing the index difference between consecutive non-zero elements instead of absolute indices. In typical sparse networks, non-zero elements are relatively close together, so this difference is small and can be encoded with fewer bits (8 bits for convolutional layers and 5 bits for fully connected layers). If a difference exceeds the largest value representable in that many bits, a filler zero is inserted.

- During retraining, a mask can be applied to the weight updates so that pruned connections remain zero.

Results: Pruning alone reduces the number of weights by 9× for AlexNet and 13× for VGG-16 while maintaining accuracy. This greatly reduces the storage needed for the weight values themselves, but it adds the overhead of storing indices.
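The pruning steps and the relative-index storage described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not the paper's implementation. The function names are hypothetical, and a single 1-D row stands in for a weight matrix. The gap convention is one reasonable reading of the scheme and may differ in detail from the paper's bit layout. Here each gap is the distance from the previous stored entry, and a filler zero is inserted whenever a gap exceeds `2**bits - 1`.

```python
# A minimal sketch of magnitude pruning with plain Python lists (no framework).
# All function names here are hypothetical, chosen for illustration.

def prune_by_magnitude(weights, threshold):
    """Zero out weights with |w| < threshold; return (pruned_weights, mask)."""
    mask = [1 if abs(w) >= threshold else 0 for w in weights]
    pruned = [w * m for w, m in zip(weights, mask)]
    return pruned, mask


def masked_sgd_step(weights, grads, mask, lr):
    """One SGD update; multiplying by the mask keeps pruned connections at zero."""
    return [(w - lr * g) * m for w, g, m in zip(weights, grads, mask)]


def encode_relative_indices(dense, bits):
    """Encode the non-zeros of a 1-D row as (gaps, values).

    Each gap is the distance from the previously stored entry and must fit in
    `bits` bits; larger gaps are split by inserting filler zeros.
    """
    max_gap = (1 << bits) - 1
    gaps, values = [], []
    last = -1
    for pos, w in enumerate(dense):
        if w == 0:
            continue
        gap = pos - last
        while gap > max_gap:
            gaps.append(max_gap)   # jump as far as the bit width allows
            values.append(0.0)     # ...and store a filler zero there
            gap -= max_gap
        gaps.append(gap)
        values.append(w)
        last = pos
    return gaps, values


def decode_relative_indices(gaps, values, length):
    """Inverse of encode_relative_indices; filler zeros decode to 0.0."""
    dense = [0.0] * length
    pos = -1
    for gap, w in zip(gaps, values):
        pos += gap
        dense[pos] = w
    return dense


weights = [0.5, -0.02, 0.01, -0.8, 0.3]
pruned, mask = prune_by_magnitude(weights, threshold=0.1)
updated = masked_sgd_step(pruned, [0.1] * 5, mask, lr=0.5)

row = [0.0, 0.0, 3.0] + [0.0] * 9 + [5.0]   # non-zeros at positions 2 and 12
gaps, values = encode_relative_indices(row, bits=3)   # gaps [3, 7, 3]
```

With 3-bit gaps, the jump of 10 positions between the two non-zeros does not fit, so the encoder emits a filler zero 7 positions ahead and then a gap of 3.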

2. Trained Quantization and Weight Sharing

Goal: Reduce the number of bits required to represent each remaining weight by having multiple connections share the same weight value.

Method:

- Apply k-means clustering: After pruning and retraining, apply one-dimensional k-means clustering to the non-zero weights of each layer independently, so weights are not shared across layers. Each cluster centroid represents a shared weight value.

- Weight Sharing: Replace each weight with the index of the cluster centroid it belongs to. Only the set of centroid values (the "codebook") and the indices for each weight need to be stored.

- Fine-tune shared weights: Retrain the network, but this time update the centroid values instead of individual weights. The gradients of all weights belonging to a cluster are summed and used to update that cluster's centroid.

Implementation:

- The network weights are replaced by indices into a codebook (a small array of shared weight values).

- During feed-forward and back-propagation, there is an indirect lookup step to retrieve the actual weight value using the index.

- The gradient with respect to centroid $C_k$ is $\frac{\partial \mathcal{L}}{\partial C_k} = \sum_{i,j} \frac{\partial \mathcal{L}}{\partial W_{ij}} \mathbb{1}(I_{ij}=k)$, where $W_{ij}$ is the weight at position $(i,j)$, $I_{ij}$ is its centroid index, and $\mathbb{1}(\cdot)$ is the indicator function.

- The paper compares three centroid initialization methods: Forgy (random), density-based, and linear. It finds that linear initialization generally yields the best accuracy because it represents the rare but important large-magnitude weights better (Han et al., 2015).

Results: Quantization further compresses the pruned network. For both AlexNet and VGG-16, weights are quantized to 8 bits in CONV layers and 5 bits in FC layers. This reduces the storage per non-zero weight from 32-bit floating point to 5 to 8 bits. The combination of pruning and quantization achieves compression rates of 27× to 31× on these ImageNet models without accuracy loss.
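The clustering, codebook lookup, and centroid-gradient rule described above can be sketched for a single layer as follows. This is a minimal illustration, not the authors' code. The function names are hypothetical, and the non-zero weights of one layer are a flat Python list. A plain SGD step on the centroids stands in for whatever optimizer is used during fine-tuning.

```python
import math

# A minimal sketch of trained quantization for one layer's non-zero weights.
# Function names are hypothetical; plain Python lists stand in for tensors.

def linear_init(weights, k):
    """Space k initial centroids evenly between the min and max weight."""
    lo, hi = min(weights), max(weights)
    if k == 1:
        return [(lo + hi) / 2]
    step = (hi - lo) / (k - 1)
    return [lo + i * step for i in range(k)]


def nearest(w, centroids):
    """Index of the centroid closest to w."""
    return min(range(len(centroids)), key=lambda c: abs(w - centroids[c]))


def kmeans_1d(weights, k, iters=20):
    """1-D k-means with linear initialization; returns (codebook, indices)."""
    centroids = linear_init(weights, k)
    for _ in range(iters):
        assign = [nearest(w, centroids) for w in weights]
        for c in range(k):
            members = [w for w, a in zip(weights, assign) if a == c]
            if members:                      # leave empty clusters unchanged
                centroids[c] = sum(members) / len(members)
    return centroids, [nearest(w, centroids) for w in weights]


def decode(codebook, indices):
    """Indirect lookup: each stored index is replaced by its shared value."""
    return [codebook[i] for i in indices]


def centroid_grads(weight_grads, indices, k):
    """dL/dC_k = sum of dL/dW over all weights whose index equals k."""
    grads = [0.0] * k
    for g, i in zip(weight_grads, indices):
        grads[i] += g
    return grads


def index_bits(k):
    """Bits needed to store one index into a k-entry codebook."""
    return max(1, math.ceil(math.log2(k)))


weights = [-1.0, -0.9, 0.1, 0.2, 1.0, 1.1]
codebook, indices = kmeans_1d(weights, k=3)        # indices [0, 0, 1, 1, 2, 2]
grads = centroid_grads([0.1, 0.2, 0.3, 0.4, 0.5, 0.6], indices, k=3)
lr = 0.1
tuned = [c - lr * g for c, g in zip(codebook, grads)]   # fine-tune shared weights
```

With k = 256 and k = 32 codebook entries, `index_bits` returns 8 and 5 bits, which match the CONV and FC index widths reported above.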

3. Huffman Coding

Goal: Exploit the non-uniform distribution of the quantized weights and sparse index differences for additional lossless compression.

Method: Apply standard Huffman coding to the streams of quantized weight indices and sparse index differences. More frequent symbols are assigned shorter codewords.

Implementation: This is an offline step applied after pruning and quantization fine-tuning are complete. The Huffman codebook must be stored along with the compressed model data.

Results: Huffman coding provides further lossless compression. It raises the total compression rate from 27× (pruning + quantization) to 35× for AlexNet and from 31× to 49× for VGG-16 (Han et al., 2015).
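The sketch below makes the "frequent symbols get shorter codewords" step concrete. It is a minimal Python example that derives Huffman codeword lengths with `heapq` and compares the encoded size against fixed-width indices. The symbol stream is made-up illustrative data, not taken from the paper. The sketch only computes code lengths; assigning actual bit patterns (for example, canonical Huffman codes) and storing the codebook are omitted.

```python
import heapq
from collections import Counter


def huffman_code_lengths(symbols):
    """Return {symbol: codeword length in bits} for an optimal prefix code."""
    freq = Counter(symbols)
    if len(freq) == 1:                       # degenerate case: one symbol, 1 bit
        return {next(iter(freq)): 1}
    # Heap entries: (count, unique tiebreak, {symbol: depth so far}).
    heap = [(n, i, {s: 0}) for i, (s, n) in enumerate(freq.items())]
    heapq.heapify(heap)
    tiebreak = len(heap)
    while len(heap) > 1:
        n1, _, left = heapq.heappop(heap)    # two least frequent subtrees
        n2, _, right = heapq.heappop(heap)
        merged = {s: d + 1 for s, d in {**left, **right}.items()}
        heapq.heappush(heap, (n1 + n2, tiebreak, merged))
        tiebreak += 1
    return heap[0][2]


def encoded_bits(symbols):
    """Total payload size after Huffman coding (codebook not included)."""
    lengths = huffman_code_lengths(symbols)
    return sum(lengths[s] for s in symbols)


# Skewed distribution of 2-bit quantization indices (4 clusters).
indices = [0] * 8 + [1] * 4 + [2] * 2 + [3] * 2
fixed = 2 * len(indices)          # 32 bits with fixed-width 2-bit indices
huff = encoded_bits(indices)      # 28 bits
```

The unique tiebreak in each heap entry ensures that two subtrees with equal counts are never compared by their dictionaries, which Python cannot order.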

Combined Results and Practical Implications

The three stages of Deep Compression work synergistically. Pruning reduces the number of weights, which makes quantization more effective because there are fewer values to cluster. Quantization reduces the bits per remaining weight. Huffman coding provides a final lossless squeeze. Figure 6 of the paper shows that combining pruning and quantization preserves accuracy down to a much smaller model size than either method alone.

The resulting compressed models are significantly smaller. AlexNet reduces from 240MB to 6.9MB, and VGG-16 from 552MB to 11.3MB. These sizes are small enough to potentially fit into on-chip SRAM caches, drastically reducing energy consumption during inference by avoiding costly DRAM accesses. This is particularly beneficial for real-time, low-latency applications (batch size = 1) common in embedded and mobile contexts.
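As a quick consistency check, the model sizes above reproduce the headline compression factors of 35× and 49×. The helper name is hypothetical; the sizes are the ones reported in the text.

```python
def compression_ratio(original_mb, compressed_mb):
    """How many times smaller the compressed model is."""
    return original_mb / compressed_mb


# Model sizes in MB as reported above.
SIZES_MB = {"AlexNet": (240.0, 6.9), "VGG-16": (552.0, 11.3)}

for name, (before, after) in SIZES_MB.items():
    print(f"{name}: {compression_ratio(before, after):.1f}x smaller")
# AlexNet: 34.8x smaller
# VGG-16: 48.8x smaller
```

Rounded to whole numbers, these ratios give the reported 35× and 49×.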

The paper benchmarks only the pruned network, because off-the-shelf libraries do not fully support the quantized sparse format. It reports 3× to 4× layerwise speedups and 3× to 7× better energy efficiency at batch size 1 on CPU, GPU, and mobile GPU (Tegra K1). These results show the practical benefits of a smaller memory footprint and sparsity, even without exploiting weight sharing at the hardware or library level.

Table 4. Execution time (μs) of the FC6 layer, dense vs. pruned (sparse), batch size 1.

| Time (μs) | AlexNet FC6 Dense (batch=1) | AlexNet FC6 Sparse (batch=1) | VGG16 FC6 Dense (batch=1) | VGG16 FC6 Sparse (batch=1) |
|---|---|---|---|---|
| Titan X | 541.5 | 134.8 | 1467.8 | 167.0 |
| Core i7-5930k | 7516.2 | 3066.5 | 35022.8 | 3774.3 |
| Tegra K1 | 12437.2 | 2879.3 | 35427.0 | 4377.2 |

Table 5. Power consumption (W) of the FC6 layer, dense vs. pruned (sparse), batch size 1.

| Power (W) | AlexNet FC6 Dense (batch=1) | AlexNet FC6 Sparse (batch=1) | VGG16 FC6 Dense (batch=1) | VGG16 FC6 Sparse (batch=1) |
|---|---|---|---|---|
| Titan X | 157 | 181 | 166 | 189 |
| Core i7-5930k | 83.5 | 42.3 | 70.6 | 38.0 |
| Tegra K1 | 5.1 | 5.9 | 5.3 | 5.6 |

Detailed timings and power consumption values for all layers and batch sizes are given in Appendix A of the paper (Han et al., 2015). Table 4 and Table 5 above reproduce the FC6 results at batch size 1.
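The two tables can be combined to estimate per-layer speedup and energy savings, taking energy as average power multiplied by execution time. This is a back-of-the-envelope sketch with hypothetical helper names. It shows, for instance, that the sparse FC6 layer on the Titan X draws more power than the dense one yet uses less energy, because it finishes much sooner. Ratios for a single layer need not match the averaged figures quoted in the text.

```python
# FC6, batch size 1: (dense, sparse) pairs copied from the tables above.
TIME_US = {
    "Titan X": {"AlexNet": (541.5, 134.8), "VGG16": (1467.8, 167.0)},
    "Core i7-5930k": {"AlexNet": (7516.2, 3066.5), "VGG16": (35022.8, 3774.3)},
    "Tegra K1": {"AlexNet": (12437.2, 2879.3), "VGG16": (35427.0, 4377.2)},
}
POWER_W = {
    "Titan X": {"AlexNet": (157, 181), "VGG16": (166, 189)},
    "Core i7-5930k": {"AlexNet": (83.5, 42.3), "VGG16": (70.6, 38.0)},
    "Tegra K1": {"AlexNet": (5.1, 5.9), "VGG16": (5.3, 5.6)},
}


def speedup(platform, net):
    """Dense time divided by sparse time."""
    t_dense, t_sparse = TIME_US[platform][net]
    return t_dense / t_sparse


def energy_gain(platform, net):
    """Ratio of dense to sparse energy, with energy = power x time."""
    t_dense, t_sparse = TIME_US[platform][net]
    p_dense, p_sparse = POWER_W[platform][net]
    return (p_dense * t_dense) / (p_sparse * t_sparse)


for platform in TIME_US:
    for net in ("AlexNet", "VGG16"):
        print(f"{platform:14s} {net:8s} speedup {speedup(platform, net):4.1f}x, "
              f"energy {energy_gain(platform, net):4.1f}x")
```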

Implementation Considerations and Future Work

The paper notes that fully realizing the speed and energy benefits of the quantized sparse network requires specialized hardware or optimized software libraries. These must efficiently handle the indirect lookup through codebook indices and the compressed index representation. Standard libraries such as cuSPARSE or MKL SPBLAS mainly support basic sparse formats (such as CSR/CSC) and may not optimize for the specific structures produced by trained quantization and index-difference encoding. This motivated later work on dedicated hardware accelerators such as EIE (Han et al., 2016), which are tailored to such compressed models.
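The example below illustrates what such a kernel has to do. It is a minimal matrix-vector product over a column-oriented format that combines relative row indices with codebook indices. It is a plain-Python sketch, not how cuSPARSE, MKL, or EIE implement it. The storage layout and the convention that codebook entry 0 is a reserved zero used for filler entries are assumptions made for this example. Every multiply needs an extra decode step (accumulating the row gap) and an extra lookup (index to shared weight); specialized hardware or libraries would need to handle this overhead efficiently.

```python
def spmv_codebook_csc(n_rows, columns, codebook, x):
    """Compute y = W @ x for a sparse, weight-shared matrix W.

    columns[j] holds (row_gap, code) pairs for column j: row_gap is the distance
    from the previous stored row (starting before row 0) and code indexes the
    shared-weight codebook. Assumption: codebook[0] == 0.0, so filler entries
    inserted for oversized gaps contribute nothing.
    """
    y = [0.0] * n_rows
    for j, entries in enumerate(columns):
        row = -1
        for gap, code in entries:
            row += gap                          # decode relative index
            y[row] += codebook[code] * x[j]     # indirect codebook lookup
    return y


codebook = [0.0, 0.5, -1.0]        # index 0 reserved for filler zeros
# W = [[0.5, 0.0], [0.0, -1.0], [-1.0, 0.0]] stored column by column.
columns = [[(1, 1), (2, 2)], [(2, 2)]]
y = spmv_codebook_csc(3, columns, codebook, [2.0, 3.0])   # [1.0, -3.0, -2.0]
```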

In summary, Deep Compression (Han et al., 2015) provides a powerful multi-stage pipeline for compressing deep neural networks for deployment on resource-constrained platforms. Its practical impact lies in letting large models fit into limited memory, reducing bandwidth requirements, and improving energy efficiency, which matters most for real-time mobile and embedded AI applications. The techniques can be implemented with existing deep learning frameworks, provided the sparse data structures and retraining phases are managed carefully.