Exploring the Efficacy of Pruning for Model Compression in Deep Neural Networks

This paper presents an in-depth exploration of model pruning as a technique for compressing deep neural networks, aimed at improving efficiency in resource-constrained environments without significantly compromising performance. The authors investigate the trade-offs between large, sparse models and their small, dense counterparts across various neural network architectures, leveraging a novel gradual pruning method designed to be simple and broadly applicable.

Key Contributions

The main contributions of this paper are twofold. First, it empirically demonstrates that large-sparse models consistently outperform small-dense models of equivalent memory footprint. Second, it introduces a straightforward gradual pruning approach that can be easily integrated into the training process.

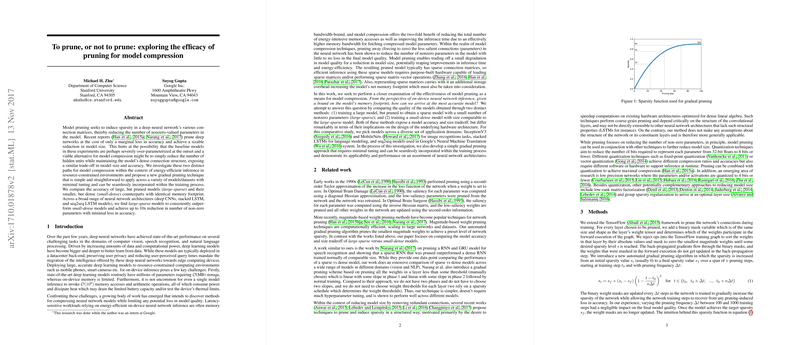

Gradual Pruning Technique

The proposed gradual pruning technique involves progressively increasing the sparsity of a neural network's parameters during training. The process begins after a few initial training epochs or from a pre-trained model, and the sparsity level is gradually raised to a desired final value over several pruning steps. This method ensures a smooth transition, allowing the network to recover from any accuracy loss induced by pruning.
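The schedule described above can be sketched in a few lines. This is a minimal illustration, not the authors' implementation: it assumes a cubic ramp from an initial to a final sparsity (the form used in the original paper, which prunes quickly at first and more gently as the target is approached) and assumes that the weights removed at each step are those with the smallest magnitude. The function and parameter names (`target_sparsity`, `magnitude_mask`, `begin_step`, `end_step`) are hypothetical.

```python
import numpy as np

def target_sparsity(step, s_init, s_final, begin_step, end_step):
    """Sparsity to enforce at `step`, ramping cubically from s_init to s_final.

    Assumption: a cubic ramp between begin_step and end_step; constant outside.
    """
    if step <= begin_step:
        return s_init
    if step >= end_step:
        return s_final
    progress = (step - begin_step) / (end_step - begin_step)
    return s_final + (s_init - s_final) * (1.0 - progress) ** 3

def magnitude_mask(weights, sparsity):
    """Boolean mask that drops the `sparsity` fraction of smallest-magnitude weights."""
    k = int(round(sparsity * weights.size))      # number of weights to zero out
    order = np.argsort(np.abs(weights), axis=None)  # flat indices, smallest first
    mask = np.ones(weights.size, dtype=bool)
    mask[order[:k]] = False
    return mask.reshape(weights.shape)

# Toy loop: prune every 100 steps between step 100 and step 900.
rng = np.random.default_rng(0)
w = rng.normal(size=(64, 64))
for step in range(0, 1001, 100):
    s = target_sparsity(step, s_init=0.0, s_final=0.9, begin_step=100, end_step=900)
    mask = magnitude_mask(w, s)
    w = w * mask  # real training would update the surviving weights between pruning steps
```

In actual training, the mask would be applied in the forward pass and the remaining weights would continue to receive gradient updates between pruning steps, which is what gives the network a chance to recover accuracy.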

Empirical Evaluation

The performance of the gradual pruning technique was evaluated across a diverse set of neural network models:

- Image Recognition:

  - InceptionV3: Models pruned to as much as 87.5% sparsity retained competitive top-5 classification accuracy, with a degradation of about 2%, while reducing the number of non-zero parameters by 8x.

  - MobileNets: The 90% sparse model outperformed the dense model with a 0.25 width multiplier by more than 10 percentage points in top-1 accuracy, showing that pruning pays off even in architectures that are already highly parameter-efficient.

- Language Modeling:

  - Penn Tree Bank (PTB) LSTM: Sparse models outperformed dense models with comparable or larger parameter counts. For instance, a large model pruned to 90% sparsity achieved better perplexity than a dense medium model while having only a third of the parameters.

- Machine Translation:

  - Google Neural Machine Translation (GNMT): Pruned models remained robust, with near-baseline BLEU scores at up to 90% sparsity. The 90% sparse GNMT model achieved performance comparable to that of a dense model three times its size.
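The parameter reductions quoted above follow directly from the sparsity level: a model with sparsity s keeps a fraction 1 - s of its weights, so the non-zero count shrinks by a factor of 1 / (1 - s). A small sketch of that arithmetic, with a hypothetical helper name:

```python
def nonzero_reduction(sparsity):
    """Factor by which the non-zero parameter count shrinks at a given sparsity."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    return 1.0 / (1.0 - sparsity)

for s in (0.5, 0.875, 0.9):
    print(f"{s:.1%} sparse -> {nonzero_reduction(s):.1f}x fewer non-zero parameters")
```

This counts non-zero parameters only; the actual memory saving is smaller once the cost of storing the positions of those parameters is included.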

Model Storage Considerations

The paper also discusses the memory overhead associated with sparse matrix representations. While pruning reduces the number of non-zero parameters, the storage overhead due to indexing must be considered. Bit-mask and compressed sparse row/column (CSR(C)) representations were evaluated, with the latter generally offering lower overhead for highly sparse models.
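To make the trade-off concrete, the sketch below estimates the storage cost of a single weight matrix under both representations. It is a back-of-the-envelope model, not the paper's accounting: the bit widths (32-bit values, 16-bit column indices, 32-bit row pointers) and the function names are assumptions chosen for illustration.

```python
def dense_bits(rows, cols, value_bits=32):
    """Storage for the dense matrix."""
    return rows * cols * value_bits

def bitmask_bits(rows, cols, sparsity, value_bits=32):
    """One mask bit per element plus the packed non-zero values."""
    nnz = round(rows * cols * (1.0 - sparsity))
    return rows * cols + nnz * value_bits

def csr_bits(rows, cols, sparsity, value_bits=32, index_bits=16, ptr_bits=32):
    """One value and one column index per non-zero, plus rows + 1 row pointers."""
    nnz = round(rows * cols * (1.0 - sparsity))
    return nnz * (value_bits + index_bits) + (rows + 1) * ptr_bits

rows, cols = 1000, 1000
for s in (0.5, 0.9, 0.99):
    d = dense_bits(rows, cols)
    print(f"sparsity {s:.0%}: bit-mask {bitmask_bits(rows, cols, s) / d:.3f}, "
          f"CSR {csr_bits(rows, cols, s) / d:.3f} of dense size")
```

Under these assumptions the bit-mask overhead is fixed at one bit per element, while the CSR overhead scales with the number of non-zeros, so CSR wins only once the matrix is sparse enough. The exact crossover point depends on the index width chosen.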

Implications and Future Directions

The findings suggest significant practical implications:

- Deployment: The superior performance of large-sparse models implies that pruning can be an effective strategy for deploying deep learning models in memory- and power-constrained environments, such as mobile devices and edge computing scenarios.

- Hardware Development: The observed benefits emphasize the need for specialized hardware accelerators optimized for efficient sparse matrix storage and computation.

Future research could examine the interaction between pruning and model quantization, investigating how the two techniques jointly affect model accuracy and efficiency. Given the storage overhead that sparse representations introduce, the effects of quantizing sparse model parameters merit closer attention.

Conclusion

This work offers a robust comparative study of sparse and dense neural network models, underlining the efficacy of pruning as a model compression technique. The demonstrated efficiency gains and the ease of use of the proposed gradual pruning method should encourage broader adoption and prompt further innovation in both software and hardware to support sparse neural networks.

For those interested, the TensorFlow pruning library used to produce these results is available in the TensorFlow Pruning Library repository.

By providing a clear empirical foundation and practical tools, this paper contributes valuable insights to the field of neural network compression, aiding both academic researchers and industry practitioners in developing more efficient models.