Learning both Weights and Connections for Efficient Neural Networks

The paper "Learning both Weights and Connections for Efficient Neural Networks" addresses the problem of running neural networks where computation and memory are limited. Song Han et al. introduce a method that learns which connections in a network are important and prunes the rest, substantially reducing storage and computational requirements while maintaining the original network's accuracy. This essay summarizes the methodology, results, implications, and potential future directions of this research.

Introduction and Motivation

Neural networks, especially large models with millions or billions of parameters, demand substantial computational and memory resources, which restricts their deployment on mobile and embedded systems. To address this, the authors propose a three-step method (train, prune, retrain) that reduces the number of parameters, and with it the storage and computation a network requires.

Methodology

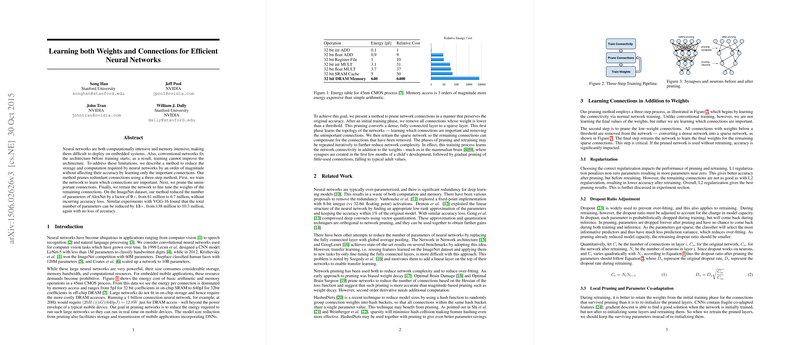

The proposed method involves:

- Initial Training: The network is first trained in the usual way, with the aim of learning which connections are important rather than arriving at final weight values.

- Pruning: Connections with weights below a specified threshold are removed, introducing sparsity.

- Retraining: The resulting sparse network is retrained to fine-tune the remaining weights, ensuring that it can compensate for the pruned connections.
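
The three steps above can be sketched on a toy problem. The following is a minimal illustration in plain Python, not the authors' Caffe implementation: it fits a single linear layer with stochastic gradient descent. The helper names (`train`, `prune`), the `quality` parameter, the choice of a threshold proportional to the standard deviation of the surviving weights, and all hyperparameters are assumptions made for this example.

```python
import random
import statistics

def predict(weights, x):
    """Output of a single linear unit."""
    return sum(w * xi for w, xi in zip(weights, x))

def train(weights, mask, data, lr=0.05, epochs=200, l2=1e-4):
    """SGD on squared error with L2 regularization.
    Connections whose mask entry is False are never updated."""
    weights = list(weights)
    for _ in range(epochs):
        for x, y in data:
            err = predict(weights, x) - y
            for i, xi in enumerate(x):
                if mask[i]:
                    weights[i] -= lr * (err * xi + l2 * weights[i])
    return weights

def prune(weights, mask, quality=0.5):
    """Remove surviving connections whose magnitude falls below
    quality * std(surviving weights). This threshold rule is an assumption."""
    alive = [w for w, m in zip(weights, mask) if m]
    threshold = quality * statistics.pstdev(alive)
    new_mask = [m and abs(w) >= threshold for w, m in zip(weights, mask)]
    new_weights = [w if m else 0.0 for w, m in zip(weights, new_mask)]
    return new_weights, new_mask

# Synthetic data: only three of the eight inputs actually matter.
random.seed(0)
true_w = [2.0, 0.0, -1.5, 0.0, 0.0, 1.0, 0.0, 0.0]
data = []
for _ in range(200):
    x = [random.uniform(-1, 1) for _ in true_w]
    data.append((x, predict(true_w, x) + random.gauss(0, 0.01)))

# Step 1: train the dense network.
weights = [random.uniform(-0.1, 0.1) for _ in true_w]
mask = [True] * len(true_w)
weights = train(weights, mask, data)

# Step 2: prune low-magnitude connections.
weights, mask = prune(weights, mask)

# Step 3: retrain the remaining connections with the mask held fixed.
weights = train(weights, mask, data)

print("surviving connections:", sum(mask), "of", len(mask))
```

Because the mask is held fixed during retraining, pruned connections stay at exactly zero and the surviving weights alone have to fit the data.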

The prune-and-retrain cycle can be repeated to remove progressively more connections. The method also adjusts the dropout ratio during retraining and uses L2 regularization, both of which improve accuracy after pruning.
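
The dropout adjustment can be illustrated with a short calculation. The sketch below assumes the square-root scaling described in the paper, in which the retraining dropout ratio shrinks with the square root of the fraction of connections that remain in a layer. The function name and the example connection counts are hypothetical.

```python
import math

def retrain_dropout(original_dropout, connections_before, connections_after):
    """Scale the dropout ratio by sqrt(remaining / original connections).
    A pruned layer has less capacity, so it needs less dropout."""
    return original_dropout * math.sqrt(connections_after / connections_before)

# Hypothetical layer: 9M connections pruned down to 1M, original dropout 0.5.
adjusted = retrain_dropout(0.5, 9_000_000, 1_000_000)
print(f"dropout during retraining: {adjusted:.3f}")
```

With nine times fewer connections, the square root gives a factor of one third, so a dropout ratio of 0.5 falls to roughly 0.167.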

Experimental Results

Experiments run in Caffe on GPUs, across several datasets and architectures, support the method's effectiveness. Key findings include:

- AlexNet on ImageNet: The number of parameters was reduced from 61 million to 6.7 million (a 9x reduction) without accuracy loss.

- VGG-16 on ImageNet: The number of parameters was reduced from 138 million to 10.3 million (a 13x reduction) with no loss in accuracy.

- LeNet on MNIST: The number of parameters was reduced by around 12x, showing that the method carries over to other architectures and datasets.
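
The reduction factors quoted above follow directly from the parameter counts. A quick check, using only the figures listed in this section (the function and dictionary names are illustrative):

```python
def compression_ratio(params_before, params_after):
    """How many times smaller the pruned network is."""
    return params_before / params_after

# Parameter counts as reported above: (before pruning, after pruning).
reported = {
    "AlexNet": (61e6, 6.7e6),
    "VGG-16": (138e6, 10.3e6),
}

ratios = {
    name: round(compression_ratio(before, after), 1)
    for name, (before, after) in reported.items()
}
print(ratios)  # {'AlexNet': 9.1, 'VGG-16': 13.4}
```

The unrounded ratios, about 9.1 and 13.4, are consistent with the 9x and 13x figures.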

Discussion and Implications

The research demonstrates that by pruning redundant connections and retraining, neural networks can be made significantly more efficient. This has several implications:

- Efficiency: A smaller model is easier to run in real time on mobile devices and fits the tight memory and compute budgets of embedded systems.

- Energy Consumption: When a pruned model's weights fit in on-chip memory, far fewer energy-costly off-chip DRAM accesses are needed, so energy consumption drops dramatically.

- Model Compression: Fewer parameters mean a smaller stored model, which helps when models must be kept on-device or transmitted over a network.
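
To make the storage and computation savings concrete, the sketch below stores a small pruned weight matrix in compressed sparse row (CSR) form and multiplies it by a vector. CSR is a standard sparse format chosen here for illustration; it is an assumption of this example, not necessarily the storage scheme used in the paper, and the function names and matrix values are hypothetical.

```python
def to_csr(dense):
    """Keep only nonzero weights, plus their column indices and row offsets."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, w in enumerate(row):
            if w != 0.0:
                values.append(w)
                col_idx.append(j)
        row_ptr.append(len(values))
    return values, col_idx, row_ptr

def csr_matvec(values, col_idx, row_ptr, x):
    """Matrix-vector product that touches only the surviving connections."""
    out = []
    for r in range(len(row_ptr) - 1):
        start, end = row_ptr[r], row_ptr[r + 1]
        out.append(sum(values[k] * x[col_idx[k]] for k in range(start, end)))
    return out

# A pruned 3x4 layer with 3 of its 12 weights remaining.
pruned = [
    [0.0, 1.5, 0.0, 0.0],
    [0.0, 0.0, 0.0, -2.0],
    [0.5, 0.0, 0.0, 0.0],
]
x = [1.0, 2.0, 3.0, 4.0]

values, col_idx, row_ptr = to_csr(pruned)
y = csr_matvec(values, col_idx, row_ptr, x)
print(values, col_idx, row_ptr)  # [1.5, -2.0, 0.5] [1, 3, 0] [0, 1, 2, 3]
print(y)                         # [3.0, -8.0, 0.5]
```

Both the stored data and the multiply-accumulate work scale with the number of surviving weights rather than the size of the dense matrix. The index arrays add overhead, so the storage saving is smaller than the raw parameter reduction suggests.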

Comparative Analysis

Compared with other model reduction techniques of the time, such as Deep Fried Convnets, SVD-based compression, and data-free pruning, the proposed method achieves greater parameter savings with no degradation in accuracy, making it a strong option when network efficiency is the priority.

Future Directions

A few directions for future exploration include:

- Further Layer-wise Optimization: Investigate finer-grained pruning strategies tailored to each layer type, convolutional (CONV) versus fully connected (FC).

- Cross-domain Applications: Extend pruning techniques to other neural network models used in domains such as natural language processing and reinforcement learning.

- Hardware Acceleration: Develop specialized hardware that exploits the sparsity introduced by pruning for even greater efficiency gains.

Conclusion

The method presented by Song Han et al. is an important advance in the effort to make neural networks efficient. By pruning connections while retaining accuracy, it substantially reduces parameter counts and makes deployment in resource-constrained environments more practical and scalable. Further refinement of this methodology is likely to extend what can be achieved in efficient neural network design and deployment.