Memory in the Age of AI Agents (2512.13564v1)

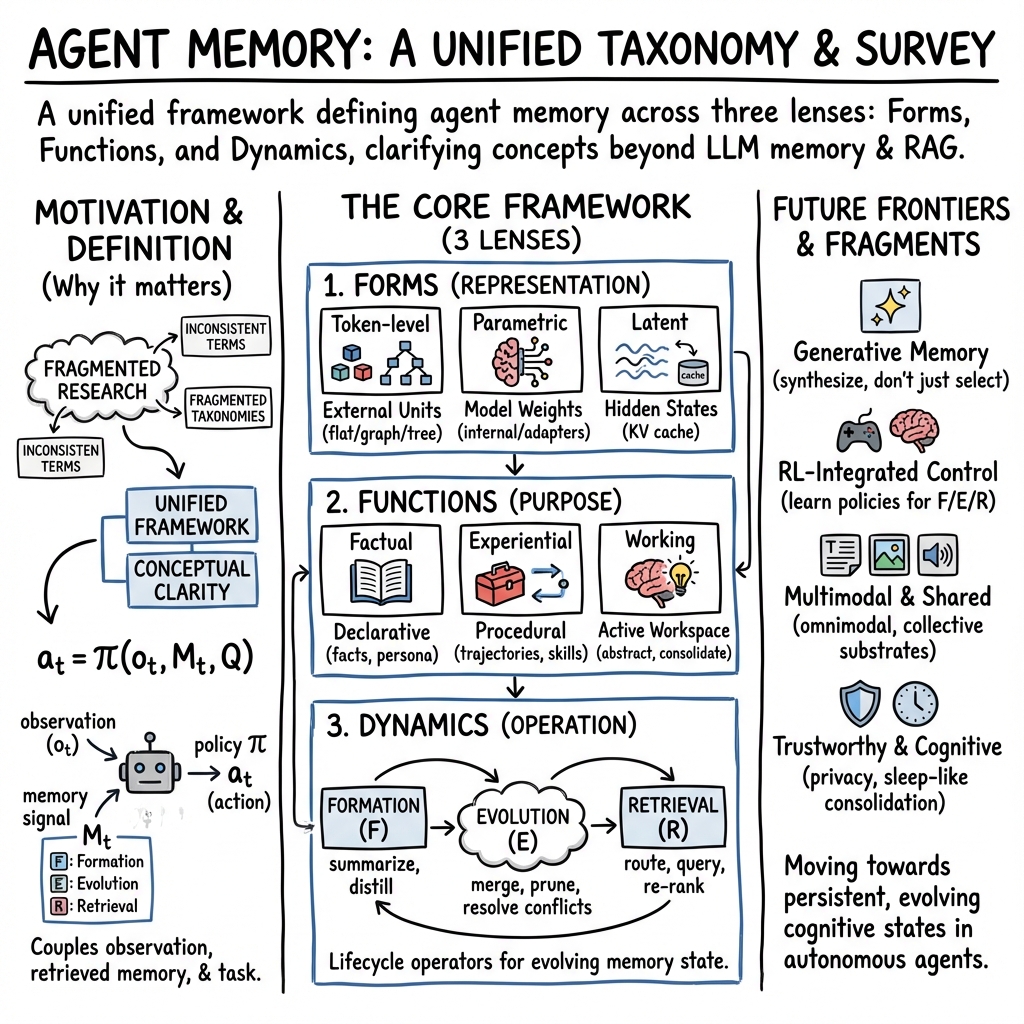

Abstract: Memory has emerged, and will continue to remain, a core capability of foundation model-based agents. As research on agent memory rapidly expands and attracts unprecedented attention, the field has also become increasingly fragmented. Existing works that fall under the umbrella of agent memory often differ substantially in their motivations, implementations, and evaluation protocols, while the proliferation of loosely defined memory terminologies has further obscured conceptual clarity. Traditional taxonomies such as long/short-term memory have proven insufficient to capture the diversity of contemporary agent memory systems. This work aims to provide an up-to-date landscape of current agent memory research. We begin by clearly delineating the scope of agent memory and distinguishing it from related concepts such as LLM memory, retrieval augmented generation (RAG), and context engineering. We then examine agent memory through the unified lenses of forms, functions, and dynamics. From the perspective of forms, we identify three dominant realizations of agent memory, namely token-level, parametric, and latent memory. From the perspective of functions, we propose a finer-grained taxonomy that distinguishes factual, experiential, and working memory. From the perspective of dynamics, we analyze how memory is formed, evolved, and retrieved over time. To support practical development, we compile a comprehensive summary of memory benchmarks and open-source frameworks. Beyond consolidation, we articulate a forward-looking perspective on emerging research frontiers, including memory automation, reinforcement learning integration, multimodal memory, multi-agent memory, and trustworthiness issues. We hope this survey serves not only as a reference for existing work, but also as a conceptual foundation for rethinking memory as a first-class primitive in the design of future agentic intelligence.

Sponsor

Paper Prompts

Sign up for free to create and run prompts on this paper using GPT-5.

Top Community Prompts

Explain it Like I'm 14

What is this paper about?

This paper is a guide to “memory” for AI agents built on LLMs. Think of an AI agent like a game character: it observes the world, makes plans, uses tools, talks to people, and learns from what happens. Memory is what lets the agent remember facts, learn from experience, and keep track of what it’s doing over time. The authors explain different kinds of memory, how they work, how they’re used, and where the field is going.

What questions does it try to answer?

The paper tackles five simple questions:

- What exactly counts as “agent memory,” and how is it different from close ideas like LLM memory, RAG (retrieval-augmented generation), and context engineering?

- What shapes or forms can memory take inside an agent?

- What jobs does memory do for an agent?

- How does memory get created, updated, and looked up over time?

- What exciting directions should researchers explore next?

How did the authors study it?

This is a survey paper, which means the authors read a lot of recent work and organized it into a clear, easy-to-navigate framework. They do three main things:

1) Set the stage: What’s an agent and how does memory plug in?

- They describe an agent as a decision-maker that observes its environment, thinks with an LLM, uses tools, and acts.

- Memory is a separate system the agent can write to (store what happened) and read from (recall what’s useful) across steps and across tasks.

They describe a simple memory life cycle using everyday ideas:

- Formation: turning important moments into memory entries (like jotting down a useful tip after trying something).

- Evolution: cleaning, merging, or upgrading memory (like tidying your notes, removing duplicates, or summarizing big lessons).

- Retrieval: pulling out the right memory at the right time (like searching your notes when faced with a similar problem).

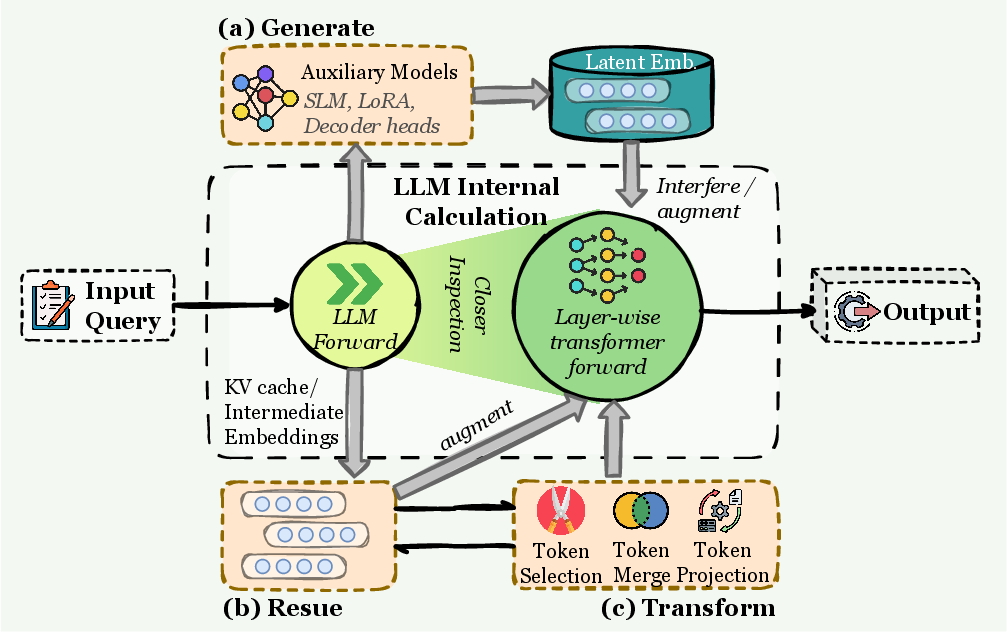

2) Offer a new “Forms–Functions–Dynamics” framework

The authors organize the field with three lenses—think of a library analogy:

- Forms: What do the shelves and containers look like? (How memory is stored)

- Functions: What jobs do the books do for you? (Why memory is useful)

- Dynamics: How do books get added, organized, and found over time? (How memory changes)

3) Compare agent memory to similar ideas

They explain how “agent memory” differs from:

- LLM memory: techniques that change how the model itself holds information internally (like making the “brain” better at long passages). This is more about the model’s architecture than an agent’s ongoing, reusable memory.

- RAG (retrieval-augmented generation): looking up facts from a static knowledge base (like Googling during a single task). Agent memory, in contrast, keeps evolving from the agent’s own experiences across many tasks.

- Context engineering: packing the most important instructions and facts into a limited “context window” (like organizing a small backpack). Agent memory is the long-lived knowledge store; context engineering is about how to cram the right pieces into the model at the moment.

They also collect benchmarks (tests) and frameworks (open-source tools) so people can build and evaluate memory systems more easily.

What did they find?

The main “results” of a survey are clear definitions and a simple map of the field. Here are the key takeaways.

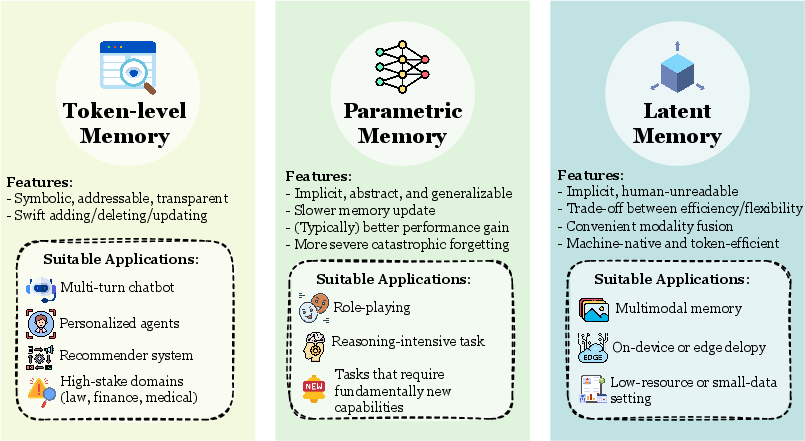

A clearer set of memory “forms” (how memory is stored)

- Token-level memory: explicit notes you can see and edit, like a diary, timeline, or a database of snippets, graphs, or summaries.

- Parametric memory: knowledge baked into the model’s learned weights (its “brain wiring”) through training or fine-tuning.

- Latent memory: temporary or evolving internal states during thinking, like a mental scratchpad or hidden “thoughts in progress.”

Why this matters: different forms are good for different needs—token-level memory is easy to inspect and fix; parametric memory is fast to access but hard to edit; latent memory captures ongoing context but may not persist by itself.

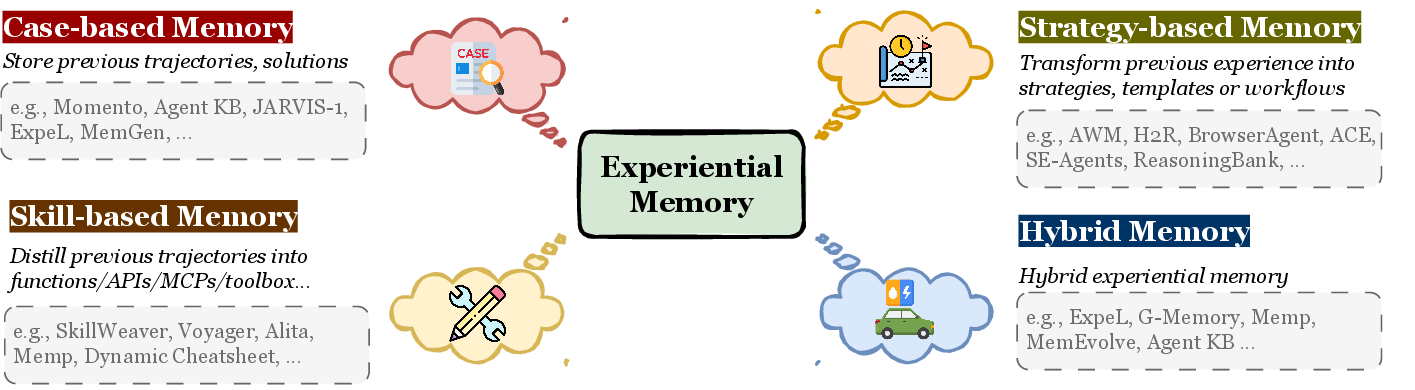

A sharper view of memory “functions” (what memory is for)

- Factual memory: keeps facts about users and the world, like preferences, profiles, or discovered knowledge.

- Experiential memory: captures lessons from successes and failures—skills, strategies, and reusable patterns learned from past tasks.

- Working memory: a short-term “scratchpad” for complex tasks—plans, intermediate steps, tool outputs—so the agent doesn’t lose track mid-task.

Why this matters: splitting memory by job is more useful than just “short-term vs long-term,” because these jobs happen at different times and need different tools.

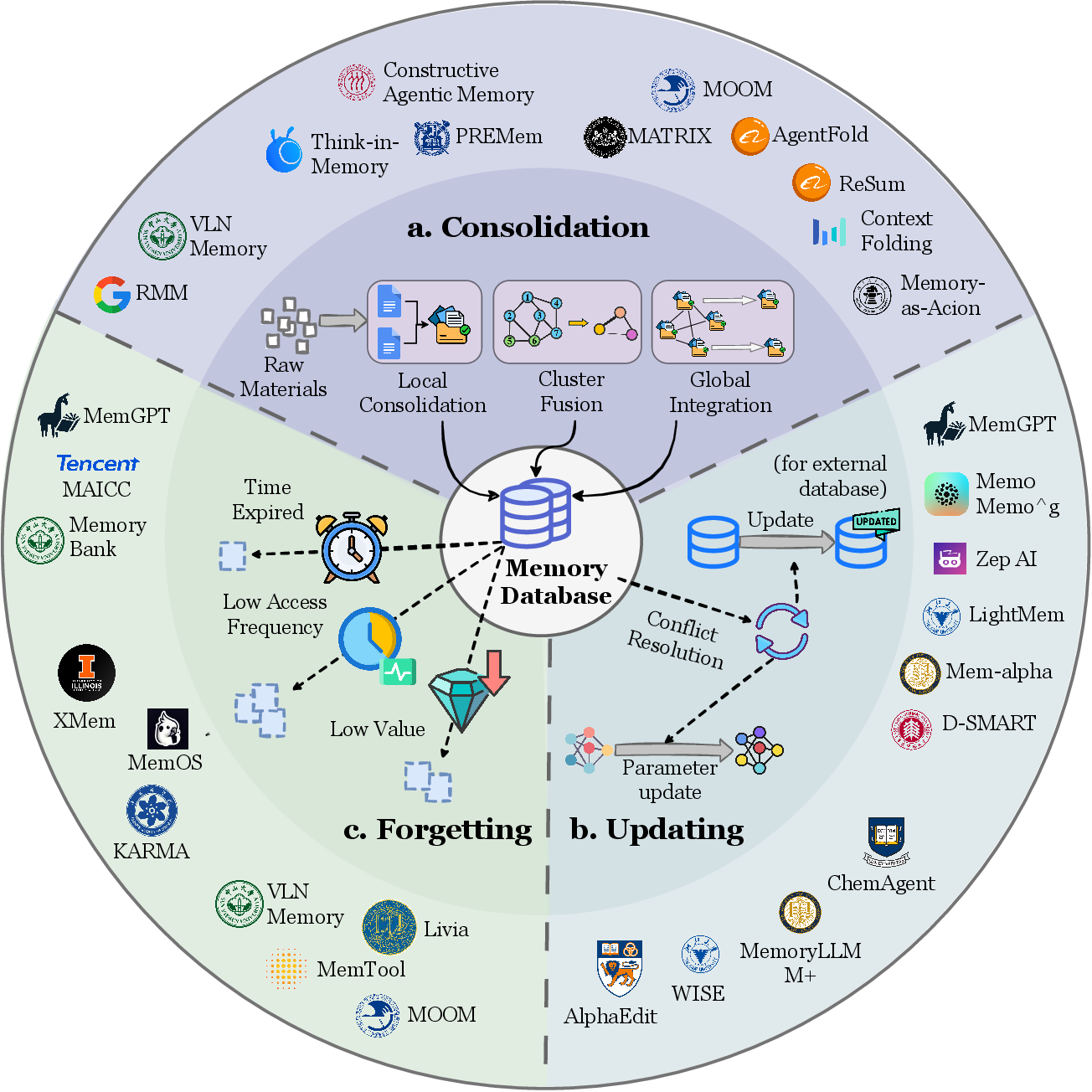

A practical model of memory “dynamics” (how memory changes)

- Formation → Evolution → Retrieval is a helpful way to think about how memory grows and how to control its quality.

- This life cycle applies both within a single task (e.g., summarizing steps as you go) and across many tasks (e.g., building a skill library).

Clearer boundaries with nearby ideas

- LLM memory is about the model’s internals (like longer attention spans). Agent memory is about a persistent, evolving store the agent deliberately uses.

- RAG is mainly “look it up in a static library.” Agent memory is “build your own library from experience—then look it up.”

- Context engineering is how you pack inputs into the model right now. Agent memory is what you’ve collected and refined over days or weeks.

Helpful resources and future directions

The paper lists benchmarks and frameworks to test and build agent memory. It also spotlights promising directions:

- Automation-oriented memory design: letting agents organize and clean their own memory.

- Integrating reinforcement learning: learning what to store, when to recall, and how to improve decisions.

- Multimodal memory: handling text, images, audio, and more together.

- Shared memory for multi-agent teams: letting agents collaborate through a common knowledge base.

- Trustworthiness: preventing wrong, outdated, or private data from polluting memory; making memory transparent and safe.

Why does this matter?

If we want AI agents that don’t forget important details, improve with practice, and act consistently over long periods, we need better memory. A solid memory system helps:

- Personal assistants remember your preferences and goals.

- Research agents build up reusable knowledge from past projects.

- Coding and planning agents reuse strategies instead of starting from scratch.

- Teams of agents share what they learn and coordinate better.

- All agents become more reliable, efficient, and safe over time.

Bottom line

Memory is a core skill for AI agents, not just a handy add-on. This paper gives a simple, unified way to think about memory’s shapes (forms), jobs (functions), and growth (dynamics). With clearer definitions, better tools, and attention to safety, we can build agents that learn continually and help more effectively in the real world.

Knowledge Gaps

Knowledge gaps, limitations, and open questions

The paper leaves the following issues unresolved; each point specifies a concrete gap that future work can directly address:

- Missing operational criteria to delineate “agent memory” from LLM memory, RAG, and context engineering: define a decision procedure (e.g., a checklist or flowchart) that classifies a system based on persistence, self-evolution, internal/external storage, and cross-task use, and validate it across representative systems.

- Lack of formal properties and guarantees for the memory lifecycle operators F (formation), E (evolution), and R (retrieval): develop mathematical characterizations (e.g., monotonicity, stability, boundedness, convergence) and derive algorithmic templates with provable performance or error bounds.

- Unspecified retrieval scheduling policies: formulate and evaluate strategies for when to retrieve (one-shot, intermittent, continuous), under compute/latency budgets, with adaptive triggers learned from context and uncertainty.

- No unified evaluation protocol for factual, experiential, and working memory: design controlled benchmarks that isolate each function (e.g., personalized knowledge retention, skill acquisition across tasks, context-window continuity) and standardized metrics for correctness, utility, and persistence.

- Absent causal attribution methods: build experimental designs that disentangle performance gains due to memory vs reasoning/planning/tool-use (e.g., paired ablations, counterfactual replay, causal graphs over agent components).

- Incomplete guidance on selecting memory forms for functions: provide evidence-backed decision rules that map token-level/parametric/latent memory to task profiles (environment type, horizon, resource constraints), including failure modes and trade-offs.

- Weakly specified conflict handling and consistency guarantees in memory evolution: create mechanisms to detect, resolve, and audit contradictions (e.g., versioning, confidence-weighted merges, provenance tracking) with measurable integrity metrics.

- No standard for memory correctness verification: introduce verification pipelines (e.g., schema checks, constraint validation, cross-source corroboration) and define acceptance/rejection criteria before integration into persistent memory.

- Limited treatment of safety, privacy, and governance: develop privacy-preserving memory formation (e.g., differential privacy or federated memory), access controls, consent management, and defenses against memory poisoning/injection, with compliance audits.

- Undefined policies for multi-agent shared memory: design concurrency control (locking, CRDTs, conflict-free merges), access control (roles/scopes), and consistency models (eventual vs strong), and quantify their impact on coordination efficiency and errors.

- Underexplored evaluation of multimodal memory: specify alignment and grounding across text, image, audio, and embodied state; develop cross-modal retrieval metrics (recall, latency, semantic coherence) and datasets that test temporal continuity.

- Unclear boundaries and methods for parametric memory updates: define safe “write” operations into model weights (fine-tuning, LoRA, continual learning) with rollback, audit trails, and safeguards against catastrophic forgetting and misgeneralization.

- Poorly characterized latent memory persistence across tasks: investigate whether and how hidden states or caches should persist beyond single tasks, their reproducibility implications, and risks (e.g., leakage, contamination), and standardize policies.

- Missing cost/utility models: quantify storage growth, indexing costs, retrieval latencies, and memory-induced token overhead; propose optimization frameworks to balance accuracy, user experience, and resource budgets.

- No standardized procedures for experience distillation into reusable tools: define criteria for when experiences become tools, their packaging (interfaces, tests), and evaluation of tool reusability and transfer across domains.

- Lack of metrics for agent identity continuity and personalization quality: create measures for preference retention, persona stability, drift detection, and user satisfaction under long-horizon interaction.

- Limited theoretical integration with cognitive science: provide a normative mapping from declarative/episodic/semantic and working memory to the survey’s forms–functions–dynamics, and test cognitive-inspired hypotheses in agent settings.

- Unspecified integration with reinforcement learning: formalize memory as part of the agent’s state in POMDPs, learn F/E/R via RL (credit assignment, off-policy corrections), and benchmark sample-efficiency gains and robustness.

- No co-design framework with context engineering: develop automated schedulers that jointly optimize memory retrieval, summarization, and context assembly under token limits, with guarantees on information retention and inference correctness.

- Scalability and systems questions remain open: study distributed memory stores, sharding/replication strategies for vector/graph indices, and their implications on throughput, consistency, and fault tolerance in real-time agent deployments.

- Reproducibility and comparability gaps: specify reference implementations of memory operators, logging/provenance standards, and evaluation harnesses to ensure fair comparisons across systems and tasks.

- Limited treatment of non-iid streaming and concept drift: develop adaptive memory policies (aging, reweighting, forgetting) and drift detection mechanisms with empirical validation in long-lived, evolving environments.

Glossary

- Action space: The set of possible actions an agent can take, often heterogeneous (language, tools, planning, environment control). "A distinguishing feature of LLM-based agents is the heterogeneity of their action space."

- Agent memory: A persistent, self-evolving system that stores, retrieves, and updates information to support agent cognition and behavior across tasks. "As research on agent memory rapidly expands and attracts unprecedented attention, the field has also become increasingly fragmented."

- Agentic RAG: A retrieval paradigm where an agent autonomously decides when and how to retrieve information as part of its decision loop. "Agentic RAG integrates retrieval into an autonomous decision-making loop, where an LLM agent actively controls when, how, and what to retrieve"

- Attention-sparsity mechanisms: Architectural techniques that reduce attention computation by focusing on salient parts of long sequences. "attention-sparsity mechanisms, or externalized KV-store expansions—are more appropriately classified as LLM memory rather than agent memory."

- Autoregressive LLM backbone: An LLM architecture that generates outputs token by token conditioned on prior context, underlying many agent actions. "These actions, though diverse in semantics, are unified by the fact that they are produced through an autoregressive LLM backbone conditioned on a contextual input."

- Context engineering: A design methodology that optimizes what information goes into the model’s context window under resource constraints. "Context engineering is a systematic design methodology that treats the context window as a constrained computational resource."

- Context window: The bounded input context an LLM can attend to during inference. "the context window as a constrained computational resource"

- Controlled stochastic transition model: A probabilistic model describing how environment states change given current state and action. "the environment evolves according to a controlled stochastic transition model"

- Evolution operator: The function that consolidates and integrates memory candidates into the existing memory base. "Formed memory candidates are integrated into the existing memory base through an evolution operator"

- Experiential memory: Memory of procedures and experiences that incrementally improves an agent’s problem-solving ability through execution. "experiential memory, which incrementally enhances the agent's problem-solving capabilities through task execution"

- Externalized KV-store expansions: Methods that extend or offload the transformer’s key–value states to external storage for longer effective memory. "attention-sparsity mechanisms, or externalized KV-store expansions—are more appropriately classified as LLM memory rather than agent memory."

- Factual memory: Stored factual knowledge gleaned from interactions with users and environments. "factual memory, which records knowledge from agents' interactions with users and the environment"

- Formation operator: The function that transforms interaction artifacts into candidate memory entries. "A formation operator [...] selectively transforms these artifacts into memory candidates"

- Graph RAG: Retrieval augmented generation that structures knowledge as graphs and uses graph traversal/ranking for context retrieval. "Graph RAG systems structure the knowledge base as a graph, ranging from knowledge graphs to concept graphs or document-entity relations"

- Graph-structured memory: Memory organized as nodes and edges capturing relations, updated as the agent accumulates insights. "graph-structured memory arises naturally when agents accumulate relational insights over time"

- Hierarchical Memory (3D): A multi-level memory architecture enabling vertical abstraction and cross-layer reasoning. "Hierarchical Memory (3D) employs multi-level forms, such as Pyramids or Multi-layer graphs, to facilitate vertical abstraction and cross-layer reasoning"

- KV compression: Techniques to compress the transformer’s key–value cache to fit within resource constraints. "Even KV compression and context-window management"

- Key–value (KV) cache: The transformer’s internal cache of keys and values used by attention to reuse past computations. "managing the transformer’s key–value (KV) cache"

- Knowledge graph: A structured representation of entities and relations used for retrieval and reasoning. "structured representations such as knowledge graphs and indexing strategies appear in both communities’ methods"

- Latent memory: Memory represented in hidden states or continuous latent structures that persist and evolve during interaction. "we identify three dominant realizations of agent memory, namely token-level, parametric, and latent memory."

- LLM memory: Memory mechanisms centered on the LLM’s internal state, caching, or architecture rather than external agent memory. "agent memory almost fully subsumes what has traditionally been referred to as LLM memory."

- LLM-based agent: An agent built around an LLM that interacts over time with capabilities like reasoning, planning, memory, and tool use. "LLM-based agents increasingly serve as the decision-making core of interactive systems that operate over time"

- Memory Evolution: The phase where memory is consolidated, deduplicated, and reorganized after formation. "Memory Evolution."

- Memory Formation: The phase where information artifacts are turned into candidate memory entries. "Memory Formation."

- Memory Retrieval: The process of fetching relevant memory given current observations and task specifications. "Memory Retrieval."

- Modular RAG: A pipeline-style RAG architecture with components for indexing, retrieval, reranking, filtering, and context assembly. "Modular RAG refers to architectures in which the retrieval pipeline is decomposed into clearly specified components, such as indexing, candidate retrieval, reranking, filtering, and context assembly"

- Multi-agent systems: Settings with multiple agents coordinating or acting sequentially within a shared environment. "In multi-agent systems, this abstraction allows for either sequential decision-making"

- Multi-hop QA: Question answering tasks requiring retrieval and reasoning over multiple linked pieces of information. "multi-hop QA settings where related context is progressively added"

- Parametric memory: Memory stored implicitly in model parameters and accessed during forward computation. "Parametric Memory (\Cref{ssec:parametric})"

- Planner--executor architectures: Agent designs that separate planning (decomposing tasks) from execution (acting on plans). "planner--executor architectures"

- Reinforcement learning: A learning paradigm where agents improve policies through interaction and reward signals. "have been largely internalized within model parameters through reinforcement learning"

- Retrieval operator: The function mapping memory state and current observation into a task-relevant memory signal. "where denotes a retrieval operator that constructs a task-aware query"

- Retrieval-augmented generation (RAG): Techniques that ground LLM outputs by retrieving external knowledge during inference. "retrieval-augmented generation (RAG)"

- Rolling summary technique: An ongoing summarization strategy that maintains a condensed, up-to-date working memory. "Similarly, the rolling summary technique serves as a shared foundational primitive"

- Semantic similarity matching: Retrieval based on semantic embeddings to find contextually similar memory items. "realized through vector search, semantic similarity matching, or rule-based filtering"

- Self-reflection: Methods in which an agent reviews and refines its own reasoning or outputs to improve performance. "Self-reflection and iterative refinement methods"

- Test-time scaling: Approaches that enhance performance at inference by adding computation or memory augmentation. "memory-augmented test-time scaling methods"

- Token-level Memory: Memory stored as explicit, discrete units (e.g., text chunks, visual tokens) that are externally accessible. "Token-level Memory (\Cref{ssec:token})"

- Token pruning: Techniques that remove low-importance tokens from context to fit within the window and reduce noise. "token pruning and importance-based selection methods"

- Vector database: A datastore of embeddings enabling efficient similarity search over memory or documents. "it may take the form of a text buffer, key--value store, vector database, graph structure, or any hybrid representation."

- Working memory: Short-term, task-specific memory that manages workspace information during an ongoing task. "we distinguish between factual memory, which records knowledge from agents' interactions with users and the environment; experiential memory, which incrementally enhances the agent's problem-solving capabilities through task execution; and working memory"

Practical Applications

Below is a concise mapping from the survey’s taxonomy and insights to practical, real-world applications. Each item names a specific use case, links it to sectors, outlines the tools/workflows implied by the paper’s “forms–functions–dynamics” view of memory, and notes assumptions or dependencies that affect feasibility.

Immediate Applications

- Memory-augmented customer support agents (software, retail, telecommunications)

- Use case: Persist user preferences, past issues, resolutions; reduce repeat questions; route tickets intelligently.

- Tools/workflows: Token-level factual memory (dialogue logs, rolling summaries), vector DB (e.g., FAISS/Milvus), graph memory for account relationships (Mem0, Zep, MemOS), retrieval operator R tuned via modular/graph RAG, context engineering for compression/summarization.

- Assumptions/dependencies: Consent and retention policies; PII handling; reliable memory formation F (good extraction templates); scalable retrieval latency; guardrails to prevent hallucinated recalls.

- Personalized consumer assistants (daily life, productivity apps)

- Use case: Recall routines, preferences, commitments; proactively remind, plan, and personalize recommendations.

- Tools/workflows: Token-level factual memory + working memory (rolling summaries), agentic RAG for calendar/docs, hierarchical memory schemas (e.g., pyramids for habits → tasks), context scheduling for window limits.

- Assumptions/dependencies: Local/on-device memory where possible; user controls (view/edit/delete); robust summarization to avoid drift; lightweight compute.

- Sales/CRM co-pilot with experiential memory (enterprise, finance)

- Use case: Distill successful playbooks from interactions; surface proven strategies for similar accounts; track multi-contact threads.

- Tools/workflows: Experiential memory pools (patterns, SOPs); formation F from outcomes and notes; evolution E for consolidation/conflict resolution; graph memory (account ↔ stakeholders ↔ events).

- Assumptions/dependencies: Good outcome labels; consistent logging; integration with CRM; governance to avoid bias (e.g., overfitting to loud accounts).

- Enterprise knowledge assistants beyond static RAG (software, legal, consulting)

- Use case: Move from document lookup to persistent, evolving knowledge bases that capture “how we do things” and corrections over time.

- Tools/workflows: Token-level factual + experiential memory layered over RAG; agentic RAG for iterative retrieval; evolution E to reconcile conflicts; context engineering for long workflow threads.

- Assumptions/dependencies: Document hygiene; access control; provenance tracking; scalable graph/store maintenance.

- Software engineering agents with working/factual memory (software)

- Use case: Track repo states, PR history, flaky tests, past fixes; reuse patches; accelerate bug triage and refactoring.

- Tools/workflows: Working memory (scratchpads, build logs), factual memory (issue histories), dynamic cheat-sheet/test-time scaling (Self-RAG-style), retrieval from code graphs; SWE-bench Verified-style evaluation.

- Assumptions/dependencies: Sandboxed execution; CI/CD integration; robust summarization of build/test artifacts; versioning-aware retrieval.

- Web research and browsing agents (media, competitive intelligence, academia)

- Use case: Multi-session research with memory of prior leads, hypotheses, and curated sources; reduce duplication and drift.

- Tools/workflows: Agentic RAG (Self-RAG, PlanRAG) + token-level factual memory; working memory for session threads; evolution E to consolidate reading notes; browse benchmarks (GAIA, BrowseComp).

- Assumptions/dependencies: Source credibility scoring; deduplication; bias mitigation; long-context management.

- Recommender systems with agent memory (media, e-commerce)

- Use case: Store interpretable preference traces; support transparent, controllable recommendations; adjust to shifts.

- Tools/workflows: Token-level factual memory of interactions; graph memory (users ↔ items ↔ attributes); evolution E for recency and drift; context engineering for session personalization.

- Assumptions/dependencies: Privacy, consent; drift detection; balanced exploration/exploitation; fairness monitoring.

- Healthcare triage/chat navigation with short- and long-term memory (healthcare, digital health)

- Use case: Remember non-diagnostic context across sessions (e.g., lifestyle patterns, medication reminders) and avoid re-asking sensitive questions.

- Tools/workflows: Token-level factual memory; strict retention/governance; role-based retrieval pipelines; rolling summaries to manage context window; audit logs.

- Assumptions/dependencies: HIPAA/GDPR compliance; clinician oversight; data minimization; robust deletion mechanisms.

- Education/tutoring agents (education)

- Use case: Maintain longitudinal learning profiles, misconceptions, goal progress; adapt exercises and explanations.

- Tools/workflows: Factual + experiential memory (skills mastered, failed attempts), hierarchical memory (concept → exercise → outcome), retrieval R per lesson, working memory for current session state.

- Assumptions/dependencies: Consent, parental controls; interoperability with LMS; calibration to avoid overfitting to past errors.

- Financial investigation and forensics (finance, compliance)

- Use case: Persistent case memory across data sources; multi-hop reasoning over transactions, entities, narratives; auditability.

- Tools/workflows: Graph memory (entities/transactions/events), agentic retrieval for multi-hop trails, evolution E for conflict resolution, context engineering for long chains.

- Assumptions/dependencies: Access to clean KYC/AML data; audit/provenance; human-in-the-loop for escalation; false-positive control.

- Multi-agent collaboration with shared memory (software, operations, creative)

- Use case: Planner–executor teams that share task states, constraints, and decisions; reduce coordination overhead.

- Tools/workflows: Shared token-level workspace memory (MemOS/Zep-style buffers, graphs); explicit communication actions; retrieval R scoped per role; conflict-handling policies in evolution E.

- Assumptions/dependencies: Protocols for write/read permissions; deadlock avoidance; traceability; cost control for multi-agent runs.

- Memory governance and evaluation workflows (industry/academia)

- Use case: Establish retention schedules, consent flows, audit trails; evaluate memory with LoCoMo, LongMemEval, StreamBench; monitor drift/poisoning.

- Tools/workflows: Memory lifecycle operators (F/E/R) instrumented for logging; benchmark-driven regression; context engineering policies for compression/summarization.

- Assumptions/dependencies: Organizational buy-in; cross-functional privacy/legal; reliable metrics; ongoing red-teaming.

Long-Term Applications

- Reinforcement learning deeply integrated with memory (software, robotics)

- Use case: Agents learn when/how to write, evolve, and retrieve memory; optimize policies that internalize experiential patterns.

- Tools/workflows: RL over memory operators F/E/R; memory-aware rewards; off-policy replay from memory stores; agent distillation of reusable tools/skills.

- Assumptions/dependencies: Stable training signals; safety constraints; scalable infra for long-horizon credit assignment.

- Parametric and latent memory for durable skills (software, on-device AI)

- Use case: Consolidate frequent experiences into model parameters or persistent latent states for faster, more reliable performance.

- Tools/workflows: Fine-tuning/LoRA for parametric memory; architectures with persistent latent states (e.g., Mamba/RWKV-like); test-time adaptation.

- Assumptions/dependencies: Data curation; catastrophic forgetting controls; hardware optimization; evaluation of real gains vs. external memory.

- Multimodal memory across time (healthcare, robotics, media)

- Use case: Persist video/audio/sensor traces with relational links to decisions/outcomes; enable temporal grounding and “episodic” recall for embodied tasks.

- Tools/workflows: Token-level multimodal memory (visual/audio tokens), graph memory linking modalities, compression/summarization for long streams, agentic RAG over multimodal indices.

- Assumptions/dependencies: Storage/compute costs; robust multimodal retrieval; privacy for recordings; synchronization across sensors.

- Fleet-level shared memory for enterprise agent ecosystems (software, operations)

- Use case: Organization-wide memory bus; cross-team skill sharing; reusable playbooks; collective situational awareness.

- Tools/workflows: Multi-tenant shared memory graphs; permissioned evolution E; provenance and lineage; MCP-like protocols for standardized access.

- Assumptions/dependencies: Access control and tenancy; conflict resolution; governance and safety; interoperability across toolchains.

- Trustworthy memory: provenance, auditing, and anti-poisoning (policy, compliance)

- Use case: Regulated memory pipelines with attestations; traceable “right to be forgotten”; defenses against memory poisoning and adversarial retrieval.

- Tools/workflows: Cryptographic signing of memory entries; audit logs; anomaly detection in evolution E; red-team simulations; certified deletion.

- Assumptions/dependencies: Regulatory standards; cross-vendor cooperation; transparency tooling; incident response capacity.

- Clinical decision support with longitudinal memory (healthcare)

- Use case: Memory-aware CDS that integrates longitudinal patterns and episodic context to support clinicians.

- Tools/workflows: Structured factual memory from EHR; safe retrieval scopes; clinician-feedback-driven evolution; hybrid parametric/external memory for frequent patterns.

- Assumptions/dependencies: Clinical validation; FDA/EMA approval pathways; bias/fairness; robust safety nets and liability frameworks.

- Autonomous lab/science agents with experiential memory (academia, R&D, biotech)

- Use case: Agents accumulate protocols, failures, and heuristics; plan experiments leveraging past “experience.”

- Tools/workflows: Experiential memory with outcome-linked artifacts; graph memory of hypotheses ↔ methods ↔ results; RL for experimental planning.

- Assumptions/dependencies: Accurate metadata; lab integration; reproducibility checks; oversight to prevent unsafe actions.

- City-scale digital twins and infrastructure planning agents (energy, transport, urban policy)

- Use case: Long-horizon memory of interventions, sensor trends, incidents; plan and simulate policies with historical context.

- Tools/workflows: Hierarchical memory (neighborhood → city); multimodal token streams; agentic retrieval for policy analysis; evolution E to reconcile conflicting sensors.

- Assumptions/dependencies: Data sharing agreements; robust simulation fidelity; privacy; political governance.

- Financial risk and AML agents with evolving memory (finance)

- Use case: Lifelong pattern discovery across jurisdictions; cross-entity linkage; scenario testing with persistent memory.

- Tools/workflows: Permissioned graph memory; provenance-aware retrieval; RL-driven prioritization; human-in-the-loop adjudication.

- Assumptions/dependencies: Legal barriers to data movement; explainability requirements; false positive management.

- Lifelong learner records and credentialing (education, policy)

- Use case: Portable learning memories; competency graphs; personalized curricula over decades.

- Tools/workflows: Graph memory of competencies; interoperable standards; evolution E for progression; verifiable credentials.

- Assumptions/dependencies: Policy/standards alignment; privacy-first architectures; stakeholder governance.

- Cross-application “Memory OS” platforms (software)

- Use case: Standardized memory bus that applications and agents plug into; unified F/E/R APIs; developer tooling and dashboards.

- Tools/workflows: MemOS-like abstractions; context engineering schedulers; schema registries; observability and governance layers.

- Assumptions/dependencies: Ecosystem adoption; backward compatibility; security; vendor-neutral specification.

- Safety and alignment for memory-centric agents (all sectors)

- Use case: Detect and mitigate harmful memory content; control spillover between tasks/users; align recall with norms and policies.

- Tools/workflows: Policy-as-code for retrieval; scoped memory views; red-teaming; counter-poisoning evolution strategies; consent-aware formation filters.

- Assumptions/dependencies: Clear organizational norms; labeling infrastructure; continuous monitoring; escalations and kill-switches.

Notes on Assumptions and Dependencies Across Applications

- Data governance: Consent, retention schedules, access control, “right to be forgotten,” auditability.

- Technical readiness: Reliable formation/extraction pipelines, retrieval quality, context management (compression, summarization), latency control.

- Safety and trust: Hallucination containment, memory poisoning defenses, conflict resolution, provenance tracking.

- Scalability: Storage and compute for long-horizon, multimodal, or multi-agent memory; cost-aware scheduling of F/E/R operations.

- Interoperability: Standards and protocols (e.g., MCP-like) for shared memory across tools and agents.

- Evaluation: Adoption of memory-specific benchmarks (LoCoMo, LongMemEval, StreamBench, SWE-bench Verified, GAIA, BrowseComp) to track real gains.

Collections

Sign up for free to add this paper to one or more collections.