AReaL-Hex: Accommodating Asynchronous RL Training over Heterogeneous GPUs (2511.00796v1)

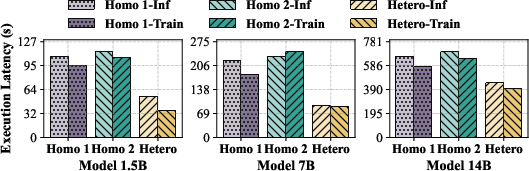

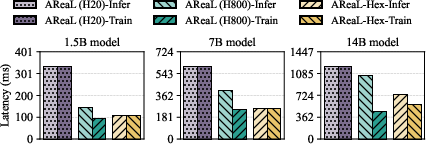

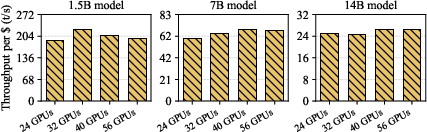

Abstract: Maximizing the training throughput and cost-efficiency of RL for LLMs is essential to democratizing this technique. One promising but challenging approach is to deploy the RL workflow over heterogeneous GPUs. Unlike conventional large-scale LLM pretraining, RL training generally decomposes into three coupled stages, i.e., rollout generation, reward computation, and policy/value updates, which exhibit markedly different compute intensities, memory footprints, and communication patterns. Recent research shows that fully asynchronous RL training can disaggregate these stages across disjoint hardware pools without sacrificing training stability, creating an opportunity for real-world heterogeneous deployment. To this end, we present AReaL-Hex, a heterogeneity-aware asynchronous RL training system that schedules rollout generation and policy model training over heterogeneous GPUs while enforcing data staleness bounds. Concretely, we use a two-phase scheduler: (i) a constrained search with MILP that selects per-stage parallelization strategies and workload assignments given a resource budget, and (ii) a graph-partitioning step that allocates heterogeneous GPUs and interconnects to maximize end-to-end throughput. Built atop a fully asynchronous RL architecture, AReaL-Hex maps HBM-I/O-bound generation and compute-bound optimization to more cost-efficient resources and balances their producer-consumer interactions to avoid both idleness and stale rollout trajectories. On a mathematical reasoning task with various model scales (1.5B, 7B, and 14B), compared to homogeneous deployments of state-of-the-art asynchronous RL systems: (i) under the same total budget, AReaL-Hex delivers up to 1.50x higher training throughput; (ii) at the same training throughput, AReaL-Hex reduces training cost by up to 1.46x.
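
To make the producer-consumer interaction with a staleness bound concrete, here is a minimal Python sketch. The abstract does not specify how AReaL-Hex enforces the bound, so everything below is an assumption for illustration: the names `Rollout`, `StalenessBoundedBuffer`, and `max_staleness` are hypothetical, and dropping over-stale trajectories at consumption time is just one possible policy (a real system might instead throttle generation).

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Rollout:
    # Version of the policy weights that generated this trajectory (hypothetical field).
    policy_version: int
    tokens: list = field(default_factory=list)


class StalenessBoundedBuffer:
    """Toy FIFO buffer between rollout workers (producers) and the trainer (consumer)."""

    def __init__(self, max_staleness: int):
        # Largest allowed gap between the trainer's version and a rollout's version.
        self.max_staleness = max_staleness
        self._queue = deque()

    def put(self, rollout: Rollout) -> None:
        self._queue.append(rollout)

    def get_batch(self, batch_size: int, current_version: int):
        """Pop up to batch_size rollouts, discarding those beyond the staleness bound."""
        batch, dropped = [], 0
        while self._queue and len(batch) < batch_size:
            rollout = self._queue.popleft()
            if current_version - rollout.policy_version <= self.max_staleness:
                batch.append(rollout)
            else:
                dropped += 1
        return batch, dropped


# Rollouts generated under policy versions 0, 1, 3, and 4.
buf = StalenessBoundedBuffer(max_staleness=2)
for version in [0, 1, 3, 4]:
    buf.put(Rollout(policy_version=version))

# The trainer is at version 4, so only versions 2 and newer are admissible.
batch, dropped = buf.get_batch(batch_size=4, current_version=4)
print([r.policy_version for r in batch], dropped)
```

In this toy setting, a generation pool that is too slow leaves the trainer idle, while one that is too fast fills the buffer with trajectories that age past the bound, which is the balance the scheduler described in the abstract aims to strike.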
