- The paper provides a unified taxonomy of hardware platforms, control paradigms, and learning-based models for robot manipulation.

- It extends the taxonomy of high-level planning to cover LLM- and MLLM-based planners, including code generation for precise task control.

- It identifies data bottlenecks and generalization challenges, offering strategies to bridge sim-to-real gaps in diverse applications.

Towards a Unified Understanding of Robot Manipulation: A Comprehensive Survey

Introduction and Scope

This survey provides a broad, structured synthesis of the field of robot manipulation. It places particular emphasis on learning-based control, the integration of multimodal foundation models, and the persistent bottlenecks in data and generalization. The work systematically organizes the landscape of robotic manipulation, spanning hardware, control paradigms, benchmarks, datasets, manipulation tasks, methodological taxonomies, and real-world applications. Figure 1 gives an overview of this organization.

Figure 1: The survey's structure, highlighting the progression from foundational background to methods, bottlenecks, and applications.

Hardware, Benchmarks, and Datasets

The survey begins by categorizing the hardware platforms that underpin manipulation research, including single-arm, bimanual, dexterous, soft, mobile, quadrupedal, and humanoid robots. The diversity of platforms is critical for evaluating generalization and cross-embodiment transfer.

Figure 2: Taxonomy of hardware platforms used in manipulation research.

Benchmarks and datasets are comprehensively reviewed, with a focus on their role in standardizing evaluation and enabling reproducibility. The survey distinguishes between single-embodiment and cross-embodiment simulators, and highlights the evolution of datasets from small, manually labeled collections to large-scale, multimodal, and language-conditioned corpora. The increasing prevalence of trajectory, affordance, and embodied QA datasets is noted as a driver for more generalizable and semantically grounded policies.

Figure 3: Overview of simulators and benchmarks, categorized by manipulation type and embodiment.

Manipulation Task Taxonomy

The survey introduces a unified taxonomy of manipulation tasks, encompassing basic, dexterous, deformable, mobile, quadrupedal, and humanoid manipulation. For each category, the survey contrasts non-learning-based (analytical, optimization, sampling) and learning-based (RL, IL, VLA) approaches, and identifies the unique challenges and methodological trends in each domain.

A detailed analysis is provided for grasping, with a taxonomy that spans vision-only, language-driven, and multimodal approaches. The survey highlights the shift from 2D rectangle-based to 6-DoF and dexterous grasp representations, the integration of language for instruction-driven grasping, and the emergence of foundation models for end-to-end grasp prediction.
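
The sketch below makes the shift in grasp representations concrete. It contrasts a planar rectangle grasp with a 6-DoF grasp and lifts one into the other. It is a minimal illustration, not a method from the survey. The class and field names are hypothetical. The conversion assumes a top-down orthographic camera whose image axes align with the world x/y axes, a known grasp height, and a fixed metres-per-pixel scale.

```python
import math
from dataclasses import dataclass
from typing import Tuple


@dataclass
class RectangleGrasp:
    """Planar grasp in image space: centre (u, v) in pixels, in-plane
    angle theta in radians, and jaw opening in pixels."""
    u: float
    v: float
    theta: float
    width_px: float


@dataclass
class Grasp6DoF:
    """Full 6-DoF grasp: position in metres, orientation as a unit
    quaternion (w, x, y, z), and gripper opening in metres."""
    position: Tuple[float, float, float]
    quaternion: Tuple[float, float, float, float]
    width_m: float


def lift_planar_grasp(g: RectangleGrasp, z_m: float,
                      metres_per_pixel: float) -> Grasp6DoF:
    """Lift a top-down rectangle grasp to a 6-DoF grasp.

    Hypothetical setup: an orthographic top-down camera whose image axes
    coincide with world x/y, and the identity orientation is taken to mean
    a straight-down approach, so the only free rotation is a yaw of theta
    about the vertical z axis. z_m is the grasp height, assumed known
    (for example, read from a depth image).
    """
    x = g.u * metres_per_pixel
    y = g.v * metres_per_pixel
    half = g.theta / 2.0
    # Unit quaternion (w, x, y, z) for a rotation of theta about z.
    quat = (math.cos(half), 0.0, 0.0, math.sin(half))
    return Grasp6DoF((x, y, z_m), quat, g.width_px * metres_per_pixel)


grasp = lift_planar_grasp(RectangleGrasp(u=320, v=240, theta=math.pi / 4, width_px=60),
                          z_m=0.05, metres_per_pixel=0.0005)
print(grasp)
```

Under these assumptions, a planar grasp can only ever produce a yaw about the vertical axis. The 6-DoF form drops that restriction, which is what approaching an object from the side requires.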

Figure 4: Taxonomy of grasping methods, illustrating the progression from vision-only to language-driven and foundation model-based approaches.

For basic manipulation, the survey presents a method taxonomy that is extensible to other manipulation tasks.

Figure 5: Unified method taxonomy for basic manipulation, generalizable to other task categories.

High-Level Planning: LLMs, MLLMs, and Structured Reasoning

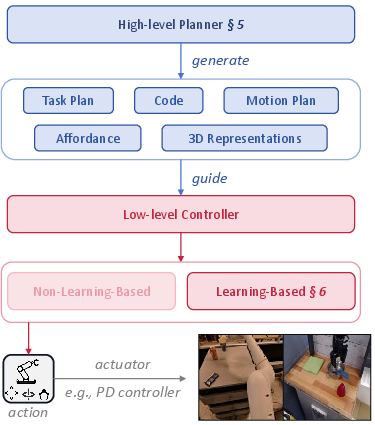

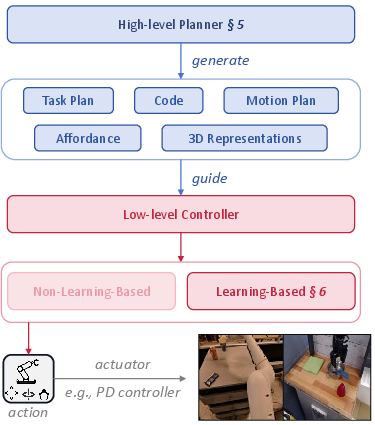

A central contribution of the survey is the expansion of high-level planning to encompass LLM-based and MLLM-based task planning, code generation, motion planning, affordance learning, and 3D scene representations. The taxonomy clarifies the roles of LLMs and MLLMs in open-vocabulary task decomposition, skill sequencing, and closed-loop feedback, and details the integration of code generation for fine-grained control.
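
The sketch below gives a rough illustration of code generation for control. It executes a program of the kind an LLM might emit when given a short instruction and a list of primitive skills. The skill names (`pick`, `place`), the object names, and the generated text are all hypothetical. The hard-coded string stands in for an actual model call.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical primitive skills exposed to the language model. In a real
# system these would call perception and motion-planning modules; here
# they only record each call so the generated program can be inspected.
trace: List[Tuple[str, ...]] = []


def pick(obj: str) -> None:
    trace.append(("pick", obj))


def place(obj: str, target: str) -> None:
    trace.append(("place", obj, target))


# Stand-in for text an LLM might return when prompted with the
# instruction "put every block in the bowl" and the skill signatures.
GENERATED_PROGRAM = """
for block in ["red_block", "blue_block"]:
    pick(block)
    place(block, "bowl")
"""


def run_generated(program: str, skills: Dict[str, Callable[..., None]]) -> None:
    # Expose only the whitelisted skills and no builtins. This limits
    # accidental misuse but is NOT a security sandbox.
    exec(program, {"__builtins__": {}, **skills})


run_generated(GENERATED_PROGRAM, {"pick": pick, "place": place})
print(trace)
```

Restricting the execution namespace to whitelisted skills keeps the generated program tied to what the robot can actually do. However, `exec` with empty builtins is not a security boundary, so a deployed system would still need proper sandboxing and validation.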

Figure 6: Taxonomy of high-level planners, including LLM/MLLM-based planning, code generation, motion planning, affordance learning, and 3D scene representations.

The survey also emphasizes the increasing importance of affordance learning and 3D representations as mid-level planning modules, bridging perception and action through structured proposals and scene graphs.
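
A 3D scene graph can be sketched as object nodes with attributes, plus directed spatial relations that a planner can query before choosing what to manipulate. The class, relation names, and attributes below are hypothetical. In practice the graph would be populated from perception rather than by hand.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class SceneGraph:
    """Minimal scene graph: object nodes with attributes and directed
    relations stored as (subject, relation, object) triples."""
    nodes: Dict[str, Dict[str, object]] = field(default_factory=dict)
    edges: Set[Tuple[str, str, str]] = field(default_factory=set)

    def add_object(self, name: str, **attrs: object) -> None:
        self.nodes[name] = dict(attrs)

    def relate(self, subj: str, rel: str, obj: str) -> None:
        # Refuse dangling edges so queries only return known objects.
        if subj not in self.nodes or obj not in self.nodes:
            raise KeyError("both endpoints must be added before relating them")
        self.edges.add((subj, rel, obj))

    def query(self, rel: str, obj: str) -> List[str]:
        """Return every subject s with (s, rel, obj) in the graph, sorted."""
        return sorted(s for s, r, o in self.edges if r == rel and o == obj)


g = SceneGraph()
g.add_object("table", graspable=False)
g.add_object("mug", graspable=True, position=(0.4, 0.1, 0.8))
g.add_object("apple", graspable=True)
g.add_object("lamp", graspable=False)
for item in ("mug", "apple", "lamp"):
    g.relate(item, "on", "table")

# A planner-style query: graspable objects currently on the table.
candidates = [n for n in g.query("on", "table") if g.nodes[n].get("graspable")]
print(candidates)  # ['apple', 'mug']
```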

Low-Level Learning-Based Control: Strategies and Architectures

The survey proposes a novel taxonomy for low-level learning-based control, decomposing it into learning strategy (RL, IL, RL+IL), input modeling (modality selection and encoding), latent learning (representation and action abstraction), and policy learning (decoding to executable actions).
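
The four-part decomposition can be read as a pipeline. The learning strategy (RL, IL, or both) trains the components, while input modeling, latent learning, and policy learning define the forward pass. The sketch below shows only that forward pass, with toy functions in place of trained networks. The `ModularPolicy` class and its field names are hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Features = List[float]


@dataclass
class ModularPolicy:
    """Forward pass of a decomposed learning-based controller."""
    # Input modeling: one encoder per modality (e.g. "image", "proprio").
    encoders: Dict[str, Callable[[Features], Features]]
    # Latent learning: map the fused features to a latent representation.
    to_latent: Callable[[Features], Features]
    # Policy learning: decode the latent into an executable action.
    decoder: Callable[[Features], Features]

    def act(self, obs: Dict[str, Features]) -> Features:
        fused: Features = []
        for name in sorted(self.encoders):  # fixed modality order
            fused.extend(self.encoders[name](obs[name]))
        return self.decoder(self.to_latent(fused))


policy = ModularPolicy(
    encoders={
        "image": lambda x: [sum(x) / len(x)],  # toy encoder: mean intensity
        "proprio": lambda x: list(x),          # pass joint readings through
    },
    to_latent=lambda f: [2.0 * v for v in f],  # toy latent projection
    decoder=lambda z: [sum(z)],                # toy 1-D action head
)
action = policy.act({"image": [1.0, 2.0, 3.0], "proprio": [0.5, 0.5]})
print(action)  # [6.0]
```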

Auxiliary tasks, such as world modeling, video prediction, contrastive learning, and goal extraction, are highlighted as critical for improving sample efficiency and generalization.
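
Contrastive auxiliary objectives are often instantiated with an InfoNCE-style loss. Each anchor embedding (for example, of an observation) must identify its matching positive (for example, a paired frame or language embedding) among the other items in the batch. The sketch below is a plain-Python version for illustration only. The survey does not prescribe this particular loss, and the embeddings are assumed to be L2-normalized.

```python
import math
from typing import List

Vector = List[float]


def info_nce(anchors: List[Vector], positives: List[Vector],
             temperature: float = 0.1) -> float:
    """InfoNCE loss: for anchor i, positives[i] is the match and the other
    positives in the batch serve as negatives."""
    n = len(anchors)
    total = 0.0
    for i in range(n):
        # Dot-product similarity of anchor i to every positive, scaled.
        logits = [sum(a * b for a, b in zip(anchors[i], positives[j])) / temperature
                  for j in range(n)]
        m = max(logits)  # subtract the max for a stable log-sum-exp
        log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_sum_exp - logits[i]  # equals -log softmax(logits)[i]
    return total / n


aligned = info_nce([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = info_nce([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned, swapped)
```

Correctly paired embeddings give a loss near zero. Swapped pairs give a large loss, and that gap is the signal the encoder is trained on.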

Figure 7: Taxonomy of auxiliary tasks for learning-based control, including world models, video prediction, contrastive learning, and goal extraction.

Latent learning is dissected into pretrained latent representations and latent action learning, with dual-system architectures and quantized/continuous latent spaces discussed as mechanisms for scalable and transferable control.
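
A quantized latent space can be illustrated by nearest-neighbour lookup into a codebook, as in VQ-VAE-style models. Each continuous latent is replaced by its closest code vector, which yields a discrete token that a downstream policy can predict. The function below is a minimal sketch with a hand-written codebook. Learned codebooks and the gradient tricks used to train them (such as the straight-through estimator) are omitted.

```python
from typing import List, Tuple

Vector = List[float]


def quantize(z: Vector, codebook: List[Vector]) -> Tuple[int, Vector]:
    """Map a continuous latent z to its nearest codebook entry under
    squared Euclidean distance. Returns (index, code vector)."""
    if not codebook:
        raise ValueError("codebook must not be empty")
    best_idx, best_dist = 0, float("inf")
    for idx, code in enumerate(codebook):
        dist = sum((a - b) ** 2 for a, b in zip(z, code))
        if dist < best_dist:
            best_idx, best_dist = idx, dist
    return best_idx, codebook[best_idx]


codebook = [[0.0, 0.0], [1.0, 1.0], [-1.0, 2.0]]
token, code = quantize([0.9, 1.2], codebook)
print(token, code)  # 1 [1.0, 1.0]
```

A continuous latent space would skip the lookup and pass `z` through directly. The discrete index is what makes token-style prediction of actions possible.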

Figure 8: Overview of latent learning paradigms, including pretrained encoders and dual-system latent action architectures.

Bottlenecks: Data and Generalization

The survey identifies data collection/utilization and generalization as the two central bottlenecks in robot manipulation. It provides a taxonomy of data collection paradigms (teleoperation, human-in-the-loop, synthetic, crowdsourcing) and utilization strategies (selection, retrieval, augmentation, expansion, reweighting).
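
Retrieval-based data utilization can be sketched as nearest-neighbour search over embeddings of stored demonstrations, keeping only the most relevant ones for a new task. The embedding values and demonstration ids below are hypothetical. A real pipeline would obtain embeddings from a pretrained encoder and would typically use an approximate nearest-neighbour index at scale.

```python
import math
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero norm."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm > 0 else 0.0


def retrieve(query: Vector, library: List[Tuple[str, Vector]], k: int) -> List[str]:
    """Return the ids of the k library entries most similar to the query."""
    ranked = sorted(library, key=lambda item: cosine(query, item[1]), reverse=True)
    return [item_id for item_id, _ in ranked[:k]]


library = [
    ("demo_pour", [0.9, 0.1, 0.0]),
    ("demo_stack", [0.0, 1.0, 0.0]),
    ("demo_wipe", [0.1, 0.0, 1.0]),
]
print(retrieve([1.0, 0.2, 0.0], library, k=2))
```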

Figure 9: Overview of data collection paradigms, including replica arms, XR interfaces, human-in-the-loop, and synthetic generation.

Generalization is categorized into environment, task, and cross-embodiment dimensions. The survey details strategies for sim2real transfer, SE(3)/Sim(3)-equivariance, long-horizon and compositional generalization, few-shot/meta/continual learning, and latent alignment for cross-embodiment transfer.
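
Equivariance means that transforming the input transforms the output in the same way: for a transform g, f(g(x)) = g(f(x)). The sketch below checks this numerically for a toy policy that predicts the centroid of a point cloud. That policy is equivariant to SE(3) transforms and also to Sim(3) transforms (rotation, translation, and uniform scale). The policy and helper names are hypothetical stand-ins, not models from the survey.

```python
import math
from typing import Callable, List, Sequence

Point = List[float]
Matrix = Sequence[Sequence[float]]


def rot_z(angle: float) -> List[List[float]]:
    """Rotation matrix for a rotation of `angle` radians about z."""
    c, s = math.cos(angle), math.sin(angle)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def apply(p: Sequence[float], R: Matrix, t: Sequence[float], s: float = 1.0) -> Point:
    """Similarity transform p -> s * R p + t (s = 1 gives an SE(3) transform)."""
    return [s * sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3)]


def centroid_policy(cloud: List[Point]) -> Point:
    """Toy stand-in policy: predict the cloud centroid as the target point."""
    n = len(cloud)
    return [sum(p[i] for p in cloud) / n for i in range(3)]


def is_equivariant(policy: Callable[[List[Point]], Point], cloud: List[Point],
                   R: Matrix, t: Sequence[float], s: float = 1.0,
                   tol: float = 1e-9) -> bool:
    """Check policy(g(cloud)) == g(policy(cloud)) for a single transform g."""
    lhs = policy([apply(p, R, t, s) for p in cloud])
    rhs = apply(policy(cloud), R, t, s)
    return all(abs(a - b) < tol for a, b in zip(lhs, rhs))


cloud = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 3.0]]
print(is_equivariant(centroid_policy, cloud, rot_z(0.7), [0.5, -1.0, 2.0], s=2.0))
```

Passing the check for a single transform does not prove equivariance. Equivariant architectures instead build the property in by construction, so it holds for every transform.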

Figure 10: Overview of generalization strategies, spanning environment, task, and cross-embodiment axes.

Applications and Future Directions

The survey concludes with an extensive review of real-world applications, including household assistance, agriculture, industry, AI4Science, art, and sports. It highlights the increasing deployment of learning-based manipulation in unstructured and dynamic environments, while noting the persistent reliance on rule-based systems in safety-critical industrial contexts.

Figure 11: Overview of robotic manipulation applications across diverse domains.

The final section outlines four core challenges for the field: (1) building a general-purpose "robot brain" for multi-embodiment control and lifelong learning, (2) overcoming the data bottleneck and sim-to-real gap, (3) enabling deep multimodal physical interaction, and (4) ensuring safety and collaboration in human-robot and multi-robot settings. The survey advocates for hybrid paradigms that integrate learning-based adaptability with the robustness of classical control, and emphasizes the need for scalable, standardized data and simulation infrastructure.
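
One common way to realize such a hybrid is residual policy learning. A classical controller supplies a base command, and a learned policy adds a bounded correction on top. The sketch below assumes a proportional controller and uses a hypothetical `residual` function in place of a trained network. The clipping bound is an illustrative safety choice, not a value from the survey.

```python
from typing import Callable, List

Vector = List[float]


def p_controller(state: Vector, goal: Vector, gain: float = 1.0) -> Vector:
    """Classical proportional controller toward a goal position."""
    return [gain * (g - s) for s, g in zip(state, goal)]


def hybrid_action(state: Vector, goal: Vector,
                  residual: Callable[[Vector], Vector],
                  residual_limit: float = 0.1) -> Vector:
    """Classical command plus a learned correction. The correction is
    clipped to +/- residual_limit so the classical term stays dominant."""
    base = p_controller(state, goal)
    delta = [max(-residual_limit, min(residual_limit, d)) for d in residual(state)]
    return [b + d for b, d in zip(base, delta)]


# Hypothetical learned residual: here just a fixed correction.
action = hybrid_action([0.0, 0.0], [1.0, 0.5], lambda s: [0.3, -0.05])
print(action)
```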

Conclusion

This survey delivers a comprehensive and systematic reference for the field of robot manipulation, integrating foundational background, methodological taxonomies, bottleneck analyses, and application scenarios. Its unified perspective and detailed organization offer a roadmap for newcomers and a structured index for experienced researchers. By identifying persistent bottlenecks and articulating future research directions, the survey can guide the development of more general, robust, and safe embodied intelligence systems.