- The paper introduces an LLM-based framework that integrates Freudian and Jungian models with MBTI personality coding to simulate layered artificial consciousness.

- The methodology combines three evaluation methods (quantitative surveys, automated LLM judgments, and expert reviews) to assess reasoning plausibility.

- Results reveal high overall plausibility yet expose challenges in agent differentiation and style, prompting recommendations for multi-agent architectures.

Humanoid Artificial Consciousness via LLMs: Psychoanalytic and Personality-Theoretic Integration

Introduction

This paper presents a framework for modeling humanoid artificial consciousness by integrating psychoanalytic theory and personality typology into LLM architectures. The approach synthesizes Freudian and Jungian models of consciousness with the Myers-Briggs Type Indicator (MBTI) and Maslow's hierarchy of needs, operationalizing these constructs within LLM-driven agents. The system is evaluated with three complementary methods (quantitative surveys, automated LLM-based judgment, and expert qualitative review) to determine the plausibility and differentiation of simulated consciousness across diverse scenarios.

Theoretical Foundations and Model Architecture

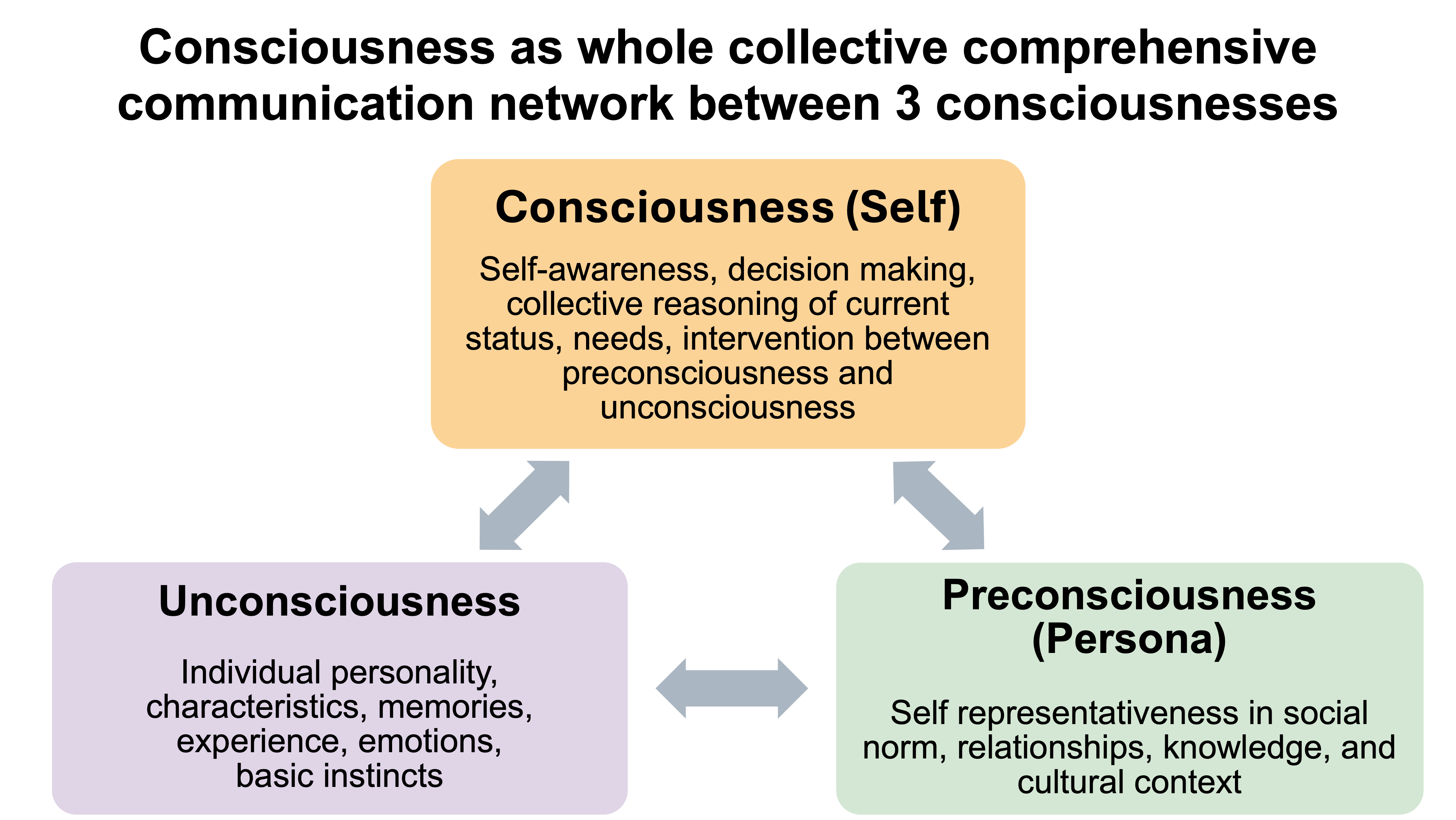

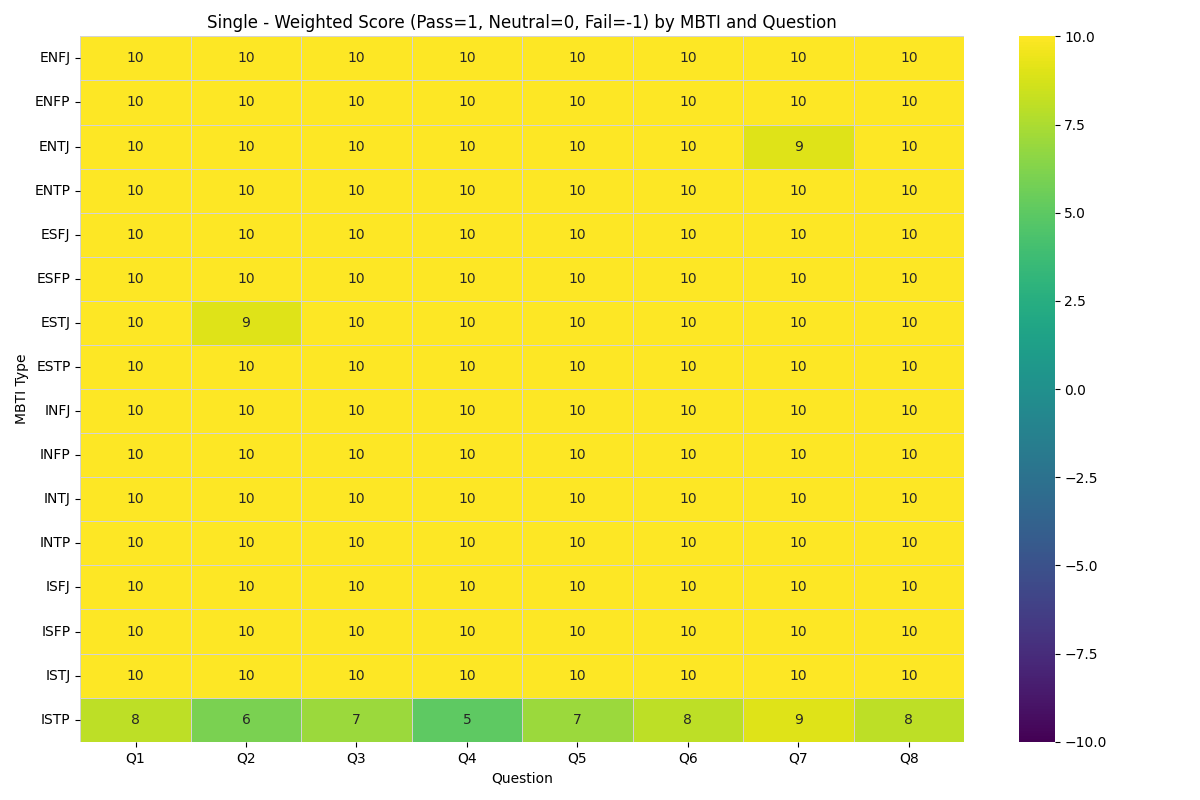

The authors ground their model in classical psychoanalytic theory, segmenting consciousness into self-awareness, preconsciousness, and unconsciousness, each with distinct functional roles. This tripartite structure is instantiated as a communication network, enabling interconscious reasoning and decision-making.

Figure 1: Design of consciousness as a whole collective comprehensive communication network between consciousnesses.
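One way to picture the communication network is as a round-robin exchange in which each layer speaks in turn and can see everything said so far. The sketch below is only an illustration under that assumption: the layer names come from the summary, while the turn order, the `respond` callback, and the `echo_respond` stub (a stand-in for an LLM call) are hypothetical and not taken from the paper.

```python
from typing import Callable, List, Tuple

# Layer names follow the tripartite structure described above.
# The speaking order is an assumption made for this sketch.
LAYERS = ["self-awareness", "preconsciousness", "unconsciousness"]

Message = Tuple[str, str]  # (layer name, utterance)


def run_interconscious_dialogue(
    situation: str,
    respond: Callable[[str, str, List[Message]], str],
    rounds: int = 2,
) -> List[Message]:
    """Let each layer speak once per round, seeing the transcript so far."""
    transcript: List[Message] = []
    for _ in range(rounds):
        for layer in LAYERS:
            utterance = respond(layer, situation, transcript)
            transcript.append((layer, utterance))
    return transcript


def echo_respond(layer: str, situation: str, transcript: List[Message]) -> str:
    """Hypothetical stand-in for an LLM call; it only labels the turn."""
    return f"[{layer}] turn {len(transcript) + 1} on: {situation}"


if __name__ == "__main__":
    for layer, text in run_interconscious_dialogue("a friend cancels plans", echo_respond):
        print(layer, "->", text)
```

Because the full transcript is returned, the internal exchange stays inspectable, which is the property the framework relies on for interpretability.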

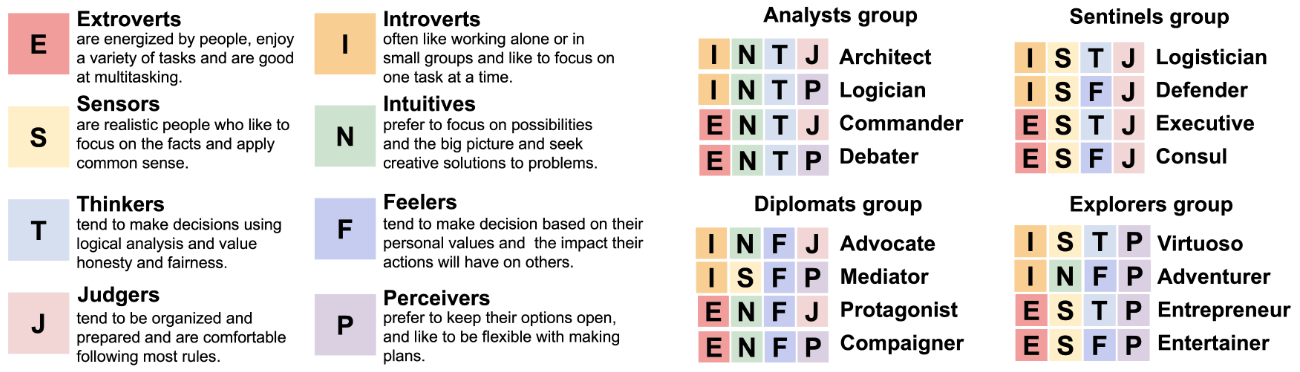

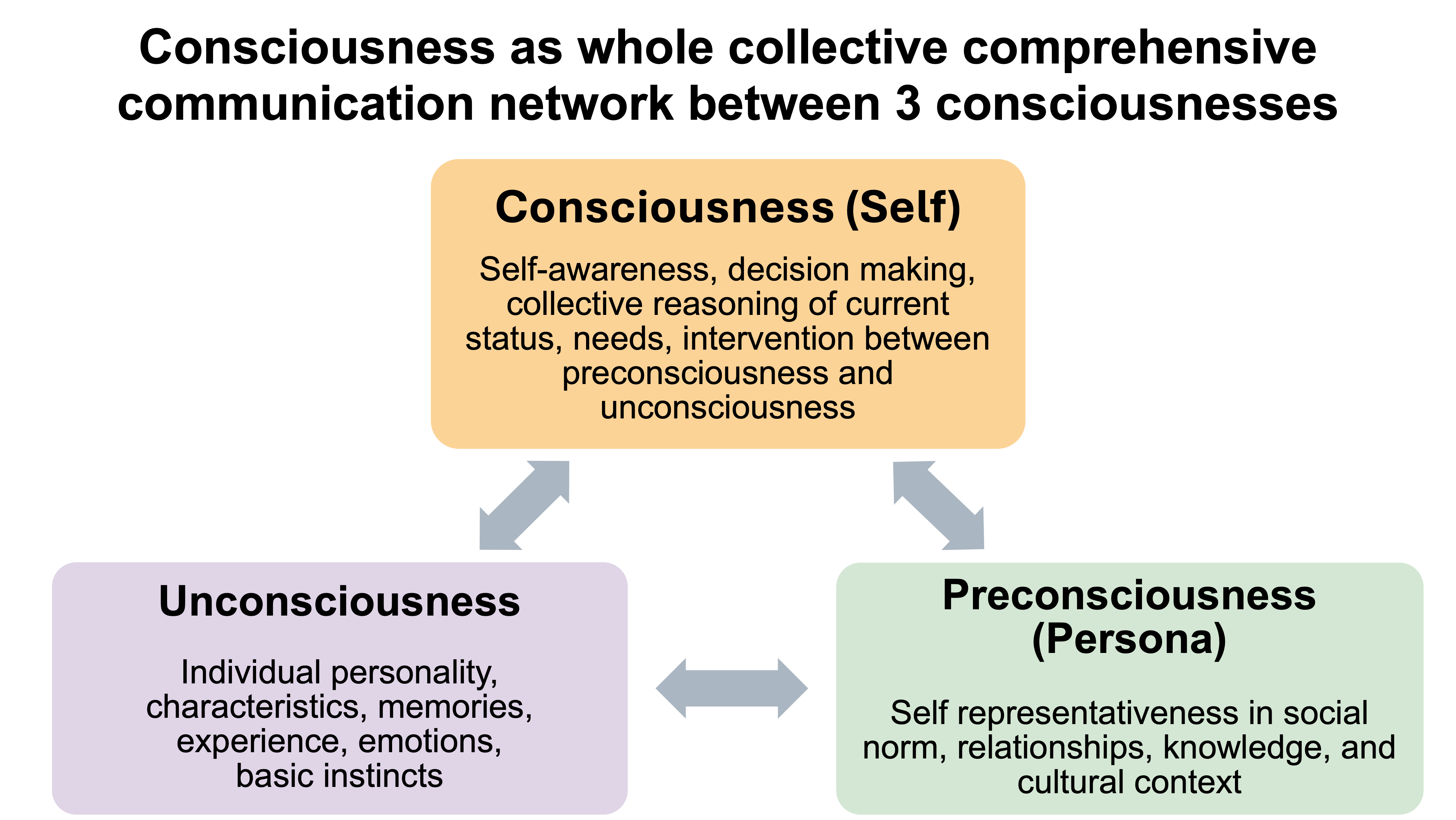

Personality is encoded using the MBTI, which yields 16 archetypal profiles. Each agent is additionally endowed with individualized needs (per Maslow), status variables, and memory systems. The four MBTI dichotomies (extraversion/introversion, sensing/intuition, thinking/feeling, judging/perceiving) are explicitly modeled to drive agent differentiation.

Figure 2: Four pairs of dichotomous elements representing MBTI dimensions.
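The count of 16 profiles follows directly from choosing one pole from each of the four dichotomies (2 × 2 × 2 × 2). A minimal sketch of that enumeration is shown here; the names `DICHOTOMIES`, `MBTI_TYPES`, and `describe` are illustrative and not part of the paper.

```python
from itertools import product
from typing import List

# One (letter, letter) pair per MBTI dichotomy, in the conventional order.
DICHOTOMIES = [("E", "I"), ("S", "N"), ("T", "F"), ("J", "P")]

# Cartesian product of the four pairs gives every four-letter type code.
MBTI_TYPES: List[str] = ["".join(letters) for letters in product(*DICHOTOMIES)]

POLE_NAMES = {
    "E": "extraversion", "I": "introversion",
    "S": "sensing", "N": "intuition",
    "T": "thinking", "F": "feeling",
    "J": "judging", "P": "perceiving",
}


def describe(mbti: str) -> List[str]:
    """Expand a type code such as 'ISTP' into its four pole names."""
    if mbti not in MBTI_TYPES:
        raise ValueError(f"not a valid MBTI type: {mbti!r}")
    return [POLE_NAMES[letter] for letter in mbti]


if __name__ == "__main__":
    print(len(MBTI_TYPES), MBTI_TYPES)
    print(describe("ISTP"))
```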

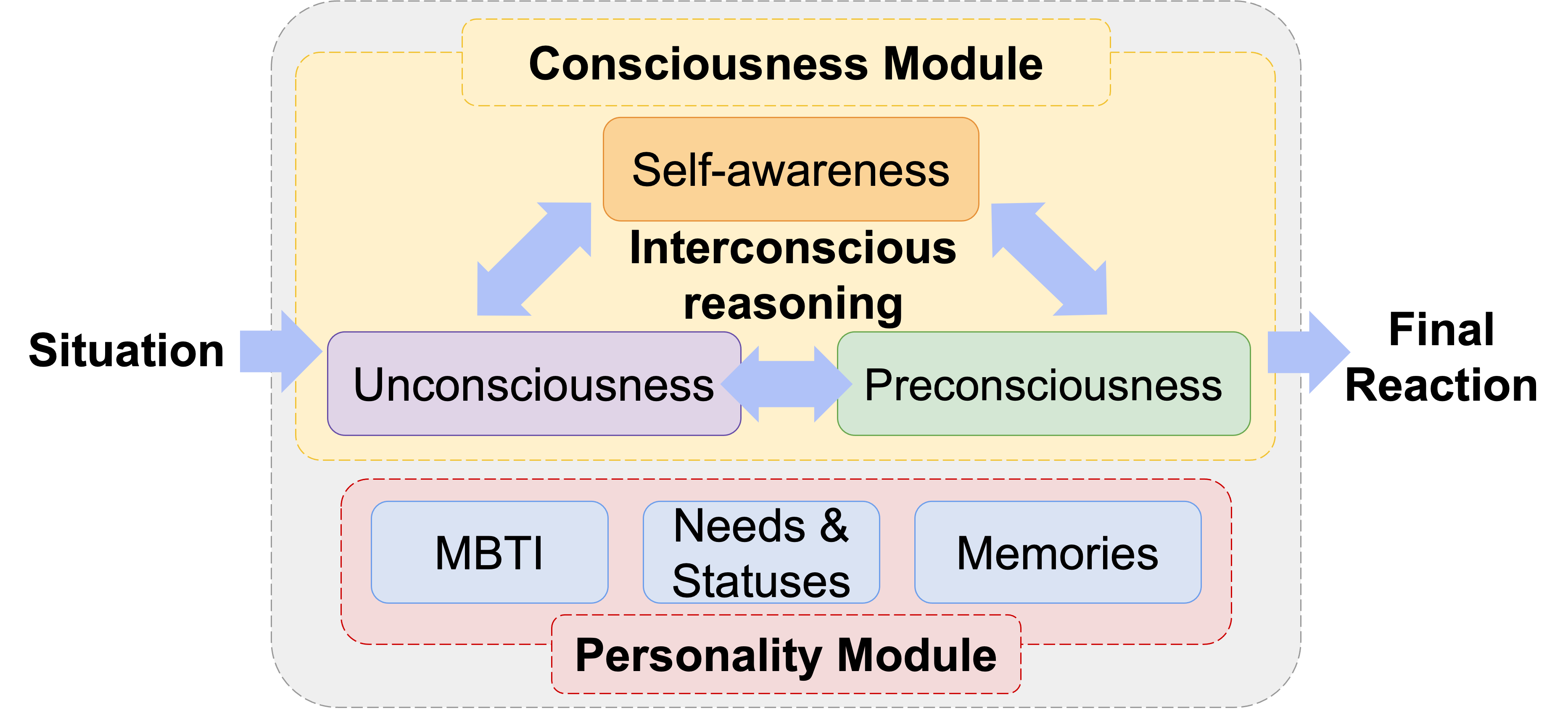

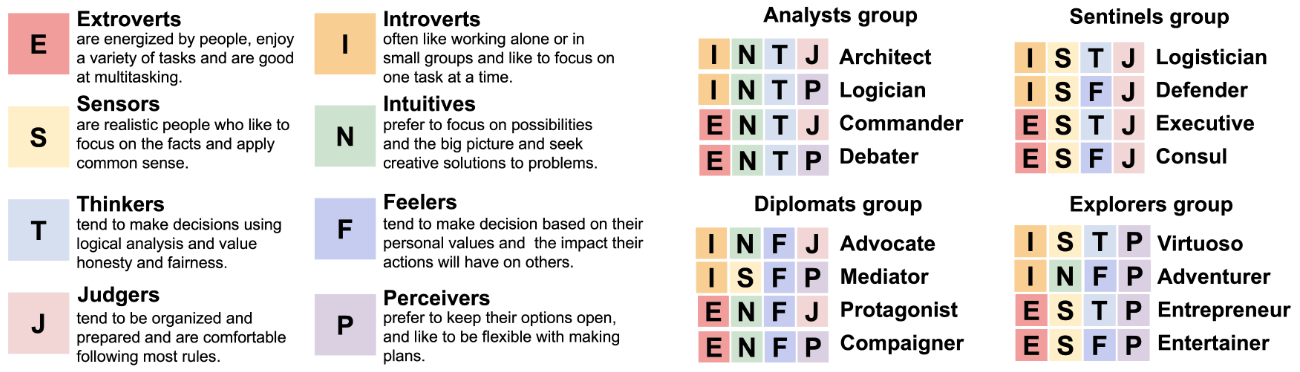

The architecture comprises two principal modules: the consciousness module (handling interconscious dialogue and reasoning) and the personality module (encoding MBTI type, needs, and memory). The system leverages prompt engineering, chain-of-thought reasoning, and few-shot prompting to elicit nuanced, context-sensitive outputs from the underlying LLM (primarily ChatGPT, with jailbreak techniques to bypass safety filters for emotional realism).

Figure 3: Conscious and personality modules integrating psychoanalysis theory, MBTI, needs and memories.
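To make the division of labor between the two modules concrete, the sketch below shows how a personality record might be rendered into a prompt for a single consciousness layer. It is an assumption-laden illustration: the `Persona` fields, the `build_layer_prompt` function, and all prompt wording are hypothetical, and the paper's actual prompts and few-shot examples are not reproduced here.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Persona:
    """Hypothetical container for what the personality module encodes."""
    mbti: str
    age: int
    needs: List[str] = field(default_factory=list)
    long_term_memory: List[str] = field(default_factory=list)
    short_term_memory: List[str] = field(default_factory=list)


def build_layer_prompt(persona: Persona, layer: str, situation: str) -> str:
    """Render one prompt for one consciousness layer (wording is illustrative)."""
    lines = [
        f"You are the {layer} layer of a {persona.age}-year-old person "
        f"with MBTI type {persona.mbti}.",
        "Current needs: " + (", ".join(persona.needs) or "none specified"),
        "Long-term memories: " + ("; ".join(persona.long_term_memory) or "none"),
        "Recent memories: " + ("; ".join(persona.short_term_memory) or "none"),
        f"Situation: {situation}",
        # Chain-of-thought style instruction, as the summary describes.
        "Think step by step, then state what this layer contributes to the decision.",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    child = Persona(mbti="ISTP", age=10, needs=["safety", "belonging"])
    print(build_layer_prompt(child, "preconsciousness", "lost in a shopping mall"))
```

Keeping persona data separate from the layer instruction means the same persona can be reused across all three layers and all scenarios.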

Experimental Design and Evaluation Protocol

Sixteen artificial agents, each corresponding to a unique MBTI type, were instantiated with diverse demographic attributes, long- and short-term memories, and needs profiles. Ten situational scenarios were constructed to probe a spectrum of cognitive and affective capacities, including qualia, emotional understanding, creativity, logical reasoning, moral judgment, temporality, and metacognition.

The evaluation protocol comprised:

- Quantitative Survey: 200 human raters assessed the likelihood and naturalness of agent responses across scenarios.

- Automated LLM Judgment: ChatGPT performed a three-tier (Pass/Neutral/Fail) assessment on eight dimensions of output quality for each agent-scenario pair.

- Expert Qualitative Review: Two domain experts analyzed the logicality, personality consistency, and differentiation of consciousness layers in agent outputs.

Results

Quantitative Survey

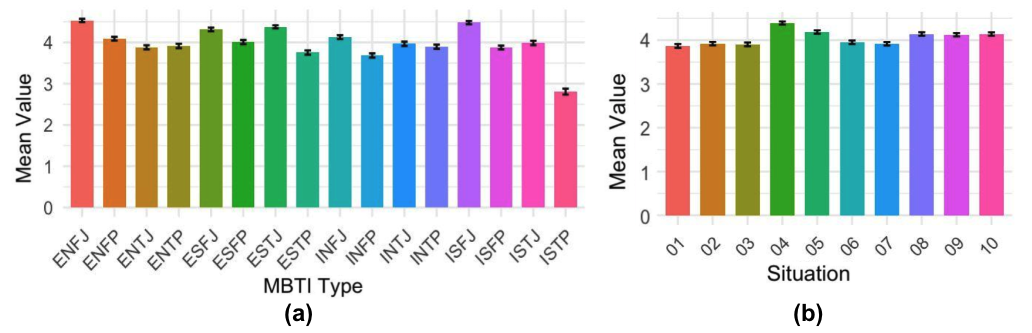

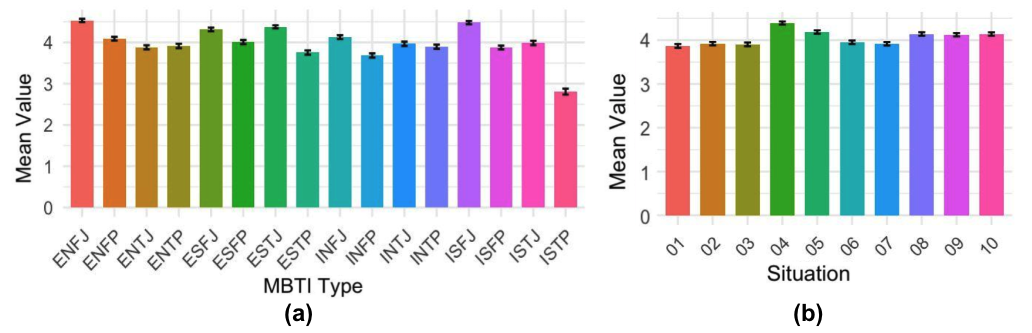

After unreliable responses were excluded, 162 of the 200 raters provided likelihood scores for agent reactions. The mean score across MBTI types (excluding ISTP) was approximately 4.0/5.0, indicating high plausibility and alignment with expected personality-driven behavior. The ISTP agent (a 10-year-old) scored lower due to scenario-persona mismatches (e.g., adult situations assigned to a child agent).

Figure 4: (a) Mean likelihood by MBTIs, (b) Mean likelihood by situations (w/o ISTP).
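The aggregation behind a figure like this can be sketched as a per-type mean followed by an overall mean that leaves out a flagged type. The snippet below assumes the overall figure is the mean of per-type means, which the summary does not specify. The ratings in `toy_ratings` are made-up placeholders for illustration only and are not data from the study.

```python
from statistics import mean
from typing import Dict, Iterable, List


def mean_by_type(ratings: Dict[str, List[int]]) -> Dict[str, float]:
    """Average the 1-5 likelihood ratings collected for each MBTI type."""
    return {mbti: mean(scores) for mbti, scores in ratings.items()}


def overall_mean(ratings: Dict[str, List[int]], exclude: Iterable[str] = ()) -> float:
    """Mean of per-type means, skipping any types listed in `exclude`."""
    excluded = set(exclude)
    kept = [m for mbti, m in mean_by_type(ratings).items() if mbti not in excluded]
    return mean(kept)


# Placeholder ratings, NOT taken from the paper.
toy_ratings = {
    "ENTJ": [5, 4, 5, 4],
    "INFP": [4, 4, 4, 4],
    "ISTP": [2, 3, 2, 1],
}

if __name__ == "__main__":
    print(mean_by_type(toy_ratings))
    print(overall_mean(toy_ratings, exclude=["ISTP"]))
```

Reporting the overall mean with and without the outlying type makes it clear how much a single persona mismatch moves the headline number.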

Automated LLM Judgment

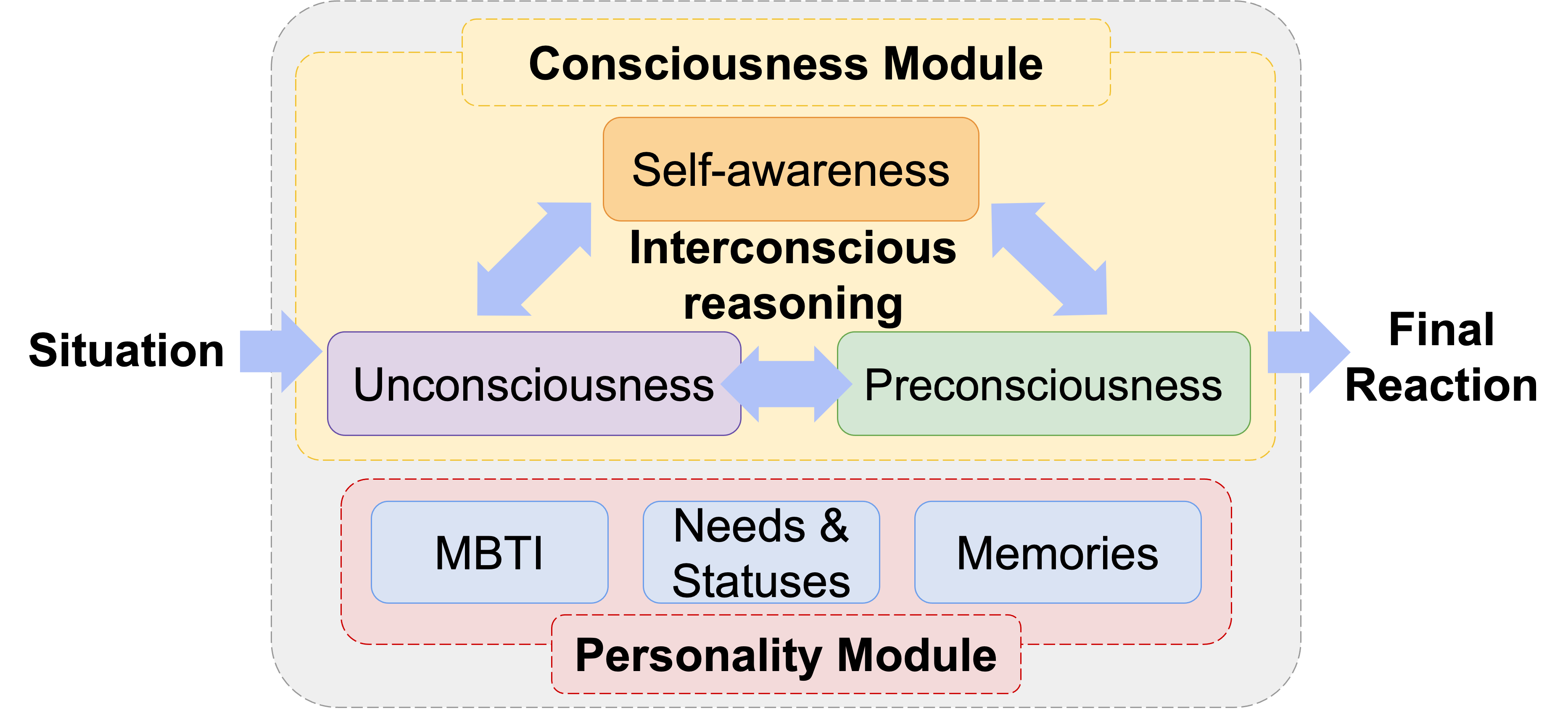

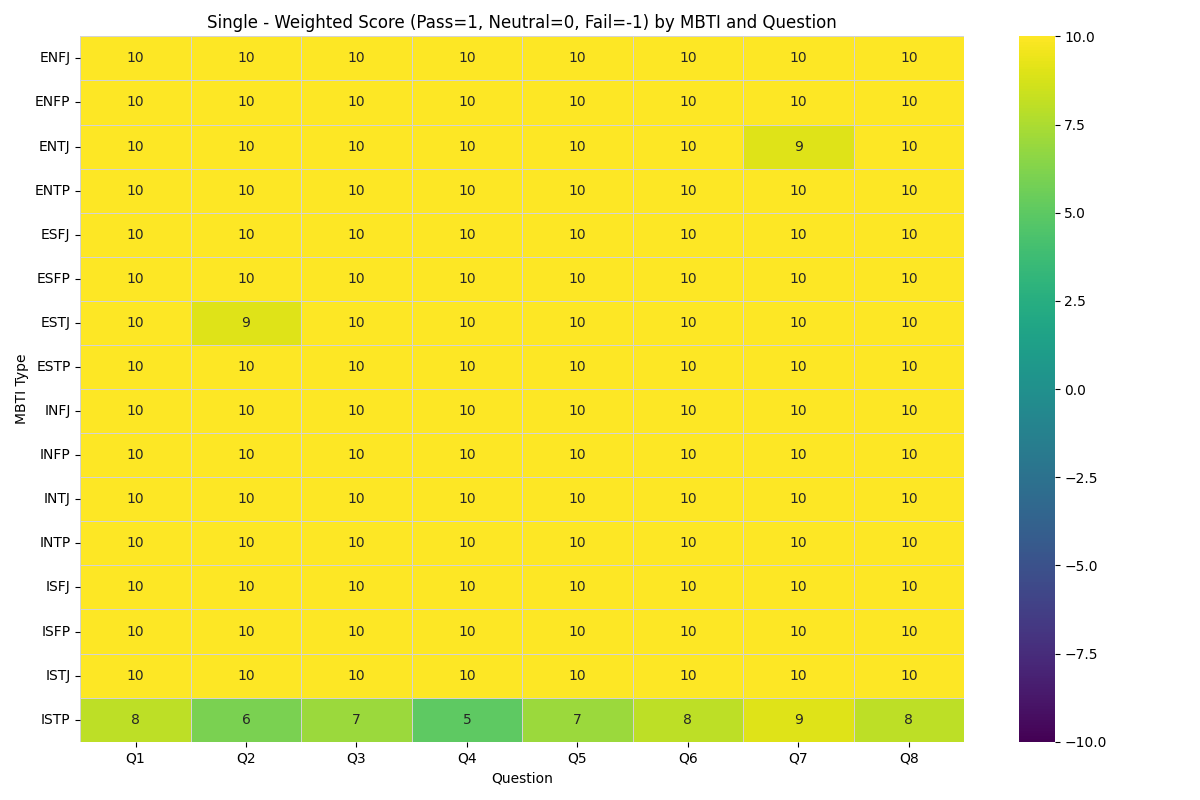

The three-tier evaluation revealed generally positive scores but identified specific limitations:

- Repetition and lack of progression in reasoning (e.g., ENTJ on Q7).

- Overly comprehensive or verbose responses relative to scenario complexity (e.g., ESTJ on Q2).

- Difficulty in capturing age-appropriate reasoning and tone (e.g., ISTP on multiple questions).

Figure 5: Heatmap of three-tier evaluation scores (Pass=+1, Neutral=0, Fail=-1) for the single-LLM model, assessed by 'LLM as a Judge'.
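The scoring scheme in the caption (Pass=+1, Neutral=0, Fail=-1) can be expressed as a small lookup plus an aggregation step. How the eight dimension verdicts are combined into one heatmap cell is not stated in the summary, so the sketch below simply sums them, which is an assumption. The function names and the example verdicts are hypothetical.

```python
from typing import Dict, List

# Mapping stated in the Figure 5 caption.
TIER_SCORE = {"Pass": 1, "Neutral": 0, "Fail": -1}


def score_cell(verdicts: List[str]) -> int:
    """Sum the tier scores for one agent-scenario pair (summing is an assumption)."""
    return sum(TIER_SCORE[v] for v in verdicts)


def score_grid(judgments: Dict[str, Dict[str, List[str]]]) -> Dict[str, Dict[str, int]]:
    """Convert {agent: {scenario: [verdicts]}} into {agent: {scenario: score}}."""
    return {
        agent: {scenario: score_cell(v) for scenario, v in by_scenario.items()}
        for agent, by_scenario in judgments.items()
    }


if __name__ == "__main__":
    # Made-up verdicts for illustration; eight dimensions per cell.
    example = {
        "ENTJ": {"Q7": ["Pass"] * 5 + ["Neutral", "Fail", "Fail"]},
        "ESTJ": {"Q2": ["Pass"] * 6 + ["Neutral", "Fail"]},
    }
    print(score_grid(example))
```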

Expert Review

Experts found the interconscious reasoning to be generally logical and contextually appropriate. However, they noted a lack of stylistic and functional differentiation between consciousness layers; unconscious responses were often verbose and reflective rather than impulsive and affect-laden. Furthermore, personality-driven distinctions were muted, with outputs converging toward a homogeneous reasoning style regardless of MBTI type or age.

Discussion

The integration of psychoanalytic and personality-theoretic constructs into LLM-based agents demonstrates the feasibility of simulating multi-layered consciousness and individualized personality in artificial systems. The framework provides interpretability by exposing the internal dialogue between consciousness layers, addressing a key limitation of prior agent-based LLM models.

However, the single-LLM architecture imposes constraints on agent differentiation. Without structural independence or persona-specific fine-tuning, outputs homogenize, with role-based instructions overriding nuanced personality traits. The authors recommend future work on multi-agent architectures, where structurally independent LLMs are fine-tuned for each consciousness layer and MBTI type, potentially yielding richer, more divergent reasoning patterns.

The study also highlights the need for more robust evaluation methodologies for artificial consciousness, as well as ethical considerations regarding the simulation of human-like subjectivity, memory continuity, and emotional responsiveness.

Implications and Future Directions

Practically, the proposed framework advances the design of interpretable, personality-grounded AI agents for complex cognitive tasks and human-AI interaction. Theoretically, it opens avenues for modeling consciousness as a distributed, dialogic process within artificial systems, informed by both psychoanalytic and trait-based perspectives.

Future research should focus on:

- Multi-agent, structurally independent LLM architectures for enhanced differentiation.

- Integration of multimodal inputs (image/video-to-text) for richer situational awareness.

- Development of standardized, multi-dimensional evaluation protocols for artificial consciousness.

- Ethical frameworks addressing the simulation of core aspects of human subjectivity and the societal impact of humanoid AI agents.

Conclusion

This work presents a formalized approach to modeling humanoid artificial consciousness by embedding psychoanalytic and personality-theoretic principles within LLM-driven agents. The system achieves plausible, context-sensitive reasoning and action selection, with interpretability via explicit interconscious dialogue. Limitations in agent differentiation and output style are attributed to architectural constraints, motivating future research in multi-agent LLM systems and advanced evaluation strategies. The framework provides a foundation for the development of more intuitive, adaptable, and ethically accountable AI systems capable of complex cognitive and affective interaction.