- The paper presents a modular framework that uses model-enhanced residual learning to robustly stabilize humanoid end-effectors against locomotion-induced disturbances.

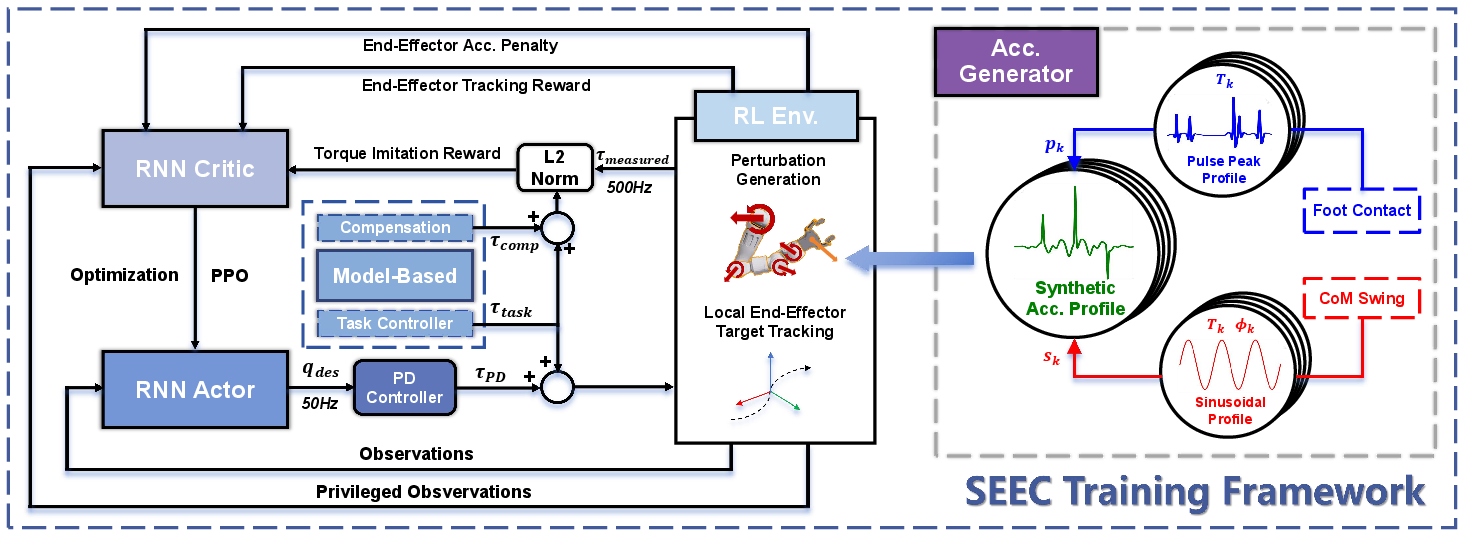

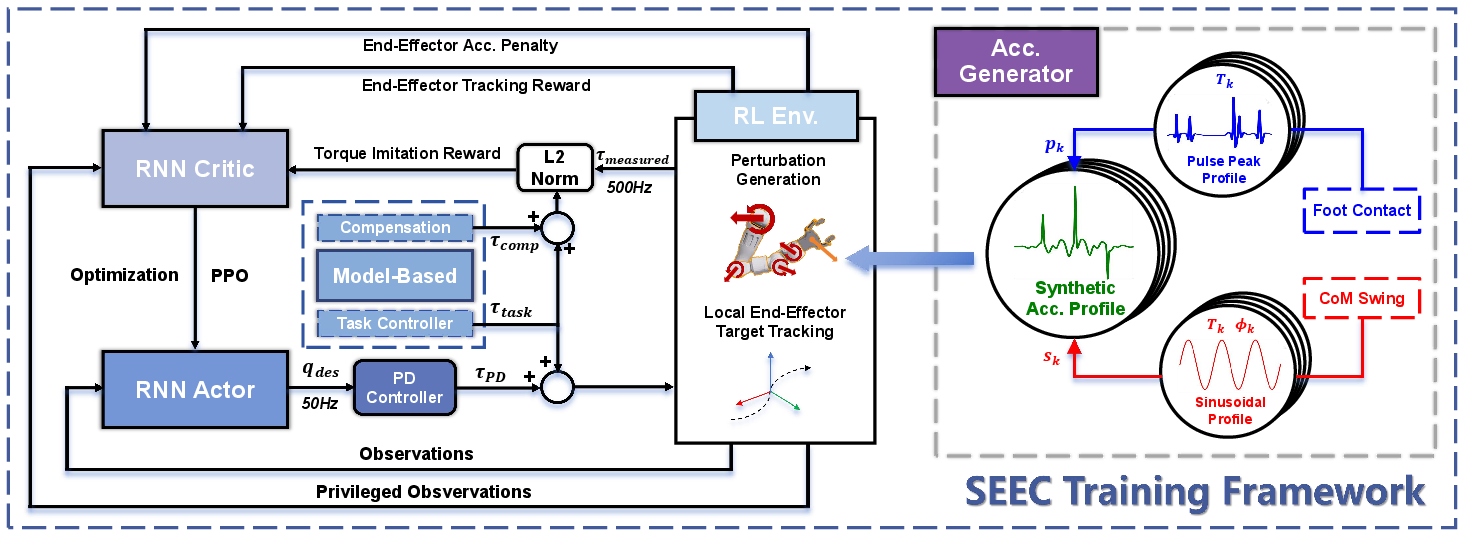

- The methodology decouples upper-body manipulation from lower-body locomotion and combines analytic compensation torques with simulated base perturbations.

- Experimental results show significant reductions in end-effector accelerations in simulation and real-world tasks, validating robustness and zero-shot transferability.

SEEC: Stable End-Effector Control with Model-Enhanced Residual Learning for Humanoid Loco-Manipulation

Introduction and Motivation

Achieving stable and precise arm end-effector control during dynamic humanoid locomotion is a critical bottleneck for practical loco-manipulation. Humanoid robots, with their many degrees of freedom (DoF) and inherent dynamic instability, are particularly susceptible to base-induced disturbances that propagate to the arms, resulting in significant end-effector accelerations and degraded manipulation performance. Traditional model-based controllers offer precise control but are limited by model inaccuracies and unmodeled real-world effects. Conversely, learning-based approaches can adapt to such uncertainties but often overfit to specific training conditions and lack robustness to out-of-distribution disturbances, especially when manipulation and locomotion are tightly coupled.

The SEEC framework addresses these limitations by introducing a model-enhanced residual learning paradigm that decouples upper-body (manipulation) and lower-body (locomotion) control. The upper-body controller is trained to compensate for a wide spectrum of locomotion-induced disturbances using model-based analytic compensation signals and a perturbation generator, enabling robust and transferable end-effector stabilization across diverse and unseen locomotion controllers.

Figure 1: System framework overview of SEEC. The architecture decouples upper-body and lower-body controllers, with the upper-body RL module trained to compensate for lower-body-induced disturbances using model-based acceleration compensation and simulated base perturbations.

Methodology

Decoupled Control Architecture

SEEC employs a modular architecture, separating the control of the lower body (locomotion) and upper body (manipulation). The lower-body controller is trained for robust locomotion using standard sim-to-real RL pipelines, while the upper-body controller is responsible for end-effector stabilization and manipulation. Two key assumptions are made: (1) negligible arm-to-base back-coupling, and (2) a robust locomotion controller that can tolerate upper-body disturbances.
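The decoupling described above can be pictured as two independent policies whose outputs are concatenated into one whole-body command. The following is a minimal sketch under assumptions, not the authors' implementation: the observation keys `"lower"` and `"upper"`, the `Policy` type, and `make_whole_body_controller` are hypothetical names chosen for illustration.

```python
from typing import Callable, Dict, List

# Hypothetical types: each policy maps its own observation slice to joint targets.
Observation = Dict[str, List[float]]
Policy = Callable[[List[float]], List[float]]


def make_whole_body_controller(
    lower_policy: Policy, upper_policy: Policy
) -> Callable[[Observation], List[float]]:
    """Compose independently trained leg and arm policies into one controller."""

    def step(obs: Observation) -> List[float]:
        leg_targets = lower_policy(obs["lower"])  # locomotion joint targets
        arm_targets = upper_policy(obs["upper"])  # stabilization joint targets
        # Neither policy reads the other's output, so either can be swapped.
        return leg_targets + arm_targets

    return step


# Usage with placeholder policies (stand-ins for trained networks).
controller = make_whole_body_controller(
    lower_policy=lambda o: [2.0 * x for x in o],
    upper_policy=lambda o: [x + 1.0 for x in o],
)
command = controller({"lower": [1.0, 2.0], "upper": [3.0]})
```

Because the upper-body policy never consumes the locomotion policy's output in this sketch, replacing `lower_policy` requires no change to the arm controller, which is the property the modular design relies on.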

Model-Enhanced Residual Learning

The core of SEEC is a residual RL policy for the upper body, trained to compensate for base-induced disturbances. The training pipeline consists of:

- Simulated Base Acceleration: Realistic base motion is emulated in simulation by injecting fictitious wrenches corresponding to sampled base twists and accelerations, capturing both impulsive (foot-ground contact) and periodic (CoM sway) components. This exposes the policy to a diverse set of disturbances, promoting robustness.

- Analytic Compensation Torque: Using operational-space control, the analytic compensation torque required to cancel base-induced end-effector accelerations is computed. This torque is combined with task-oriented control signals for target tracking.

- Residual Policy Training: The RL policy is trained to output joint targets to a low-level PD controller, with a reward function that penalizes deviation from the sum of analytic compensation and task torques. Auxiliary rewards regularize control effort, end-effector acceleration, and action smoothness. The policy is trained with PPO using recurrent actor-critic networks.
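The analytic compensation torque and the torque-guided reward from the list above can be sketched for a toy two-joint arm as follows. This is an illustration under stated assumptions rather than the paper's formulation: it uses the standard operational-space relation tau = J^T Lambda (-a_base) with Lambda = (J M^-1 J^T)^-1, ignores gravity, Coriolis, and Jacobian-derivative terms, and assumes an exponential reward shape with a hypothetical scale `sigma`. All function names are illustrative.

```python
import math


def matmul(a, b):
    """Multiply two matrices given as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))] for i in range(len(a))]


def transpose(a):
    return [list(row) for row in zip(*a)]


def inv2(m):
    """Invert a 2x2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if abs(det) < 1e-12:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def compensation_torque(jacobian, mass_matrix, base_induced_accel):
    """Joint torque that cancels a base-induced end-effector acceleration.

    Assumption: simplified dynamics M * qdd = tau (no gravity/Coriolis).
    """
    j_t = transpose(jacobian)
    m_inv = inv2(mass_matrix)
    # Operational-space inertia: Lambda = (J M^-1 J^T)^-1
    lam = inv2(matmul(matmul(jacobian, m_inv), j_t))
    desired = [[-a] for a in base_induced_accel]  # cancel the disturbance
    tau = matmul(matmul(j_t, lam), desired)
    return [row[0] for row in tau]


def pd_torque(q_target, q, qd, kp, kd):
    """Torque produced by the low-level PD controller for policy joint targets."""
    return [kp * (qt - qi) - kd * qdi for qt, qi, qdi in zip(q_target, q, qd)]


def torque_guided_reward(tau_pd, tau_comp, tau_task, sigma=1.0):
    """Reward near 1 when the PD torque matches compensation + task torque.

    Assumption: exponential kernel; the source only states that deviation
    from the summed reference torque is penalized.
    """
    err_sq = sum((p - (c + t)) ** 2 for p, c, t in zip(tau_pd, tau_comp, tau_task))
    return math.exp(-err_sq / sigma ** 2)
```

In this simplified model, applying the returned torque yields a joint acceleration whose end-effector image is exactly the negative of `base_induced_accel`, so the disturbance is cancelled; the reward then scores how closely the policy's PD torque tracks that reference.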

Perturbation Generation and Robustness

A key innovation is the perturbation generator, which samples base acceleration profiles from a distribution covering realistic gait cycles and contact transients. This enables the upper-body policy to learn compensation strategies that generalize to unseen locomotion controllers and walking patterns, supporting zero-shot transfer without retraining the upper-body and lower-body controllers together.
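One way to picture such a generator is a single-axis profile that sums a periodic sway term with a short pulse at every simulated foot strike. The sketch below is an assumption-laden illustration: the sampling ranges, the Gaussian pulse shape, and the function name `sample_base_acceleration` are hypothetical, and the paper's generator also covers base twists and full wrenches, which are omitted here.

```python
import math
import random


def sample_base_acceleration(duration=2.0, dt=0.01, seed=None):
    """Sample one base-acceleration profile (m/s^2) along a single axis.

    All ranges below are hypothetical placeholders, not values from the paper.
    """
    rng = random.Random(seed)
    gait_freq = rng.uniform(1.0, 2.5)        # steps per second
    sway_amp = rng.uniform(0.5, 2.0)         # periodic (CoM sway) amplitude
    impulse_amp = rng.uniform(2.0, 8.0)      # peak of each contact transient
    impulse_width = rng.uniform(0.01, 0.03)  # pulse width in seconds
    phase = rng.uniform(0.0, 2.0 * math.pi)

    step_period = 1.0 / gait_freq
    profile = []
    for i in range(int(round(duration / dt))):
        t = i * dt
        periodic = sway_amp * math.sin(2.0 * math.pi * gait_freq * t + phase)
        # Time elapsed since the most recent simulated foot strike.
        since_contact = t % step_period
        impulsive = impulse_amp * math.exp(-0.5 * (since_contact / impulse_width) ** 2)
        profile.append(periodic + impulsive)
    return profile


# Usage: draw a reproducible profile for one training episode.
episode_profile = sample_base_acceleration(seed=0)
```

Resampling the parameters per episode exposes the policy to many gait frequencies and impact strengths, which is the mechanism the text credits for generalization to unseen locomotion controllers.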

Experimental Results

Simulation Benchmarks

SEEC was evaluated in simulation on the Booster T1 humanoid across multiple locomotion scenarios: stepping, forward, lateral, and rotational walking. Metrics focused on end-effector linear and angular acceleration (mean and max). Ablation studies compared SEEC to:

- IK-based control

- RL without simulated base acceleration

- RL with simulated base acceleration but without model-based torque guidance

- SEEC variants with components ablated

SEEC consistently achieved the lowest end-effector accelerations across all tasks. Notably, removing the operational-space torque or torque-guided reward led to substantial performance degradation, confirming the necessity of model-based guidance for effective compensation.
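The mean and max acceleration metrics can be computed from a logged end-effector trajectory. The sketch below assumes uniformly sampled 3D positions and estimates linear acceleration with a central second-order finite difference; a simulator may expose accelerations directly, in which case only the aggregation step applies. The function names are illustrative.

```python
import math


def acceleration_magnitudes(positions, dt):
    """Norm of the finite-difference acceleration at each interior sample."""
    magnitudes = []
    for prev, cur, nxt in zip(positions, positions[1:], positions[2:]):
        # Central difference: a ~ (x[k+1] - 2 x[k] + x[k-1]) / dt^2
        accel = [(n - 2.0 * c + p) / dt ** 2 for p, c, n in zip(prev, cur, nxt)]
        magnitudes.append(math.sqrt(sum(a * a for a in accel)))
    return magnitudes


def mean_and_max(values):
    """Aggregate per-sample magnitudes into the two reported statistics."""
    return sum(values) / len(values), max(values)


# Usage: a trajectory x(t) = t^2 has constant acceleration 2 m/s^2 along x.
dt = 0.1
trajectory = [[(i * dt) ** 2, 0.0, 0.0] for i in range(10)]
mean_acc, max_acc = mean_and_max(acceleration_magnitudes(trajectory, dt))
```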

Robustness to Unseen Locomotion Policies

SEEC demonstrated superior robustness when deployed with previously unseen locomotion controllers. In contrast, pre-trained and co-trained baselines exhibited significant performance degradation or outright failure due to excessive arm accelerations. SEEC's modular design and perturbation-driven training limited the average degradation in mean linear and angular acceleration to 34.4% and 21.5%, respectively, compared to 57.5% and 60.1% for co-trained baselines.

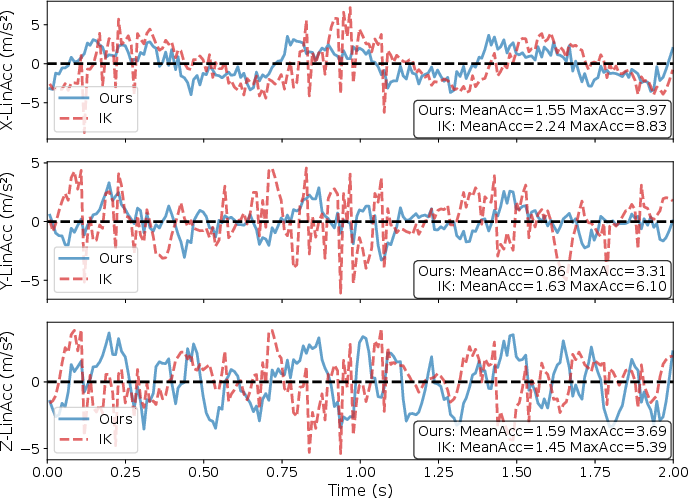

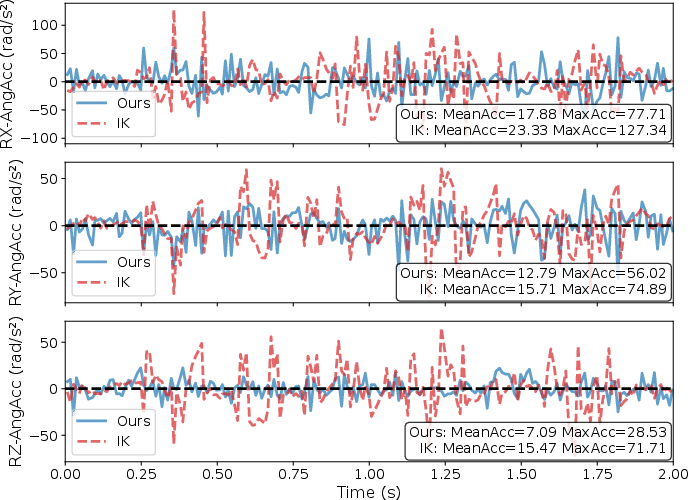

Real-World Hardware Validation

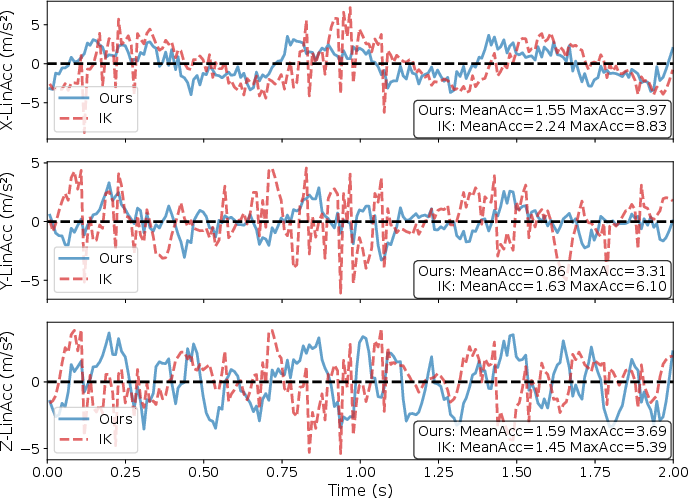

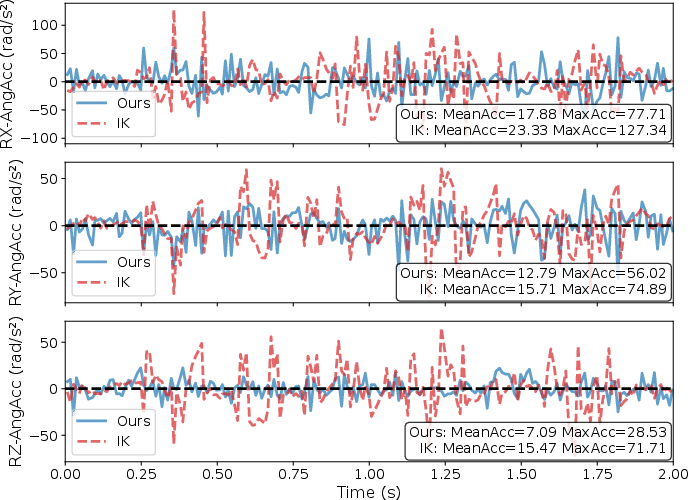

SEEC was deployed on the Booster T1 hardware, with end-effector acceleration measured using motion capture. Relative to the IK baseline, SEEC lowered mean linear acceleration from 3.57 to 2.82 m/s² and mean angular acceleration from 41.1 to 24.2 rad/s², and it produced a notably smoother acceleration profile.

Figure 2: End-effector acceleration plots in real-world evaluation. The blue line indicates the acceleration profile of SEEC, and the dotted red line represents the IK baseline.
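For reference, the hardware numbers reported above translate into relative reductions as follows. The helper name `percent_reduction` is illustrative; the inputs are the baseline and SEEC values stated in the text.

```python
def percent_reduction(baseline, improved):
    """Relative reduction of `improved` with respect to `baseline`, in percent."""
    return 100.0 * (baseline - improved) / baseline


linear = percent_reduction(3.57, 2.82)   # mean linear acceleration, m/s^2
angular = percent_reduction(41.1, 24.2)  # mean angular acceleration, rad/s^2

print(round(linear, 1))   # 21.0
print(round(angular, 1))  # 41.1
```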

SEEC was validated on complex real-world tasks requiring stable end-effector control under dynamic locomotion:

- Chain Holding: SEEC suppressed oscillatory dynamics, maintaining the chain nearly vertical, while the baseline failed due to excessive oscillations.

- Mobile Whiteboard Wiping: SEEC maintained smooth trajectories and steady contact forces, enabling effective wiping.

- Plate Holding: SEEC allowed the robot to carry a plate of snacks without spillage, while the baseline caused significant spillage due to end-effector oscillations.

Figure 3: Plate holding task. SEEC enables stable plate carrying without spillage, while the IK baseline results in significant spillage due to end-effector oscillations.

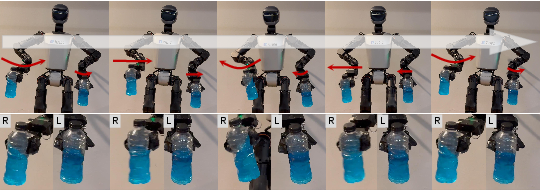

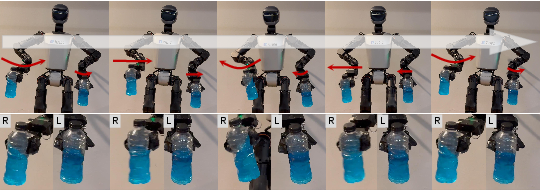

- Bottle Holding: SEEC minimized liquid surface vibration, while the baseline induced pronounced oscillations and spillage.

Figure 4: Bottle holding task. The left arm (SEEC) achieves stable holding with minimal liquid vibration, while the right arm (IK baseline) exhibits pronounced oscillations.

Discussion and Implications

SEEC demonstrates that model-enhanced residual learning, combined with a perturbation-driven training regime, enables robust and transferable end-effector stabilization for humanoid loco-manipulation. The decoupled architecture supports modular policy reuse and zero-shot transfer across diverse locomotion controllers, addressing a key limitation of prior tightly coupled approaches.

The analytic compensation torque provides a principled supervisory signal, allowing the RL policy to focus on learning the residual required to bridge the sim-to-real gap and unmodeled effects. The perturbation generator ensures robustness to a wide range of real-world disturbances, a critical requirement for practical deployment.

Key quantitative results include a 36% reduction in mean linear acceleration and a 26% reduction in mean angular acceleration compared to ablated variants, and performance degradation under unseen locomotion policies limited to 21–34%, versus 57–60% for co-trained baselines.

Potential limitations include reliance on accurate proprioceptive state estimation and the assumption of negligible arm-to-base coupling. Future work could integrate constrained model-based controllers, richer state estimation (e.g., global pose), and proactive disturbance rejection to further enhance stability and task versatility.

Conclusion

SEEC provides a robust, modular, and transferable solution for stable end-effector control in humanoid loco-manipulation. By integrating model-based analytic compensation with residual RL and perturbation-driven training, SEEC achieves superior stability and robustness in both simulation and real-world tasks. The framework's decoupled design and demonstrated zero-shot transferability mark a significant step toward practical, general-purpose humanoid loco-manipulation. Future research should explore tighter integration of model-based and learning-based control, improved state estimation, and extension to more complex collaborative and contact-rich tasks.