- The paper shows that prompt engineering can guide some small language models (SLMs) to generate code that uses marginally less energy than human-written solutions.

- It evaluates four SLMs under four prompting strategies, including Chain-of-Thought prompting, and finds small energy savings (about 0.001 mWh below the baseline) for Qwen2.5-Coder-3B and StableCode-3B.

- The study highlights that simpler prompts can outperform complex ones, emphasizing model-dependent strategies for energy-efficient, edge-friendly code.

Energy-Efficient Code Generation via Prompting Small LLMs

Introduction

The paper "Toward Green Code: Prompting Small LLMs for Energy-Efficient Code Generation" (2509.09947) addresses the intersection of prompt engineering and sustainable software development, focusing on the energy efficiency of code generated by small language models (SLMs). It is motivated by the substantial environmental impact of LLMs, particularly their high energy consumption and carbon footprint during both training and inference. SLMs, with their reduced parameter count and computational requirements, present a promising alternative for fundamental programming tasks, especially in resource-constrained or edge environments. The central research question is whether prompt engineering can guide SLMs to generate code that is more energy-efficient than human-written solutions.

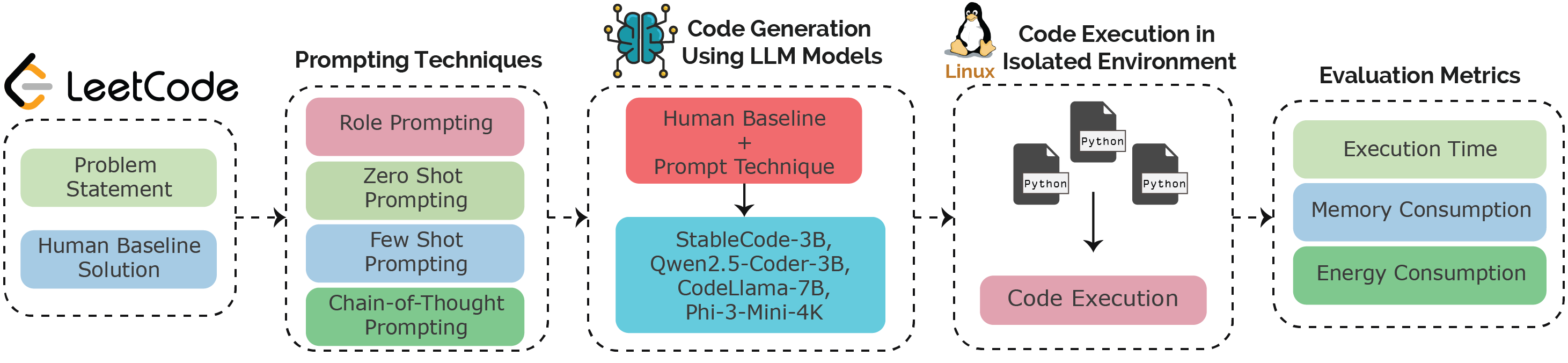

Figure 1: Overall methodology for evaluating SLMs and prompting strategies on energy-efficient code generation.

Experimental Design and Methodology

The paper evaluates four open-source SLMs: StableCode-Instruct-3B, Qwen2.5-Coder-3B-Instruct, CodeLlama-7B-Instruct, and Phi-3-Mini-4K-Instruct. Each model is tested on 150 Python problems from LeetCode, evenly distributed across easy, medium, and hard categories. The baseline for comparison is the most upvoted human-written solution for each problem.

Four prompting strategies are employed:

- Role Prompting: Assigns the model a senior software engineer persona, instructing it to optimize for energy efficiency.

- Zero-Shot Prompting: Directly instructs the model to optimize the provided code without examples.

- Few-Shot Prompting: Supplies the model with pairs of unoptimized and optimized code examples before the target task.

- Chain-of-Thought (CoT) Prompting: Provides a step-by-step optimization strategy (generated by GPT-5) alongside the code, encouraging explicit reasoning.
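
The four strategies above differ mainly in what surrounds the code inside the prompt. The sketch below illustrates that difference; the function `build_prompt`, the instruction wording, and the example and plan arguments are all hypothetical and are not the prompts used in the paper.

```python
from typing import Optional, Sequence, Tuple

# Hypothetical task instruction; the paper's exact wording is not reproduced here.
TASK = ("Rewrite the following Python code to minimize energy consumption "
        "while preserving its behavior.")


def build_prompt(strategy: str,
                 code: str,
                 examples: Optional[Sequence[Tuple[str, str]]] = None,
                 plan: Optional[Sequence[str]] = None) -> str:
    """Assemble an illustrative prompt for one of the four strategies."""
    if strategy == "role":
        # Role prompting: prepend a persona to the instruction.
        persona = ("You are a senior software engineer who specializes in "
                   "energy-efficient code.")
        return f"{persona}\n{TASK}\n\n{code}"
    if strategy == "zero_shot":
        # Zero-shot: instruction and code only, no examples.
        return f"{TASK}\n\n{code}"
    if strategy == "few_shot":
        # Few-shot: (unoptimized, optimized) pairs come before the target task.
        shots = "\n\n".join(
            f"Unoptimized:\n{before}\n\nOptimized:\n{after}"
            for before, after in (examples or [])
        )
        return f"{shots}\n\n{TASK}\n\n{code}"
    if strategy == "cot":
        # Chain-of-Thought: a numbered optimization plan accompanies the code.
        steps = "\n".join(f"{i}. {step}"
                          for i, step in enumerate(plan or [], start=1))
        return f"{TASK}\nFollow these steps:\n{steps}\n\n{code}"
    raise ValueError(f"unknown strategy: {strategy}")
```

Keeping the task instruction constant across strategies, as this sketch does, is one way to make sure that any difference in the generated code comes from the added persona, examples, or plan rather than from the instruction itself.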

For each generated solution, runtime (ms), peak memory usage (KiB), and energy consumption (mWh, measured via CodeCarbon) are recorded. All experiments are conducted in a controlled Linux environment on a Google Cloud VM with dedicated CPU cores, ensuring reproducibility and minimizing external variability.
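
As a rough illustration of how two of these metrics can be collected, the sketch below measures runtime in milliseconds and peak memory in KiB using only the Python standard library. The helper `measure` and the sample function `two_sum` are hypothetical; the paper's own harness is not shown in this summary, and energy measurement with CodeCarbon is deliberately left out because it needs an external package.

```python
import time
import tracemalloc


def measure(func, *args, **kwargs):
    """Run func once and return (result, runtime_ms, peak_kib).

    Note: tracemalloc tracks Python-level allocations only and adds
    overhead, so the runtime reported here is inflated relative to an
    untraced run.
    """
    tracemalloc.start()
    start = time.perf_counter()
    try:
        result = func(*args, **kwargs)
        runtime_ms = (time.perf_counter() - start) * 1000.0
        _, peak_bytes = tracemalloc.get_traced_memory()
    finally:
        tracemalloc.stop()
    return result, runtime_ms, peak_bytes / 1024.0


def two_sum(nums, target):
    """Hypothetical candidate solution used only to exercise the harness."""
    seen = {}
    for i, value in enumerate(nums):
        if target - value in seen:
            return [seen[target - value], i]
        seen[value] = i
    return []


if __name__ == "__main__":
    result, runtime_ms, peak_kib = measure(two_sum, list(range(10_000)), 19_997)
    print(f"result={result} runtime={runtime_ms:.3f} ms peak={peak_kib:.1f} KiB")
```

In practice a single run is noisy, so a harness like this would typically repeat each measurement and report an average or median.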

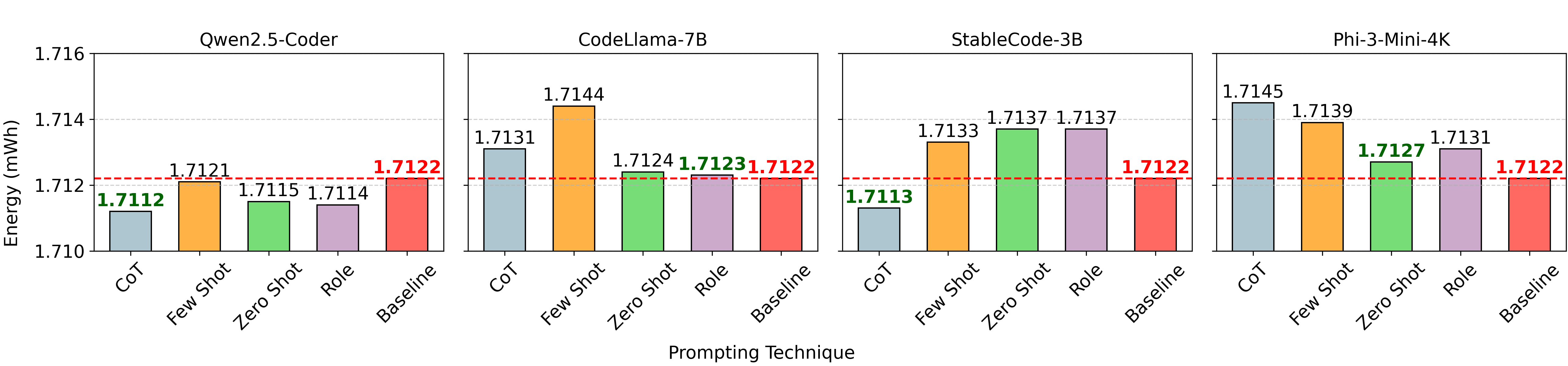

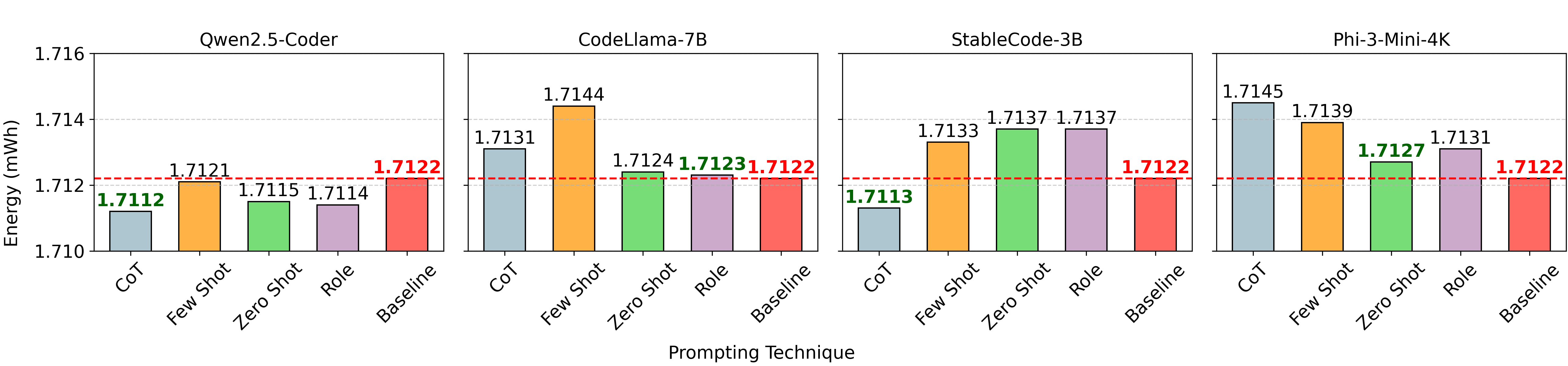

The results reveal that energy consumption for generated code is tightly clustered around the baseline (1.7122 mWh), with only marginal improvements observed for certain models and prompting strategies. Notably, Qwen2.5-Coder-3B and StableCode-3B achieve energy consumption below the baseline, but only under specific conditions.

Figure 2: Energy consumption of four SLMs under different prompting strategies compared to the baseline (1.7122 mWh, red dashed line).

- Qwen2.5-Coder-3B: All prompting strategies yield energy consumption below the baseline, with CoT prompting achieving the lowest (1.7112 mWh).

- StableCode-3B: Only CoT prompting brings energy consumption below the baseline (1.7113 mWh); other strategies exceed the baseline.

- CodeLlama-7B and Phi-3-Mini-4K: No prompting strategy outperforms the baseline; in some cases, energy consumption increases.

Few-Shot prompting never yields the lowest energy usage for any model, suggesting that increased prompt complexity does not necessarily translate to improved efficiency. Simpler strategies (Zero-Shot, Role) sometimes yield better results, indicating that prompt simplicity may be advantageous for certain SLMs.

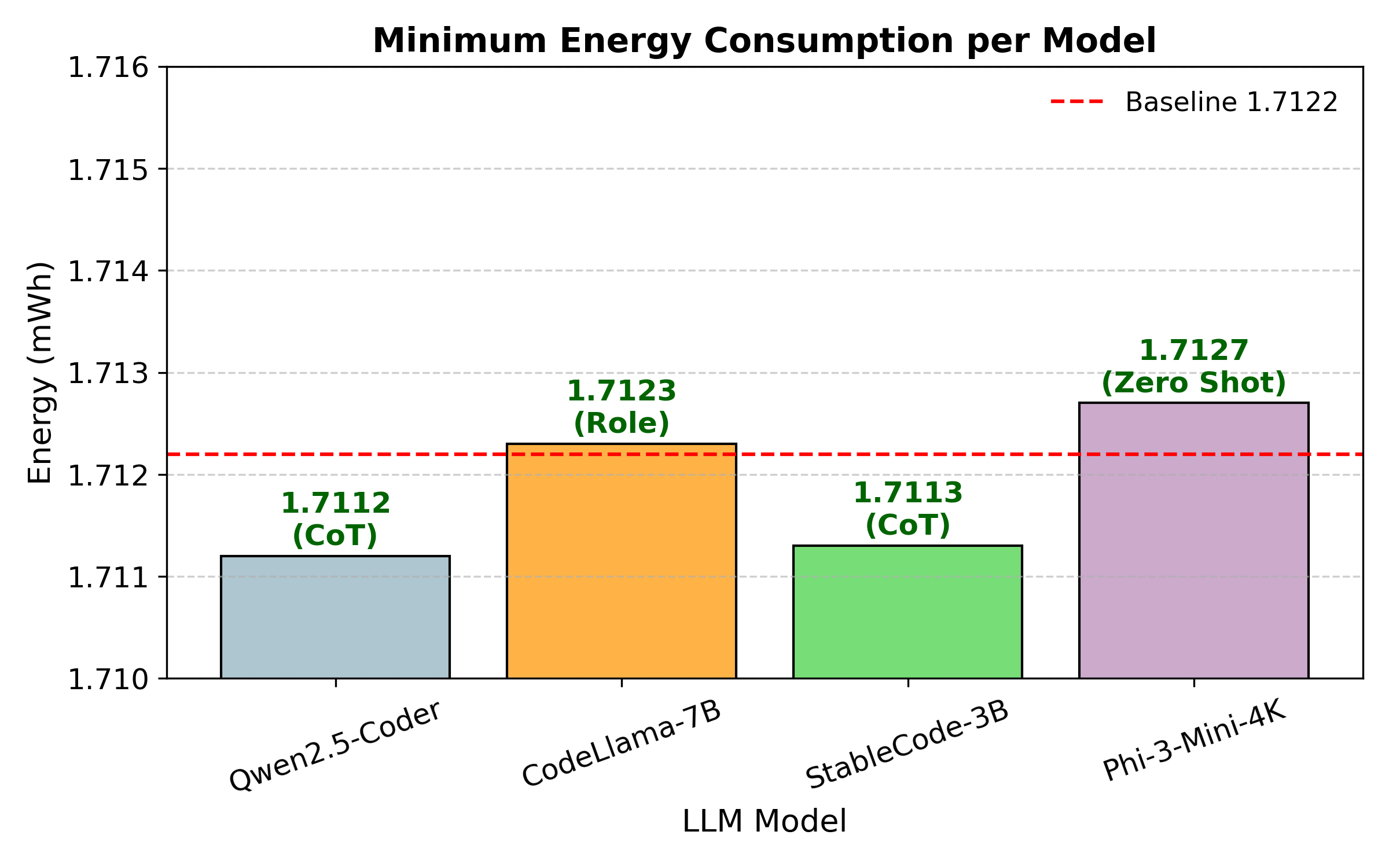

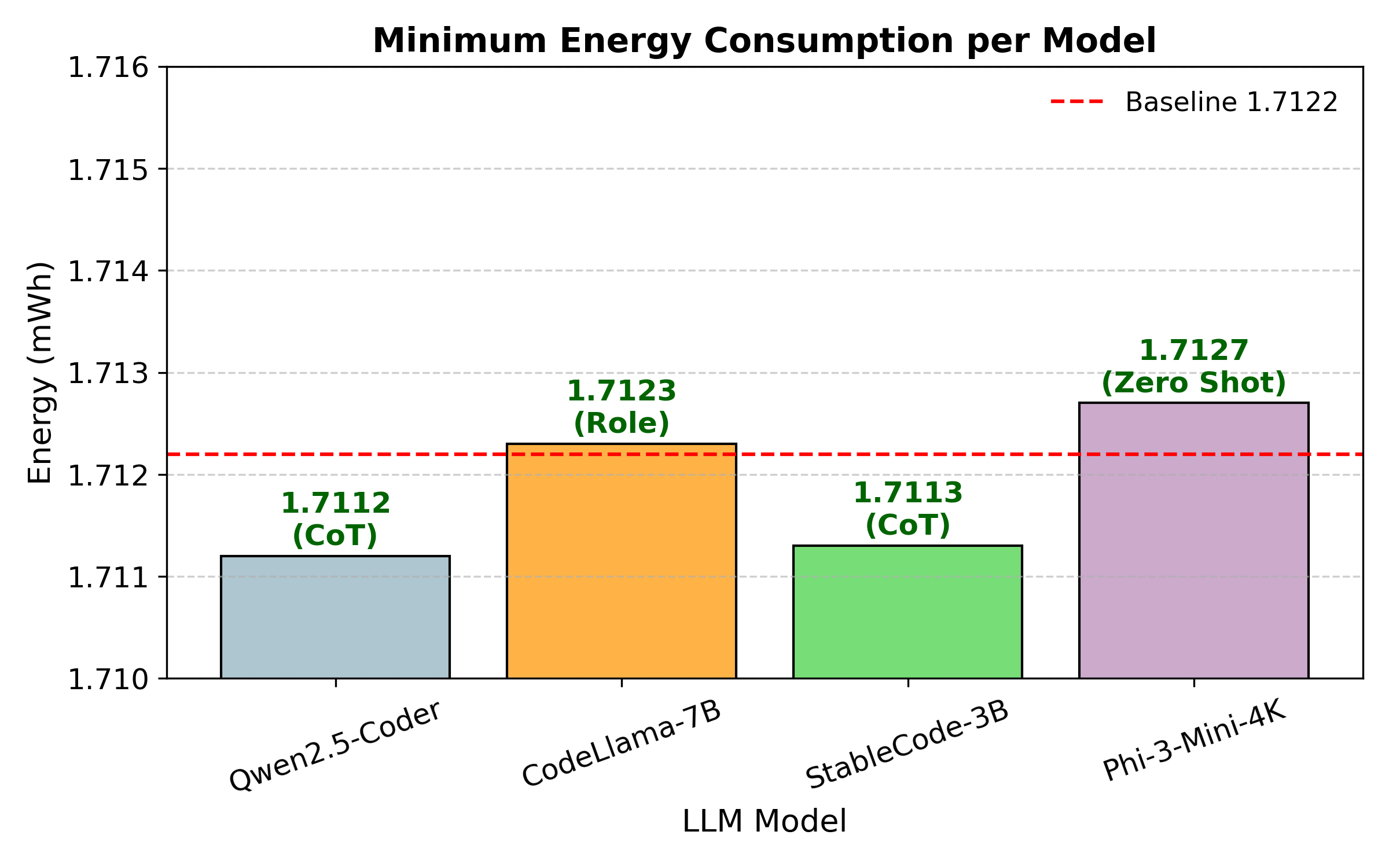

Figure 3: Minimum energy consumption observed for each model under its most efficient prompting strategy, shown in comparison to the baseline (1.7122 mWh).

Analysis and Implications

The findings demonstrate that the effectiveness of prompt engineering for energy-efficient code generation is highly model-dependent. CoT prompting, which encourages explicit reasoning, is beneficial for Qwen2.5-Coder-3B and StableCode-3B, but not for CodeLlama-7B or Phi-3-Mini-4K. This suggests that the internal architecture and training data of SLMs play a significant role in their responsiveness to different prompt types.

The marginal energy savings observed (on the order of 0.001 mWh) highlight the challenge of achieving substantial sustainability gains through prompt engineering alone. While SLMs can match or slightly outperform human-written code in terms of energy efficiency, the improvements are not universal and may be offset by increased runtime or memory usage in some cases.
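
To put the size of these savings in perspective, the short calculation below converts the reported figures (baseline 1.7122 mWh, best results 1.7112 mWh and 1.7113 mWh) into absolute and relative savings. The function name `savings` is only illustrative.

```python
BASELINE_MWH = 1.7122  # human-written baseline reported in the results

# Lowest reported energy per model (both under CoT prompting).
best_mwh = {
    "Qwen2.5-Coder-3B": 1.7112,
    "StableCode-3B": 1.7113,
}


def savings(baseline: float, measured: float):
    """Return (absolute saving in mWh, relative saving in percent)."""
    absolute = baseline - measured
    return absolute, 100.0 * absolute / baseline


for model, energy in best_mwh.items():
    absolute, relative = savings(BASELINE_MWH, energy)
    print(f"{model}: {absolute:.4f} mWh saved ({relative:.3f}% of baseline)")
```

Both relative savings come out well under 0.1% of the baseline, which is consistent with describing the improvements as marginal.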

From a practical perspective, these results provide actionable guidance for developers and researchers:

- Model Selection: Qwen2.5-Coder-3B and StableCode-3B are preferable for energy-efficient code generation when paired with CoT prompting.

- Prompting Strategy: Simpler prompts may be more effective for certain models; complex strategies like Few-Shot do not guarantee better efficiency.

- Deployment Considerations: SLMs are suitable for edge environments where resource constraints are critical, but prompt engineering must be tailored to the specific model and task.

Limitations

The paper is limited to Python and LeetCode problems, restricting generalizability to other programming languages and domains. Potential data leakage from benchmark problems in model training sets may inflate performance estimates. Measurement overhead and system fluctuations, though minimized, may introduce minor variability in reported metrics.

Future Directions

Further research should explore:

- Cross-Language Generalization: Extending the analysis to other languages (e.g., C++, Java) to assess the universality of findings.

- Fine-Tuning for Sustainability: Investigating whether targeted fine-tuning of SLMs on energy-efficient code corpora amplifies prompt engineering benefits.

- Hybrid Approaches: Combining prompt engineering with automated code profiling and feedback loops to iteratively optimize for energy efficiency.

- Edge Deployment: Evaluating real-world energy savings in edge and mobile environments, where SLMs are most likely to be deployed.

Conclusion

Prompt engineering can guide certain SLMs toward generating code that is marginally more energy-efficient than human-written solutions, but the benefits are model- and strategy-dependent. CoT prompting is effective for Qwen2.5-Coder-3B and StableCode-3B, while other models do not consistently benefit. The results underscore the need for careful selection of both model and prompting strategy to achieve sustainable software development. Future work should address broader language coverage, fine-tuning, and real-world deployment scenarios to further advance green AI programming.