- The survey synthesizes evidence that reinforcement learning with verifiable rewards (RLVR) can turn large language models into strong reasoning models capable of tackling complex tasks such as math and coding.

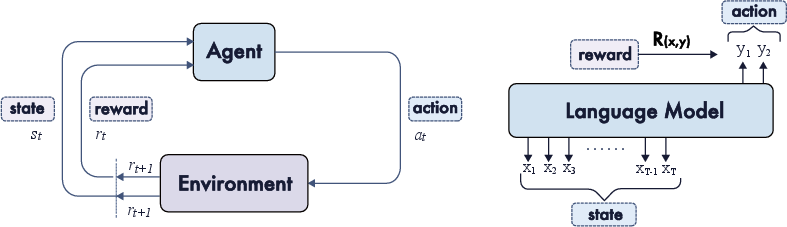

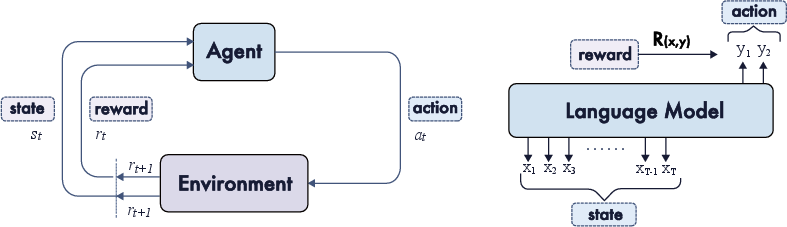

- It formalizes the RL framework for language models: the state is the prompt plus the tokens generated so far, the action is the next token, the policy is the LLM itself, and the reward is typically sequence-level correctness.

- The surveyed results indicate that critic-free methods, dynamic sampling strategies, and hybrid reward designs enable scalable and efficient training of advanced reasoning capabilities.

A Survey of Reinforcement Learning for Large Reasoning Models

Introduction and Motivation

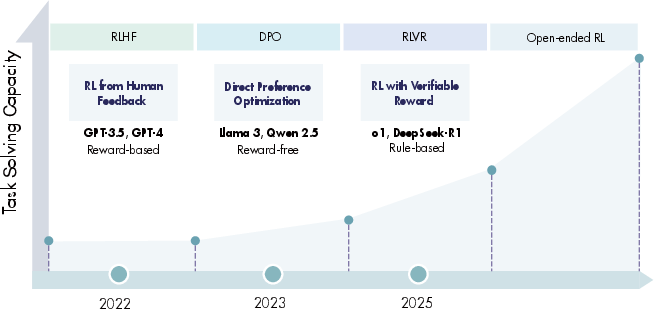

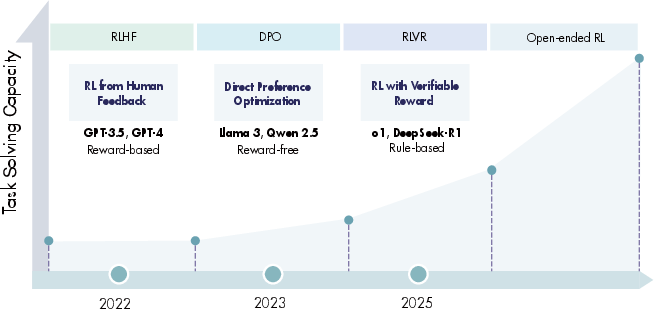

Reinforcement Learning (RL) has become a central methodology for enhancing the reasoning capabilities of LLMs, transforming them into Large Reasoning Models (LRMs). The surveyed work provides a comprehensive synthesis of the field, focusing on the application of RL to LLMs for complex logical tasks, such as mathematics, coding, and agentic behaviors. The survey emphasizes the transition from RL for human alignment (e.g., RLHF, DPO) to RL for verifiable reasoning (RLVR), highlighting the emergence of new scaling axes—particularly the allocation of test-time compute and the explicit incentivization of reasoning processes.

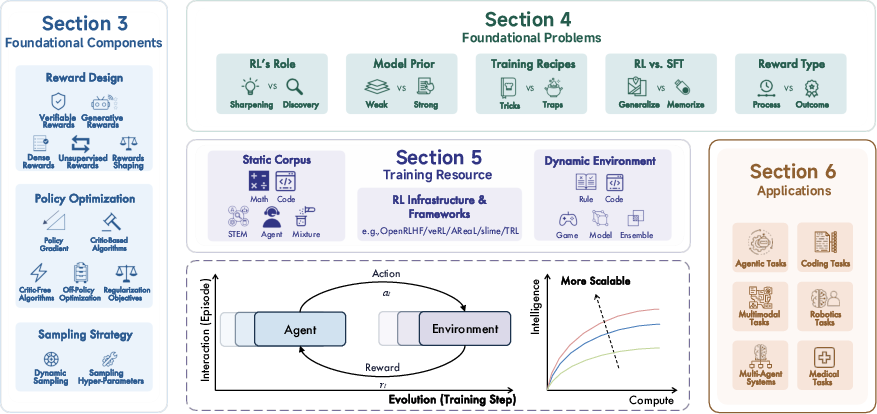

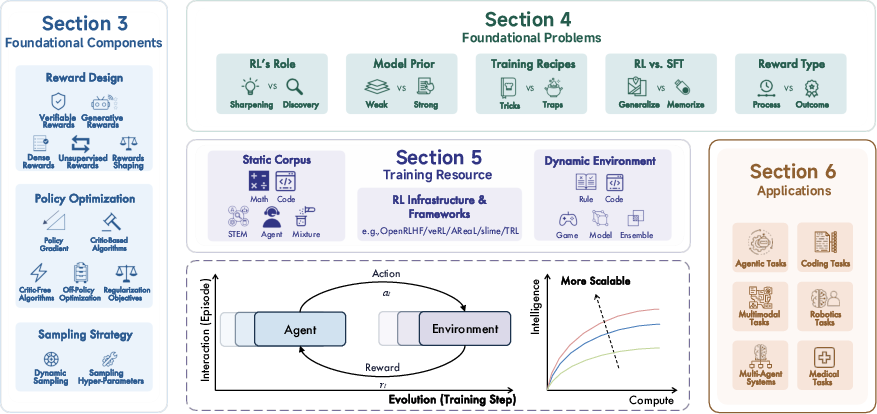

Figure 1: Overview of the survey, highlighting foundational RL components for LRMs, open problems, resources, and applications, with a focus on large-scale agent-environment interactions and long-term evolution.

Evolution of RL for LLMs and LRMs

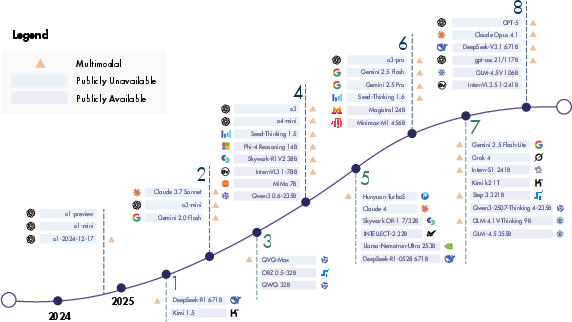

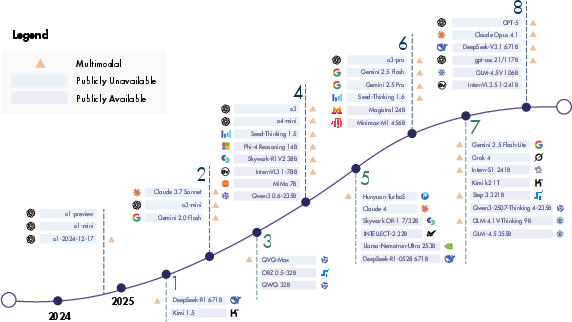

The field has evolved from RLHF and DPO, which primarily address human alignment, to RLVR, which leverages verifiable, programmatic rewards for tasks with objective ground truth (e.g., math, code). This shift is exemplified by models such as OpenAI o1 and DeepSeek-R1, which demonstrate that RL with verifiable rewards can induce sophisticated, long-form reasoning behaviors, including planning, reflection, and self-correction. The survey documents a timeline of both open-source and proprietary models, showing rapid progress in reasoning benchmarks and the expansion of RL to multimodal and agentic domains.

Figure 2: RLHF and DPO as dominant alignment methods, with RLVR emerging as a key trend for complex task-solving in LRMs; open-ended RL is identified as a major future challenge.

Figure 3: Timeline of representative reasoning models trained with RL, spanning language, multimodal, and agentic models.

The survey formalizes the mapping of RL concepts to the language modeling domain:

- State: Prompt plus generated tokens so far.

- Action: Next token selection.

- Policy: The LLM itself.

- Reward: Typically assigned at the sequence level (e.g., correctness), but can be decomposed to token, step, or turn levels for denser feedback.

- Transition: Deterministic concatenation of tokens.

This MDP formulation enables the direct application of RL algorithms to LLMs, with the learning objective being the maximization of expected reward over the data distribution.
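The mapping can be illustrated with a minimal sketch. It assumes a six-token vocabulary and a uniform random `toy_policy` that stands in for the LLM; both are hypothetical. The state is the prompt plus the tokens so far, and each action appends one token deterministically. The reward arrives only at the end of the sequence. Averaging that reward over sampled rollouts gives a Monte Carlo estimate of the objective J(θ) = E_{x∼D, y∼π_θ(·|x)}[r(x, y)].

```python
import random
from typing import Callable, List, Sequence

# Hypothetical toy vocabulary; a real LLM chooses among tens of thousands of tokens.
VOCAB = ["1", "2", "3", "+", "=", "<eos>"]
MAX_STATE_LEN = 8  # hypothetical cap on prompt + response length


def toy_policy(state: Sequence[str], rng: random.Random) -> str:
    """Stand-in for pi_theta(a_t | s_t): choose the next token given the state.

    A real policy is the LLM's softmax over its vocabulary; this toy samples
    uniformly and forces <eos> so the state never exceeds MAX_STATE_LEN tokens.
    """
    if len(state) >= MAX_STATE_LEN - 1:
        return "<eos>"
    return rng.choice(VOCAB)


def transition(state: List[str], action: str) -> List[str]:
    """Deterministic transition: append the chosen token to the state."""
    return state + [action]


def rollout(prompt: List[str],
            policy: Callable[[Sequence[str], random.Random], str],
            rng: random.Random) -> List[str]:
    """Generate one response y; the initial state s_0 is the prompt."""
    state = list(prompt)
    while True:
        action = policy(state, rng)        # a_t ~ pi(. | s_t)
        state = transition(state, action)  # s_{t+1} = s_t + [a_t]
        if action == "<eos>":
            return state[len(prompt):]


def sequence_reward(response: List[str], target: str) -> float:
    """Outcome reward r(x, y): 1.0 iff the decoded answer equals the target."""
    answer = "".join(tok for tok in response if tok != "<eos>")
    return 1.0 if answer == target else 0.0


def token_rewards(response: List[str], target: str) -> List[float]:
    """The same outcome reward viewed per step: zero except at the final token."""
    rewards = [0.0] * len(response)
    rewards[-1] = sequence_reward(response, target)
    return rewards


def estimate_objective(prompt: List[str], target: str, n: int, seed: int = 0) -> float:
    """Monte Carlo estimate of J = E[r(x, y)] for a single prompt x."""
    rng = random.Random(seed)
    return sum(sequence_reward(rollout(prompt, toy_policy, rng), target)
               for _ in range(n)) / n
```

For example, `estimate_objective(["1", "+", "1", "="], "2", n=1000)` estimates how often the toy policy answers correctly. The `token_rewards` view shows why outcome rewards are sparse: every intermediate step receives zero. Step-, token-, or turn-level rewards would fill those zeros with denser feedback.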

Figure 4: RL and LMs as agents—tokens as actions, context as state, and rewards typically at the response level.

Foundational Components

Reward Design

The survey provides a taxonomy of reward types:

- Verifiable Rewards: Rule-based, programmatic checkers for math/code; highly scalable and less susceptible to reward hacking than learned reward models.

- Generative Rewards: Model-based verifiers and generative reward models (GenRMs) for subjective or non-verifiable tasks, including rubric-based and co-evolving systems.

- Dense Rewards: Token-, step-, and turn-level rewards for improved credit assignment and sample efficiency.

- Unsupervised Rewards: Model-specific (e.g., consistency, confidence, self-rewarding) and model-agnostic (e.g., heuristic, data-centric) approaches to bypass human annotation bottlenecks.

- Reward Shaping: Combination of rule-based and model-based signals, group baselines, and Pass@K-aligned objectives to stabilize and align training.

The survey highlights the critical role of verifiable rewards in scaling RL for reasoning, while generative and dense rewards are essential for subjective or process-oriented tasks.
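A rule-based verifier and a simple shaped reward can be sketched as follows. This assumes math answers are reported in a final `\boxed{...}` and compared by exact match after light normalization. The `judge_score` input stands for a hypothetical model-based score in [0, 1], and the weights `w_judge` and `format_penalty` are illustrative assumptions, not values from the survey.

```python
import re
from typing import Optional

# Matches \boxed{...} without nested braces (a simplifying assumption).
BOXED = re.compile(r"\\boxed\{([^{}]*)\}")


def extract_boxed(response: str) -> Optional[str]:
    """Return the content of the last boxed answer in the response, if any."""
    matches = BOXED.findall(response)
    return matches[-1].strip() if matches else None


def normalize(ans: str) -> str:
    """Light normalization: drop spaces and a trailing period, lowercase."""
    return ans.replace(" ", "").rstrip(".").lower()


def verifiable_reward(response: str, ground_truth: str) -> float:
    """Binary rule-based reward: 1.0 iff the boxed answer matches the reference."""
    pred = extract_boxed(response)
    if pred is None:
        return 0.0
    return 1.0 if normalize(pred) == normalize(ground_truth) else 0.0


def shaped_reward(response: str, ground_truth: str, judge_score: float,
                  w_judge: float = 0.2, format_penalty: float = 0.1) -> float:
    """Hypothetical hybrid: rule-based correctness, a small penalty when no
    boxed answer is present, plus a down-weighted model-based judge score."""
    reward = verifiable_reward(response, ground_truth)
    if extract_boxed(response) is None:
        reward -= format_penalty
    return reward + w_judge * judge_score
```

Keeping the programmatic check as the dominant term and the model-based score as a small additive term reflects the survey's framing. The checker carries the scalable signal, and the generative component adds coverage where exact checking is not possible.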

Policy Optimization

RL for LLMs is dominated by first-order, gradient-based algorithms:

- Critic-based: PPO and variants, using value models for token-level advantage estimation; effective but computationally intensive.

- Critic-free: REINFORCE, GRPO, and derivatives, using sequence-level or group-normalized advantages; more scalable for RLVR tasks.

- Off-policy: Methods leveraging historical or offline data, importance sampling, and hybrid SFT+RL objectives for improved sample efficiency.

- Regularization: KL and entropy regularization to balance exploration and stability; length penalties to control reasoning cost.

The survey notes that critic-free methods, especially GRPO and its variants, have become the de facto standard for large-scale RLVR due to their simplicity and scalability.
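The following is a minimal, standard-library sketch of a GRPO-style critic-free update. Rewards for the G responses to one prompt are standardized within the group to form advantages. Each token's clipped surrogate reuses its sequence's advantage. A per-token KL estimate against a frozen reference policy is then subtracted. Log-probabilities are plain floats here; in practice they come from the current policy, the behavior (old) policy, and the reference model. The defaults for `beta` and `clip_eps` are illustrative assumptions, and published variants differ in how they normalize over tokens and sequences.

```python
import math
from statistics import mean, pstdev
from typing import List


def group_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantage: standardize each reward within its prompt's group.

    No value network is needed; the group mean acts as the baseline.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def clipped_token_objective(logp_new: float, logp_old: float, advantage: float,
                            clip_eps: float = 0.2) -> float:
    """PPO-style clipped surrogate for one token (to be maximized)."""
    ratio = math.exp(logp_new - logp_old)
    clipped = max(min(ratio, 1 + clip_eps), 1 - clip_eps)
    return min(ratio * advantage, clipped * advantage)


def kl_k3(logp_new: float, logp_ref: float) -> float:
    """Non-negative per-token estimator of KL(pi_theta || pi_ref): r - log r - 1,
    where r = pi_ref / pi_theta for a token sampled from pi_theta."""
    log_ratio = logp_ref - logp_new
    return math.exp(log_ratio) - log_ratio - 1


def sequence_objective(logps_new: List[float], logps_old: List[float],
                       logps_ref: List[float], advantage: float,
                       beta: float = 0.04, clip_eps: float = 0.2) -> float:
    """Token-averaged clipped surrogate minus beta * KL for one response;
    every token shares the response's sequence-level advantage."""
    terms = [clipped_token_objective(n, o, advantage, clip_eps) - beta * kl_k3(n, r)
             for n, o, r in zip(logps_new, logps_old, logps_ref)]
    return sum(terms) / len(terms)
```

A training step would average `sequence_objective` over the group and ascend its gradient. A group whose rewards are all equal (for example `[1, 1, 1, 1]`) yields all-zero advantages and therefore no policy-gradient signal. This motivates the dynamic sampling strategies described next.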

Sampling Strategies

Efficient sampling is crucial for RL stability and performance:

- Dynamic Sampling: Online filtering, curriculum learning, and prioritized sampling to focus compute on informative or under-mastered examples.

- Structured Sampling: Tree-based rollouts, shared prefixes, and segment-wise sampling for efficient credit assignment and compute reuse.

- Hyperparameter Tuning: Careful management of temperature, entropy, and sequence length budgets to balance exploration, efficiency, and cost.
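One form of dynamic sampling discards prompts whose rollouts all earn the same reward, because group-normalized advantages for those prompts are zero. Sampling continues until the batch holds enough informative groups. The sketch below assumes binary rewards and a hypothetical `rollout_fn(prompt_id, rng)` that returns one reward. It illustrates the filtering idea and is not any specific framework's implementation.

```python
import random
from typing import Callable, Dict, List


def dynamic_sample_filter(groups: Dict[str, List[float]]) -> Dict[str, List[float]]:
    """Keep only prompts whose rollout rewards are not all identical.

    If every rollout for a prompt is correct (or every one is wrong), its
    group-normalized advantages are all zero and it contributes no gradient.
    """
    return {pid: rs for pid, rs in groups.items() if max(rs) != min(rs)}


def fill_batch(prompts: List[str],
               rollout_fn: Callable[[str, random.Random], float],
               group_size: int, batch_size: int,
               max_rounds: int = 10, seed: int = 0) -> Dict[str, List[float]]:
    """Oversample prompts until `batch_size` informative groups are collected,
    returning a smaller batch if `max_rounds` is exhausted first."""
    rng = random.Random(seed)
    kept: Dict[str, List[float]] = {}
    for _ in range(max_rounds):
        for pid in rng.sample(prompts, k=min(len(prompts), batch_size)):
            if pid in kept:
                continue
            rewards = [rollout_fn(pid, rng) for _ in range(group_size)]
            kept.update(dynamic_sample_filter({pid: rewards}))
            if len(kept) >= batch_size:
                return kept
    return kept
```

The trade-off is extra generation compute spent on discarded groups in exchange for batches in which every prompt carries signal. Curriculum and prioritized sampling pursue the same goal by steering selection toward prompts that are neither already mastered nor hopeless.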

Foundational Problems

The survey identifies and analyzes several open problems:

- Sharpening vs. Discovery: Debate over whether RL merely sharpens latent capabilities or enables genuine discovery of new reasoning patterns. Recent evidence suggests both phenomena can occur, depending on training duration, regularization, and model priors.

- RL vs. SFT: RL tends to generalize better under distribution shift, while SFT is prone to memorization. Hybrid and unified paradigms are emerging as best practice.

- Model Priors: RL responsiveness varies dramatically across model families (e.g., Qwen vs. Llama); mid-training and curriculum design can mitigate weak priors.

- Training Recipes: Minimalist, reproducible recipes (e.g., dynamic sampling, decoupled clipping) are favored over complex normalization tricks; unified evaluation protocols are needed.

- Reward Type: Outcome rewards are scalable but risk unfaithful reasoning; process rewards offer dense guidance but are costly to annotate. Hybrid approaches are promising.
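Decoupled clipping, one of the minimalist recipe components mentioned above, replaces PPO's symmetric trust region [1 − ε, 1 + ε] with separate lower and upper bounds. The sketch below follows the "clip-higher" idea associated with DAPO. The default `eps_low`/`eps_high` values are illustrative assumptions.

```python
import math


def decoupled_clip_objective(logp_new: float, logp_old: float, advantage: float,
                             eps_low: float = 0.2, eps_high: float = 0.28) -> float:
    """Clipped surrogate with asymmetric bounds [1 - eps_low, 1 + eps_high].

    Raising only the upper bound gives tokens with positive advantage more room
    to gain probability per update (intended to slow entropy collapse), while
    the lower bound still limits how far probabilities are pushed down.
    Setting eps_high == eps_low recovers the standard symmetric PPO clip.
    """
    ratio = math.exp(logp_new - logp_old)
    clipped = min(max(ratio, 1 - eps_low), 1 + eps_high)
    return min(ratio * advantage, clipped * advantage)
```

For a token whose probability ratio has reached 1.25 with a positive advantage, the symmetric clip (ε = 0.2) caps the objective at 1.2, so that token's gradient is cut off. The decoupled version still credits the full 1.25.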

Training Resources

The survey catalogs the ecosystem of RL resources:

- Static Corpora: High-quality, verifiable datasets for math, code, STEM, and agentic tasks, with increasing emphasis on process traces and difficulty stratification.

- Dynamic Environments: Rule-based, code-based, game-based, and model-based environments for scalable, interactive RL; essential for agentic and open-ended tasks.

- RL Infrastructure: Open-source frameworks (e.g., TRL, OpenRLHF, Verl, AReaL, NeMo-RL, ROLL, slime) supporting distributed, asynchronous, and agentic RL pipelines, with growing support for multimodal and multi-agent settings.
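As a hypothetical example of a rule-based dynamic environment, the class below procedurally generates addition problems whose answers can be checked exactly. A trainer can therefore draw fresh verifiable tasks on demand instead of exhausting a fixed corpus. The `reset`/`step` interface loosely mirrors common RL environment conventions but is not the API of any framework listed above. `difficulty` (the number of operands) is an assumed knob that a curriculum could raise over time.

```python
import random
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ArithmeticEnv:
    """Hypothetical rule-based environment with exactly checkable answers."""
    difficulty: int = 2  # number of operands; a curriculum could increase it
    seed: int = 0
    rng: random.Random = field(init=False)
    answer: Optional[int] = field(init=False, default=None)

    def __post_init__(self) -> None:
        self.rng = random.Random(self.seed)

    def reset(self) -> str:
        """Start a new single-turn episode and return the problem text."""
        nums = [self.rng.randint(1, 99) for _ in range(self.difficulty)]
        self.answer = sum(nums)
        return " + ".join(map(str, nums)) + " = ?"

    def step(self, response: str) -> float:
        """Reward 1.0 iff the response parses to the correct integer."""
        try:
            return 1.0 if int(response.strip()) == self.answer else 0.0
        except ValueError:
            return 0.0  # unparseable output earns no reward
```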

Applications

RL for LRMs has enabled advances across multiple domains:

- Coding: RLVR and agentic RL have improved competitive programming, domain-specific code generation, and repository-level software engineering.

- Agentic Tasks: RL-trained agents excel in tool use, search, browsing, GUI/computer use, and deep research, with asynchronous rollouts and memory mechanisms reducing latency.

- Multimodal: RL enhances both understanding (image, video, 3D) and generation (image, video) in multimodal models, with unified frameworks for cross-modal reasoning.

- Multi-Agent Systems: RL enables improved collaboration, credit assignment, and policy optimization in LLM-based MAS, though efficient communication and scalability remain open challenges.

- Robotics: RL for Vision-Language-Action (VLA) models addresses data scarcity and generalization, with outcome-level rewards enabling scalable training.

- Medical: RL is well established for verifiable medical tasks; non-verifiable tasks require rubric-based or offline RL, with scalability and stability as ongoing challenges.

Future Directions

The survey outlines several promising research avenues:

- Continual RL: Lifelong learning and adaptation to evolving tasks and data.

- Memory-based RL: Structured, reusable experience repositories for agentic reasoning.

- Model-based RL: Integration of world models for richer state representations and scalable reward generation.

- Efficient Reasoning: Resource-rational compute allocation and adaptive halting policies.

- Latent Space Reasoning: RL in continuous latent spaces for smoother, more powerful reasoning.

- RL for Pre-training: RL as a scaling strategy during pre-training, not just post-training.

- Diffusion-based LLMs: RL for DLLMs, with challenges in likelihood estimation and trajectory optimization.

- Scientific Discovery: RL-driven LLMs for open-ended, verifiable scientific tasks.

- Architecture-Algorithm Co-Design: RL as a mechanism for dynamic, hardware-aware model adaptation.

Conclusion

This survey provides a systematic synthesis of RL for LRMs, emphasizing the centrality of verifiable rewards, scalable policy optimization, and efficient sampling. The field is rapidly advancing toward more general, autonomous, and efficient reasoning models, with RL serving as both a catalyst and a bottleneck. Theoretical and practical challenges remain, particularly in reward design, generalization, and infrastructure, but the trajectory toward scalable, agentic, and multimodal reasoning is clear. Future progress will depend on unified evaluation, reproducible recipes, and the integration of RL throughout the model lifecycle—from pre-training to deployment.