- The paper presents a comprehensive taxonomy of trustworthiness dimensions, including truthfulness, safety, robustness, fairness, and privacy.

- It analyzes Chain-of-Thought prompting and LRMs using various training strategies like supervised fine-tuning, DPO, and reinforcement learning, revealing trade-offs such as increased hallucination risks.

- Empirical results underscore vulnerabilities to adversarial attacks, biases, and privacy breaches, emphasizing the need for robust evaluation protocols and enhanced defense mechanisms.

Survey of Trustworthiness in Reasoning with LLMs

Introduction

The surveyed paper presents a comprehensive taxonomy and analysis of trustworthiness in reasoning with LLMs, focusing on the impact of Chain-of-Thought (CoT) prompting and the emergence of Large Reasoning Models (LRMs). The work systematically reviews five core dimensions: truthfulness, safety, robustness, fairness, and privacy, with an emphasis on how advanced reasoning capabilities both enhance and challenge trustworthiness. The survey synthesizes findings from recent literature, highlights methodological advances, and identifies unresolved vulnerabilities and open research questions.

Reasoning Paradigms: CoT Prompting and LRMs

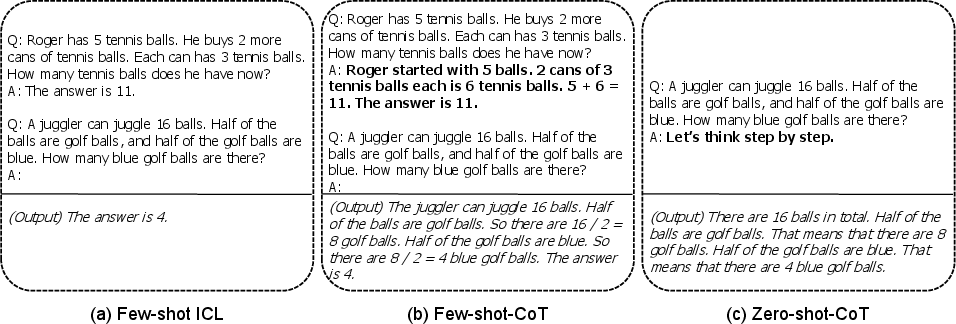

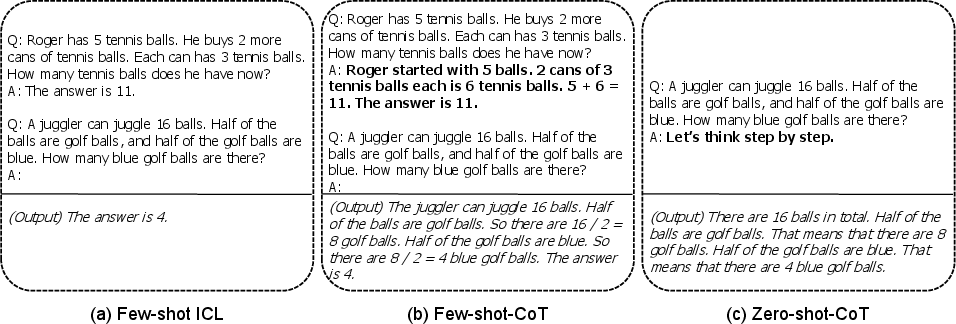

LLM reasoning is formalized as the joint generation of intermediate reasoning steps (T) and final answers (A), with CoT prompting serving as a primary technique for eliciting interpretable reasoning. Few-shot-CoT relies on annotated demonstrations, while zero-shot-CoT uses generic prompts such as "Let's think step by step" to induce reasoning.

Figure 1: Illustration of typical CoT prompting. Few-shot-CoT uses several examples with the reasoning process to elicit CoT, and zero-shot-CoT uses a prefix prompt to induce the reasoning process.

LRMs, exemplified by models like OpenAI o1 and DeepSeek-R1, are trained to autonomously generate reasoning traces. Training strategies include supervised fine-tuning (SFT), Direct Preference Optimization (DPO), and reinforcement learning (RL) with process or outcome reward models. Multimodal LRMs extend these techniques to vision-language domains, requiring specialized data generation and reward modeling.

Truthfulness: Hallucination and Faithfulness

Hallucination

Reasoning models, while more structured and persuasive, are susceptible to hallucinations that are harder to detect and more credible to users. Studies consistently show that LRMs can exhibit higher hallucination rates than non-reasoning models, especially in non-reasoning tasks and when faced with unanswerable questions. Hallucination frequency correlates with CoT length and training paradigm, with outcome-based RL fine-tuning exacerbating the issue due to high policy variance and entropy.

Detection and mitigation strategies include:

- Fine-grained Process Reward Models (FG-PRM) for type-specific hallucination detection.

- Reasoning score metrics based on hidden state divergence.

- Training-based mitigation via reward shaping (e.g., encouraging "I don't know" responses).

- Planning-based mitigation by decoupling reasoning plans from multimodal inputs.

Faithfulness

Faithfulness is defined as the alignment between the model's reasoning trace and its actual decision process. Evaluation methods include CoT intervention (truncation, error injection), input intervention (biasing prompts), and parameter intervention (unlearning specific reasoning steps). Metrics such as Area Over Curve (AOC) and Leakage-Adjusted Simulatability (LAS) are used to quantify faithfulness.

Empirical findings reveal contradictory trends: some studies report that larger models are less faithful despite higher accuracy, while others find the opposite. Faithfulness is also task-dependent and sensitive to post-training techniques (SFT, DPO, RLVR). Symbolic reasoning and self-refinement mechanisms (e.g., LOGIC-LM, SymbCoT, FLARE) are proposed to enhance faithfulness, but unfaithfulness remains unresolved, especially in high-stakes domains.

Safety: Vulnerability, Jailbreak, Alignment, and Backdoor

Vulnerability Assessment

Open-source LRMs are demonstrably vulnerable to jailbreak attacks, with attack success rates (ASR) reaching 100% in some benchmarks. Reasoning traces can amplify harmfulness by providing detailed, actionable content. Safety performance varies across datasets, topics, and languages, with pronounced vulnerabilities in cybersecurity and discrimination domains. Multimodal LRMs inherit similar weaknesses, and self-correction in reasoning traces is only partially effective.

Jailbreak Attacks and Defenses

Advanced reasoning enables more sophisticated jailbreak attacks, including multi-turn prompt obfuscation, cipher decoding, and narrative wrapping. Automated attack frameworks (e.g., AutoRAN, Mousetrap) exploit reasoning steps to bypass safety alignment. Defense strategies leverage reasoning-augmented guardrail models (e.g., GuardReasoner, X-Guard, RSafe), curriculum learning, and reward model-based detection. Decoding-phase defenses (e.g., Saffron-1) optimize inference-time safety, while post-hoc guardrail models (e.g., ReasoningShield) target harmful reasoning traces.

Alignment and Safety Tax

Safety alignment via CoT data collection and post-training (SFT, DPO, RL) is effective but incurs a "safety tax," sacrificing general capabilities for improved safety. Empirical studies show that RLHF and model merging can mitigate but not eliminate this trade-off. Alignment strategies for LRMs involve curated CoT datasets, zero-sum game frameworks, and dual-path safety heads for key sentence detection.

Backdoor Attacks

Backdoor vulnerabilities are present in both training-time (data poisoning, trigger insertion) and inference-time (prompt manipulation, RAG poisoning) settings. Reasoning models are more susceptible due to their complex reasoning traces. Defensive approaches include Chain-of-Scrutiny, reasoning step analysis, and agent-based repair mechanisms.

Robustness: Adversarial Noise, Overthinking, Underthinking

Robustness is challenged by adversarial input perturbations, misleading reasoning steps, and manipulations of thinking length. CoT prompting and reasoning-based bias detectors improve robustness but do not fully counteract vulnerabilities. Benchmarks (e.g., MATH-Perturb, Math-RoB, CatAttack) reveal that LRMs are sensitive to operator changes, distractors, and negation prompts. Overthinking and underthinking are emergent phenomena, with models generating excessively long or abnormally short reasoning traces, often triggered by unanswerable or adversarial inputs. Mitigation strategies span prompt engineering, training-based interventions, and inference-time scaling.

Fairness

Reasoning models exhibit persistent biases across dialects, genders, and personas. CoT prompting reduces but does not eliminate bias, and explicit reasoning can sometimes exacerbate discrimination. Bias detection models (e.g., BiasGuard) employ reasoning-augmented training, but fairness remains contingent on data quality and distribution.

Privacy

Privacy risks arise from both model memorization and prompt inference. Unlearning methods (R-TOFU, R2MU) are insufficient to fully erase sensitive information, and multi-turn reasoning can inadvertently reveal private data. Model IP protection leverages watermarking and antidistillation sampling, with CoT-based fingerprints embedded in reasoning traces. Prompt-related privacy attacks (e.g., GeoMiner) exploit attribute inference capabilities, and current guardrails are inadequate for defense.

Future Directions

The survey identifies several open research areas:

- Standardized, robust faithfulness metrics to resolve contradictory findings.

- Deeper analysis of safety mechanisms, dataset construction, and RL contributions.

- Development of fine-grained, discriminative safety, privacy, and fairness benchmarks.

- Exploration of combined training-based and training-free methods for faithfulness and robustness.

Conclusion

This survey provides a structured taxonomy and synthesis of trustworthiness in reasoning with LLMs, highlighting both advances and unresolved vulnerabilities. The interplay between reasoning capability and trustworthiness is complex, with improvements in interpretability and performance often accompanied by expanded attack surfaces and new risks. Continued research is required to develop reliable, safe, and fair reasoning models, with emphasis on robust evaluation protocols, alignment strategies, and privacy-preserving mechanisms.