Overview of "Igniting Language Intelligence: The Hitchhiker's Guide From Chain-of-Thought Reasoning to Language Agents"

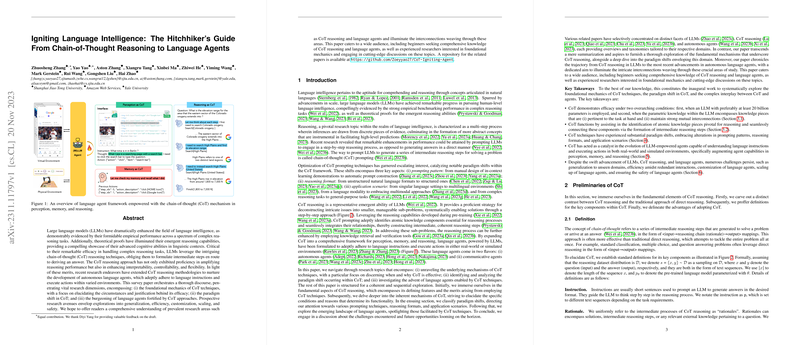

This paper surveys the advances and applications of Chain-of-Thought (CoT) reasoning techniques in large language models (LLMs) and their role in the evolution of language agents. It explains the foundational mechanics of CoT, describes the paradigm shifts within CoT research, and traces the progression towards autonomous language agents.

Chain-of-Thought (CoT) Reasoning

CoT reasoning lets an LLM tackle complex tasks by generating intermediate reasoning steps before its final answer. Besides improving performance on multi-step problems, making these steps explicit improves interpretability, controllability, and flexibility. The survey organizes advances in CoT along three dimensions: foundational mechanics, paradigm shifts, and implications for language agents.
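The Python sketch below is a minimal illustration of generating intermediate reasoning steps. It builds two kinds of prompt:

- a few-shot CoT prompt, where each demonstration shows a worked rationale before its final answer;
- a zero-shot variant, which replaces the demonstrations with the trigger phrase "Let's think step by step."

The following are illustrative assumptions, not an API from the survey: the names `Exemplar`, `build_few_shot_cot_prompt`, `build_zero_shot_cot_prompt`, and `extract_answer`, the "The answer is X." convention, and the example questions. No model is called.

```python
import re
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Exemplar:
    """One hypothetical few-shot demonstration: question, worked rationale, final answer."""
    question: str
    rationale: str
    answer: str


def build_few_shot_cot_prompt(exemplars: List[Exemplar], question: str) -> str:
    """Few-shot CoT: every demonstration shows its reasoning steps before the answer."""
    blocks = [
        f"Q: {ex.question}\nA: {ex.rationale} The answer is {ex.answer}."
        for ex in exemplars
    ]
    blocks.append(f"Q: {question}\nA:")  # the model continues from here
    return "\n\n".join(blocks)


def build_zero_shot_cot_prompt(question: str) -> str:
    """Zero-shot CoT: a trigger phrase stands in for hand-written demonstrations."""
    return f"Q: {question}\nA: Let's think step by step."


def extract_answer(completion: str) -> Optional[str]:
    """Assumes the completion ends with 'The answer is X.' where X is a single token."""
    match = re.search(r"The answer is\s*(\S+?)\.?\s*$", completion.strip())
    return match.group(1) if match else None


# Illustrative demonstration (hypothetical wording).
demo = Exemplar(
    question="Roger has 5 tennis balls. He buys 2 cans of 3 tennis balls each. How many does he have now?",
    rationale="He starts with 5 balls. 2 cans of 3 balls is 6 balls. 5 + 6 = 11.",
    answer="11",
)
print(build_few_shot_cot_prompt([demo], "A baker has 4 trays of 6 rolls. How many rolls is that?"))
```

In practice the returned string would be sent to an LLM, and `extract_answer` would be applied to its completion. Keeping the answer phrase fixed across demonstrations is what keeps that parsing step simple.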

Foundational Mechanics

From an engineering standpoint, CoT tends to be effective when three conditions hold:

- the LLM is large enough (roughly 20 billion parameters or more);
- the task requires multi-step reasoning;
- the model's training data contains strongly interconnected knowledge clusters.

From a theoretical standpoint, CoT helps the LLM identify atomic pieces of knowledge and connect them into coherent reasoning steps.

Paradigm Shifts

The survey identifies significant paradigm shifts in CoT techniques:

- Prompting Pattern: Prompting strategies have evolved from manual prompt design toward automatic prompt generation, improving CoT in both zero-shot and few-shot settings.

- Reasoning Format: CoT has expanded from linear chains into structured formats, including tree-like and table-like formulations, which support more sophisticated reasoning processes.

- Application Scenario: CoT has been applied in multilingual and multimodal settings, extending its utility beyond single-language, text-only tasks toward complex real-world scenarios.
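Tree-like formats such as Tree-of-Thoughts work by:

- treating each partial reasoning chain as a node;
- expanding several candidate next steps;
- pruning weak branches with an evaluator.

The toy Python sketch below assumes a hypothetical puzzle: reach a target number using "+ 3" and "* 2" steps. `propose` and the inner `score` function are deterministic stand-ins for the LLM calls a real system would make. Beam-pruned breadth-first search is only one of several possible search strategies.

```python
from typing import List, Optional, Tuple

# A node in the reasoning tree: (current value, reasoning steps taken so far).
State = Tuple[int, Tuple[str, ...]]


def propose(state: State) -> List[State]:
    """Stand-in for an LLM 'thought generator': expand one partial solution into candidates."""
    value, steps = state
    return [
        (value + 3, steps + (f"{value} + 3 = {value + 3}",)),
        (value * 2, steps + (f"{value} * 2 = {value * 2}",)),
    ]


def tree_search(start: int, target: int, beam_width: int = 2,
                max_depth: int = 5) -> Optional[Tuple[str, ...]]:
    """Level-by-level search over thoughts, keeping the best `beam_width` states at each depth."""

    def score(state: State) -> int:
        # Stand-in for an LLM 'state evaluator': states closer to the target score higher.
        return -abs(target - state[0])

    frontier: List[State] = [(start, ())]
    for _ in range(max_depth):
        children = [child for state in frontier for child in propose(state)]
        for value, steps in children:
            if value == target:  # a complete chain of reasoning steps reaches the goal
                return steps
        frontier = sorted(children, key=score, reverse=True)[:beam_width]  # prune the tree
    return None


print(tree_search(1, 11))
```

Here `tree_search(1, 11)` returns the chain `1 + 3 = 4`, `4 * 2 = 8`, `8 + 3 = 11`. A linear chain commits to a single path. The tree version instead keeps `beam_width` alternatives alive at each depth, at the cost of more evaluator and generator calls.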

Language Agents

Building on CoT reasoning, language agents have emerged that can interact with real-world or simulated environments. These agents use CoT not only for reasoning but also for perception and memory operations. Their capabilities have been demonstrated in diverse fields such as autonomous control, interactive agents, and programming assistance.
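The loop below is a minimal sketch of how an agent can interleave CoT-style thoughts with actions and observations, in the spirit of ReAct-style Thought/Action/Observation prompting. It is a simplification that rests on these assumptions:

- `Agent`, `scripted_policy`, and the `lookup` tool are hypothetical.
- The policy is a hard-coded stand-in for an LLM.
- "Memory" is just the accumulated text history.
- "Perception" is reduced to reading a tool's return value.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

# A policy maps the agent's context to (thought, action name, action argument).
Policy = Callable[[str], Tuple[str, str, str]]


@dataclass
class Agent:
    policy: Policy                          # stand-in for an LLM that reasons step by step
    tools: Dict[str, Callable[[str], str]]  # the (hypothetical) environment the agent acts on
    memory: List[str] = field(default_factory=list)

    def run(self, task: str, max_steps: int = 5) -> str:
        self.memory.append(f"Task: {task}")
        for _ in range(max_steps):
            context = "\n".join(self.memory)             # memory: past steps are fed back in
            thought, action, arg = self.policy(context)  # reasoning: a CoT-style thought, then an action
            self.memory.append(f"Thought: {thought}")
            if action == "finish":
                return arg
            observation = self.tools[action](arg)        # perception: read the environment's response
            self.memory.append(f"Action: {action}[{arg}]\nObservation: {observation}")
        return "max steps reached"


def scripted_policy(context: str) -> Tuple[str, str, str]:
    """Hypothetical hard-coded policy used in place of a real LLM."""
    if "Observation:" not in context:
        return ("I need to look up the capital first.", "lookup", "France")
    last_observation = context.rsplit("Observation: ", 1)[1]
    return (f"The lookup returned {last_observation}.", "finish", last_observation)


capitals = {"France": "Paris"}
agent = Agent(policy=scripted_policy, tools={"lookup": lambda c: capitals.get(c, "unknown")})
print(agent.run("What is the capital of France?"))
```

Running it prints `Paris` after one lookup step. Capping the loop with `max_steps` keeps a policy that never chooses `finish` from running forever.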

Numerical Results and Claims

The paper highlights substantial improvements in reasoning performance from CoT techniques. For example, notable gains on arithmetic reasoning benchmarks such as GSM8K and AQuA underscore CoT's utility for improving reasoning accuracy. The paper also shows that self-consistency further strengthens CoT on commonsense reasoning tasks. This technique samples multiple reasoning paths and keeps the most frequent answer.
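Self-consistency replaces a single greedy reasoning path with several sampled ones and returns the most frequent final answer. The Python sketch below covers only the voting step. The sampled completions are made up, the "The answer is X." format is an assumed convention, and the helper names are hypothetical.

```python
import re
from collections import Counter
from typing import Iterable, Optional


def extract_final_answer(completion: str) -> Optional[str]:
    """Assumes each sampled path ends with 'The answer is X.' for a single-token X."""
    match = re.search(r"The answer is\s*(\S+?)\.?\s*$", completion.strip())
    return match.group(1) if match else None


def self_consistency(completions: Iterable[str]) -> Optional[str]:
    """Majority vote over the final answers of independently sampled reasoning paths."""
    answers = (extract_final_answer(c) for c in completions)
    votes = Counter(a for a in answers if a is not None)  # paths without a parseable answer are dropped
    if not votes:
        return None
    # most_common orders equal counts by first occurrence, so ties go to the earliest answer.
    return votes.most_common(1)[0][0]


# Made-up completions standing in for samples drawn at a nonzero temperature.
samples = [
    "3 + 4 = 7 and 7 * 2 = 14. The answer is 14.",
    "3 * 2 = 6 and 6 + 4 = 10. The answer is 10.",
    "Adding first gives 7, and doubling gives 14. The answer is 14.",
    "I am not sure how to finish this one.",
]
print(self_consistency(samples))
```

With this sample list it prints `14`, since two of the three parseable paths agree on that answer.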

Implications and Future Directions

The findings suggest that CoT has considerable potential for developing more advanced language agents with stronger autonomous and interactive capabilities. The survey encourages future research on generalization to unseen domains, agent efficiency, customization, and safety.

Conclusion

This survey provides an in-depth exploration of CoT reasoning, its paradigm shifts, and its transformative impact on language agents. It offers a forward-looking perspective on integrating CoT techniques into LLMs to realize their full potential in AI development. The challenges it outlines invite further work on refining these models and ensuring their scalability, efficiency, and safety in increasingly complex environments.