- The paper presents a novel multi-output spiking neuron architecture that integrates a non-linear reset feedback to stabilize learning under low-bit conditions.

- The method combines stateful SNN dynamics with deep SSM computational strengths through a learnable reset mechanism triggered when thresholds are exceeded.

- Experimental results indicate competitive performance in tasks like keyword spotting and event-based vision, with minimal computational overhead suitable for edge deployment.

Low-Bit Data Processing Using Multiple-Output Spiking Neurons with Non-linear Reset Feedback

Introduction

Neuromorphic computing aims at low-latency, energy-efficient signal processing using Spiking Neural Networks (SNNs). These networks, inspired by biological neurons, use stateful units to process low-bit data. SNNs traditionally employ biologically plausible models such as Integrate-and-Fire (IF) neurons, whereas Deep State-Space Models (SSMs) are typically designed with high-precision activation functions and lack reset mechanisms. This paper integrates aspects of both: the proposed multiple-output spiking neuron combines linear state transitions with a non-linear feedback mechanism implemented through a reset. The model thereby pairs the stateful dynamics of SNNs with the computational strengths of deep SSMs.

Proposed Neuron Model

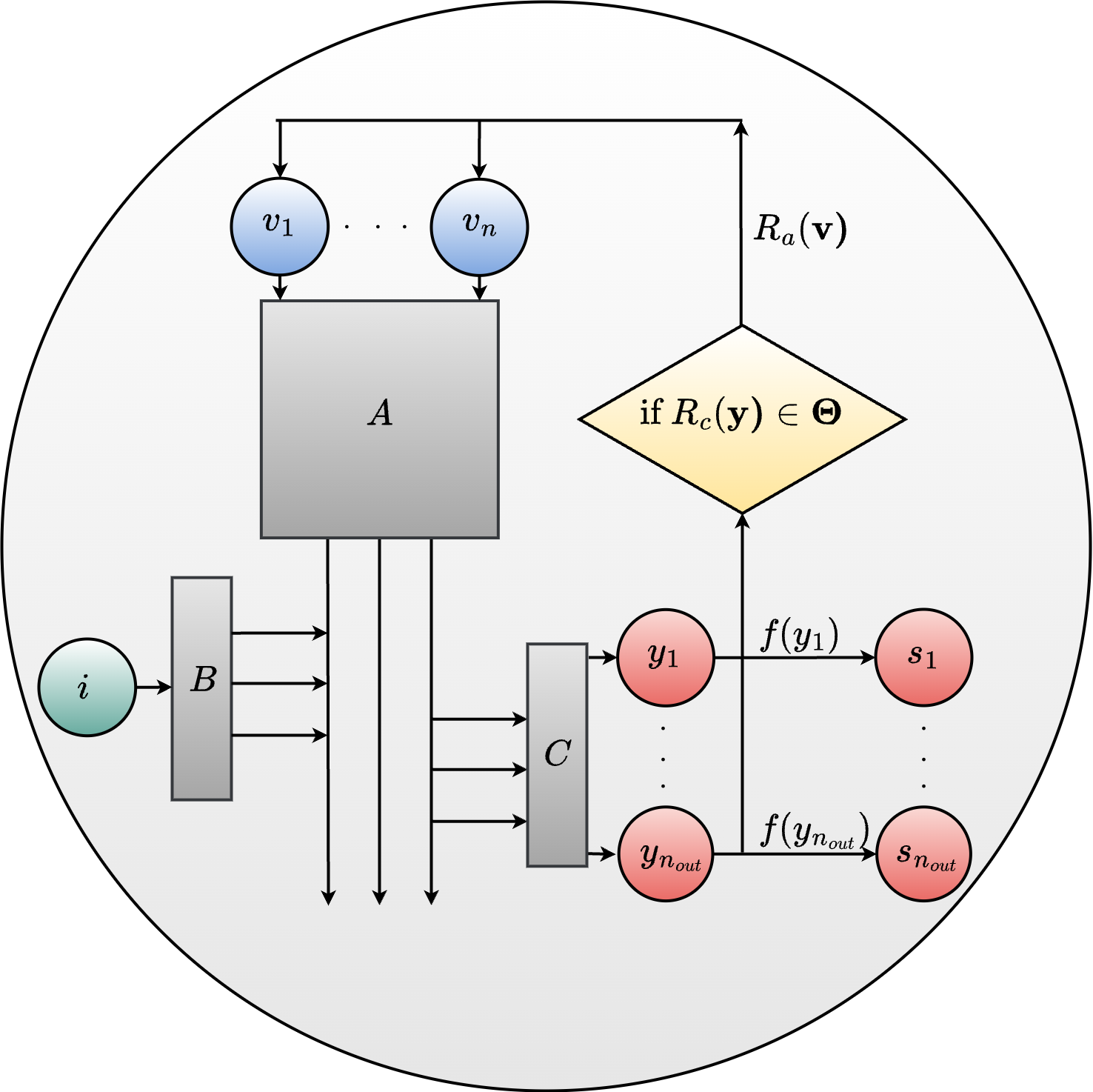

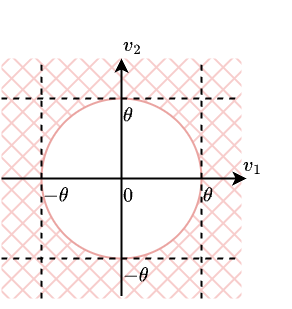

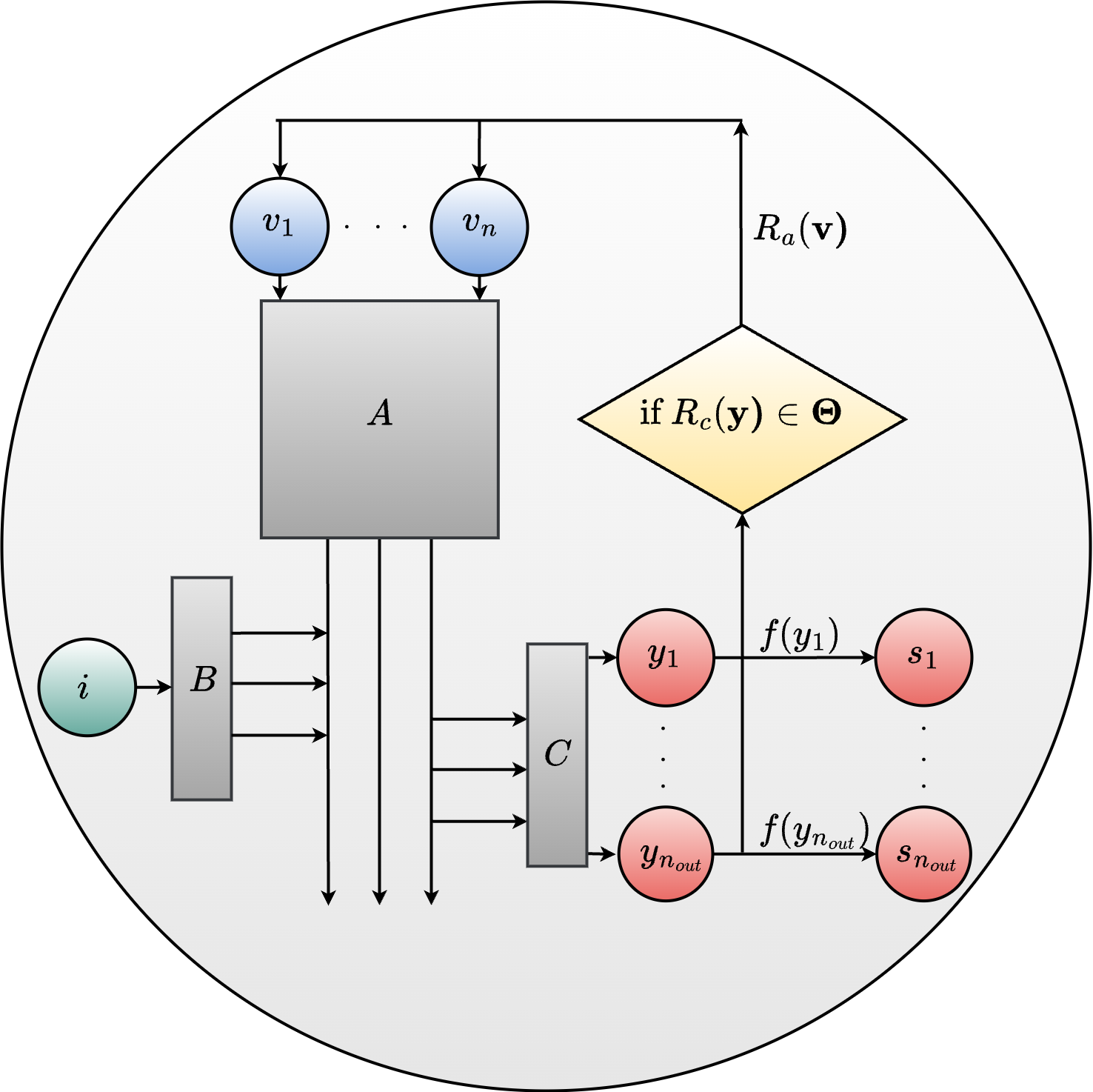

The proposed neuron model uses a multiple-output framework with a general state transition matrix and a reset mechanism defined by learnable parameters. This contrasts with the single-output model prevalent in the SNN literature. A complex-valued diagonal state transition gives the neuron richer dynamics. The reset condition and the reset action are also decoupled from conventional spiking behavior: the reset is triggered when a specific threshold is exceeded, not solely by an output spike. This mechanism can facilitate learning even when the neuron dynamics are unstable, a regime that typical SSMs are generally constrained to avoid.

Figure 1: Illustration of dynamics of a neuron with multiple-outputs and a general reset mechanism.
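The behavior described above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the paper's parametrization: the class name `MultiOutputResetNeuron`, the three reset parameters (`reset_theta`, `reset_scale`, `reset_shift`), the choice to check the reset on the real part of the first state variable, and all numeric values are hypothetical. The sketch shows a diagonal complex-valued state transition, one binary output per state variable, and a reset that is triggered by a threshold on the state instead of by the outputs.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class MultiOutputResetNeuron:
    """Illustrative neuron: diagonal complex state, several outputs, threshold reset."""
    lam: List[complex]           # diagonal state transition, one entry per state variable
    b: List[complex]             # input weight per state variable
    out_thresholds: List[float]  # one output threshold per state variable
    reset_theta: float           # reset threshold (hypothetical parameter)
    reset_scale: float           # multiplicative reset factor (hypothetical parameter)
    reset_shift: float           # subtractive reset offset (hypothetical parameter)

    def run(
        self, inputs: List[float]
    ) -> Tuple[List[List[int]], List[bool], List[List[complex]]]:
        state = [0j] * len(self.lam)
        outputs, resets, trace = [], [], []
        for x in inputs:
            # Linear state transition (element-wise, diagonal case): s <- lam * s + b * x
            state = [l * s + w * x for l, s, w in zip(self.lam, state, self.b)]
            # Multiple binary outputs: one per state variable, read from the real part.
            outputs.append(
                [int(s.real > th) for s, th in zip(state, self.out_thresholds)]
            )
            # The reset condition is checked on the state itself, not on the outputs.
            fired = state[0].real > self.reset_theta
            if fired:
                # Non-linear feedback: rescale and shift the whole state.
                state = [self.reset_scale * s - self.reset_shift for s in state]
            resets.append(fired)
            trace.append(list(state))  # state after any reset
        return outputs, resets, trace


def make_neuron(reset_theta: float) -> MultiOutputResetNeuron:
    return MultiOutputResetNeuron(
        lam=[1.1 + 0j, 0.5 + 0.5j],  # |1.1| > 1, so the first mode is unstable
        b=[1 + 0j, 1 + 0j],
        out_thresholds=[1.5, 1.0],
        reset_theta=reset_theta,
        reset_scale=0.0,
        reset_shift=0.0,
    )


inputs = [1.0] * 50
_, resets_on, trace_on = make_neuron(reset_theta=3.0).run(inputs)
# An infinite threshold disables the reset, leaving a purely linear recurrence.
_, resets_off, trace_off = make_neuron(reset_theta=float("inf")).run(inputs)

print("resets fired:", sum(resets_on))
print("max |state[0]| with reset:", max(abs(s[0]) for s in trace_on))
print("final |state[0]| without reset:", abs(trace_off[-1][0]))
```

With the reset enabled, the unstable first mode is repeatedly pulled back and stays bounded; with the reset disabled, the same mode grows geometrically under constant input. This is only a toy view of why a reset may help when the linear dynamics are unstable, and it says nothing about training behavior.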

Experimental Results

The experimental evaluation covers keyword spotting, event-based vision, and sequential pattern recognition. The neuron model achieves performance comparable to existing benchmarks in the SNN literature. Notably, the reset mechanism proved advantageous in overcoming instability in the neuron dynamics, leading to robust learning even when high precision is not maintained.

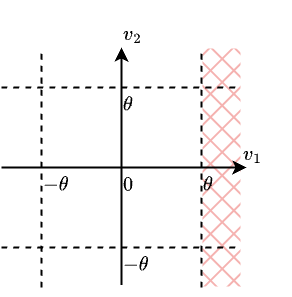

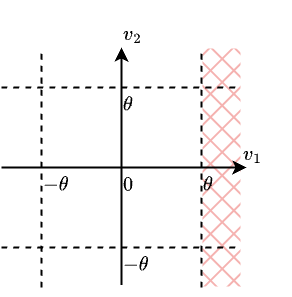

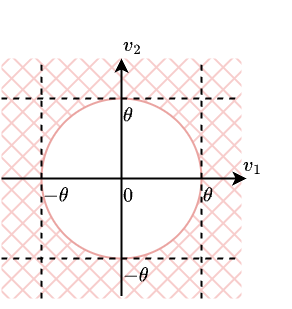

Figure 2: Illustration of adLIF neuron reset condition with v1>θ.

The results consistently show that the proposed architecture holds promise across data modalities. Its versatility is further demonstrated by its ability to generalize across multilingual spoken word recognition and gesture-based data processing.

Computational Considerations

The reset mechanism is the main source of additional computational overhead in the proposed model. This overhead is small: three additional learnable parameters per neuron and a slight increase in operations, without significantly affecting overall efficiency. The architecture remains scalable and deployable, which makes it suitable for edge applications where power and latency constraints are paramount.
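To put the parameter overhead in perspective, the following sketch computes the relative increase for a layer. Only the figure of three extra parameters per neuron comes from the text above; the function name `reset_parameter_overhead`, the layer size of 512 neurons, and the 128 baseline parameters per neuron are hypothetical values chosen for illustration.

```python
from typing import Tuple


def reset_parameter_overhead(
    num_neurons: int, base_params_per_neuron: int, extra_per_neuron: int = 3
) -> Tuple[int, float]:
    """Return (extra parameter count, relative increase) from adding reset parameters."""
    base = num_neurons * base_params_per_neuron
    extra = num_neurons * extra_per_neuron
    return extra, extra / base


# Hypothetical layer: 512 neurons, each with 128 parameters before adding the reset.
extra, ratio = reset_parameter_overhead(num_neurons=512, base_params_per_neuron=128)
print(f"{extra} extra parameters, {ratio:.2%} relative increase")
```

Because the extra count is fixed per neuron, the relative increase shrinks as the number of baseline parameters per neuron grows.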

Conclusion

The paper introduces a multiple-output spiking neuron model that bridges the gap between traditional SNNs and deep SSM architectures. It highlights the potential benefits of integrating non-linear reset mechanisms to enhance learning stability, particularly when the neuron dynamics are unstable. Such integration opens pathways for more expressive and biologically inspired SSM-based architectures, potentially enriching both theoretical understanding and practical implementation in neuromorphic computing. Future work may refine the learnable reset mechanisms and investigate real-time deployment on neuromorphic hardware platforms.