- The paper presents a modular multi-agent framework that unifies perception, reasoning, planning, and action execution for enhanced GUI automation.

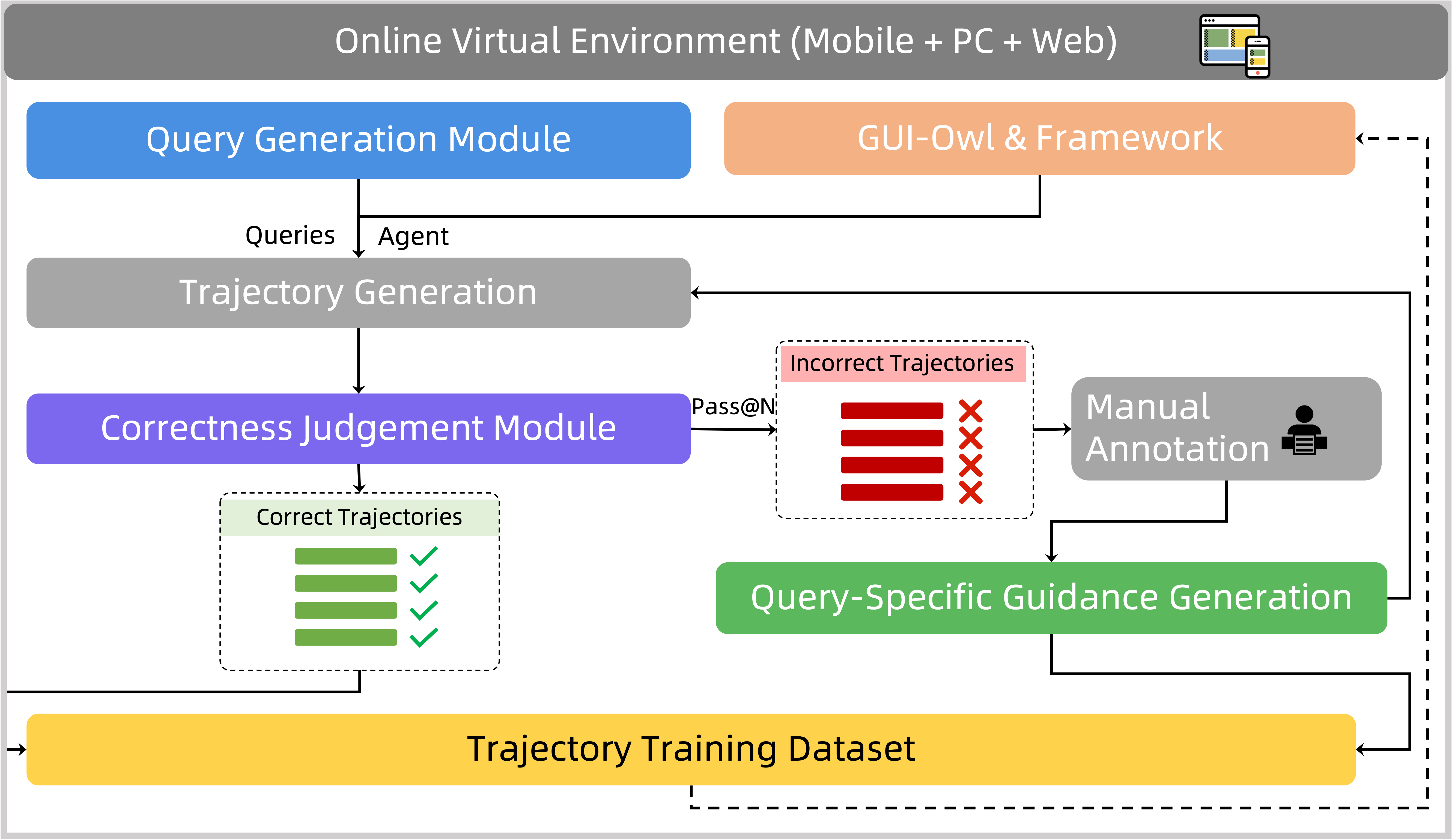

- It introduces a self-evolving trajectory data production pipeline and scalable reinforcement learning to improve performance across diverse benchmarks.

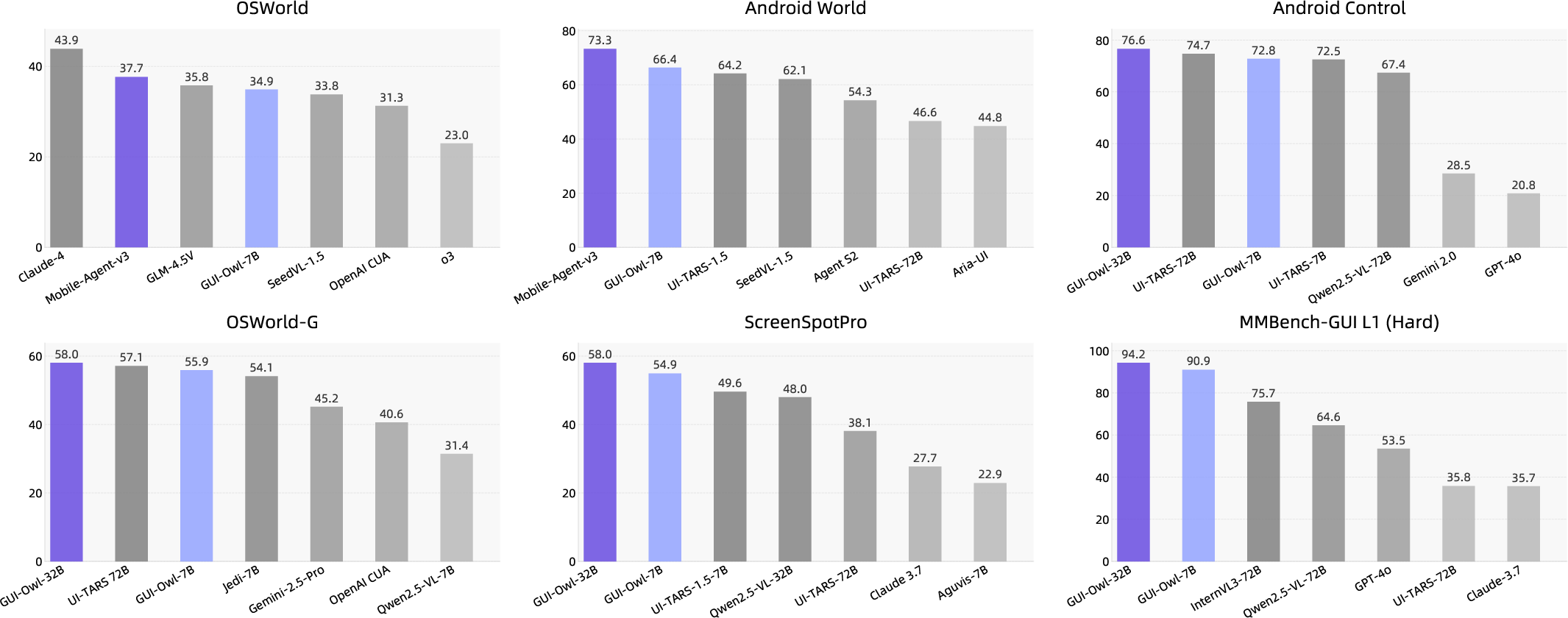

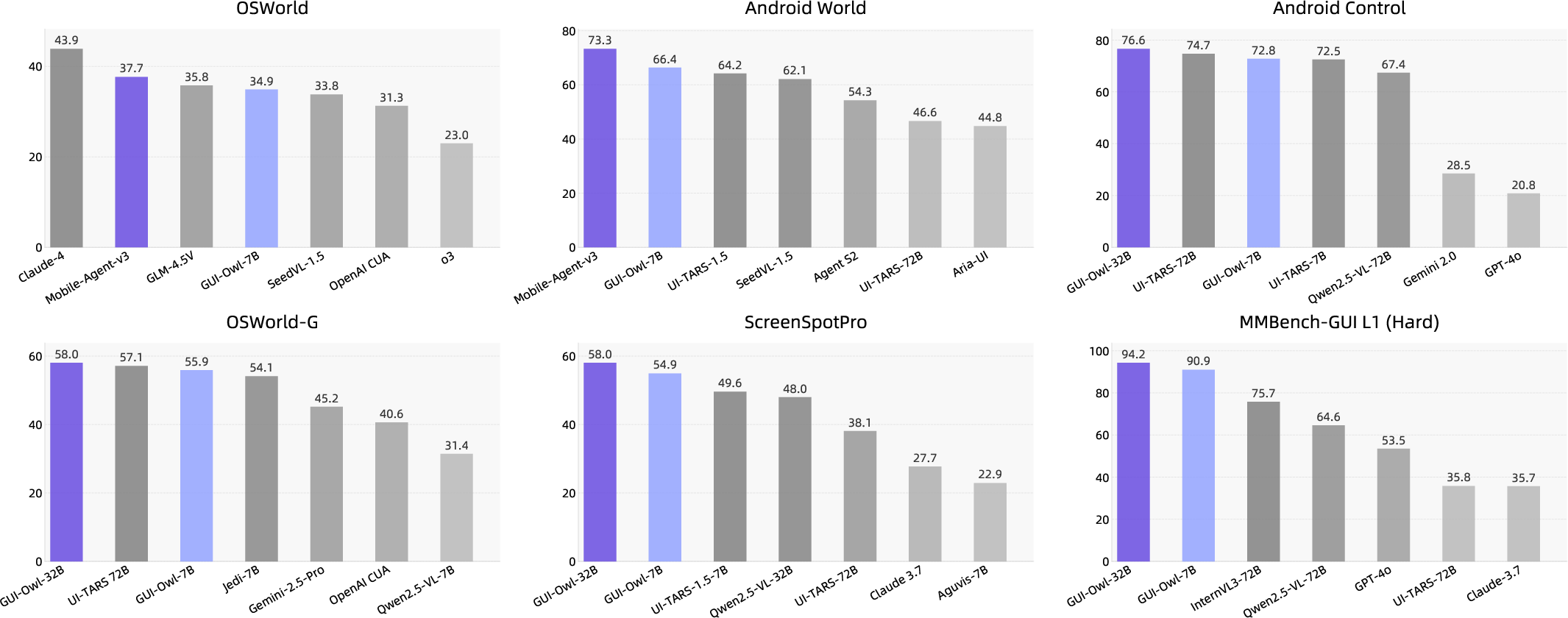

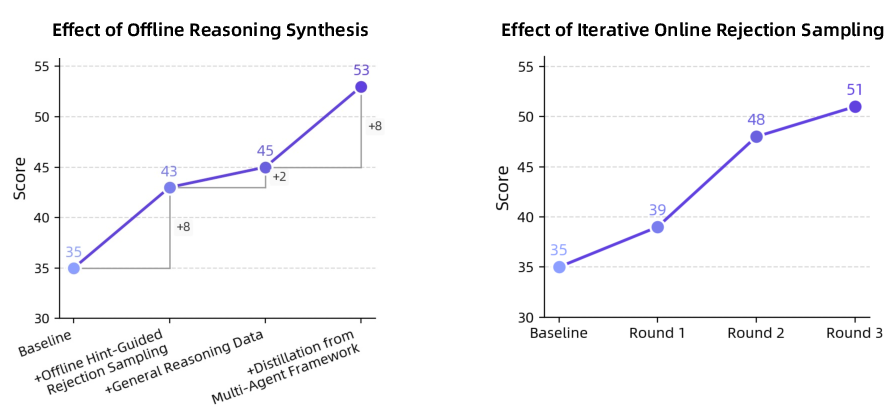

- Empirical results demonstrate state-of-the-art scores on both desktop and mobile environments, validating the effectiveness of the GUI-Owl model.

Mobile-Agent-v3: Foundational Agents for GUI Automation

Overview and Motivation

Mobile-Agent-v3 introduces a comprehensive agentic framework for GUI automation, centered on the GUI-Owl model. The work addresses three limitations of prior approaches: limited generalization to unseen tasks, poor adaptability to dynamic environments, and insufficient integration with multi-agent frameworks. GUI-Owl is designed as a native end-to-end multimodal agent that unifies perception, grounding, reasoning, planning, and action execution within a single policy network. The system is evaluated on ten benchmarks spanning desktop and mobile environments, covering grounding, question answering, planning, decision-making, and procedural knowledge, and shows robust performance across them.

Figure 1: Performance overview on mainstream GUI-automation benchmarks.

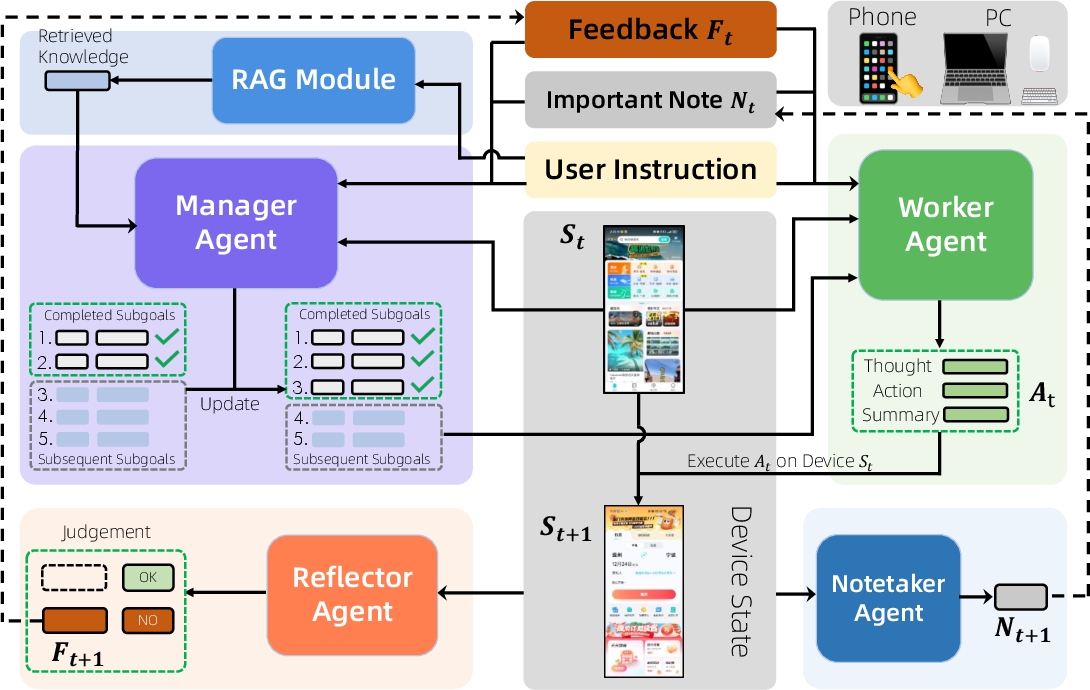

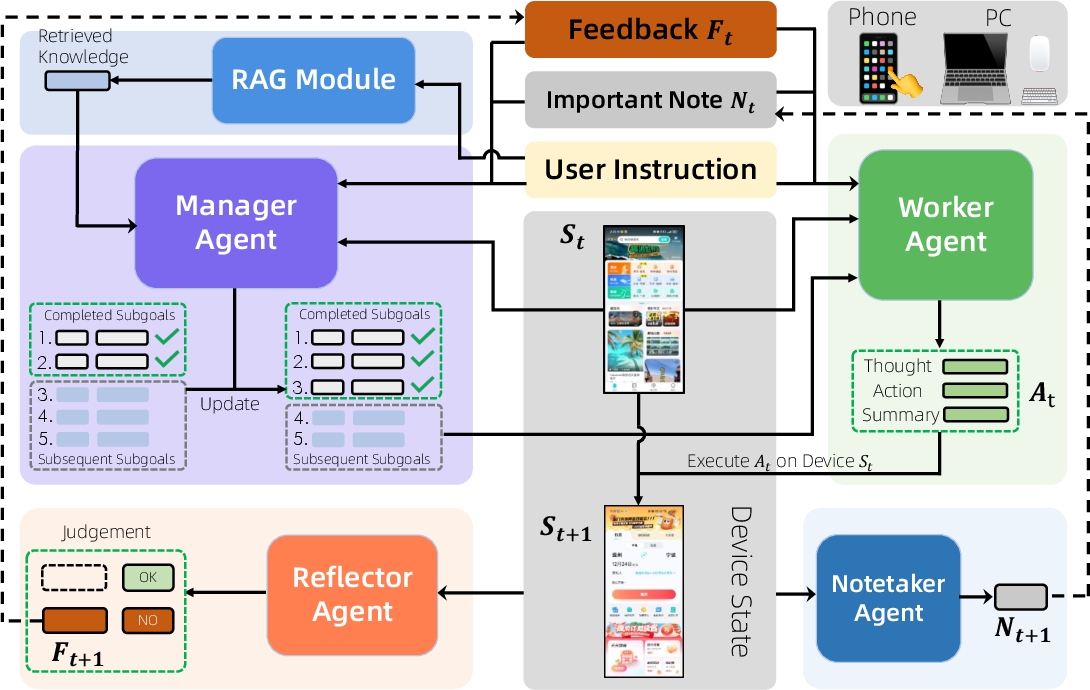

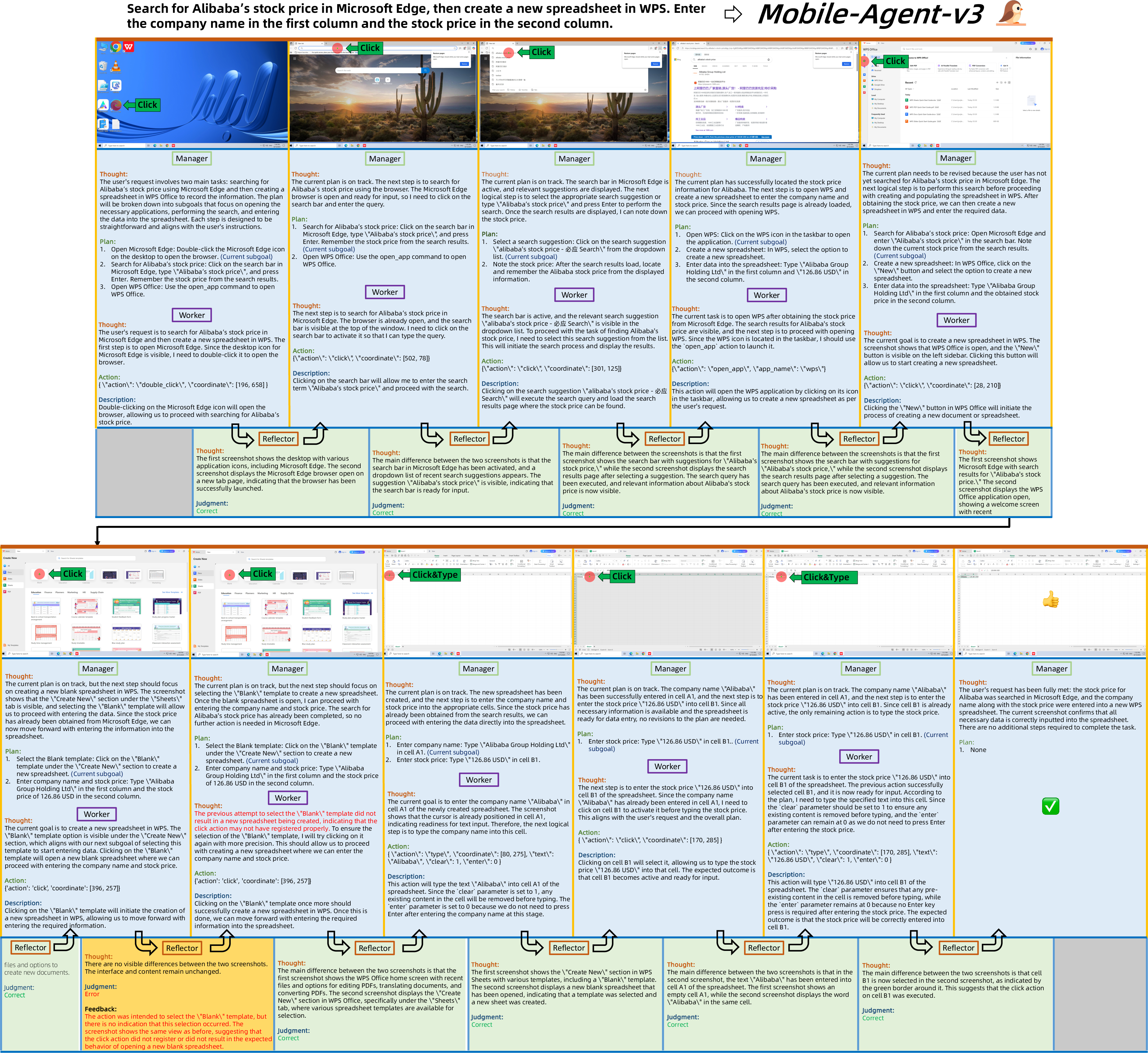

System Architecture and Multi-Agent Framework

Mobile-Agent-v3 is built upon GUI-Owl and extends its capabilities through a modular multi-agent architecture. The framework supports both desktop and mobile platforms, leveraging a cloud-based virtual environment infrastructure for scalable data collection and training. The agentic system comprises specialized modules:

- Manager Agent: Strategic planner that decomposes high-level instructions into subgoals and dynamically updates the plan based on feedback.

- Worker Agent: Executes actionable subgoals, interacts with the GUI, and records reasoning and intent.

- Reflector Agent: Evaluates outcomes, provides diagnostic feedback, and enables self-correction.

- Notetaker Agent: Maintains persistent contextual memory, storing critical information for long-horizon tasks.

- RAG Module: Retrieves external world knowledge to inform planning and execution.

Figure 2: Overview of Mobile-Agent-v3, illustrating multi-platform support and core capabilities.

Figure 3: Mobile-Agent-v3 architecture, detailing the six core modules and their interactions.

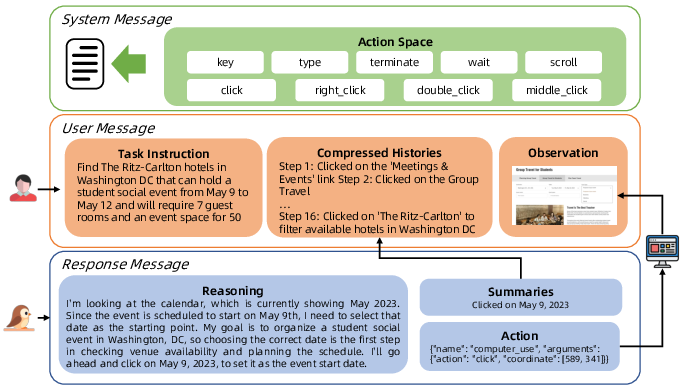

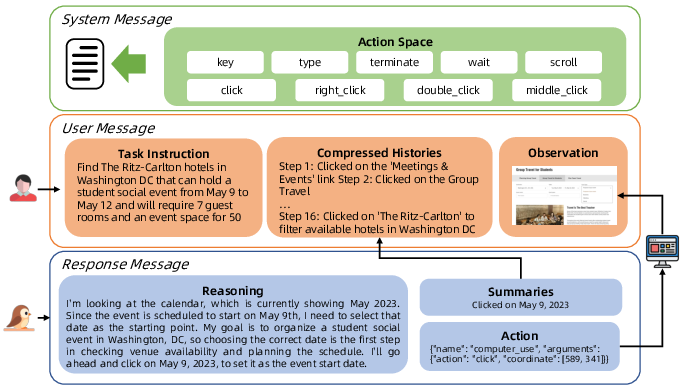

End-to-End GUI Interaction and Reasoning

GUI-Owl models GUI interaction as a multi-turn decision process, where the agent selects actions based on current observations and historical context. The interaction flow is structured to maximize reasoning transparency and adaptability:

- System Message: Defines available action space.

- User Message: Contains task instructions, compressed histories, and current observations.

- Response Message: Includes agent's reasoning, action summaries, and final action output.

The agent must output explicit reasoning before executing each action. Its conclusions are then summarized and stored as history, which keeps the context within length limits.
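The message structure above can be sketched as follows. This is a minimal illustration, not GUI-Owl's actual prompt format: the `Step` record, the tag names `<reasoning>`, `<summary>` and `<action>`, and the `max_history_images` parameter are all hypothetical. It shows the idea of carrying earlier steps forward only as short summaries while attaching a limited number of recent screenshots.

```python
import re
from dataclasses import dataclass
from typing import Dict, List, Optional

@dataclass
class Step:
    screenshot: str   # hypothetical identifier of the screenshot observed at this step
    summary: str      # the conclusion the agent wrote for this step

def build_messages(action_space: List[str], task: str, history: List[Step],
                   screenshot: str, max_history_images: int = 1) -> List[dict]:
    """Assemble the system and user messages for one decision step (assumed layout)."""
    system = {"role": "system", "content": "Available actions: " + ", ".join(action_space)}
    # Past steps are carried as short text summaries instead of full reasoning traces.
    compressed = [f"Step {i + 1}: {s.summary}" for i, s in enumerate(history)]
    # Only the most recent `max_history_images` screenshots are attached as images.
    recent = [s.screenshot for s in history[-max_history_images:]] if max_history_images > 0 else []
    user = {"role": "user", "content": {"instruction": task, "history": compressed,
                                        "history_images": recent, "observation": screenshot}}
    return [system, user]

def parse_response(text: str) -> Dict[str, Optional[str]]:
    """Split a response into reasoning, summary, and action (hypothetical tag names)."""
    fields: Dict[str, Optional[str]] = {}
    for tag in ("reasoning", "summary", "action"):
        match = re.search(rf"<{tag}>(.*?)</{tag}>", text, re.DOTALL)
        fields[tag] = match.group(1).strip() if match else None
    return fields

# Usage: one decision step, after which only the summary is carried forward.
history = [Step("s1.png", "Opened the home screen")]
msgs = build_messages(["click", "type", "swipe"], "Open Wi-Fi settings", history, "s2.png")
reply = parse_response("<reasoning>The Settings icon is visible.</reasoning>"
                       "<summary>Tapped Settings</summary><action>click(120, 48)</action>")
history.append(Step("s2.png", reply["summary"]))
```

Dropping the full reasoning text from later turns is one simple way to bound context growth; how many screenshots to keep is a tunable trade-off between context length and visual memory.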

Figure 4: Illustration of the interaction flow of GUI-Owl, showing the structured message exchange.

Self-Evolving Trajectory Data Production

A key innovation is the self-evolving trajectory data production pipeline, which automates the generation and validation of high-quality interaction data. This pipeline leverages GUI-Owl's own capabilities to roll out trajectories, assess correctness, and iteratively improve the model. The process includes:

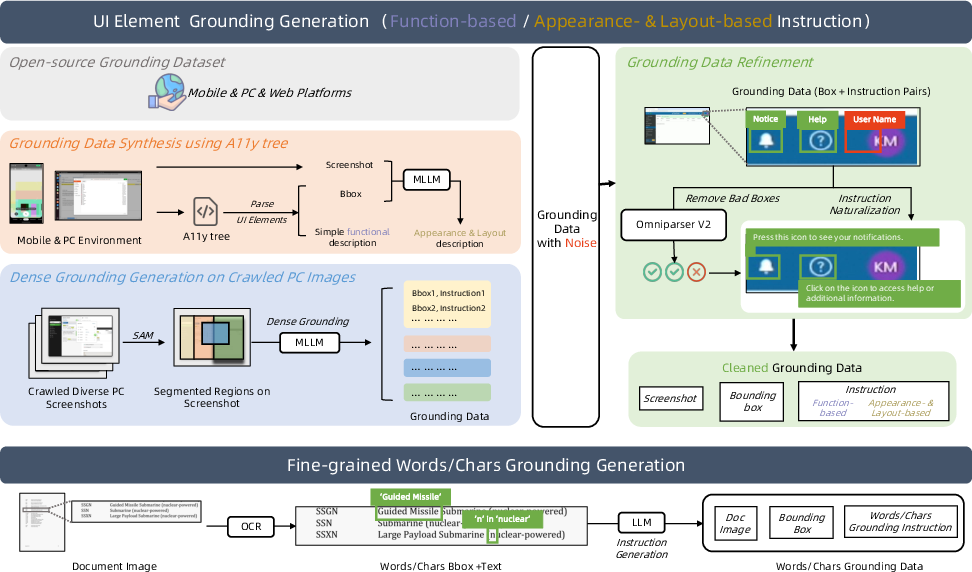
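A minimal sketch of the roll-out, verify, retrain loop described above, assuming the environment, the correctness judge, and the training step are supplied as plain callables. The names `rollout`, `judge`, and `finetune` are hypothetical, and the toy "model" is just a skill counter; the real pipeline's judging and filtering are considerably more elaborate.

```python
from typing import Callable, List, Tuple

Trajectory = List[str]             # hypothetical: a trajectory as a list of action strings
Example = Tuple[str, Trajectory]   # (task, verified trajectory)

def self_evolve(tasks: List[str],
                rollout: Callable[[str], Trajectory],
                judge: Callable[[str, Trajectory], bool],
                finetune: Callable[[List[Example]], None],
                rounds: int = 3) -> List[Example]:
    """Repeat roll out -> verify -> retrain; `rollout` always uses the latest model."""
    dataset: List[Example] = []
    for _ in range(rounds):
        candidates = [(task, rollout(task)) for task in tasks]               # 1. roll out
        verified = [(t, traj) for t, traj in candidates if judge(t, traj)]   # 2. keep correct ones
        dataset.extend(verified)
        finetune(dataset)                                                     # 3. update the model
    return dataset

def demo() -> Tuple[int, int]:
    """Toy setup: the 'model' is a skill level that grows by one per fine-tuning round."""
    difficulty = {"open_app": 0, "send_email": 1, "book_flight": 2}
    model = {"skill": 0}

    def rollout(task: str) -> Trajectory:
        # Succeeds (ends with "done") only if the skill covers the task's difficulty.
        return ["tap", "done"] if model["skill"] >= difficulty[task] else ["tap"]

    def judge(task: str, traj: Trajectory) -> bool:
        return traj[-1] == "done"

    def finetune(data: List[Example]) -> None:
        model["skill"] += 1

    data = self_evolve(list(difficulty), rollout, judge, finetune, rounds=3)
    return len(data), model["skill"]

print(demo())  # (6, 3): 1 + 2 + 3 verified trajectories over three rounds
```

The key property illustrated is the feedback loop: each round's verified data improves the model, which in turn solves harder tasks in the next round.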

Diverse Data Synthesis for Foundational Capabilities

The framework constructs specialized datasets for grounding, planning, and action semantics:

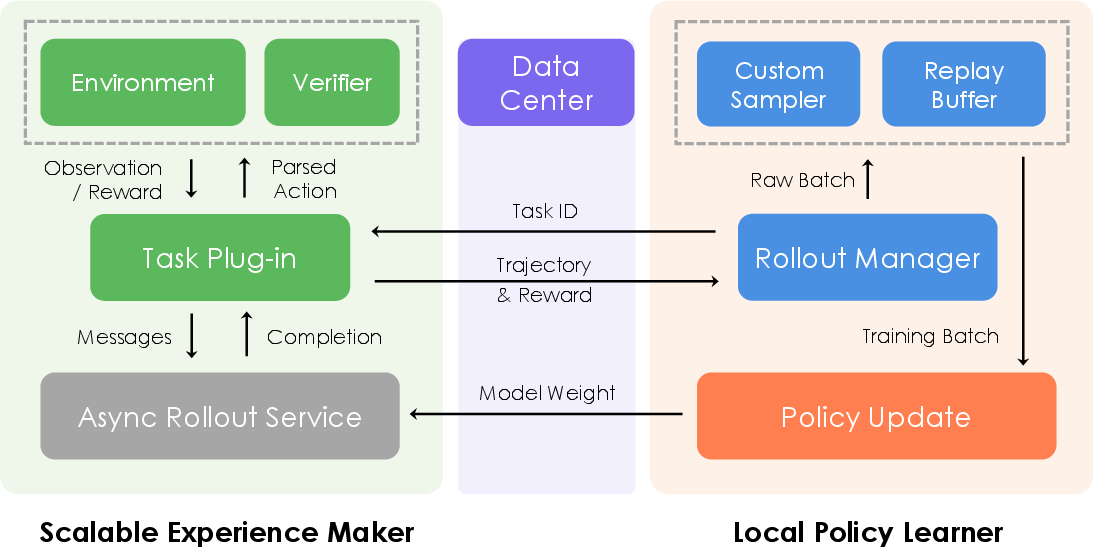

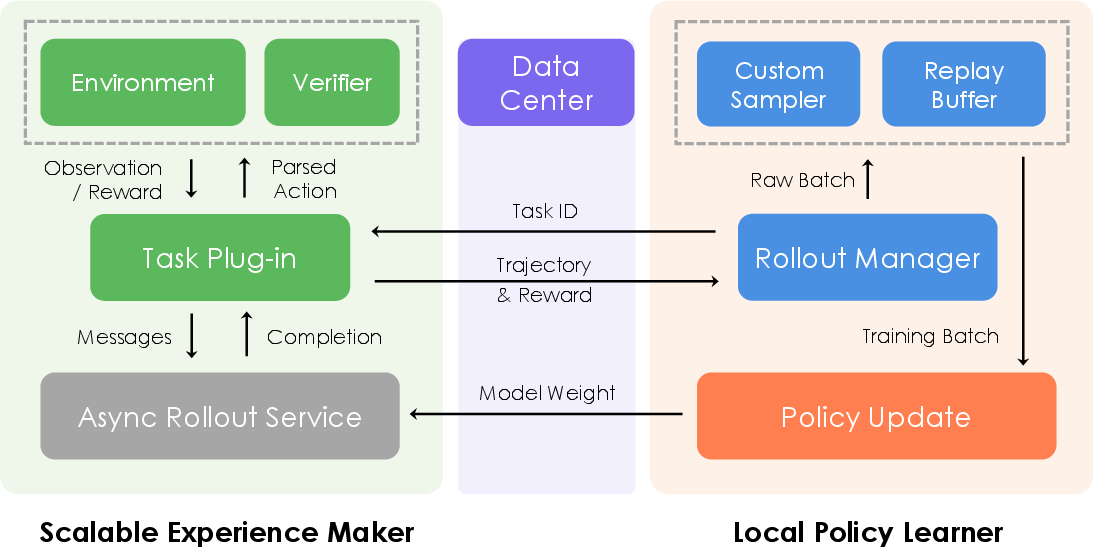

Scalable Reinforcement Learning Infrastructure

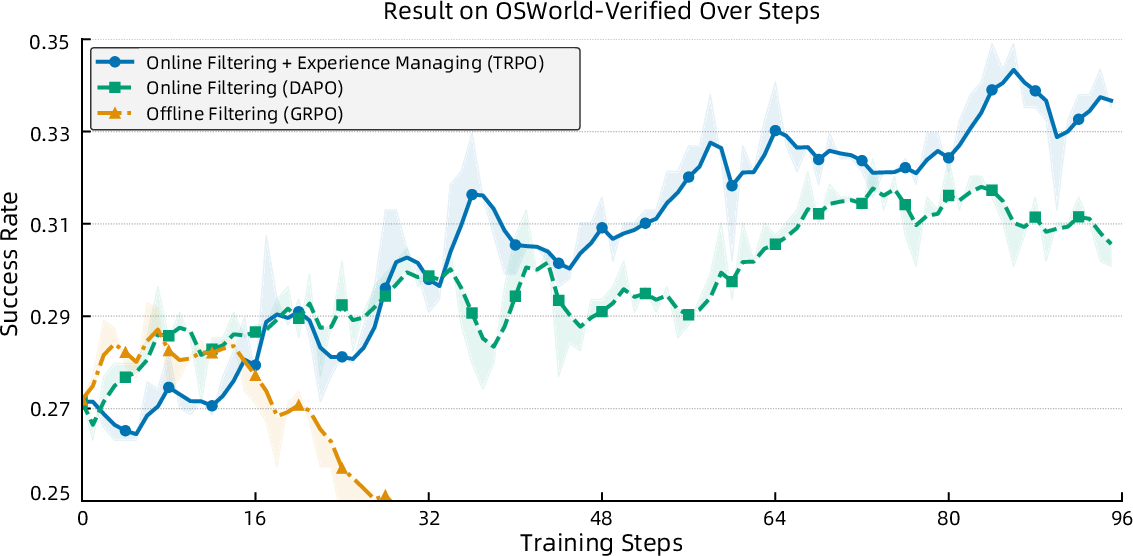

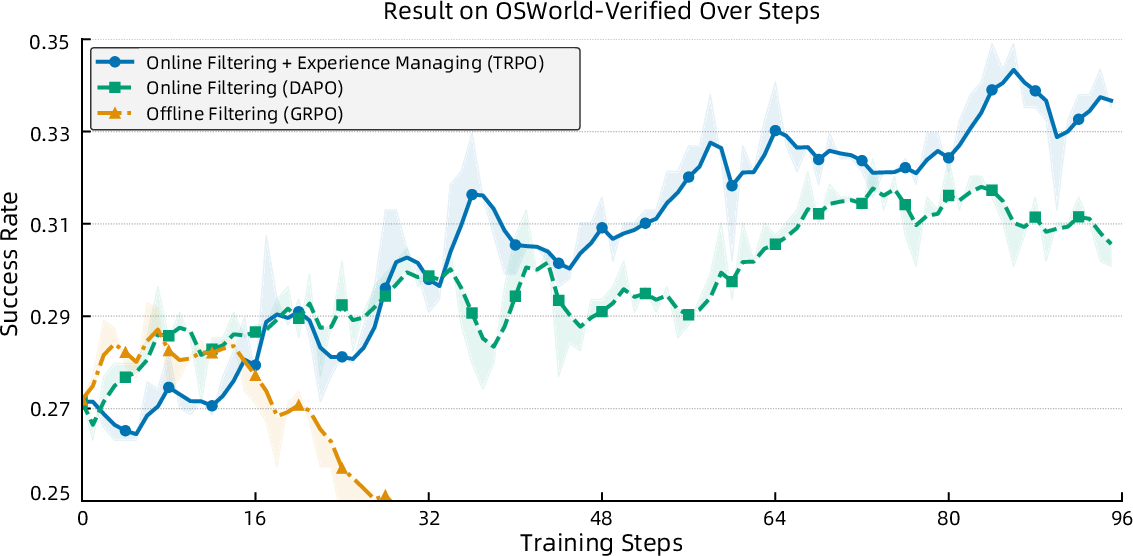

Mobile-Agent-v3 employs a scalable RL infrastructure that supports asynchronous training and decouples rollout from policy updates. The RL framework unifies single-turn reasoning and multi-turn agentic training, enabling high-throughput experience generation and policy optimization. It also introduces Trajectory-aware Relative Policy Optimization (TRPO), which addresses sparse, delayed rewards in long-horizon GUI tasks by computing a normalized advantage for each whole trajectory and assigning that advantage to every step of the trajectory.
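The trajectory-level advantage assignment can be sketched as below. This sketch assumes GRPO-style mean/std normalization over a group of rollouts of the same task and a PPO-style clipped objective; both are assumptions for illustration, and `trajectory_advantages` and `clipped_policy_loss` are hypothetical helpers, not the paper's code.

```python
import math
from statistics import fmean, pstdev
from typing import List

def trajectory_advantages(rewards: List[float], step_counts: List[int],
                          eps: float = 1e-6) -> List[List[float]]:
    """Normalize one scalar reward per trajectory, then give every step that value.

    Assumption: normalization is over a group of rollouts of the same task;
    the paper's exact statistics may differ.
    """
    mu, sigma = fmean(rewards), pstdev(rewards)
    advantages = [(r - mu) / (sigma + eps) for r in rewards]
    # Broadcast each trajectory-level advantage to all of that trajectory's steps.
    return [[a] * n for a, n in zip(advantages, step_counts)]

def clipped_policy_loss(logp_new: List[List[float]], logp_old: List[List[float]],
                        step_advantages: List[List[float]], clip: float = 0.2) -> float:
    """PPO-style clipped surrogate, averaged over all steps (an assumed objective)."""
    terms = []
    for new_traj, old_traj, adv_traj in zip(logp_new, logp_old, step_advantages):
        for new, old, adv in zip(new_traj, old_traj, adv_traj):
            ratio = math.exp(new - old)
            clipped = min(max(ratio, 1 - clip), 1 + clip)
            terms.append(min(ratio * adv, clipped * adv))
    return -sum(terms) / len(terms)

# Four rollouts of one task: binary success reward, different lengths.
adv = trajectory_advantages([1.0, 0.0, 0.0, 1.0], [3, 2, 4, 1])
print([round(traj[0], 3) for traj in adv])  # [1.0, -1.0, -1.0, 1.0]
```

With binary success rewards, every step of a successful rollout receives the same positive advantage regardless of trajectory length. If all rollouts in a group receive the same reward, every advantage is zero and the group contributes no gradient.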

Figure 7: Overview of the scalable RL infrastructure, highlighting parallelism and unified interfaces.

Training Paradigm and Data Management

GUI-Owl is initialized from Qwen2.5-VL and trained in three stages:

- Pre-training: Training on a large-scale corpus for UI understanding and reasoning.

- Iterative Tuning: Real-world deployment, trajectory cleaning, and offline reasoning data synthesis.

- Reinforcement Learning: Asynchronous RL for direct environment interaction, focusing on execution consistency and success rate.

Figure 8: Training dynamics of GUI-Owl-7B on OSWorld-Verified, showing the impact of different data filtering and experience management strategies.

Empirical Results and Analysis

GUI-Owl-7B and GUI-Owl-32B achieve state-of-the-art results across grounding, comprehensive GUI understanding, and end-to-end agentic benchmarks. Notably:

- AndroidWorld: GUI-Owl-7B scores 66.4, Mobile-Agent-v3 reaches 73.3.

- OSWorld: GUI-Owl-7B scores 34.9 (RL-tuned), Mobile-Agent-v3 achieves 37.7.

- MMBench-GUI-L2: GUI-Owl-7B scores 80.49, GUI-Owl-32B reaches 82.97, outperforming proprietary models including GPT-4o and Claude 3.7.

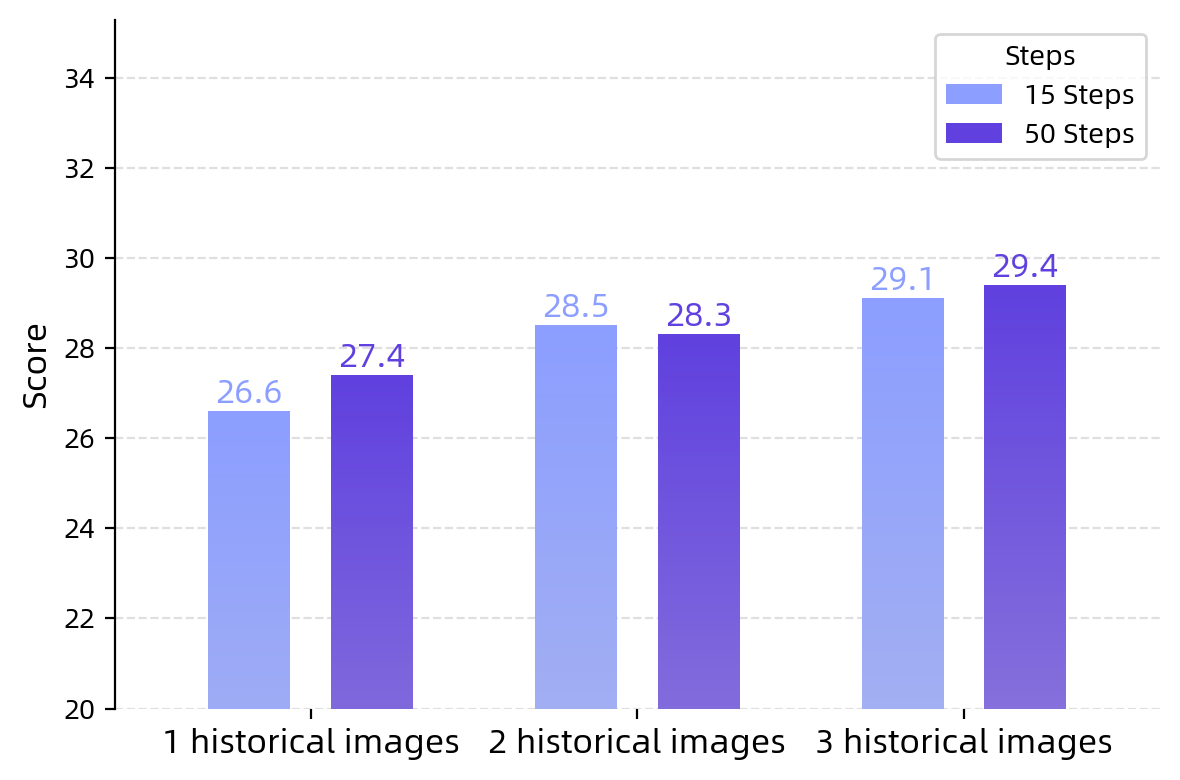

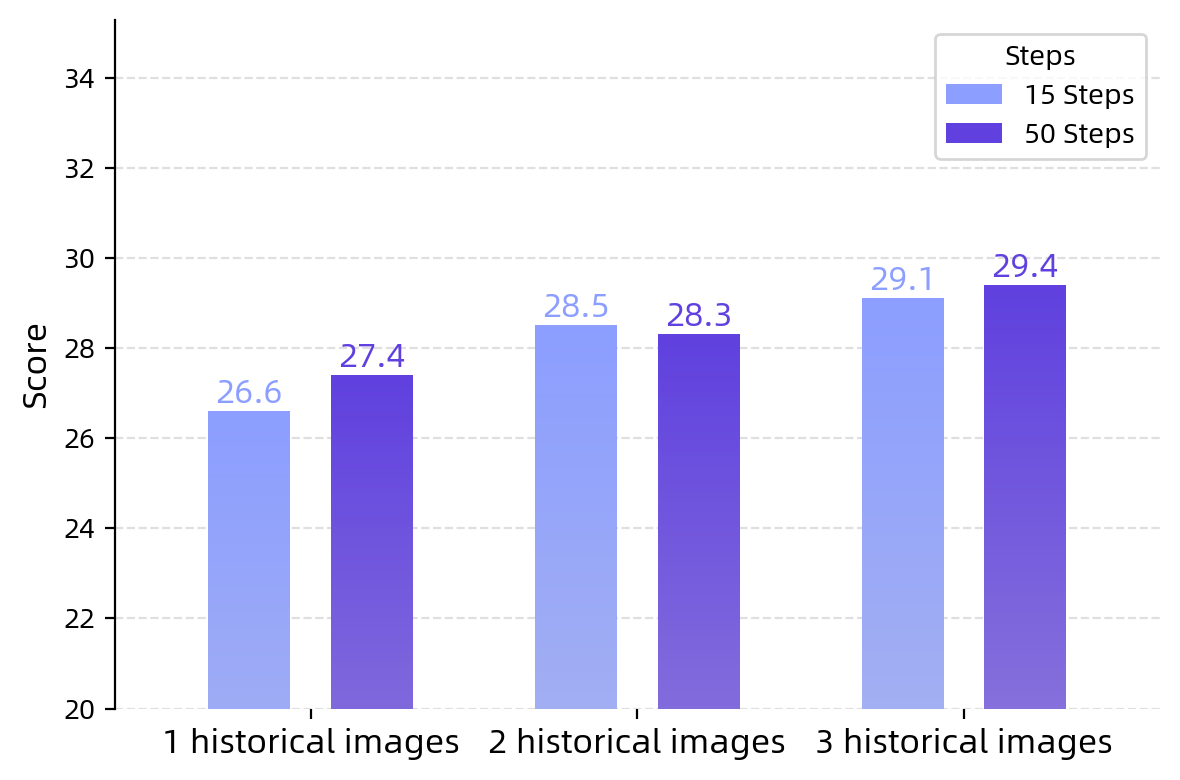

Performance scales with increased historical context and interaction-step budgets, indicating strong long-horizon reasoning and correction capabilities.

Figure 9: Performance of GUI-Owl-7B on OSWorld-Verified with varying historical images and step budgets.

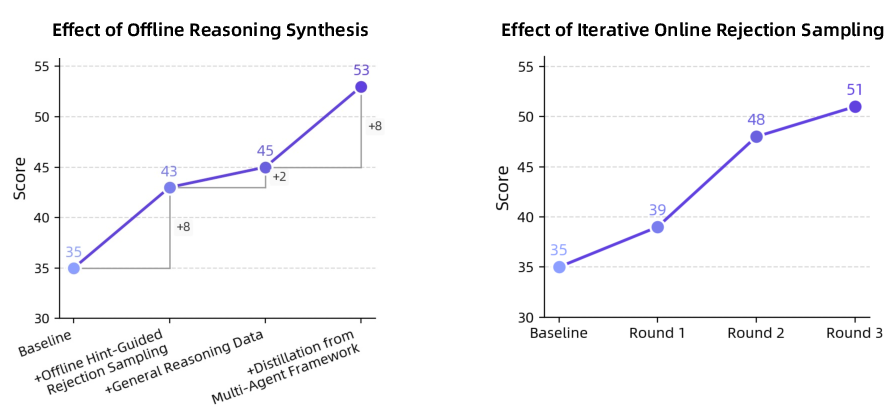

Figure 10: Effect of reasoning data synthesis on AndroidWorld, demonstrating incremental gains from diverse reasoning sources.

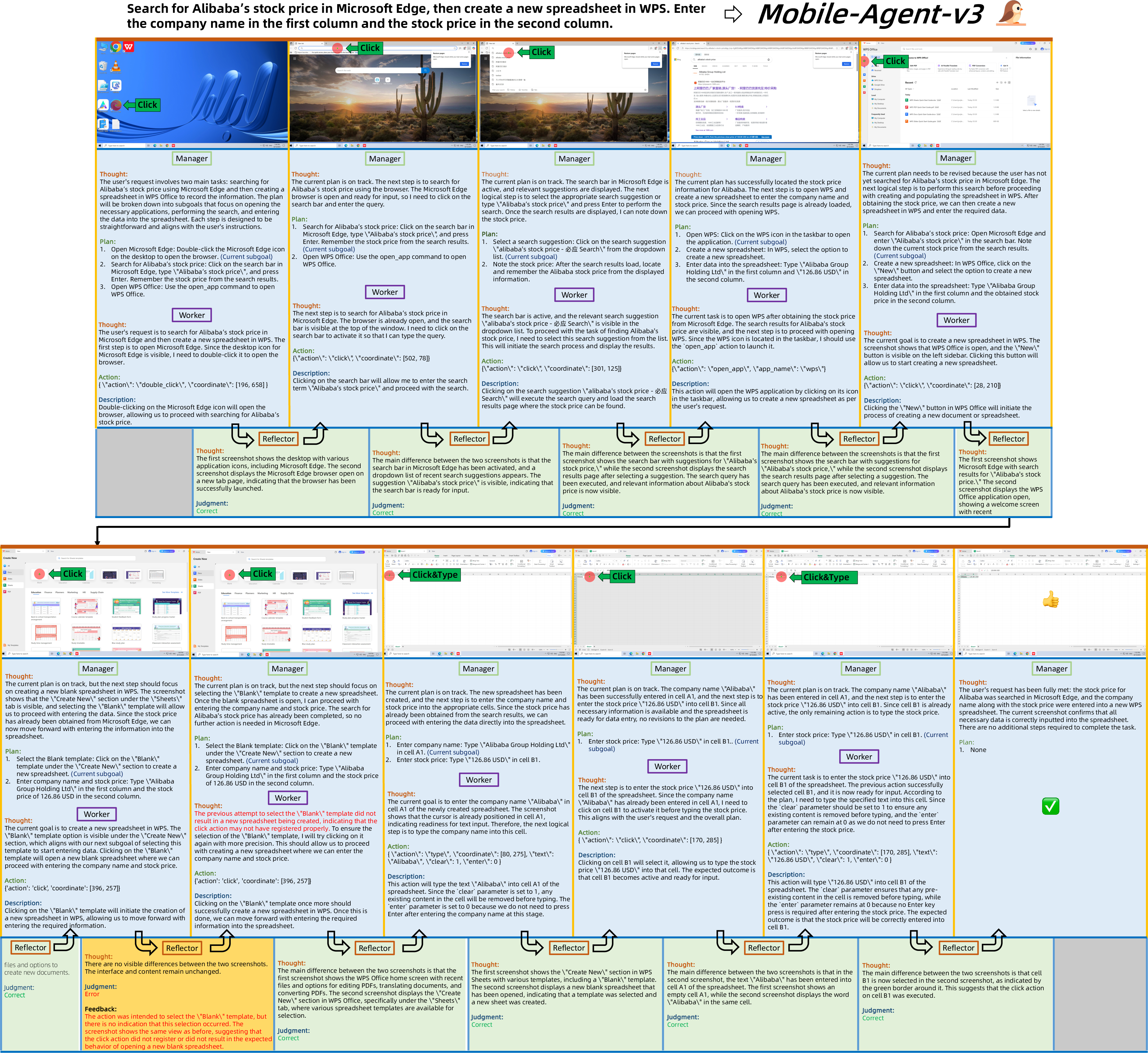

Agentic Workflow and Case Study

The integrated workflow of Mobile-Agent-v3 is formalized as a cyclical process, with agents coordinating to decompose tasks, execute actions, reflect on outcomes, and persist critical information. The system demonstrates robust self-correction and adaptability in complex desktop and mobile scenarios.
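The cycle can be sketched as a single loop over the Manager, Worker, Reflector, Notetaker, and RAG roles. This is a toy illustration under stated assumptions: every function signature is hypothetical, and the stub environment injects one failure (a popup) so that the Reflector's feedback triggers a corrected retry.

```python
from typing import Callable, List, Optional, Tuple

def run_episode(task: str,
                manager: Callable, worker: Callable, reflector: Callable,
                notetaker: Callable, retrieve: Callable, execute: Callable,
                max_steps: int = 20) -> Tuple[bool, int, List[str]]:
    """Hypothetical Manager -> Worker -> Reflector -> Notetaker cycle."""
    knowledge = retrieve(task)                      # RAG: external knowledge for planning
    plan = manager(task, knowledge, None, True)     # Manager: initial list of subgoals
    notes: List[str] = []                           # Notetaker: persistent memory
    feedback = ""                                   # Reflector's diagnosis of the last step
    for step in range(max_steps):
        if not plan:                                # every subgoal finished
            return True, step, notes
        subgoal = plan[0]
        action = worker(subgoal, notes, feedback)   # Worker: turn the subgoal into a GUI action
        observation = execute(action)               # environment applies the action
        ok, feedback = reflector(subgoal, action, observation)
        if ok:
            notes.extend(notetaker(observation))    # keep information later steps may need
        plan = manager(task, knowledge, plan, ok)   # Manager: advance or revise the plan
    return not plan, max_steps, notes

# ---- Toy stubs (all hypothetical) ----
def manager(task: str, knowledge: List[str], plan: Optional[List[str]], ok: bool) -> List[str]:
    if plan is None:
        return ["open weather app", "read temperature", "send message"]
    return plan[1:] if ok else plan                 # drop the finished subgoal, or retry it

def worker(subgoal: str, notes: List[str], feedback: str) -> str:
    if feedback == "popup blocked the screen":
        return "close popup then " + subgoal        # self-correction using the feedback
    return subgoal

def execute(action: str) -> str:
    if action == "read temperature":
        return "popup"                              # first attempt is blocked by a popup
    if action.endswith("read temperature"):
        return "temperature: 21C"
    return "ok"

def reflector(subgoal: str, action: str, observation: str) -> Tuple[bool, str]:
    if observation == "popup":
        return False, "popup blocked the screen"
    return True, ""

def notetaker(observation: str) -> List[str]:
    return [observation] if observation.startswith("temperature") else []

def retrieve(task: str) -> List[str]:
    return ["The weather app is on the home screen."]

print(run_episode("Send today's temperature to Alice", manager, worker,
                  reflector, notetaker, retrieve, execute))
# (True, 4, ['temperature: 21C'])
```

Keeping the roles as separate callables mirrors the modular design: any one of them (for example, the Reflector) can be swapped or strengthened without changing the loop itself.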

Figure 11: A case of a complete Mobile-Agent-v3 operation process on a desktop platform, highlighting successful reflection and correction.

Implications and Future Directions

Mobile-Agent-v3 establishes a new paradigm for GUI automation, combining self-evolving data pipelines, modular multi-agent architectures, and scalable RL. The demonstrated generalization across platforms and benchmarks suggests strong potential for real-world deployment in productivity, accessibility, and autonomous digital assistants. The explicit reasoning and reflection mechanisms provide a foundation for further research into explainable agentic systems and robust long-horizon planning.

The trajectory-level RL and experience management strategies highlight the importance of data efficiency and dynamic adaptation in agentic training. Future work may explore more granular credit assignment, hierarchical planning, and integration with external tool-use and retrieval systems.

Conclusion

Mobile-Agent-v3 and GUI-Owl represent a significant advancement in foundational agents for GUI automation, achieving robust cross-platform performance and demonstrating effective integration of perception, reasoning, planning, and action. The modular multi-agent framework, self-evolving data production, and scalable RL infrastructure collectively enable state-of-the-art results and provide a blueprint for future agentic systems in dynamic digital environments.