- The paper presents ComputerRL, a framework combining API-GUI integration, multi-stage RL, and Entropulse to significantly enhance desktop automation.

- It employs a distributed training infrastructure and a step-level extension of GRPO. RL yields a 64% relative performance gain, and API-GUI actions raise average success rate by 134% over GUI-only actions.

- Empirical results on OSWorld show a state-of-the-art success rate (48.1%), effective error recovery, scalable training, and applicability to broader device-control tasks.

ComputerRL: Scaling End-to-End Online Reinforcement Learning for Computer Use Agents

Introduction and Motivation

The paper presents ComputerRL, a comprehensive framework for training autonomous agents to operate complex desktop environments using end-to-end online reinforcement learning (RL). The motivation stems from two limitations of existing GUI agents: they are constrained by interfaces designed for humans, and behavior cloning (BC) and RL approaches scale poorly to large, real-world desktop automation. ComputerRL addresses these challenges with a hybrid API-GUI interaction paradigm, a scalable distributed RL infrastructure, and a novel training strategy, Entropulse, that mitigates entropy collapse and sustains policy improvement.

Framework Architecture and API-GUI Paradigm

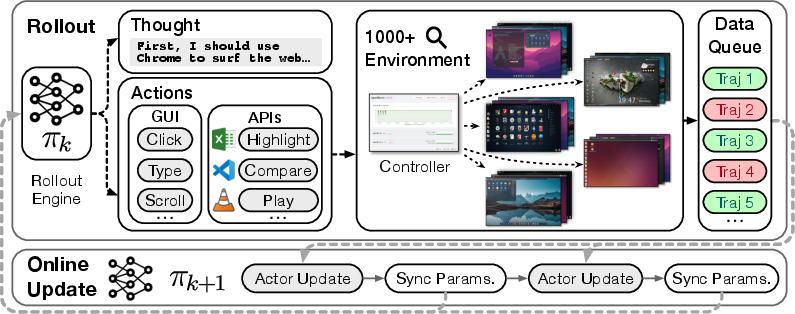

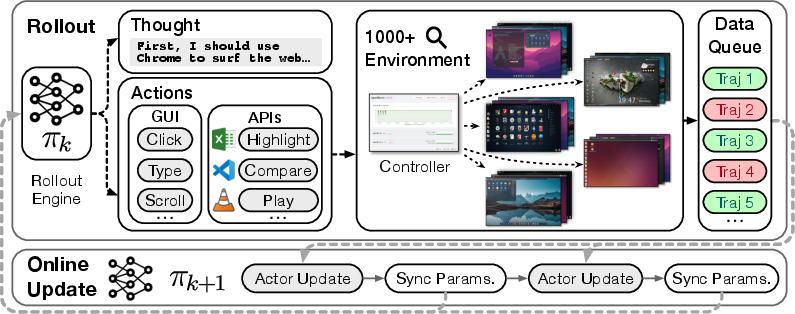

ComputerRL's architecture is built around three pillars: the API-GUI action space, a robust parallel desktop environment, and a fully asynchronous RL training framework.

The API-GUI paradigm unifies programmatic API calls with direct GUI actions, enabling agents to leverage the efficiency of APIs while retaining the flexibility of GUI manipulation. The framework automates API construction for desktop applications using LLMs, which analyze user task exemplars, generate interface definitions, implement APIs via application-specific libraries, and produce test cases for validation. This automation substantially reduces manual effort and increases the agent's operational versatility.

Figure 1: Overview of ComputerRL framework, integrating API-GUI actions and large-scale parallel desktop environments for efficient agent training.
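The two ideas in the API-GUI paragraph (one action space mixing API calls and GUI actions, and accepting a generated API only after its generated tests pass) can be illustrated with a minimal Python sketch. All names here (`ApiCall`, `GuiAction`, `HybridExecutor`, `calc_set_cell`) are hypothetical and not the paper's interface; the GUI path only records actions instead of driving a real screen.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple, Union


@dataclass
class ApiCall:
    """Programmatic action: invoke a registered application API by name."""
    name: str
    kwargs: Dict[str, Any] = field(default_factory=dict)


@dataclass
class GuiAction:
    """Direct GUI action, e.g. a click at screen coordinates or typed text."""
    kind: str  # hypothetical kinds: "click" or "type"
    x: int = 0
    y: int = 0
    text: str = ""


Action = Union[ApiCall, GuiAction]
TestCase = Tuple[Dict[str, Any], Any]  # (kwargs, expected return value)


class HybridExecutor:
    """Routes each action to an application API or to a GUI backend."""

    def __init__(self) -> None:
        self.apis: Dict[str, Callable[..., Any]] = {}
        self.gui_log: List[Tuple[str, int, int, str]] = []  # stand-in for a GUI driver

    def register_api(self, name: str, fn: Callable[..., Any], tests: List[TestCase]) -> bool:
        """Register an API only if every generated test case passes."""
        for kwargs, expected in tests:
            try:
                if fn(**kwargs) != expected:
                    return False
            except Exception:
                return False
        self.apis[name] = fn
        return True

    def execute(self, action: Action) -> Any:
        if isinstance(action, ApiCall):
            if action.name not in self.apis:
                raise KeyError(f"unknown API: {action.name}")
            return self.apis[action.name](**action.kwargs)
        # GUI path: record the action instead of controlling a real screen.
        self.gui_log.append((action.kind, action.x, action.y, action.text))
        return "ok"


def calc_set_cell(sheet: Dict[str, Any], cell: str, value: Any) -> Any:
    """Hypothetical spreadsheet API: write a value into a cell and read it back."""
    sheet[cell] = value
    return sheet[cell]


executor = HybridExecutor()
accepted = executor.register_api(
    "calc_set_cell", calc_set_cell, tests=[({"sheet": {}, "cell": "A1", "value": 3}, 3)]
)
sheet: Dict[str, Any] = {}
result = executor.execute(ApiCall("calc_set_cell", {"sheet": sheet, "cell": "B2", "value": "total"}))
executor.execute(GuiAction("click", x=120, y=45))
```

The design choice illustrated is that the policy emits a single action type, so it can use an API when one exists and fall back to GUI manipulation otherwise.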

The environment is based on a refactored Ubuntu desktop, supporting thousands of concurrent virtual machines via containerization (qemu-in-docker) and distributed multi-node clustering (gRPC). This design overcomes resource bottlenecks and instability in prior platforms, enabling high-throughput sampling and robust agent-environment interaction.
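High-throughput sampling from many environments can be sketched with `asyncio`: a fixed pool of environments is shared by many tasks, and each environment is handed back to the pool when its episode ends. This is a single-process illustration under stated assumptions; `FakeDesktopEnv` is a hypothetical stand-in for a containerized VM, the reward is a random placeholder for the rule-based verifier, and the multi-node gRPC layer is not modeled.

```python
import asyncio
import random
from typing import List, Tuple

Rollout = Tuple[str, int, float]  # (task, env_id, reward)


class FakeDesktopEnv:
    """Stand-in for one containerized desktop VM (hypothetical)."""

    def __init__(self, env_id: int) -> None:
        self.env_id = env_id

    async def run_episode(self, task: str, max_steps: int = 5) -> Rollout:
        for _ in range(max_steps):
            await asyncio.sleep(0)  # a real step would await a remote VM here
        reward = 1.0 if random.random() < 0.5 else 0.0  # placeholder verifier result
        return task, self.env_id, reward


async def collect_rollouts(tasks: List[str], num_envs: int) -> List[Rollout]:
    """Run all tasks on a fixed pool of environments, reusing each one when it frees up."""
    free_envs: "asyncio.Queue[FakeDesktopEnv]" = asyncio.Queue()
    for i in range(num_envs):
        free_envs.put_nowait(FakeDesktopEnv(i))

    async def run(task: str) -> Rollout:
        env = await free_envs.get()  # wait until an environment is available
        try:
            return await env.run_episode(task)
        finally:
            free_envs.put_nowait(env)  # hand the environment back to the pool

    # gather returns results in task order even though episodes interleave
    return list(await asyncio.gather(*(run(t) for t in tasks)))


results = asyncio.run(collect_rollouts([f"task-{i}" for i in range(20)], num_envs=4))
```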

Training Methodology: Behavior Cloning, RL, and Entropulse

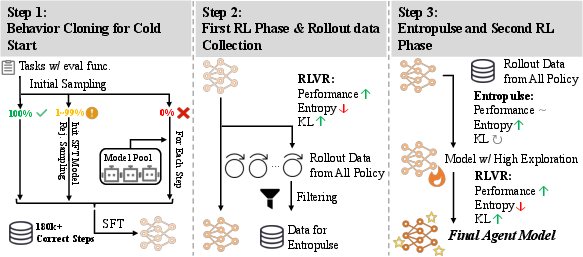

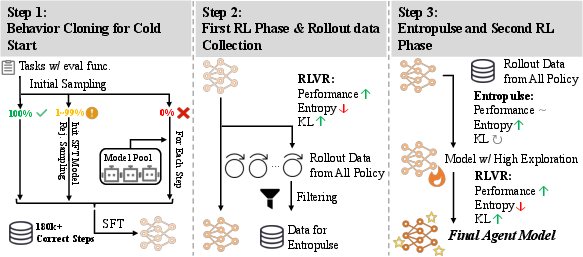

ComputerRL training proceeds in three stages:

- Behavior Cloning (BC) Cold-Start: The agent is initialized by imitating trajectories collected from multiple general LLMs, stratified by task difficulty and augmented for hard cases via model pooling. Only successful trajectories are retained for supervised fine-tuning, ensuring a diverse and high-quality base policy.

- Step-Level Group Relative Policy Optimization (GRPO): RL is performed using a step-level extension of GRPO, where each action in a trajectory is assigned a reward based on verifiable, rule-based task completion. Because rewards come from verifiable rules rather than a learned reward model, the learning signal is reliable and supports efficient policy optimization.

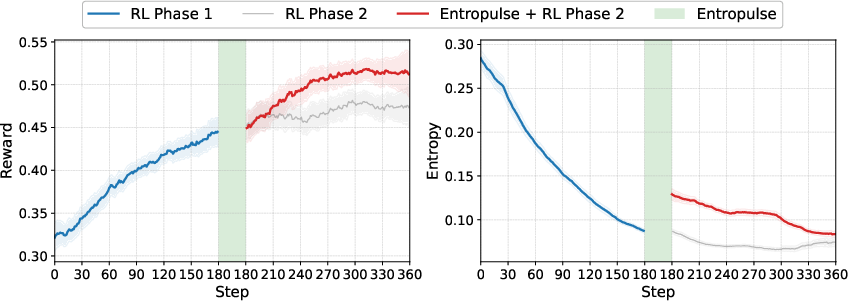

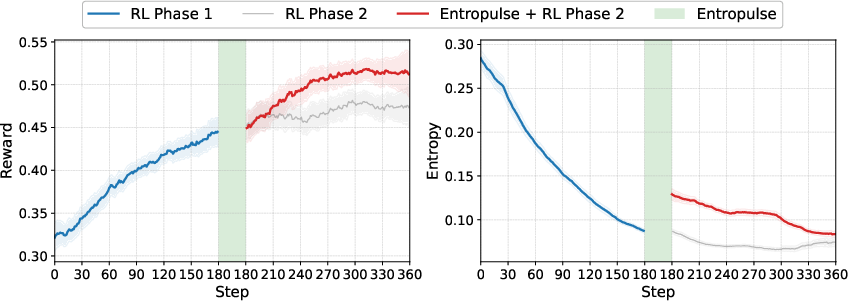

- Entropulse: To counteract entropy collapse and stagnation in extended RL training, Entropulse alternates RL with supervised fine-tuning (SFT) on successful rollouts. This restores policy entropy, enhances exploration, and enables further performance gains in subsequent RL phases.
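The BC cold-start filtering step can be sketched as follows, assuming task difficulty is estimated as the fraction of sampled trajectories (from several LLMs) that succeed. The class and function names and the difficulty cut-offs are hypothetical; the source does not specify them. Tasks flagged as hard would be the ones to re-sample with a wider model pool.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Trajectory:
    task_id: str
    model: str      # which LLM produced this rollout
    success: bool   # outcome of the rule-based verifier
    steps: List[str] = field(default_factory=list)


def success_rates(trajs: List[Trajectory]) -> Dict[str, float]:
    """Fraction of sampled trajectories that succeed, per task."""
    totals: Dict[str, int] = defaultdict(int)
    wins: Dict[str, int] = defaultdict(int)
    for t in trajs:
        totals[t.task_id] += 1
        wins[t.task_id] += int(t.success)
    return {task: wins[task] / totals[task] for task in totals}


def difficulty(rate: float) -> str:
    """Hypothetical cut-offs; the source does not specify them."""
    if rate >= 0.67:
        return "easy"
    return "medium" if rate >= 0.34 else "hard"


def build_bc_dataset(trajs: List[Trajectory]) -> Dict[str, List[Trajectory]]:
    """Keep only successful trajectories, grouped by the difficulty of their task."""
    rates = success_rates(trajs)
    dataset: Dict[str, List[Trajectory]] = defaultdict(list)
    for t in trajs:
        if t.success:
            dataset[difficulty(rates[t.task_id])].append(t)
    return dict(dataset)


def tasks_to_resample(trajs: List[Trajectory]) -> List[str]:
    """Hard tasks are candidates for extra rollouts from a wider model pool."""
    return sorted(task for task, r in success_rates(trajs).items() if difficulty(r) == "hard")
```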

Figure 2: ComputerRL training pipeline, illustrating BC initialization, RL with step-level GRPO, and Entropulse for entropy recovery.
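GRPO computes advantages by comparing rewards within a group of rollouts sampled for the same task, with no learned value function. The sketch below assumes, as a simplification, that each step inherits its trajectory's verifier reward and that normalization runs over all steps in the group; the function name and `eps` are illustrative, and the clipped policy-gradient loss that consumes these advantages is omitted.

```python
from statistics import mean, pstdev
from typing import List


def step_level_advantages(
    traj_rewards: List[float], traj_lengths: List[int], eps: float = 1e-6
) -> List[List[float]]:
    """Give every step its trajectory's reward, then normalize over all steps in the group."""
    step_rewards = [[r] * n for r, n in zip(traj_rewards, traj_lengths)]
    flat = [r for steps in step_rewards for r in steps]
    mu, sigma = mean(flat), pstdev(flat)
    # Steps from successful rollouts get positive advantages, failed ones negative;
    # if every rollout has the same reward, all advantages are zero.
    return [[(r - mu) / (sigma + eps) for r in steps] for steps in step_rewards]


# Two rollouts of one task: a 1-step success and a 3-step failure.
adv = step_level_advantages([1.0, 0.0], [1, 3])
```

One consequence of normalizing over steps rather than trajectories is that longer trajectories carry more weight in the group mean.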

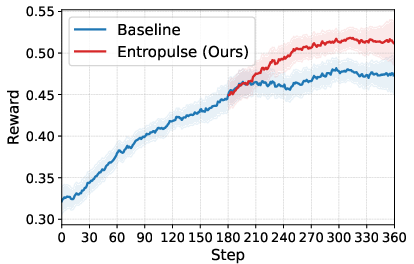

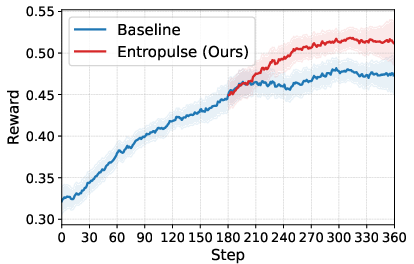

Figure 3: Training curves showing reward and entropy dynamics, with Entropulse restoring exploration and improving final performance.
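The quantities behind the entropy curves and the Entropulse alternation can be sketched in a few lines. This assumes a binary verifier reward and a fixed number of RL phases; the function names are hypothetical, and in practice entropy would be averaged over the tokens the policy generates.

```python
import math
from typing import List, Sequence, Tuple


def policy_entropy(probs: Sequence[float]) -> float:
    """Shannon entropy (in nats) of one next-token distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def entropulse_schedule(num_rl_phases: int) -> List[str]:
    """BC first, then RL phases separated by SFT 'pulses': BC, RL, SFT, RL, ..."""
    phases = ["BC"]
    for i in range(num_rl_phases):
        phases.append("RL")
        if i < num_rl_phases - 1:
            phases.append("SFT")  # SFT on successful rollouts from the preceding RL phase
    return phases


def select_sft_data(rollouts: List[Tuple[str, float]]) -> List[str]:
    """Keep successful rollouts (reward 1.0 under a binary verifier) for the SFT pulse."""
    return [traj for traj, reward in rollouts if reward == 1.0]
```

A collapsed policy concentrates probability on a few tokens, so `policy_entropy([0.97, 0.01, 0.01, 0.01])` is much lower than `policy_entropy([0.25] * 4)`; the SFT pulse is meant to push entropy back up before the next RL phase.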

Empirical Results and Analysis

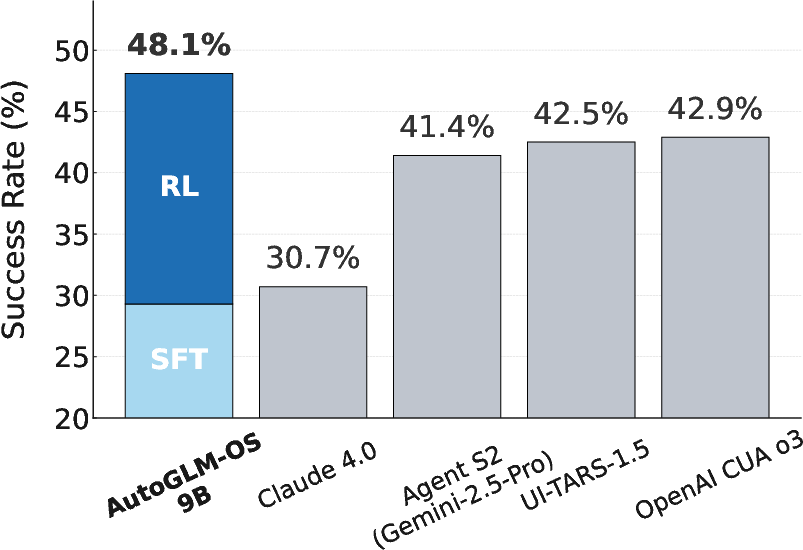

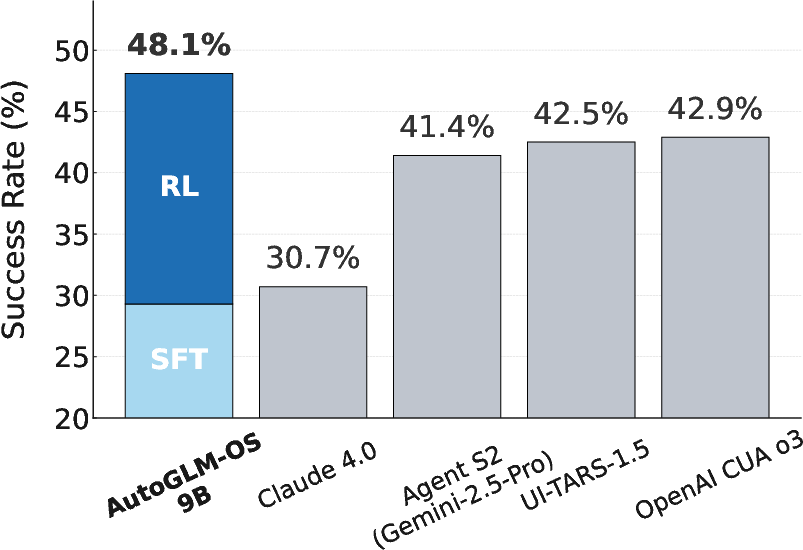

ComputerRL is evaluated on the OSWorld benchmark using open-source LLMs (GLM-4-9B-0414 and Qwen2.5-14B). The AutoGLM-OS-9B agent achieves a state-of-the-art success rate of 48.1%, representing a 64% performance gain from RL and outperforming leading proprietary and open models (e.g., OpenAI CUA o3 at 42.9%, UI-TARS-1.5 at 42.5%, Claude Sonnet 4 at 30.7%).

Figure 4: Success rates of agents on OSWorld, with AutoGLM-OS surpassing previous state-of-the-art models.
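The margins in the OSWorld comparison can be checked with plain arithmetic on the quoted success rates; `relative_gain` is a helper name introduced here for illustration.

```python
def relative_gain(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, in percent."""
    return (new - old) / old * 100.0


autoglm = 48.1
baselines = {"OpenAI CUA o3": 42.9, "UI-TARS-1.5": 42.5, "Claude Sonnet 4": 30.7}
for name, score in baselines.items():
    print(f"{name}: +{autoglm - score:.1f} points, {relative_gain(autoglm, score):.1f}% relative")
# OpenAI CUA o3: +5.2 points, 12.1% relative
# UI-TARS-1.5: +5.6 points, 13.2% relative
# Claude Sonnet 4: +17.4 points, 56.7% relative
```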

Ablation studies demonstrate the superiority of the API-GUI paradigm over GUI-only approaches, with a 134% improvement in average success rate and up to 350% gains in specific domains. Multi-stage training, particularly the Entropulse phase, is shown to be critical for maintaining exploration and achieving optimal performance.
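Assuming "improvement" here means relative improvement in success rate $S$ over the GUI-only baseline, the percentages convert to multipliers as follows:

```latex
\Delta_{\text{rel}} = \frac{S_{\text{API-GUI}} - S_{\text{GUI}}}{S_{\text{GUI}}}
\quad\Longrightarrow\quad
\frac{S_{\text{API-GUI}}}{S_{\text{GUI}}} = 1 + \Delta_{\text{rel}},
\qquad
\Delta_{\text{rel}} = 1.34 \Rightarrow 2.34\times,
\qquad
\Delta_{\text{rel}} = 3.50 \Rightarrow 4.5\times
```

Under this reading, API-GUI agents succeed about 2.34 times as often as GUI-only agents on average, and 4.5 times as often in the best-case domain.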

Qualitative Case Studies

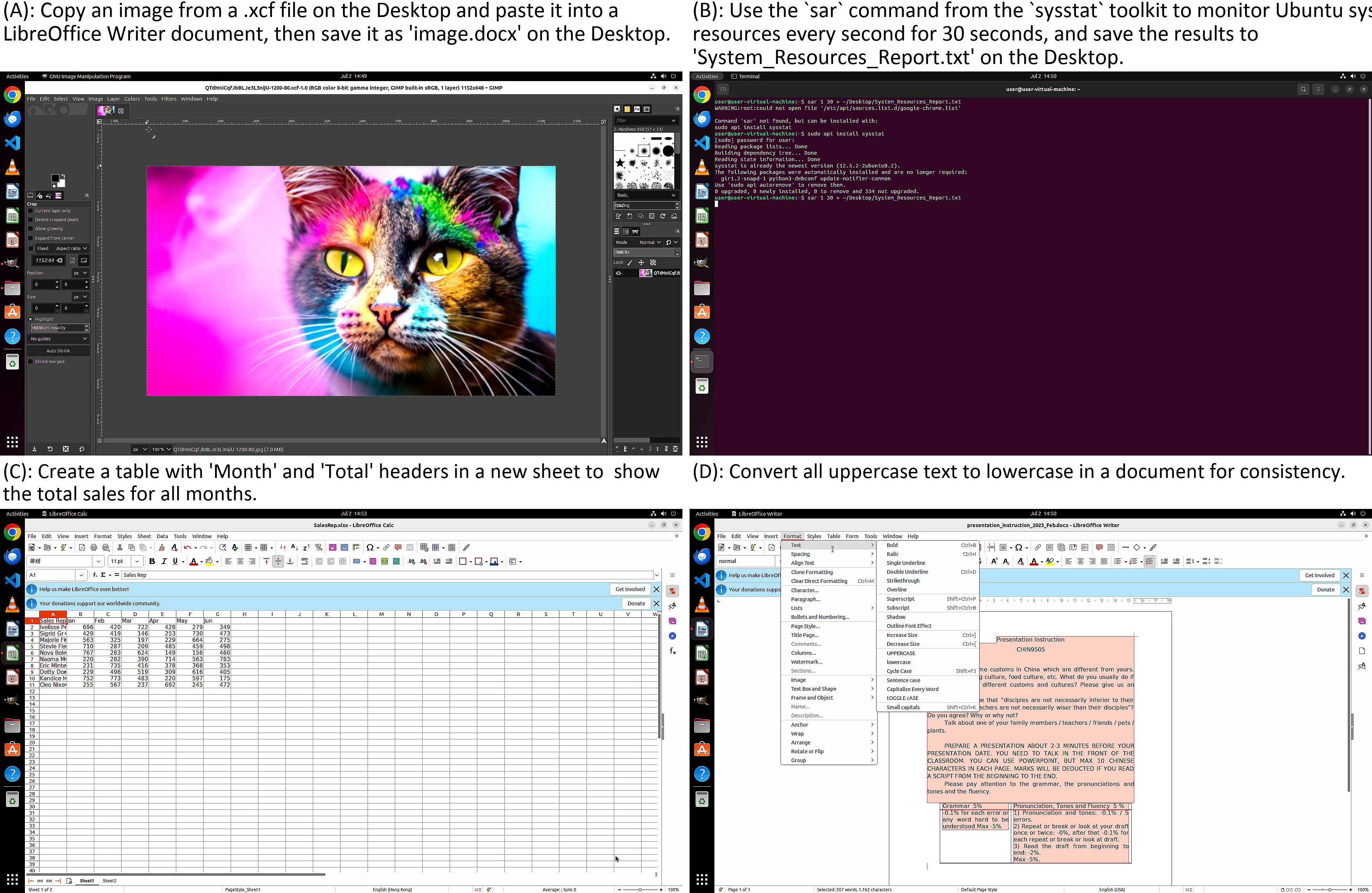

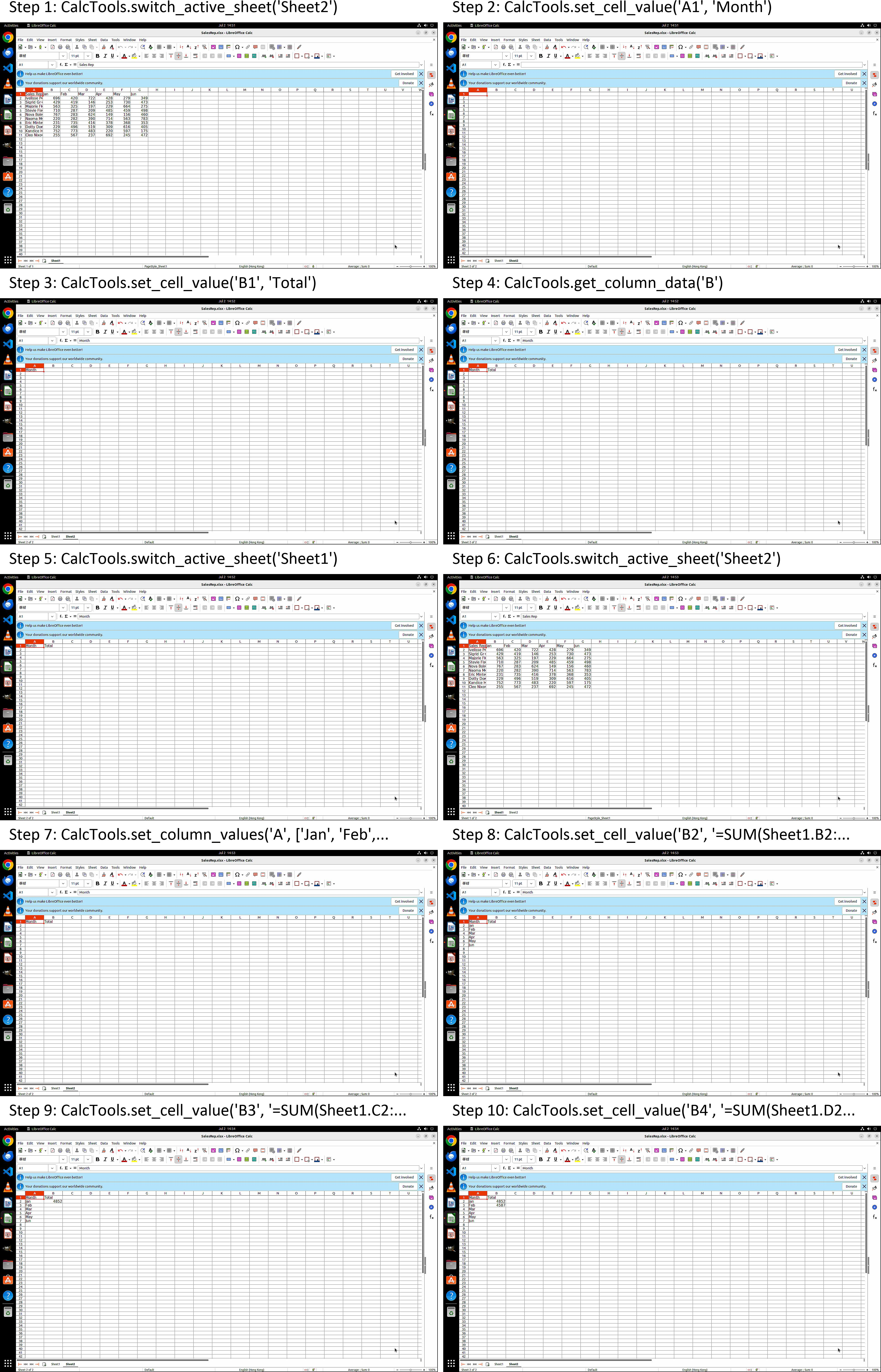

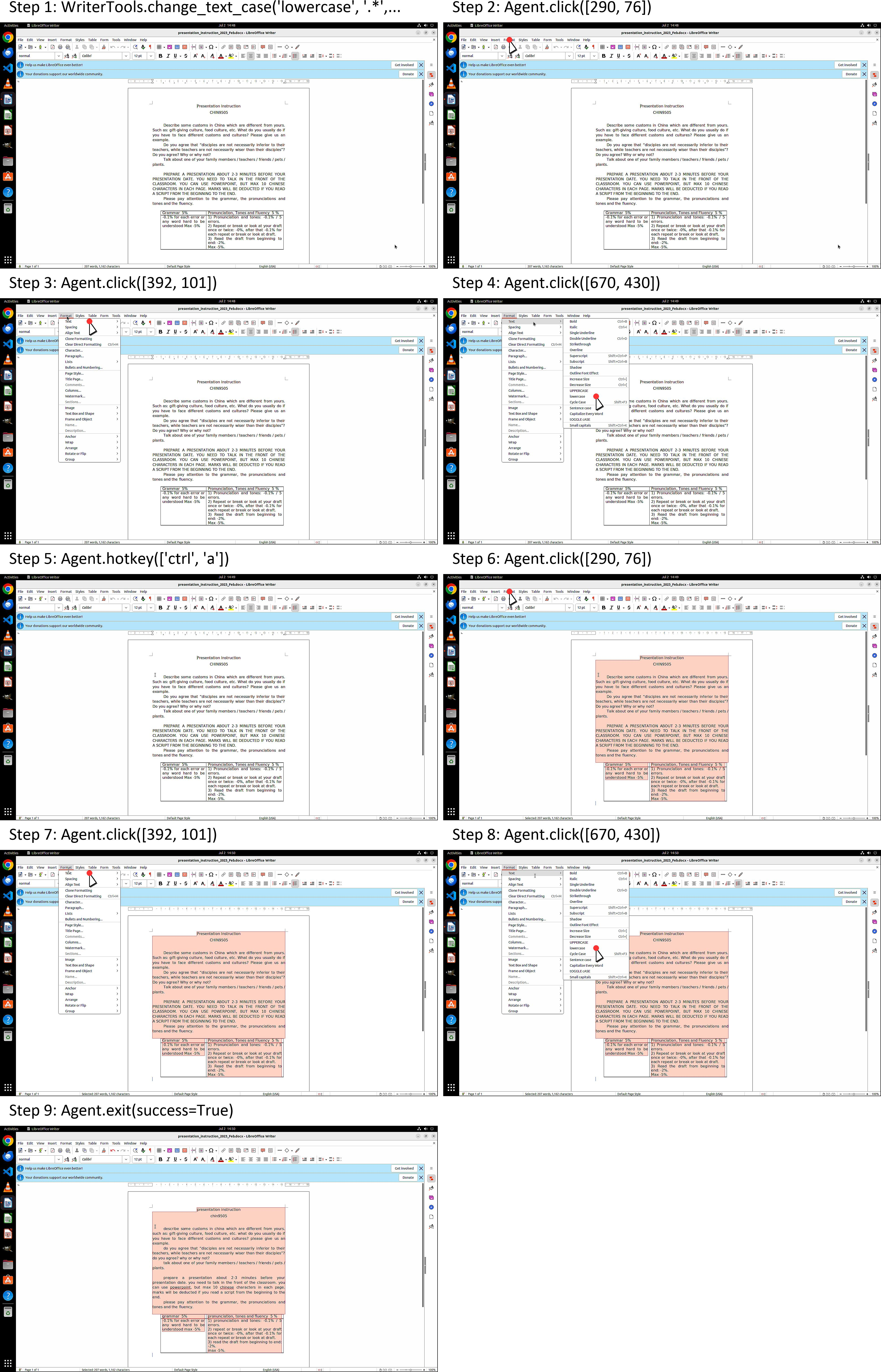

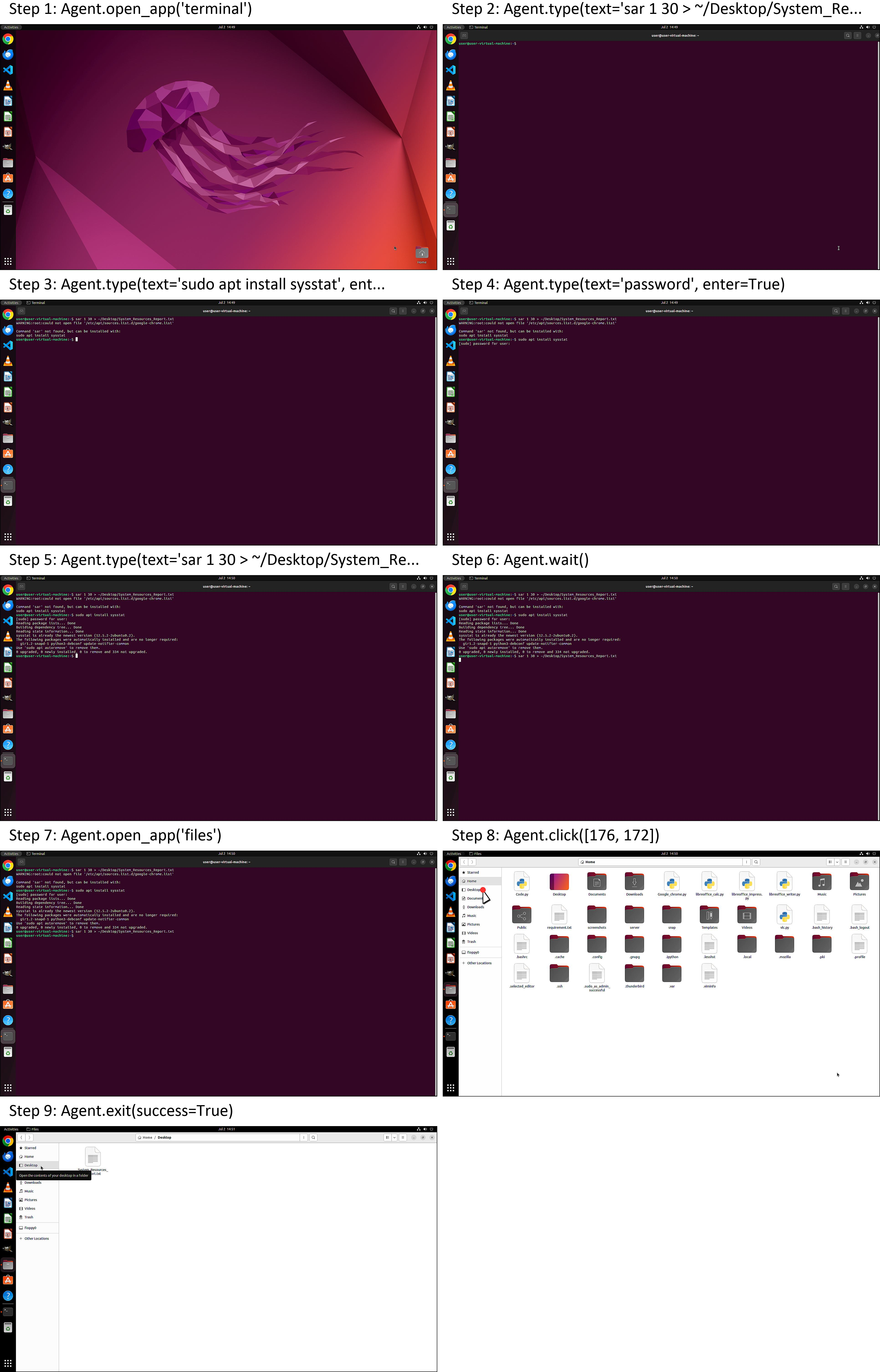

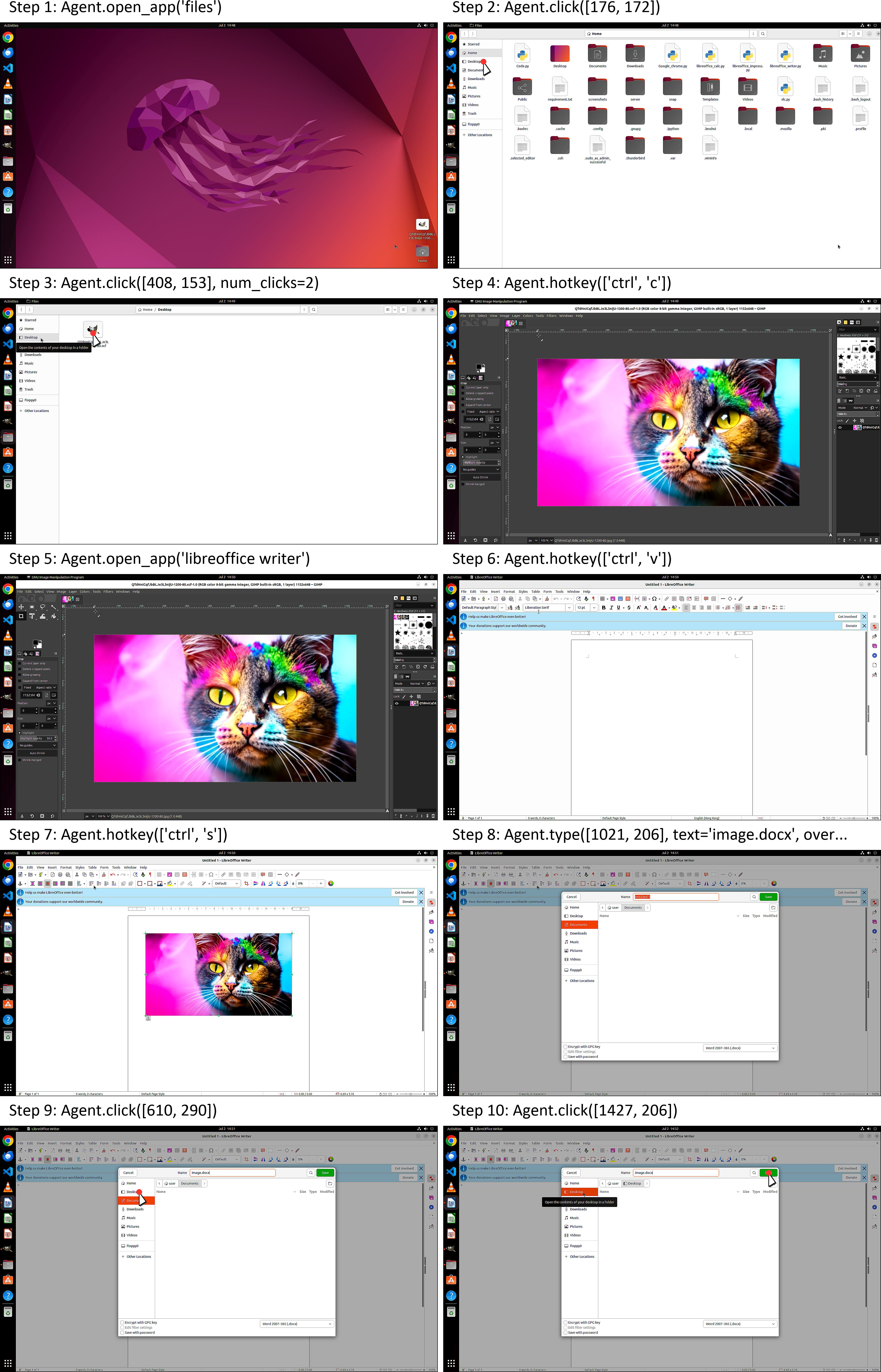

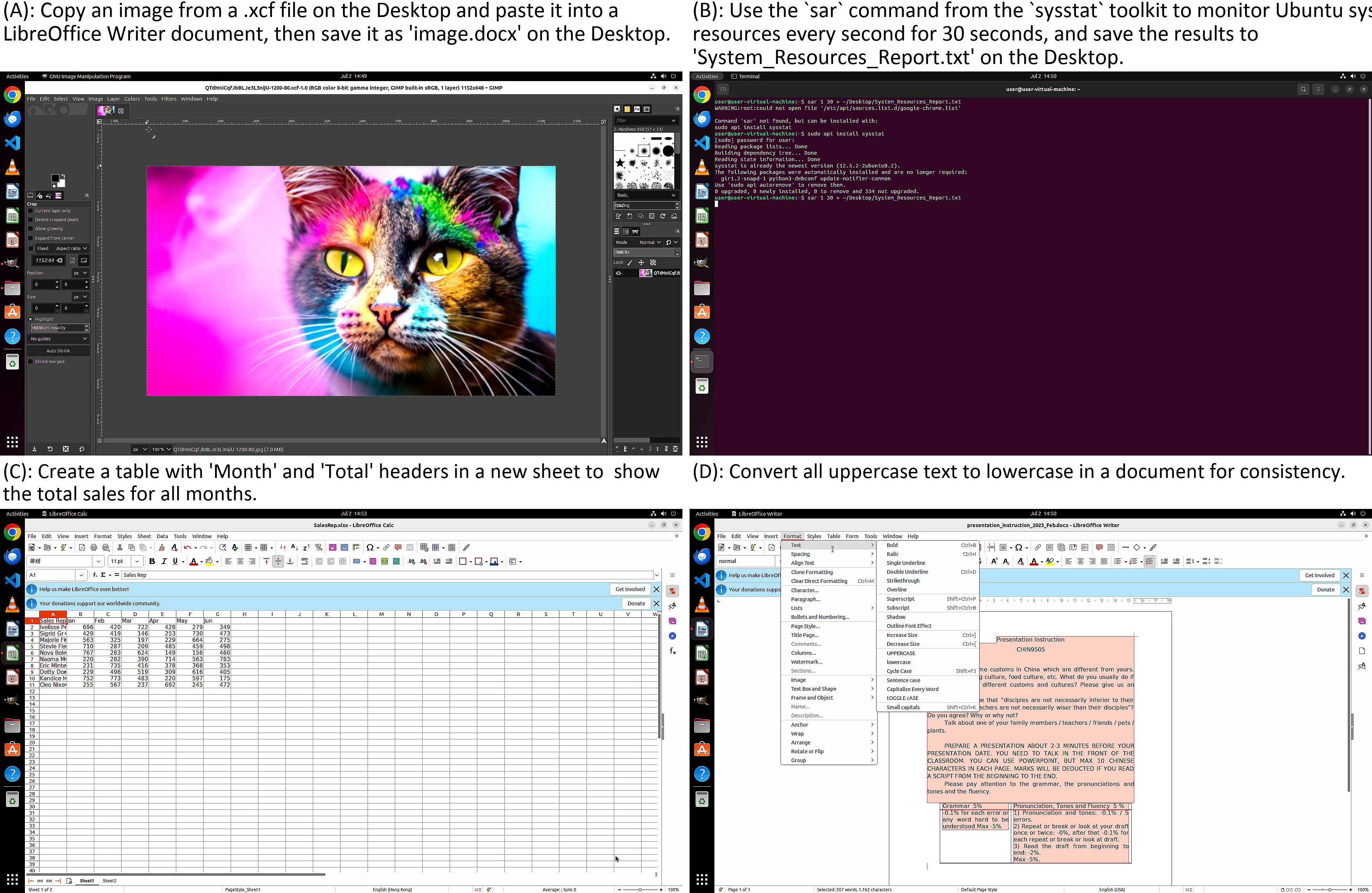

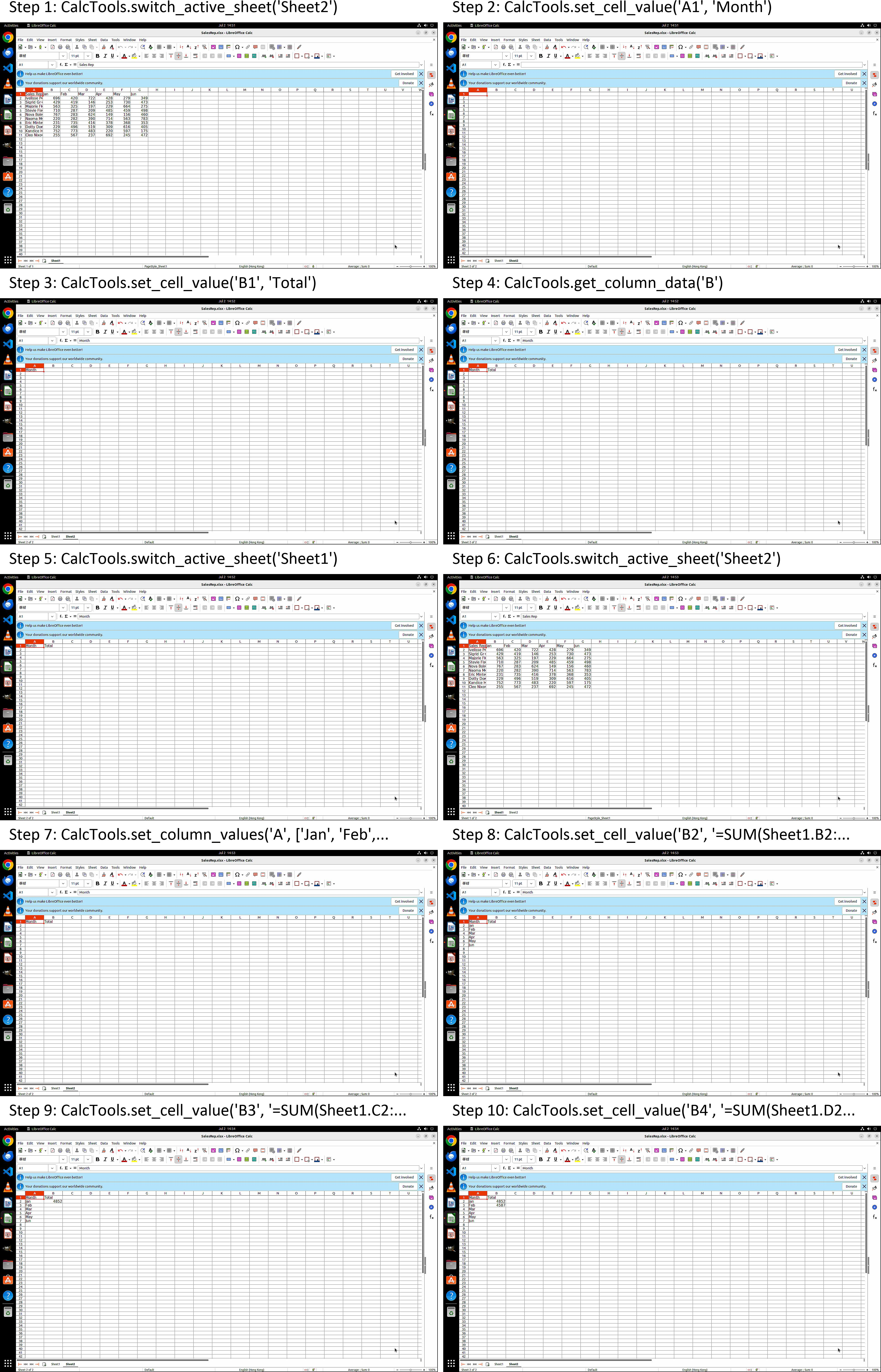

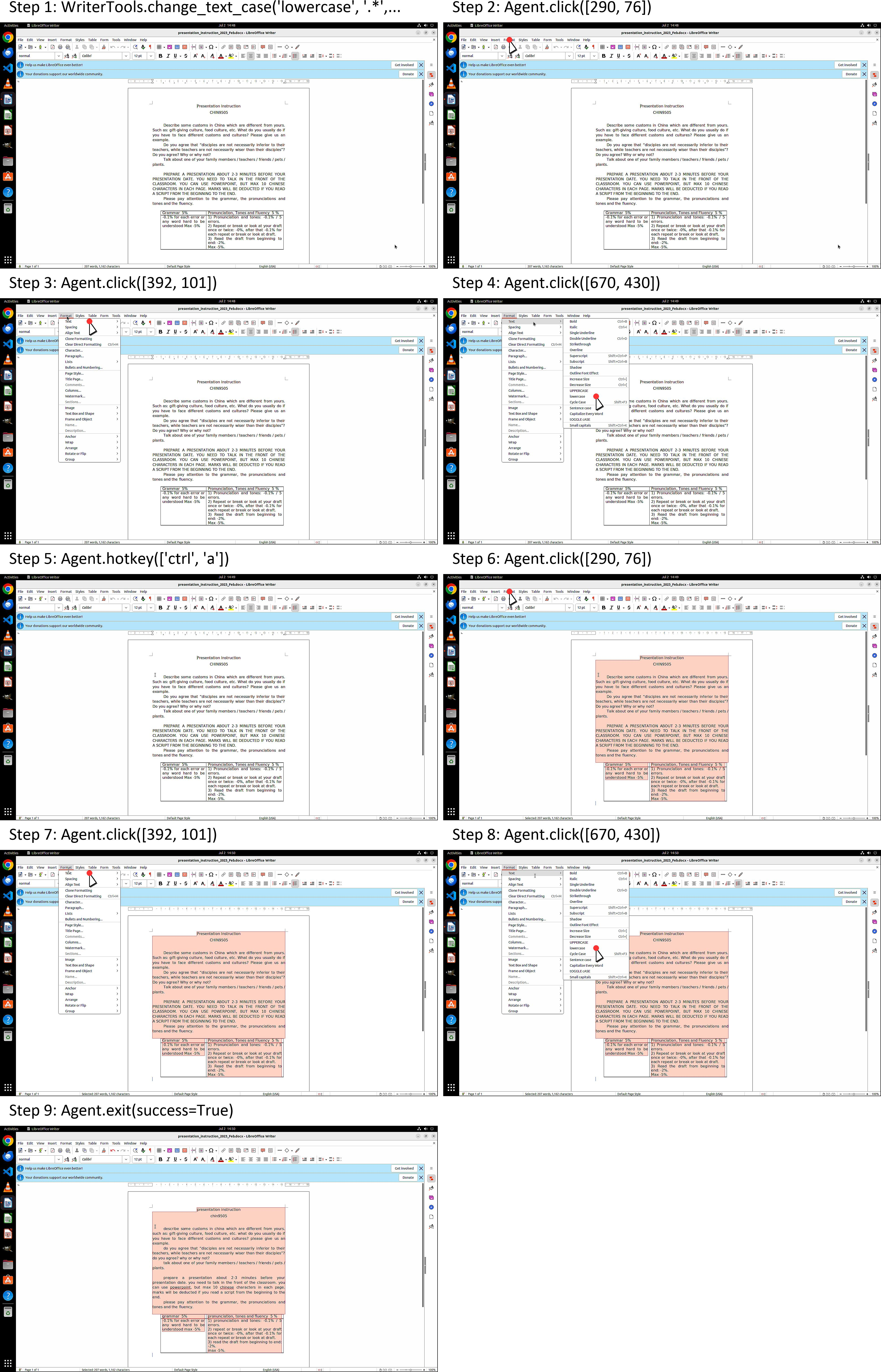

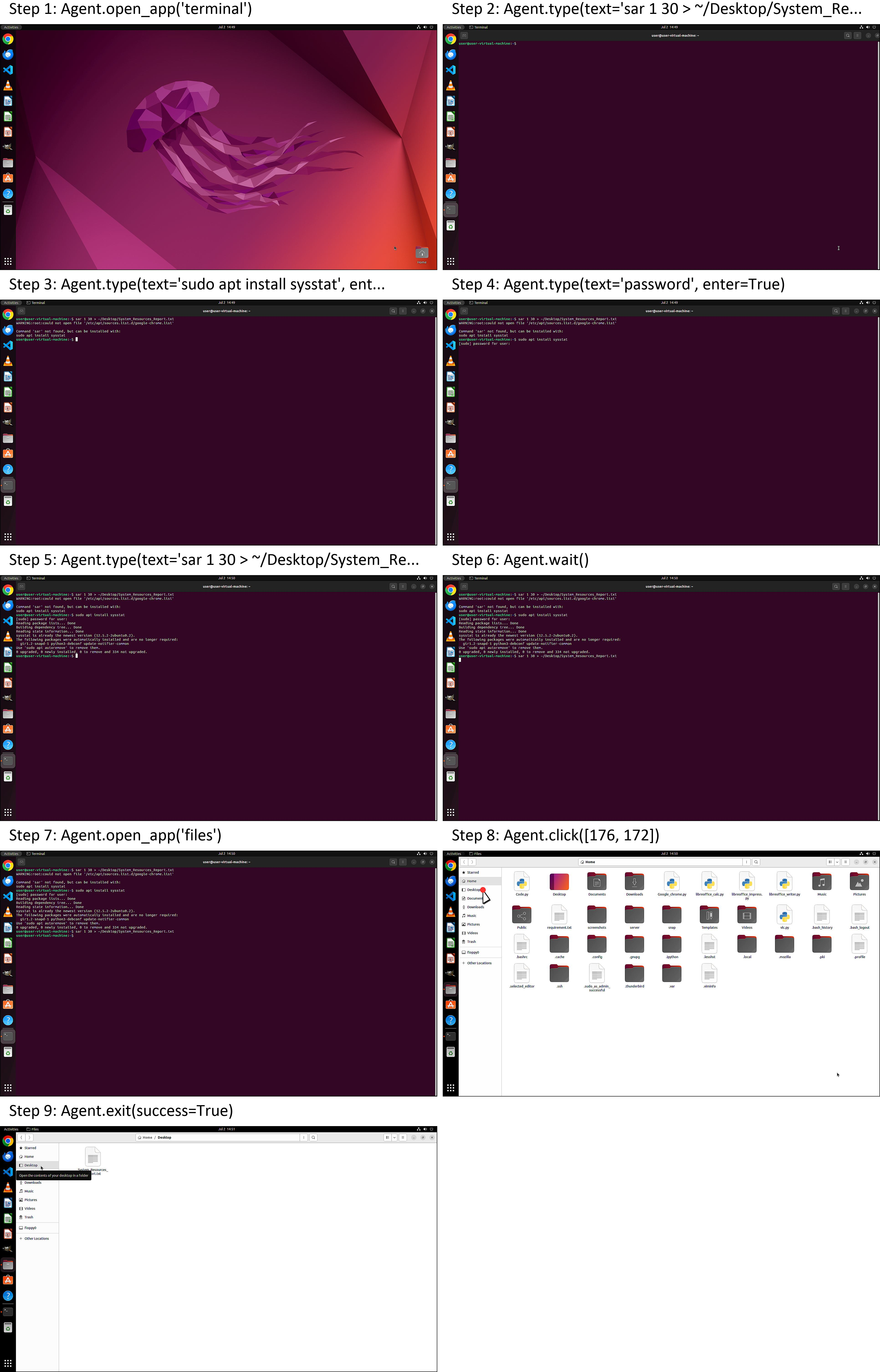

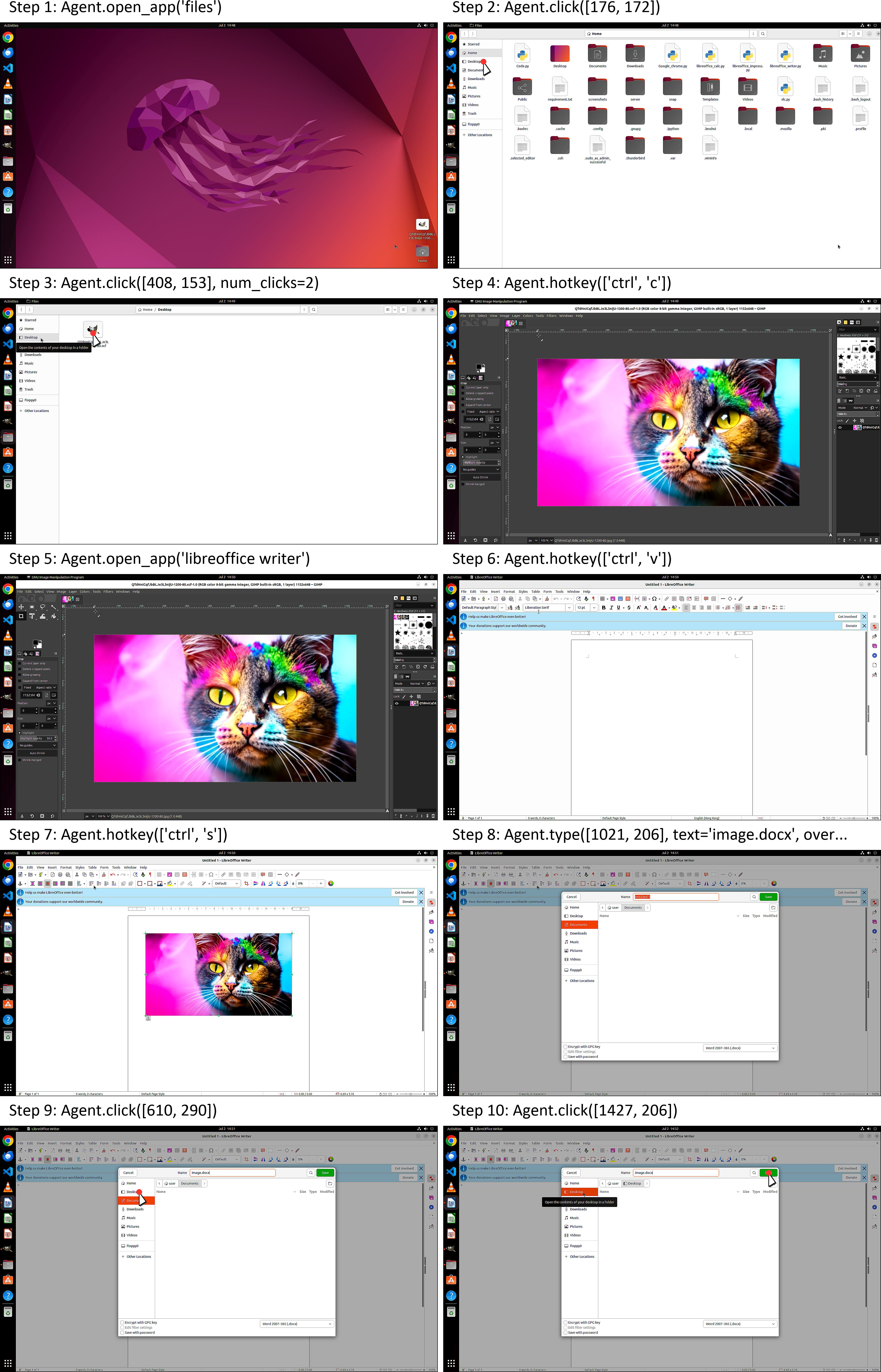

The paper provides detailed case studies illustrating the agent's capabilities across diverse desktop tasks, including table creation in LibreOffice Calc, text formatting in Writer, system resource monitoring via Terminal, and image manipulation between GIMP and Writer.

Figure 5: AutoGLM-OS executing representative user tasks across multiple desktop applications.

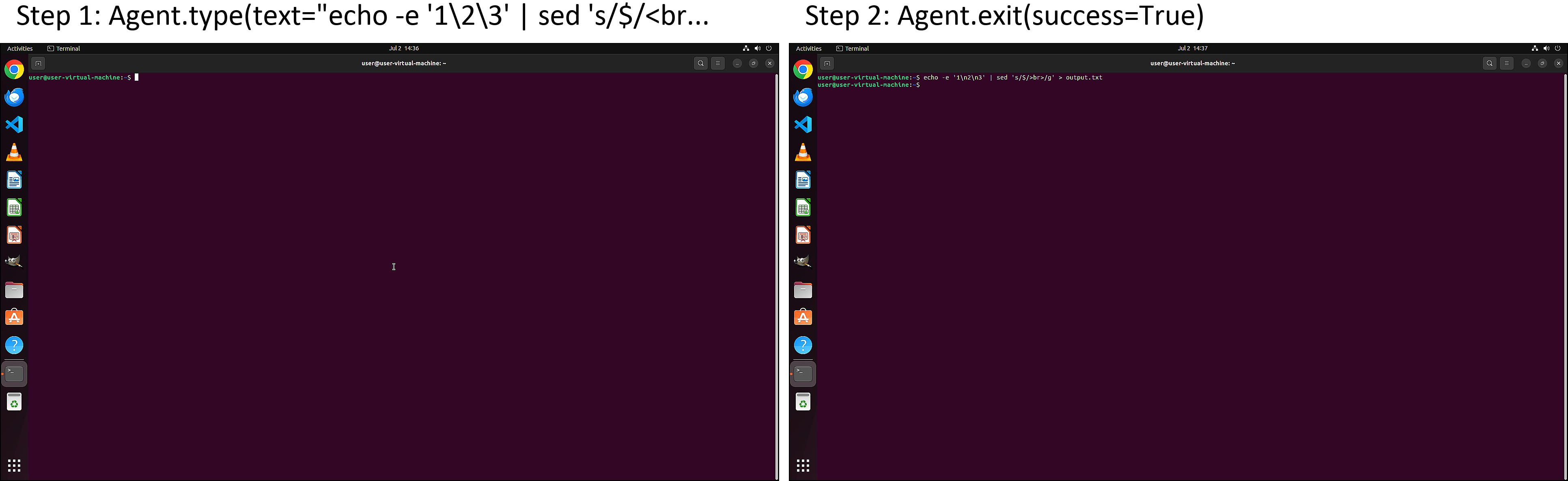

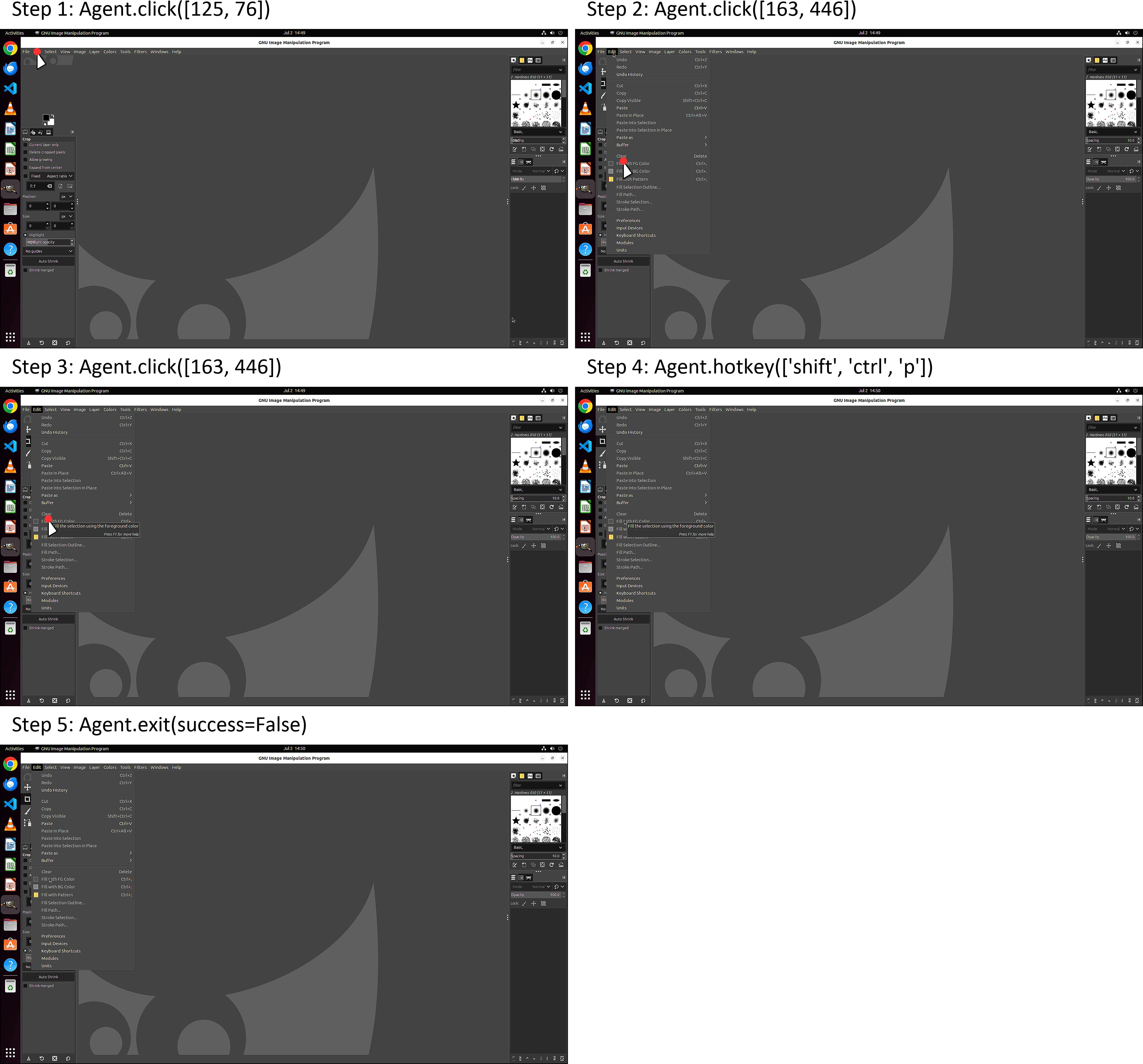

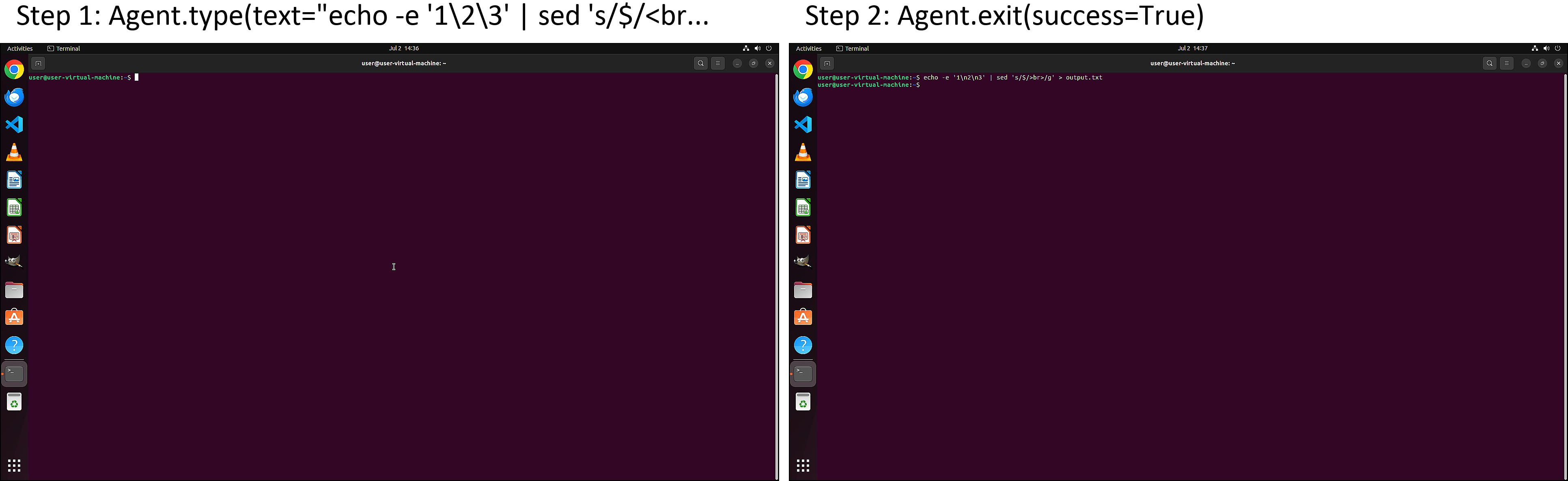

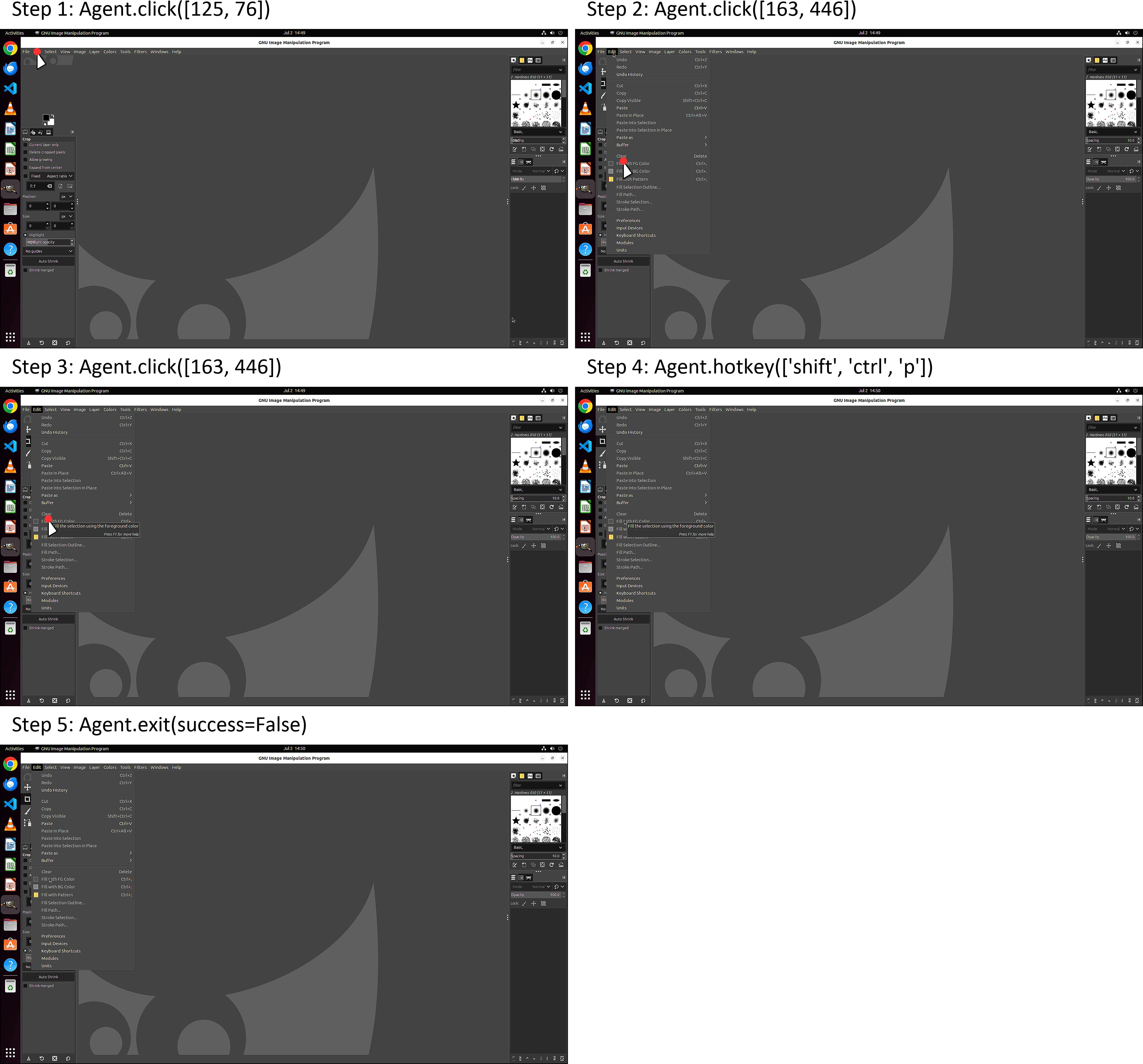

Additional figures highlight both successful and failed task executions, elucidating error modes such as question misunderstanding and click operation failures.

Figure 6: Stepwise execution of a table creation task in LibreOffice Calc.

Figure 7: Execution of a text case conversion task in LibreOffice Writer.

Figure 8: System resource monitoring and report generation via Terminal.

Figure 9: Image transfer from GIMP to LibreOffice Writer and document saving.

Figure 10: Example of a question misunderstanding error during task execution.

Figure 11: Example of a click operation error in GIMP theme change.

Implications and Future Directions

ComputerRL establishes a scalable and generalizable foundation for autonomous desktop agents, with strong empirical evidence for the effectiveness of hybrid API-GUI actions and multi-stage RL training. The framework's distributed infrastructure and automated API construction pipeline are directly applicable to broader device-control and multimodal agent research.

Methodologically, the results provide empirical support for step-level GRPO in complex environments and for restoring entropy via SFT to sustain policy improvement. Practically, the system enables efficient training and deployment of agents capable of long-horizon planning, multi-application coordination, and robust error recovery.

Future work should focus on expanding training diversity, integrating advanced multimodal perception, and developing hierarchical planning for long-duration workflows. Safety and alignment remain critical, necessitating granular permissioning and pre-action validation protocols.
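A pre-action validation gate with per-application permissions could look like the sketch below. It is purely illustrative: the permission table, operation names, and blocked patterns are hypothetical and not part of ComputerRL.

```python
from dataclasses import dataclass
from typing import Dict, Set


@dataclass(frozen=True)
class ProposedAction:
    app: str        # application the action targets, e.g. "terminal"
    operation: str  # hypothetical operation name, e.g. "run_command"
    argument: str = ""


# Hypothetical per-application permissions and risky patterns; not from the source.
PERMISSIONS: Dict[str, Set[str]] = {
    "calc": {"set_cell", "read_cell"},
    "terminal": {"run_command"},
}
BLOCKED_SUBSTRINGS = ("rm -rf", "shutdown")


def validate(action: ProposedAction) -> bool:
    """Return True only if the action is permitted and passes simple content checks."""
    allowed = PERMISSIONS.get(action.app, set())
    if action.operation not in allowed:
        return False  # unknown app or operation: deny by default
    return not any(bad in action.argument for bad in BLOCKED_SUBSTRINGS)
```

Denying by default keeps the gate conservative: any application or operation not explicitly granted is rejected before execution.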

Conclusion

ComputerRL advances the state of the art in desktop automation by integrating API-based and GUI actions, scalable RL infrastructure, and entropy-aware training. The framework demonstrates superior reasoning, accuracy, and generalization on OSWorld, laying the groundwork for persistent, capable, and safe autonomous computer use agents.