- The paper introduces SSRL, a framework for training LLM agents via self-search reinforcement learning without external search APIs.

- The methodology employs repeated sampling, intrinsic rewards, and format-based signals to enhance internal knowledge retrieval.

- Empirical results demonstrate improved accuracy and efficiency across benchmarks, with faster convergence and effective sim-to-real transfer.

SSRL: Self-Search Reinforcement Learning for LLMs as World Knowledge Simulators

The SSRL framework addresses the challenge of training LLM-based agents for search-driven tasks without incurring the prohibitive costs of interacting with external search engines during RL. The central hypothesis is that LLMs, due to their extensive pretraining on web-scale corpora, encode substantial world knowledge that can be elicited and utilized for agentic search tasks. SSRL quantifies the intrinsic search capability of LLMs via structured prompting and repeated sampling ("Self-Search"), and then enhances this capability through RL with format-based and rule-based rewards, enabling models to refine their internal knowledge utilization.

Inference-Time Scaling and Self-Search Analysis

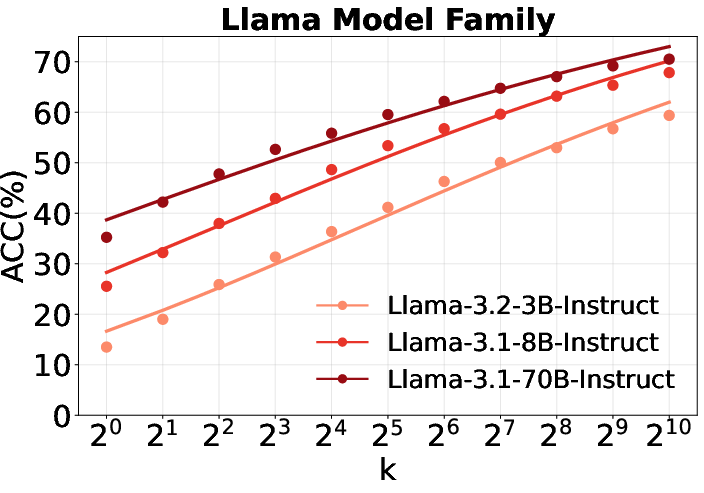

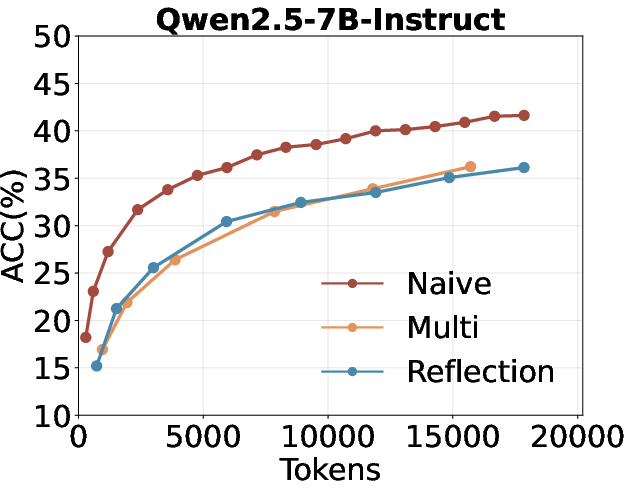

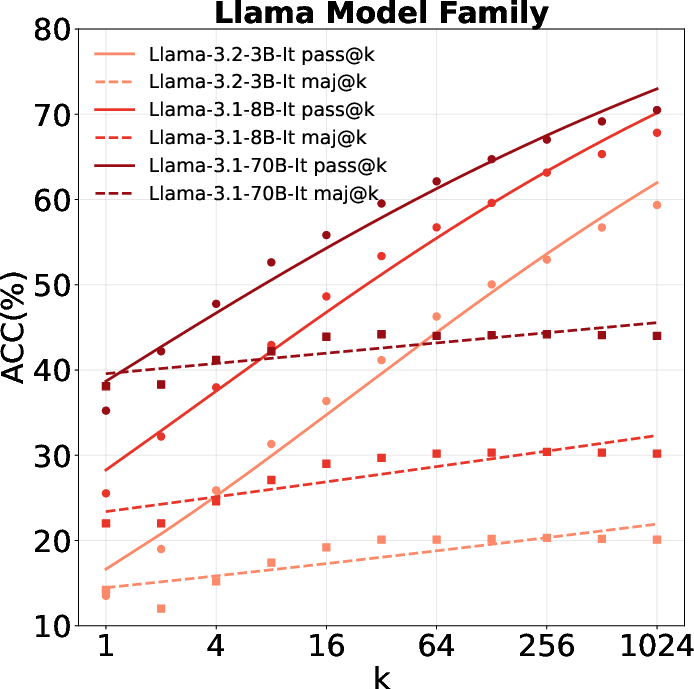

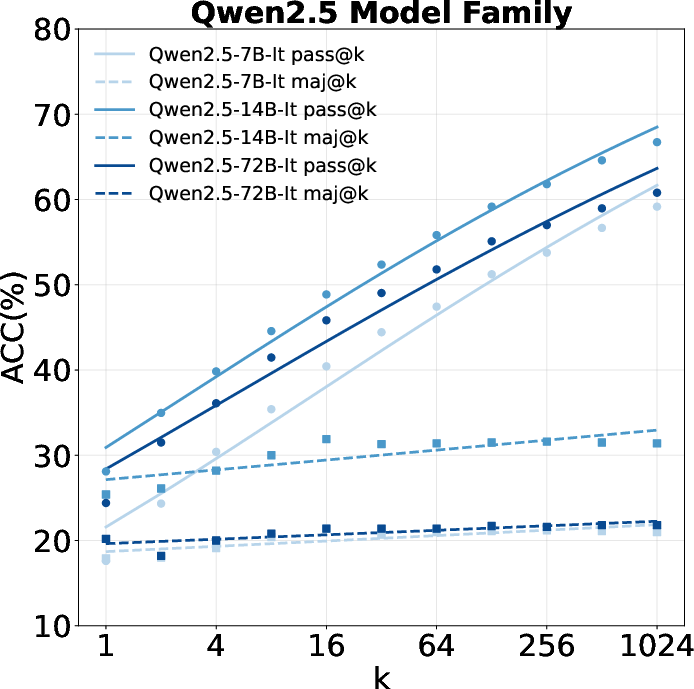

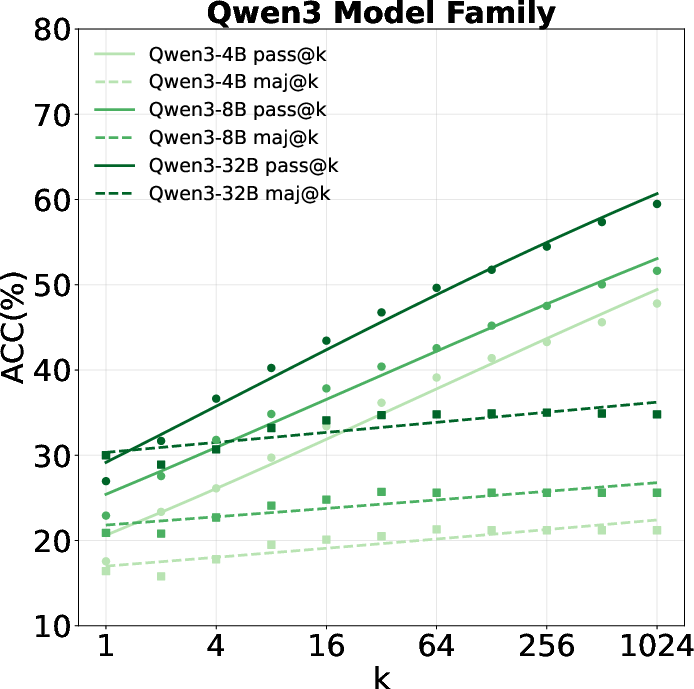

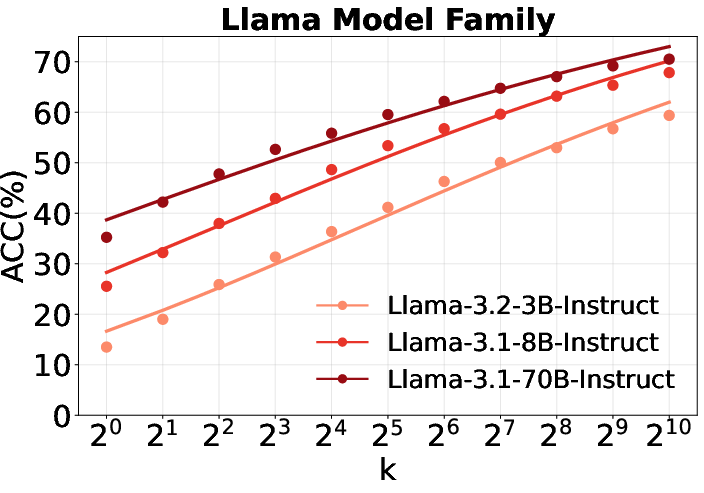

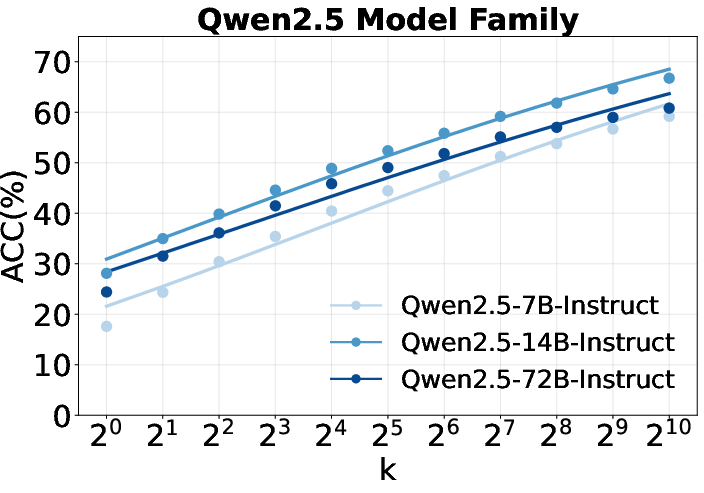

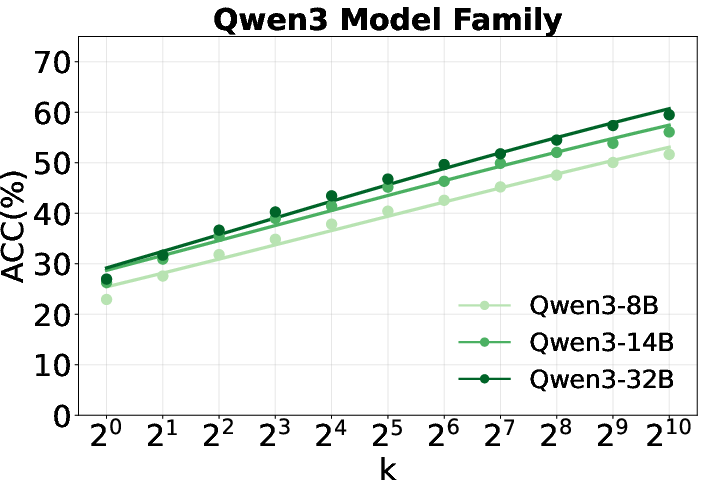

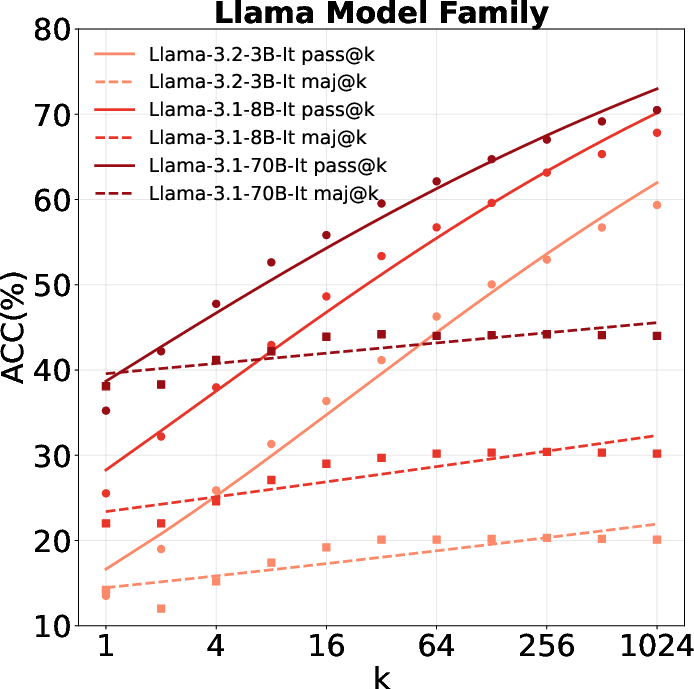

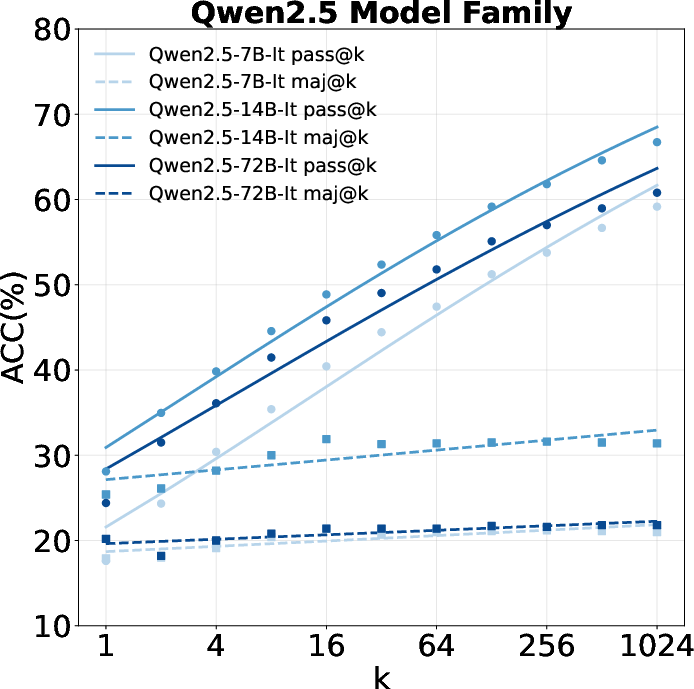

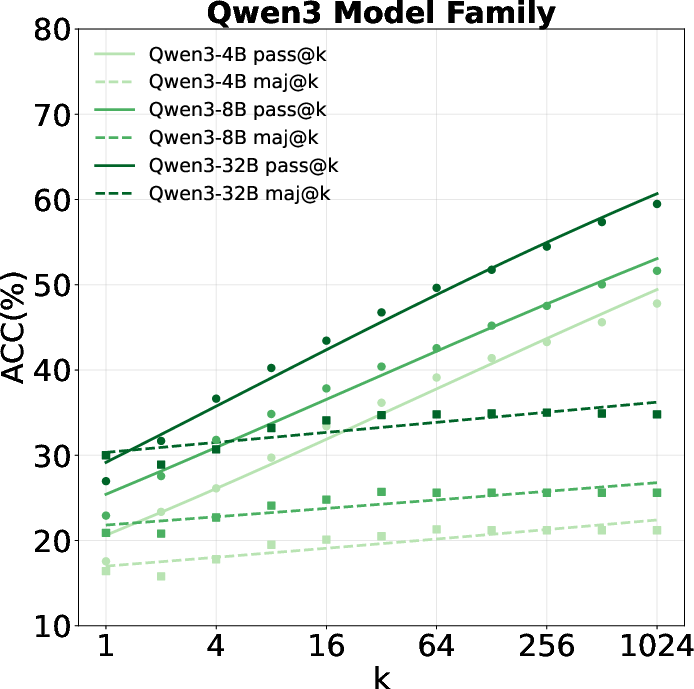

The paper introduces a rigorous evaluation of LLMs' self-search capacity using pass@k metrics across seven benchmarks, including factual, multi-hop, and web-browsing QA tasks. The scaling law for repeated sampling is formalized as $c \approx \exp(a k^{b})$, where $c$ is coverage (pass@k), $k$ is the number of samples, and $a$ and $b$ are fitted constants. Empirical results demonstrate that predictive performance improves monotonically with increased sampling, with smaller models approaching the performance of much larger models under sufficient sampling budgets.
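A minimal sketch of the two quantities this paragraph relies on. It assumes coverage is estimated with the standard unbiased pass@k estimator, 1 - C(n-m, k) / C(n, k) for n samples of which m are correct, and that the law is fit by ordinary least squares after linearizing. The function names are illustrative, not the paper's code. Taking logarithms twice turns $c \approx \exp(a k^{b})$ into $\log(-\log c) = \log(-a) + b \log k$, a straight line in $\log k$ that requires $0 < c < 1$ (so $a < 0$).

```python
import math
from typing import Sequence, Tuple

import numpy as np


def pass_at_k(n: int, num_correct: int, k: int) -> float:
    """Unbiased pass@k from n samples, num_correct of which are correct.

    Equals 1 - C(n - num_correct, k) / C(n, k): the chance that a random
    size-k subset of the n samples contains at least one correct sample.
    """
    if not 0 < k <= n:
        raise ValueError("need 0 < k <= n")
    if n - num_correct < k:
        return 1.0  # every size-k subset must include a correct sample
    return 1.0 - math.comb(n - num_correct, k) / math.comb(n, k)


def fit_coverage_law(ks: Sequence[int], coverages: Sequence[float]) -> Tuple[float, float]:
    """Fit c ~= exp(a * k**b) via the linear form log(-log c) = log(-a) + b*log(k)."""
    k = np.asarray(ks, dtype=float)
    c = np.asarray(coverages, dtype=float)
    if np.any((c <= 0.0) | (c >= 1.0)):
        raise ValueError("coverages must lie strictly between 0 and 1")
    # np.polyfit returns coefficients highest power first: [slope, intercept]
    slope, intercept = np.polyfit(np.log(k), np.log(-np.log(c)), deg=1)
    return -math.exp(intercept), float(slope)
```

With $a < 0$, coverage rises with $k$ only when $b < 0$, and a fitted $b$ near zero means extra samples buy little additional coverage. In practice one would average `pass_at_k` over a benchmark's questions for each $k$ before fitting.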

Figure 1: Scaling curves of repeated sampling for Qwen2.5, Llama, and Qwen3 families, showing consistent MAE improvements as sample size increases.

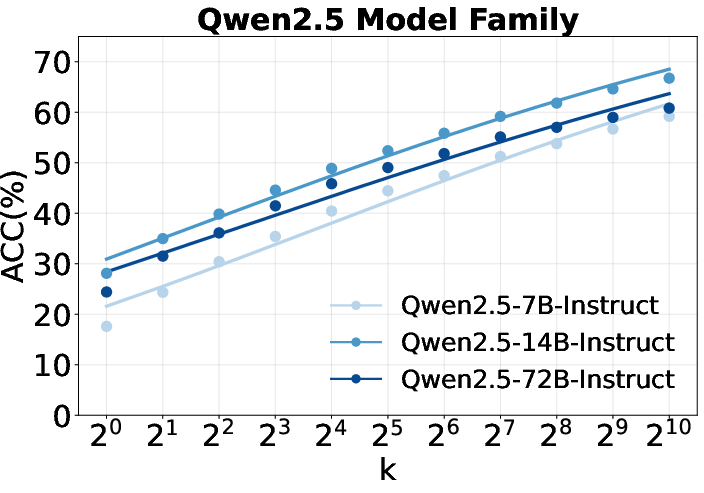

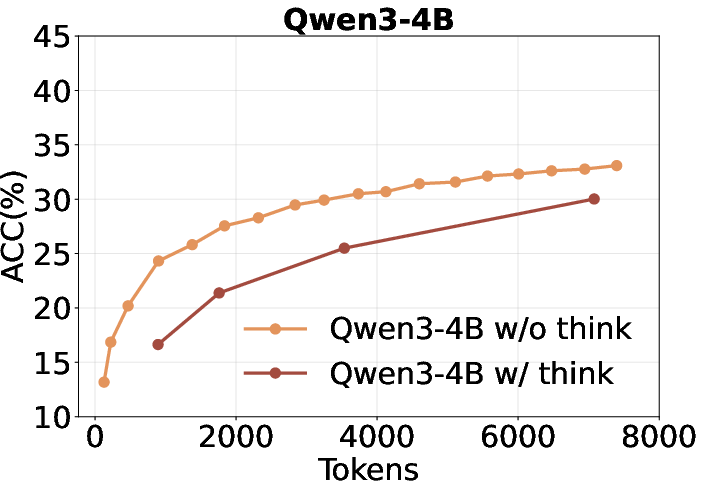

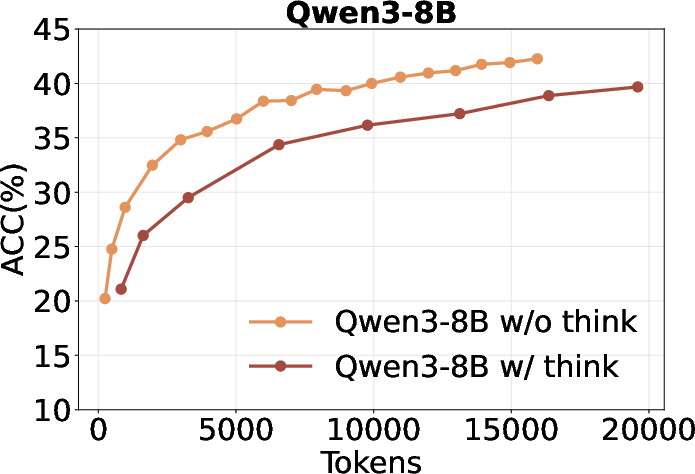

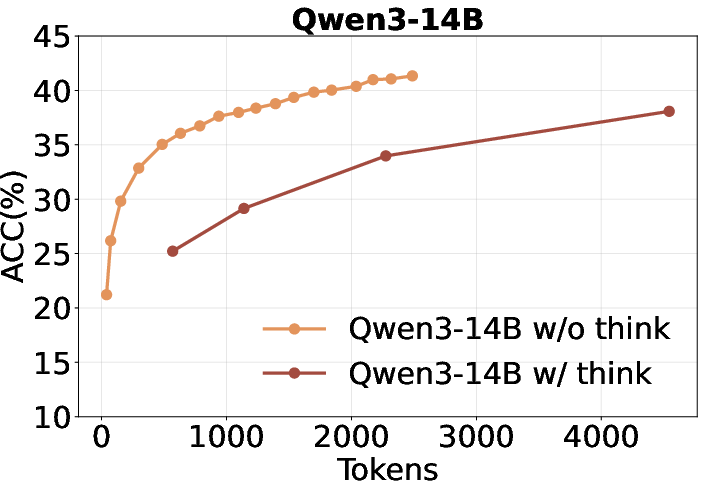

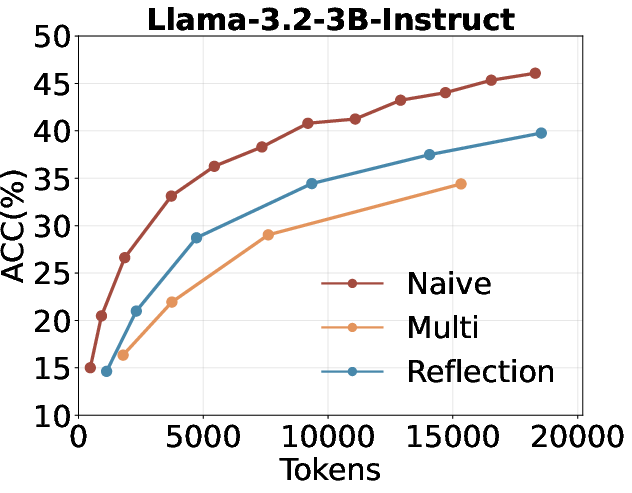

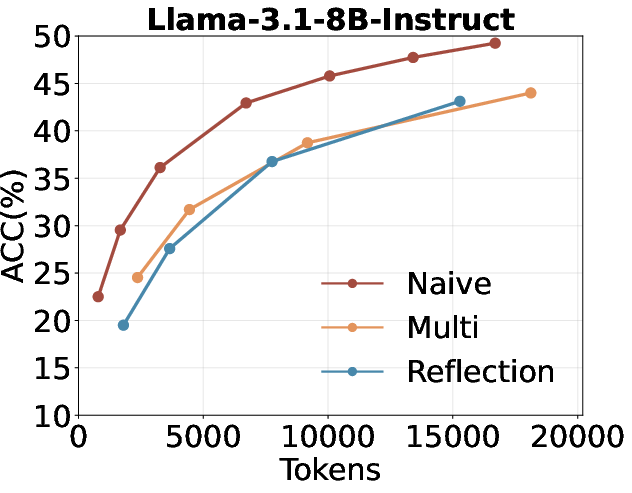

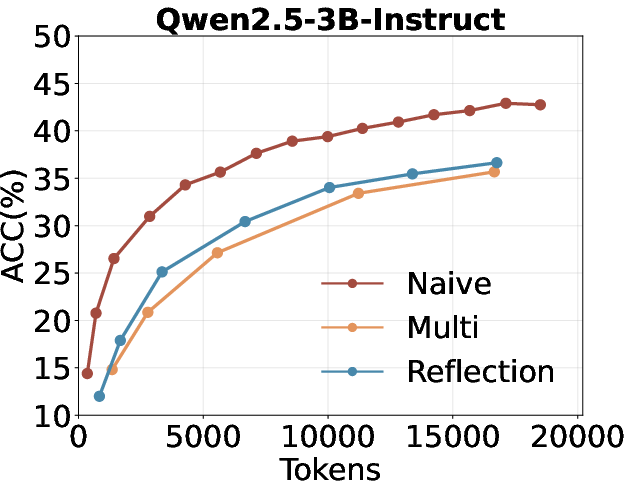

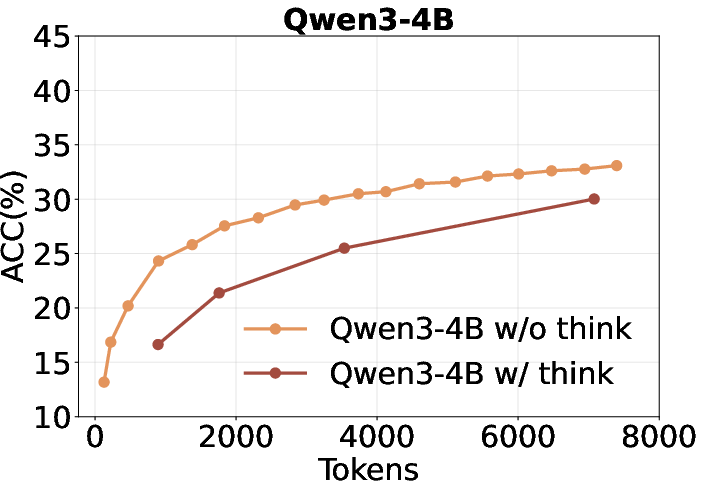

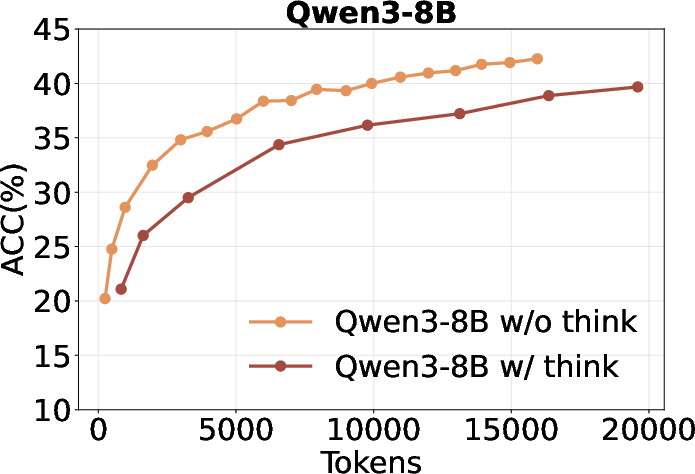

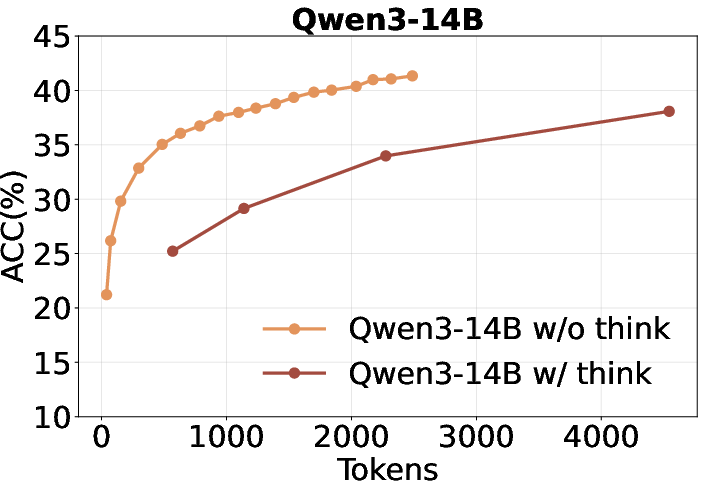

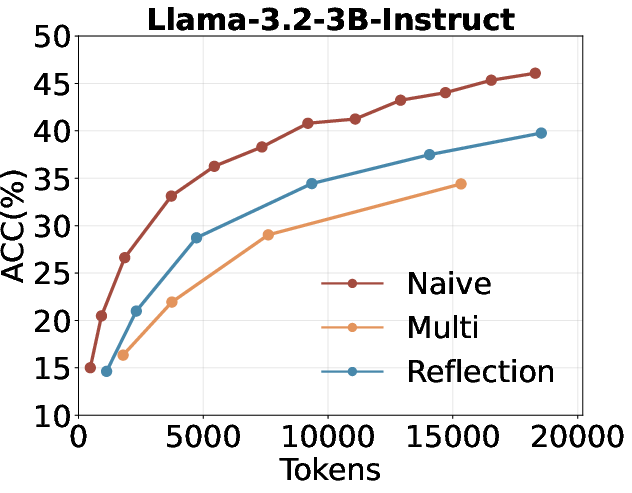

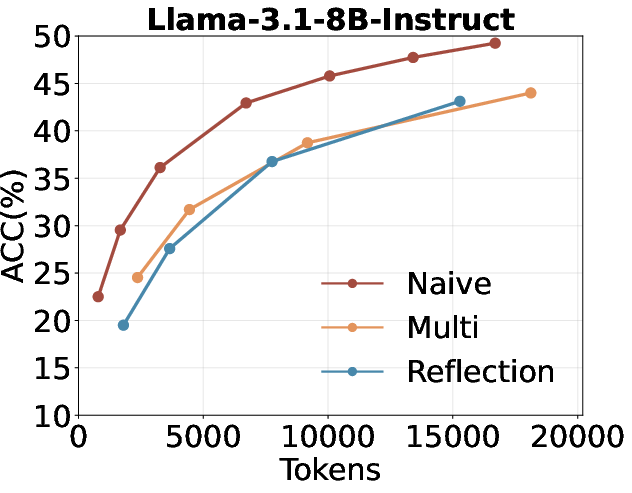

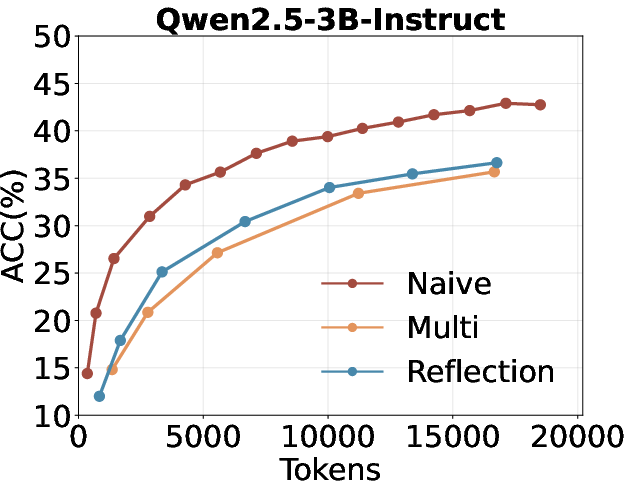

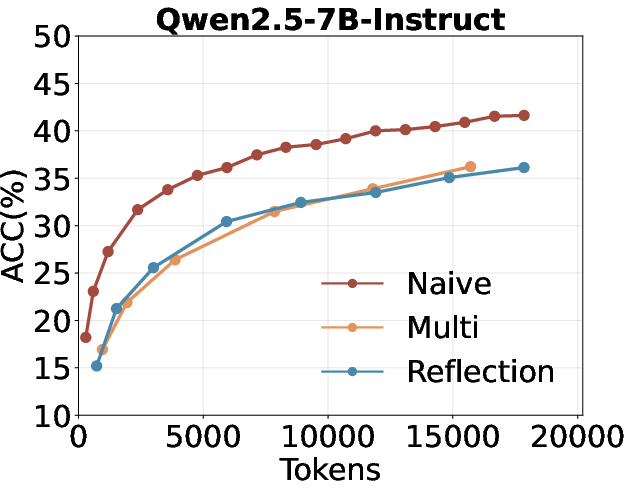

Contrary to prior findings in mathematical reasoning, Llama models outperform Qwen models in self-search settings, indicating that reasoning priors and world knowledge priors are not strongly correlated. Furthermore, increasing the number of "thinking" tokens or employing multi-turn/reflection strategies does not yield better performance in self-search, suggesting that knowledge utilization is more critical than extended reasoning for these tasks.

Figure 2: Qwen3 performance with and without forced-thinking, showing diminishing returns for extended reasoning token budgets.

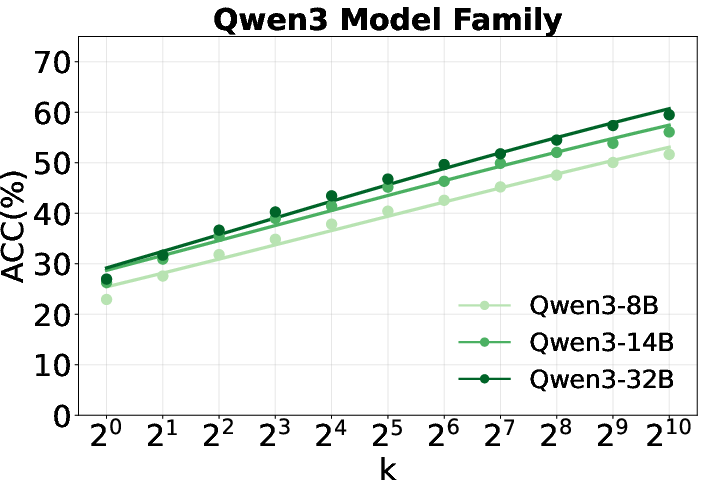

Figure 3: Comparison of repeated sampling, multi-turn self-search, and self-search with reflection under fixed token budgets.

Majority voting over sampled outputs provides only marginal gains, highlighting the difficulty of reliably extracting the optimal answer from the candidate set when ground truth is unknown.
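Majority voting returns the most frequent answer among the k samples, so it succeeds only when the correct answer is also the most common one, whereas pass@k credits the question if the correct answer appears anywhere. A minimal sketch of the voting step, assuming SQuAD-style answer normalization (lowercasing, stripping punctuation and articles); the paper's exact normalization and tie-breaking rules are not specified here, and the function names are illustrative.

```python
import re
import string
from collections import Counter
from typing import Optional, Sequence

_PUNCT_TABLE = str.maketrans("", "", string.punctuation)


def normalize_answer(text: str) -> str:
    """SQuAD-style normalization: lowercase, strip punctuation and articles, collapse spaces."""
    text = text.lower().translate(_PUNCT_TABLE)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def majority_vote(candidates: Sequence[str]) -> Optional[str]:
    """Most frequent normalized answer; ties go to the answer seen first."""
    normalized = [normalize_answer(c) for c in candidates]
    normalized = [c for c in normalized if c]  # ignore empty candidates
    if not normalized:
        return None
    # Counter.most_common lists equal counts in first-encountered order.
    return Counter(normalized).most_common(1)[0][0]
```

If the correct answer appears once among eight samples while one wrong answer appears three times, pass@8 counts the question as solved but the vote returns the wrong answer, which is the selection gap the paragraph describes.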

Figure 4: Majority voting results averaged across six benchmarks, demonstrating limited scaling benefits.

SSRL: Reinforcement Learning with Internal Knowledge Retrieval

SSRL reformulates the RL objective for search agents by removing dependence on external retrieval, allowing the policy model to serve as both reasoner and internal search engine. The reward function combines outcome accuracy and format adherence, with an information mask applied to self-generated information tokens to encourage deeper reasoning.
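The text describes the reward only as combining outcome accuracy and format adherence. The sketch below shows one simple way to do that under explicit assumptions: trajectories use `<think>`, `<search>`, `<information>`, and `<answer>` tags; correctness is exact match after light normalization; the format check only verifies that tag counts balance and that a single `<answer>` block closes the response; and the additive `format_weight` of 0.1 is a hypothetical value, not the paper's setting.

```python
import re
from typing import Sequence

# Assumed tag vocabulary for a self-search trajectory (hypothetical).
TAGS = ("think", "search", "information", "answer")
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace (deliberately light normalization)."""
    return " ".join(text.lower().split())


def format_ok(response: str) -> bool:
    """Check balanced tag counts and a single <answer> block at the very end."""
    for tag in TAGS:
        if response.count(f"<{tag}>") != response.count(f"</{tag}>"):
            return False
    return len(ANSWER_RE.findall(response)) == 1 and response.rstrip().endswith("</answer>")


def outcome_reward(response: str, gold_answers: Sequence[str]) -> float:
    """1.0 if the first <answer> block exactly matches any gold answer, else 0.0."""
    match = ANSWER_RE.search(response)
    if match is None:
        return 0.0
    prediction = normalize(match.group(1))
    return 1.0 if any(prediction == normalize(g) for g in gold_answers) else 0.0


def total_reward(response: str, gold_answers: Sequence[str], format_weight: float = 0.1) -> float:
    """Outcome reward plus a small hypothetical bonus for well-formed output."""
    return outcome_reward(response, gold_answers) + format_weight * float(format_ok(response))
```

Keeping the format term small relative to the outcome term means a well-formatted wrong answer (0.1 here) never outscores a correct one.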

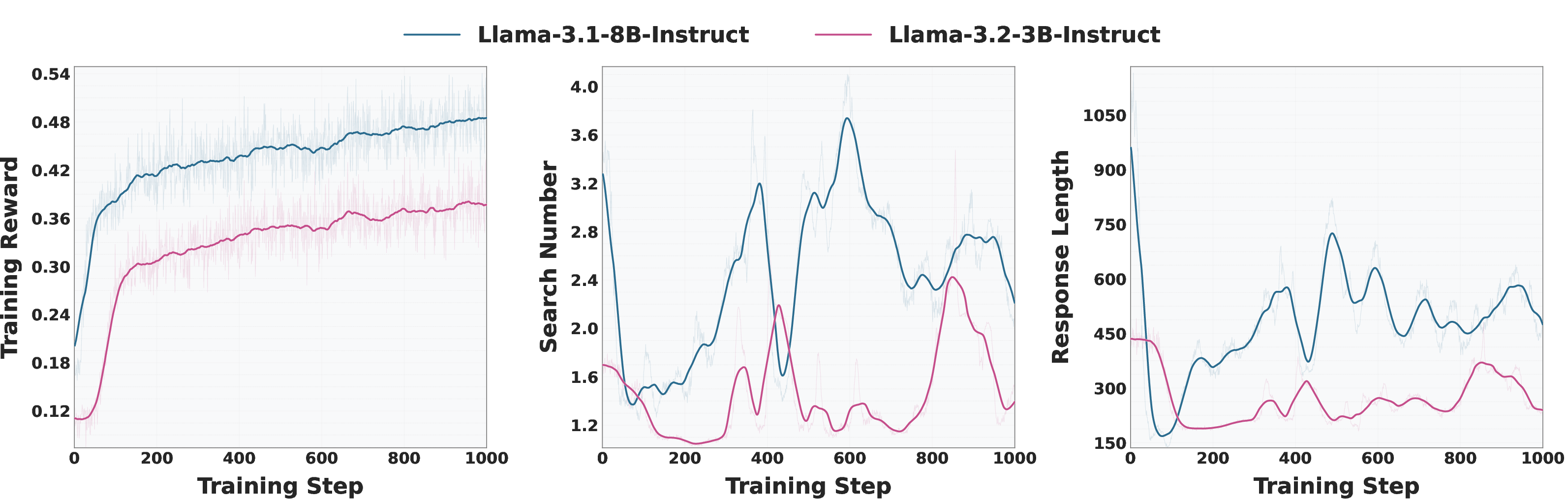

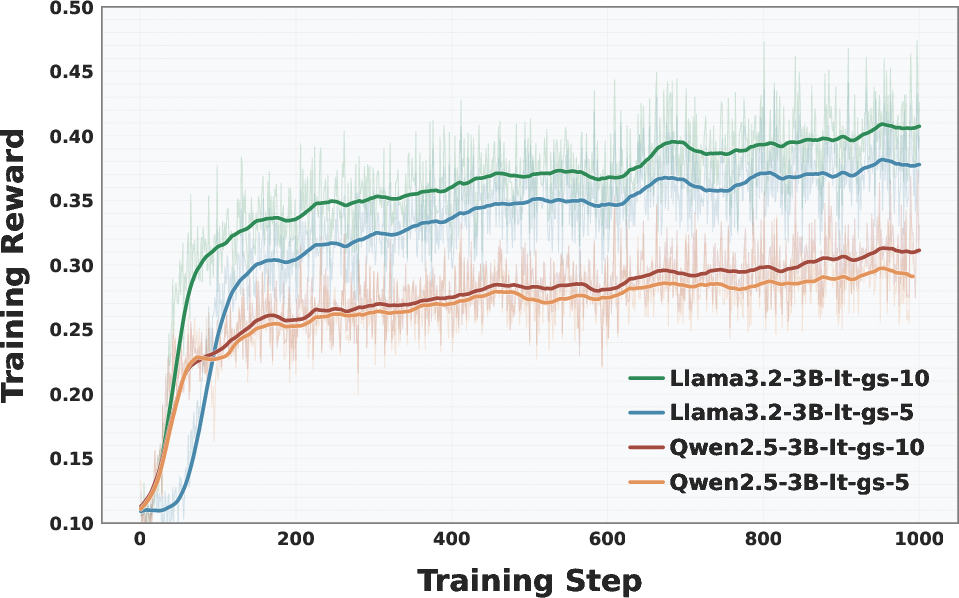

Empirical results show that SSRL-trained models consistently outperform baselines relying on external search engines or simulated LLM search, both in terms of accuracy and training efficiency. Instruction-tuned models demonstrate superior internal knowledge utilization compared to base models, and larger models exhibit more sophisticated self-search strategies.

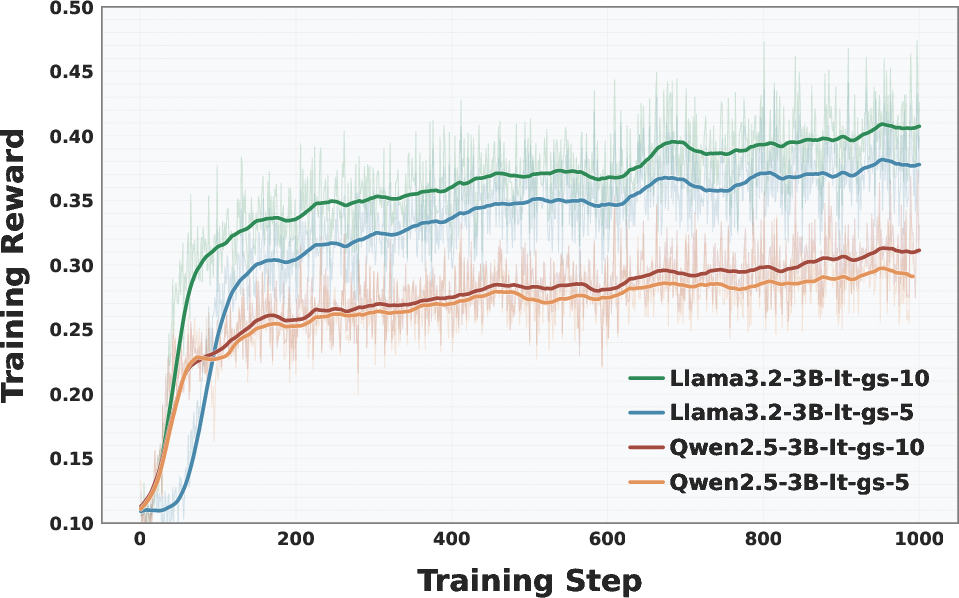

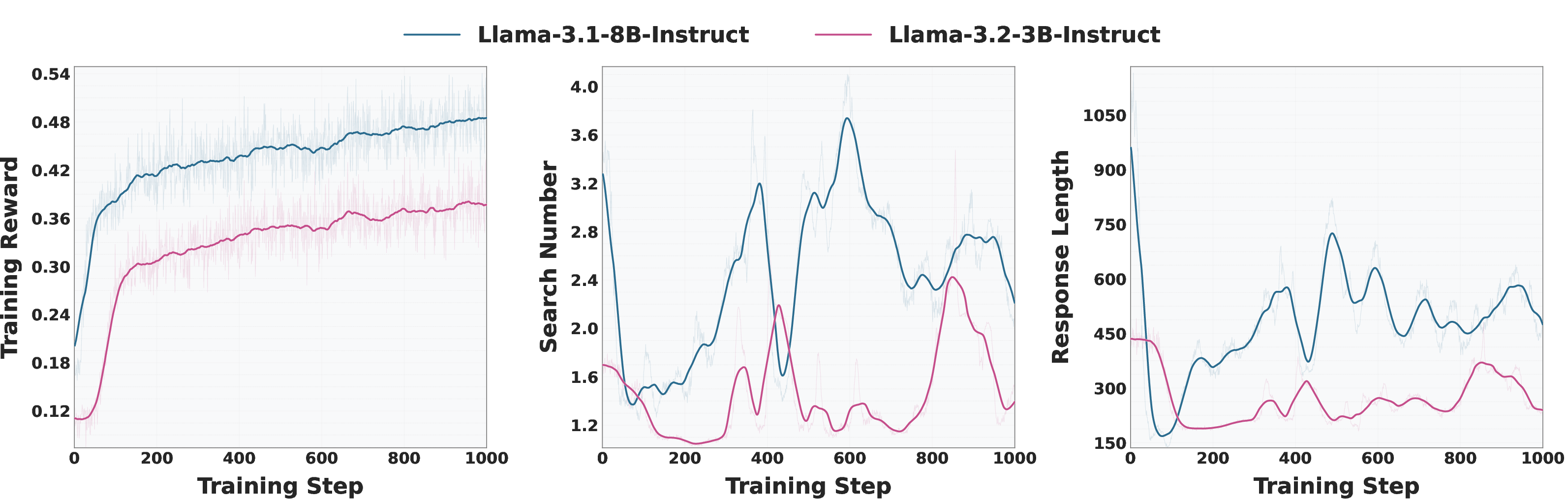

Figure 5: Training curves for Llama-3.2-3B-Instruct and Llama-3.1-8B-Instruct, showing reward, response length, and search count dynamics.

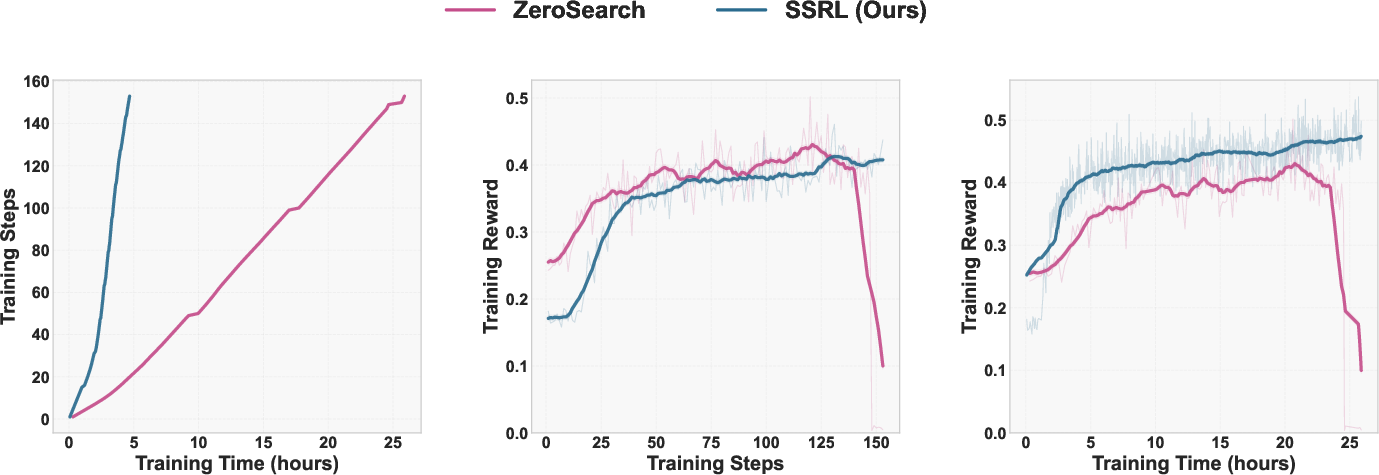

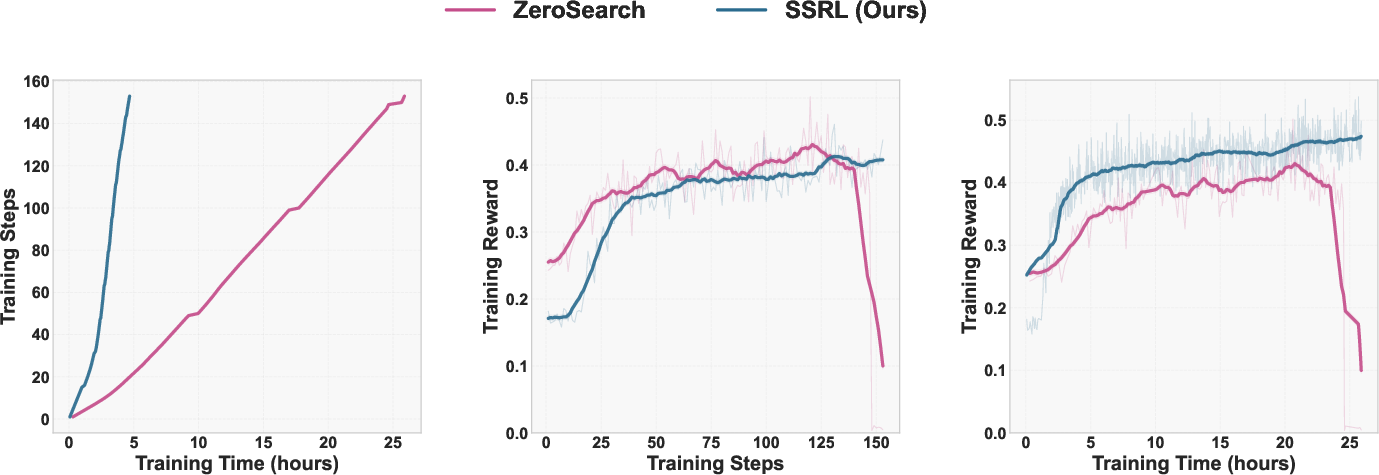

Figure 6: SSRL vs. ZeroSearch training efficiency, with SSRL achieving faster convergence and higher reward per unit time.

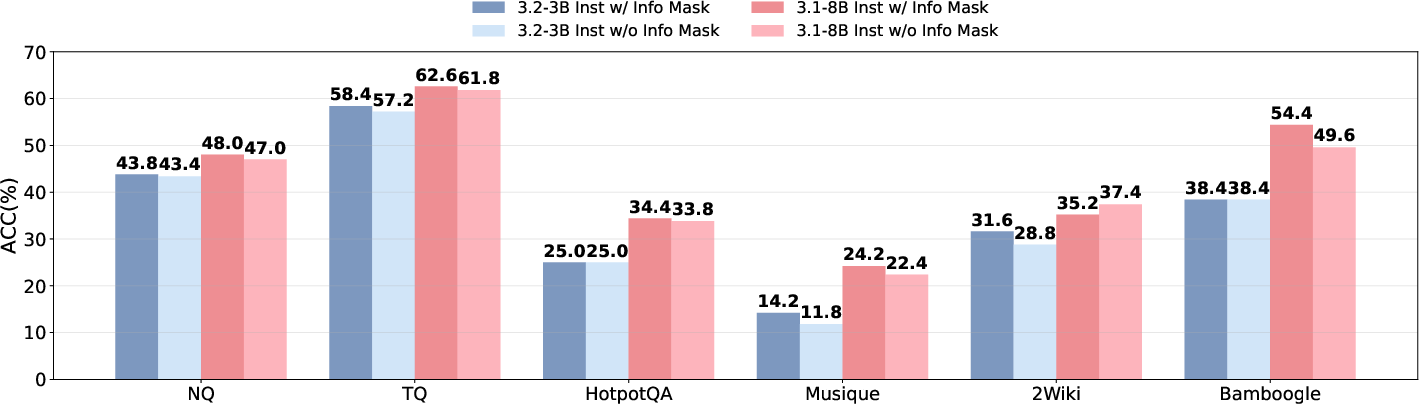

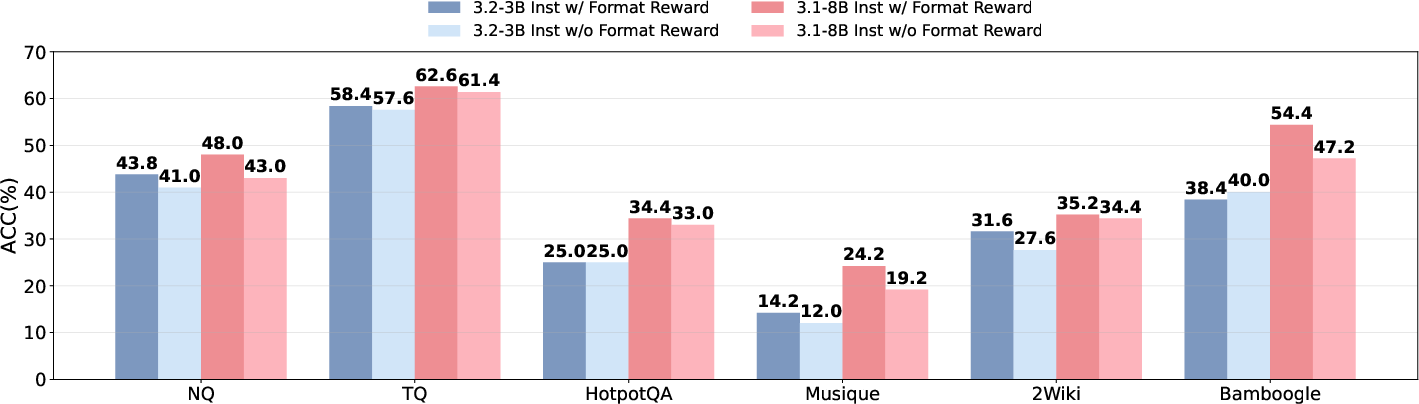

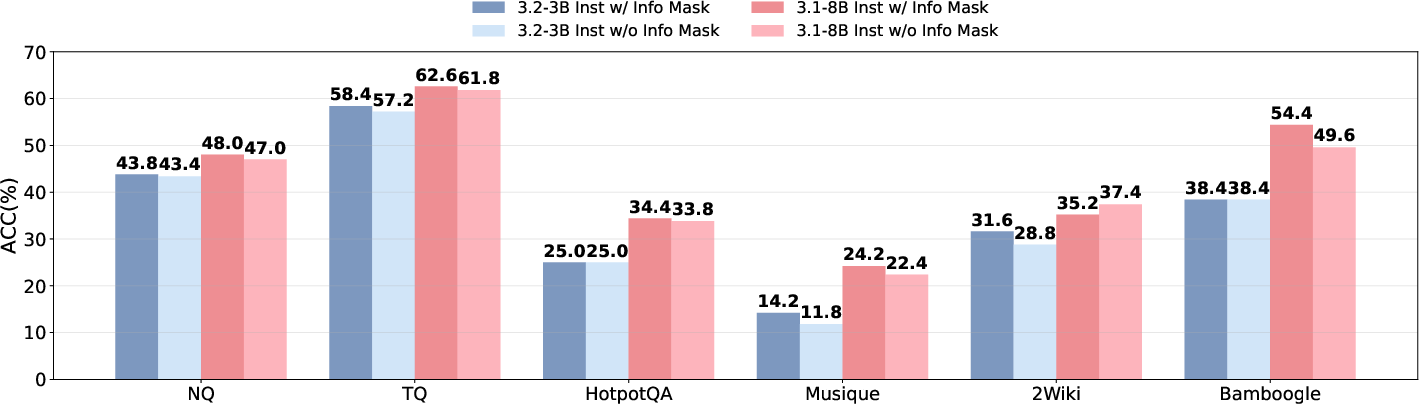

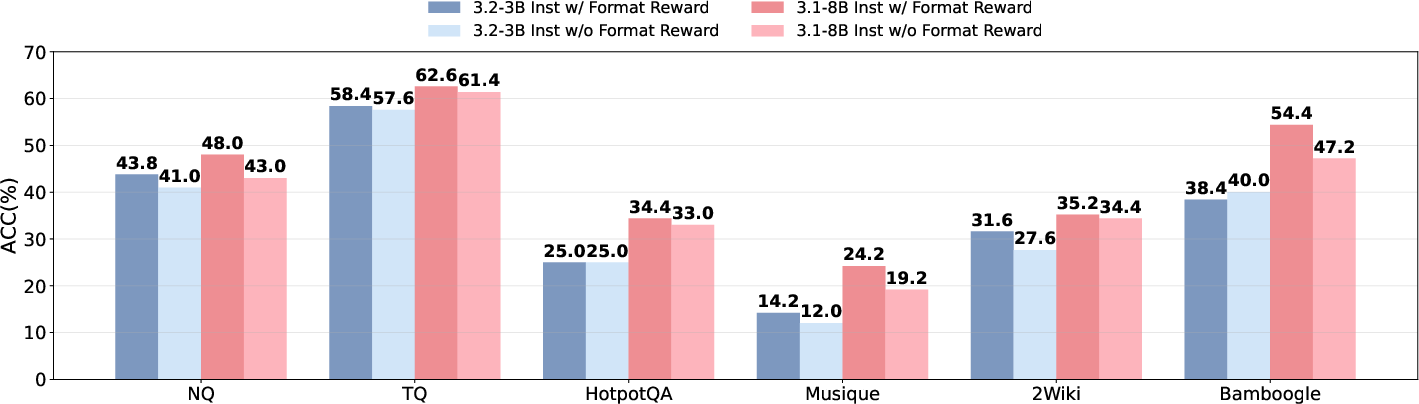

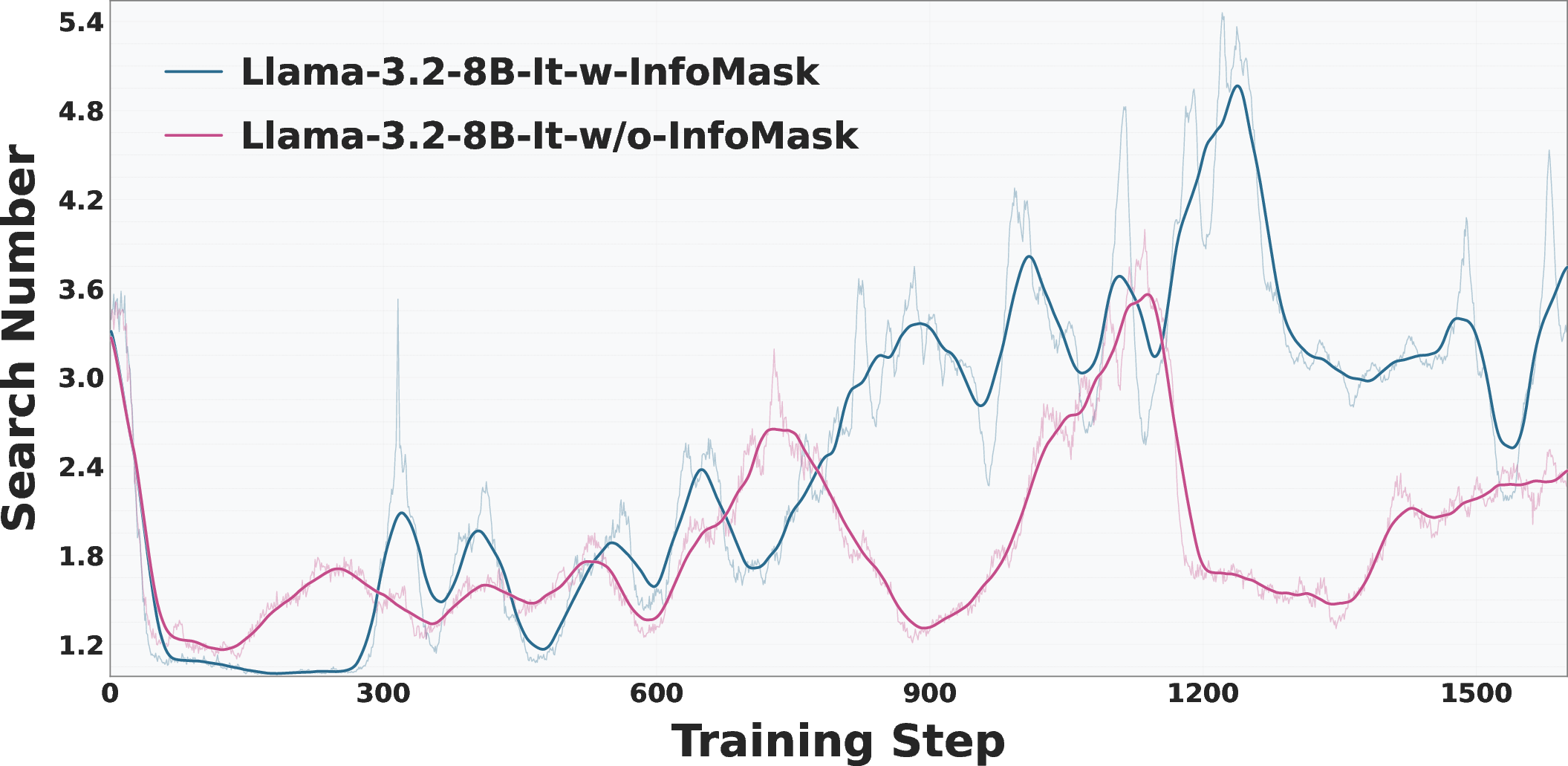

Ablation studies confirm the benefits of information masking and format-based rewards, both of which stabilize training and improve final performance.
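The information mask examined in these ablations can be sketched as a token-level loss mask. This assumes, as in retrieval-augmented RL setups such as Search-R1, that the mask removes tokens inside `<information>...</information>` blocks from the policy-gradient loss, that each token comes with a (start, end) character offset into the trajectory text, and that a plain REINFORCE-style loss stands in for the full clipped GRPO objective. Function names are hypothetical.

```python
import re
from typing import Sequence, Tuple

import numpy as np

INFO_RE = re.compile(r"<information>.*?</information>", re.DOTALL)


def information_mask(text: str, offsets: Sequence[Tuple[int, int]]) -> np.ndarray:
    """1.0 for tokens that count toward the loss, 0.0 for tokens overlapping an <information> block.

    `offsets` holds each token's (start, end) character span in `text`.
    """
    spans = [m.span() for m in INFO_RE.finditer(text)]
    mask = np.ones(len(offsets), dtype=float)
    for i, (start, end) in enumerate(offsets):
        if any(start < span_end and end > span_start for span_start, span_end in spans):
            mask[i] = 0.0
    return mask


def masked_pg_loss(
    logprobs: Sequence[float], advantages: Sequence[float], mask: Sequence[float]
) -> float:
    """REINFORCE-style token loss -A * log p, averaged over unmasked tokens only."""
    lp = np.asarray(logprobs, dtype=float)
    adv = np.asarray(advantages, dtype=float)
    m = np.asarray(mask, dtype=float)
    denom = max(float(m.sum()), 1.0)  # avoid dividing by zero if everything is masked
    return float(-(lp * adv * m).sum() / denom)
```

Masked tokens still appear in the context the model conditions on; in this sketch they simply contribute no gradient.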

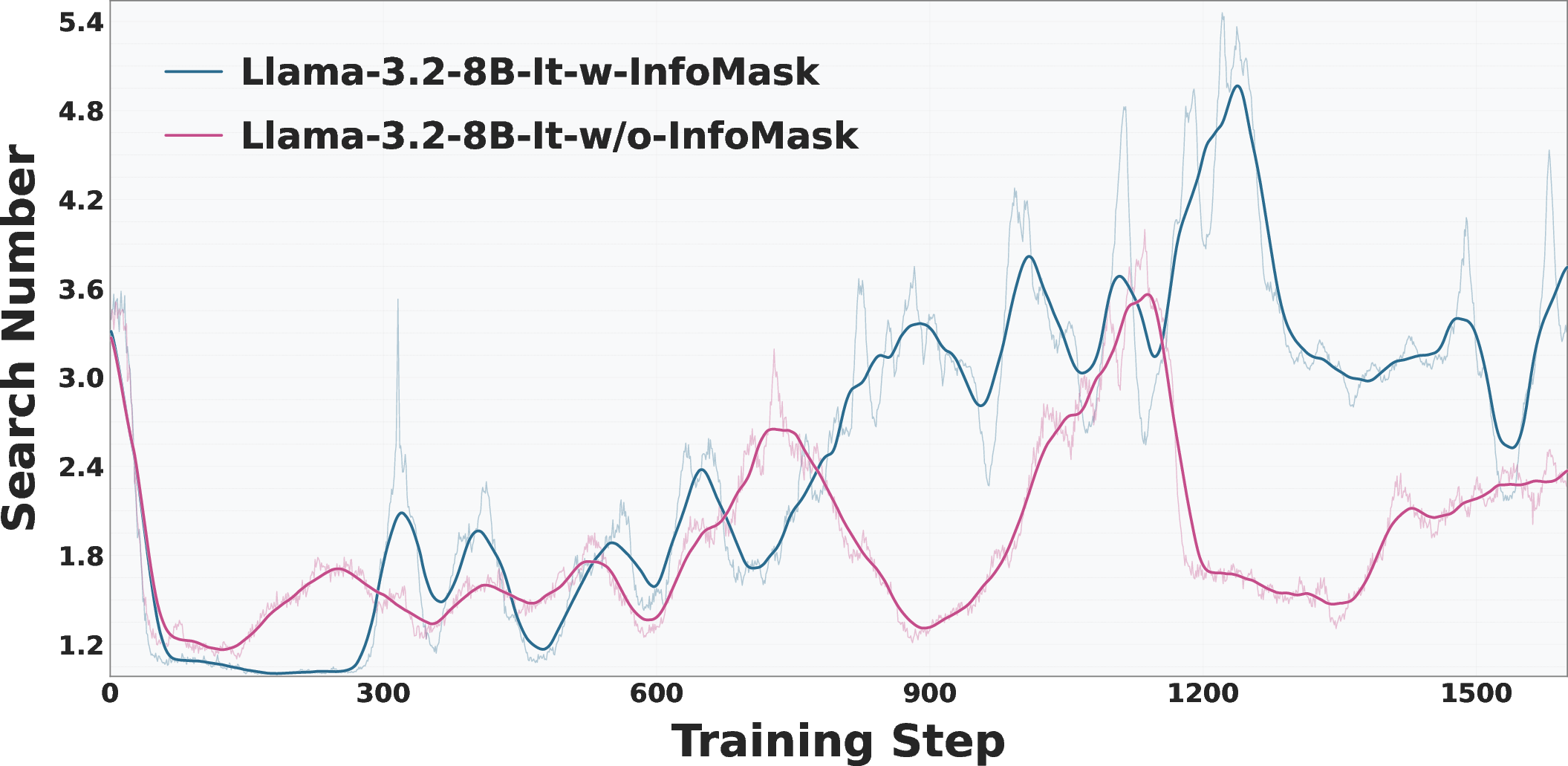

Figure 7: Performance comparison with and without information mask during training.

Figure 8: Impact of format reward on performance, with format reward yielding more stable and coherent reasoning trajectories.

Sim2Real Generalization and Hybrid Search Strategies

SSRL-trained models can be seamlessly adapted to real search scenarios ("sim-to-real transfer") by replacing self-generated information with results from actual search engines at inference time. This integration yields further performance improvements and cost savings, especially when combined with entropy-guided search strategies that trigger external search only when model uncertainty is high.
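The paragraph above describes swapping self-generated information for real search results at inference time, optionally gated by uncertainty. The sketch below shows one way such a loop could look. Everything in it is hypothetical: `generate`, `self_search`, and `real_search` are stand-in callables, the `<search>`/`<information>` tags are an assumed trajectory format, and using the mean token entropy of the segment that produced the query as the uncertainty signal is an illustrative choice, not necessarily the paper's criterion.

```python
import math
import re
from typing import Callable, List, Sequence, Tuple

# Assumed format: a segment ending in <search>query</search> requests information;
# anything else (e.g. an <answer> block) ends the episode.
SEARCH_AT_END = re.compile(r"<search>([^<]*)</search>\s*$")

# generate(context) -> (next segment text, per-token probability distributions)
Generate = Callable[[str], Tuple[str, List[List[float]]]]


def mean_token_entropy(distributions: Sequence[Sequence[float]]) -> float:
    """Average Shannon entropy (in nats) of per-token probability distributions."""
    if not distributions:
        return 0.0
    total = 0.0
    for dist in distributions:
        total -= sum(p * math.log(p) for p in dist if p > 0)
    return total / len(distributions)


def hybrid_rollout(
    question: str,
    generate: Generate,
    self_search: Callable[[str, str], str],
    real_search: Callable[[str], str],
    entropy_threshold: float = 1.0,
    max_turns: int = 4,
) -> Tuple[str, int]:
    """Roll out one trajectory, routing each query to real or self search.

    Returns the full trajectory text and the number of real search calls.
    """
    context = question
    real_calls = 0
    for _ in range(max_turns):
        segment, distributions = generate(context)
        context += segment
        match = SEARCH_AT_END.search(segment)
        if match is None:
            break  # the model answered (or stopped) instead of searching
        query = match.group(1).strip()
        if mean_token_entropy(distributions) > entropy_threshold:
            info = real_search(query)  # uncertain: pay for a real search
            real_calls += 1
        else:
            info = self_search(context, query)  # confident: rely on internal knowledge
        context += f"<information>{info}</information>"
    return context, real_calls
```

Setting `entropy_threshold` to `-math.inf` sends every query to the real engine (full sim-to-real replacement), while `math.inf` keeps the agent purely self-searching; intermediate values trade accuracy against search cost.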

Figure 9: Left: Search count comparison with and without information mask. Right: Group size ablation for GRPO.

Pareto analysis demonstrates that SSRL-based agents achieve superior accuracy with fewer real searches compared to prior baselines, validating the practical utility of the approach.
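Pareto analysis here compares configurations on two axes at once: real search calls per question (lower is better) and accuracy (higher is better). A configuration lies on the frontier if no other configuration is at least as good on both axes and strictly better on one. A minimal sketch of that definition; the function name is illustrative, and any configuration names or numbers fed to it are placeholders, not results from the paper.

```python
from typing import List, Sequence, Tuple

Point = Tuple[str, float, float]  # (configuration name, real searches per question, accuracy)


def pareto_frontier(points: Sequence[Point]) -> List[Point]:
    """Keep points that no other point dominates, sorted by search cost."""
    frontier = []
    for name, cost, acc in points:
        # Dominated: another point has <= cost and >= accuracy, strictly better on one axis.
        dominated = any(
            other_cost <= cost and other_acc >= acc and (other_cost < cost or other_acc > acc)
            for _, other_cost, other_acc in points
        )
        if not dominated:
            frontier.append((name, cost, acc))
    return sorted(frontier, key=lambda p: p[1])
```

For example, with placeholder points ("A", 1.0, 0.5) and ("B", 2.0, 0.4), B drops out because A uses fewer searches and is more accurate.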

Test-Time RL and Algorithmic Compatibility

Unsupervised RL algorithms such as TTRL generalize well to self-search settings, yielding substantial performance gains over GRPO. However, TTRL-trained models may exhibit over-reliance on internal knowledge and reduced adaptability to real environments. SSRL is compatible with a range of RL algorithms (GRPO, PPO, DAPO, KL-Cov, REINFORCE++), with GRPO and DAPO providing the best trade-off between efficiency and stability.
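To make the contrast between supervised and unsupervised rewards concrete, here is a minimal sketch of GRPO's group-relative advantage, $(r_i - \mathrm{mean}(r)) / \mathrm{std}(r)$ over the rollouts sampled for one prompt, fed either by gold-label rewards or by TTRL-style pseudo-rewards in which the group's majority answer stands in for the unknown label. Function names and the `eps` stabilizer are illustrative; implementations differ in details such as population versus sample standard deviation.

```python
from collections import Counter
from typing import List, Sequence

import numpy as np


def grpo_advantages(rewards: Sequence[float], eps: float = 1e-6) -> np.ndarray:
    """Group-relative advantages: standardize rewards within one prompt's group."""
    r = np.asarray(rewards, dtype=float)
    return (r - r.mean()) / (r.std() + eps)  # population std (ddof=0)


def ttrl_pseudo_rewards(answers: Sequence[str]) -> List[float]:
    """TTRL-style rewards: the group's majority answer acts as a pseudo-label."""
    if not answers:
        return []
    majority, _ = Counter(answers).most_common(1)[0]
    return [1.0 if a == majority else 0.0 for a in answers]


def gold_rewards(answers: Sequence[str], gold: str) -> List[float]:
    """Supervised counterpart: reward agreement with the known label."""
    return [1.0 if a == gold else 0.0 for a in answers]
```

Because the pseudo-reward pays for agreement with the group's consensus rather than for correctness, it plausibly reinforces whatever the model already believes, which is one reading of the over-reliance on internal knowledge noted above. A group whose rewards are all equal yields zero advantage for every rollout, one reason group size (Figure 9, right) matters.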

Implications and Future Directions

The SSRL paradigm establishes LLMs as cost-effective, robust simulators of world knowledge for search-driven RL agent training. The findings challenge the assumption that external search is necessary for high-performance QA and agentic reasoning, and demonstrate that internal knowledge can be systematically elicited and refined via RL. The observed scaling laws and sim-to-real transfer capabilities suggest that future LLM agents can be trained almost entirely offline, with minimal reliance on expensive online search APIs.

Theoretical implications include a re-examination of the relationship between reasoning priors and world knowledge priors in LLMs, and the potential for unsupervised RL to further unlock latent capabilities. Practically, SSRL opens new avenues for scalable agent training, hybrid search strategies, and efficient deployment in real-world environments.

Conclusion

SSRL provides a principled framework for leveraging LLMs' internalized world knowledge in search-driven RL tasks, achieving superior performance and efficiency compared to external search-based baselines. The approach enables robust sim-to-real generalization and supports scalable agentic reasoning without reliance on costly external APIs. Future work should explore more sophisticated selection mechanisms for candidate answers, deeper integration of unsupervised RL, and broader applications in embodied and multi-agent systems.