- The paper introduces personalization guidance for text-to-image diffusion models that balances subject and text fidelity using weight interpolation.

- It constructs an unlearned weak model by interpolating between the weights of the pre-trained and fine-tuned models, and reports improved DINO, CLIP-I, and CLIP-T scores.

- Experimental evaluations on diverse datasets and diffusion models demonstrate enhanced performance, efficiency, and broad applicability to personalized image generation.

Enhancing Personalized Text-to-Image Generation through Steered Guidance

The paper "Steering Guidance for Personalized Text-to-Image Diffusion Models" (2508.00319) introduces an approach to improve personalized text-to-image generation with diffusion models. The core problem is the trade-off between aligning with a specific target distribution (subject fidelity) and preserving the broad knowledge and editability of the original pre-trained model (text fidelity). The paper argues that existing guidance methods such as classifier-free guidance (CFG) and AutoGuidance (AG) cannot navigate this trade-off effectively.

Addressing Limitations of Existing Guidance Techniques

The paper highlights the limitations of CFG, which can restrict adaptation to the target distribution, and AG, which compromises text alignment. To overcome these limitations, the paper proposes a "personalization guidance" technique that leverages an unlearned weak model conditioned on a null text prompt. The key innovation lies in dynamically controlling the degree of "unlearning" in the weak model through weight interpolation between the pre-trained and fine-tuned models during inference. This allows explicit steering of the output toward a balanced latent space without introducing additional computational overhead.

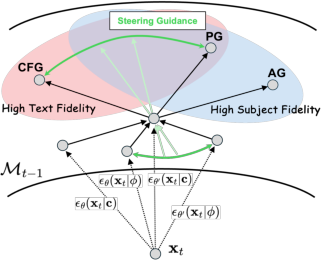

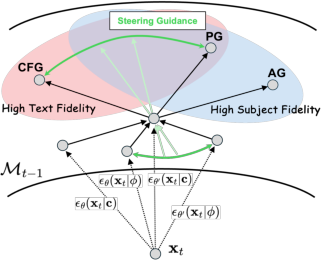

Figure 1: Motivation of Personalization Guidance. Classifier-free guidance (CFG) can interfere with subject fidelity due to its alignment with the text prompt.

The paper motivates the need for personalization guidance by illustrating the shortcomings of CFG and AG (Figure 1). CFG aligns well with text prompts but may compromise subject fidelity, while AG enhances subject fidelity but neglects text prompts. Personalization guidance aims to strike a balance, complementing the limitations of both methods.

Personalization Guidance Methodology

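The proposed method interpolates the weights of the pre-trained model ($\theta$) and the fine-tuned model ($\theta'$) to create a weak model ($\theta_\omega$):

$$\theta_\omega = \omega \cdot \theta' + (1 - \omega) \cdot \theta$$

where $\omega$ is a weight interpolation scale between 0 and 1. This weak model is then used within the guidance framework:

$$\tilde{\epsilon}^{\lambda}_{\theta'}(x_t \mid c) = \epsilon_{\theta_\omega}(x_t \mid \phi) + \lambda \left( \epsilon_{\theta'}(x_t \mid c) - \epsilon_{\theta_\omega}(x_t \mid \phi) \right)$$

where $x_t$ is the noisy input, $c$ is the text prompt, $\phi$ is the null text prompt, and $\lambda$ is the guidance scale.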
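The two formulas above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names `interpolate_weights` and `personalization_guidance` are hypothetical, model weights are represented as a plain dictionary from parameter name to value, and the noise predictions are assumed to have been computed already by the fine-tuned and weak models. The values can be floats, NumPy arrays, or framework tensors, since only scalar multiplication and addition are used.

```python
from typing import Dict, TypeVar

# Any type supporting scalar multiplication and addition
# (float, NumPy array, framework tensor). Hypothetical helper type.
T = TypeVar("T")


def interpolate_weights(pretrained: Dict[str, T],
                        finetuned: Dict[str, T],
                        omega: float) -> Dict[str, T]:
    """Build the weak model: theta_omega = omega * theta' + (1 - omega) * theta."""
    if not 0.0 <= omega <= 1.0:
        raise ValueError("omega must lie in [0, 1]")
    if pretrained.keys() != finetuned.keys():
        raise ValueError("both models must have the same parameter names")
    return {
        name: omega * finetuned[name] + (1.0 - omega) * pretrained[name]
        for name in pretrained
    }


def personalization_guidance(eps_finetuned_cond: T,
                             eps_weak_null: T,
                             lam: float) -> T:
    """Combine predictions: eps_weak + lam * (eps_finetuned - eps_weak).

    eps_finetuned_cond: fine-tuned model's prediction given the text prompt c.
    eps_weak_null: weak model's prediction given the null prompt.
    """
    return eps_weak_null + lam * (eps_finetuned_cond - eps_weak_null)


# Toy usage with scalar "weights" and scalar "predictions".
weak = interpolate_weights({"w": 1.0, "b": 0.0}, {"w": 3.0, "b": 2.0}, omega=0.25)
guided = personalization_guidance(eps_finetuned_cond=2.0, eps_weak_null=1.0, lam=3.0)
```

In this toy run, `weak` is `{"w": 1.5, "b": 0.5}` and `guided` is `4.0`. In a real pipeline the interpolation would be applied once per chosen ω to the model's parameters (or to the LoRA contribution, depending on how the fine-tuned weights are stored), which is an implementation detail the summary does not specify.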

Figure 2: Comparison between Classifier-Free Guidance (CFG), AutoGuidance (AG), and our Personalization Guidance (PG), with the band conceptually representing the noisy data manifold.

Figure 2 conceptually illustrates how personalization guidance steers the output toward an optimal space between CFG and AG by leveraging weight interpolation. This contrasts with CFG and AG, which operate at the extremes of either prioritizing text fidelity or subject fidelity.
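To make the role of the interpolation scale concrete, it helps to substitute its two endpoints into the formulas above. The following is a direct algebraic consequence of those formulas rather than a result quoted from the paper:

$$
\begin{aligned}
\omega = 1:&\quad \theta_\omega = \theta' \;\Rightarrow\; \tilde{\epsilon}^{\lambda}_{\theta'}(x_t \mid c) = \epsilon_{\theta'}(x_t \mid \phi) + \lambda \left( \epsilon_{\theta'}(x_t \mid c) - \epsilon_{\theta'}(x_t \mid \phi) \right) \\
\omega = 0:&\quad \theta_\omega = \theta \;\Rightarrow\; \tilde{\epsilon}^{\lambda}_{\theta'}(x_t \mid c) = \epsilon_{\theta}(x_t \mid \phi) + \lambda \left( \epsilon_{\theta'}(x_t \mid c) - \epsilon_{\theta}(x_t \mid \phi) \right)
\end{aligned}
$$

At ω = 1 the weak model is the fine-tuned model itself, so the expression has the form of standard CFG applied to the fine-tuned model. At ω = 0 the weak model is the pre-trained model under the null prompt, so the guidance direction also contains the difference between the fine-tuned and pre-trained models. Intermediate values of ω move between these two cases.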

Experimental Validation and Results

The paper presents a comprehensive experimental evaluation using the ViCo dataset, which includes 16 concepts and 31 text prompts. The experiments are conducted on Stable Diffusion 1.5, Stable Diffusion 2.1, and SANA, fine-tuned using DreamBooth-LoRA, DB-LoRA with Textual Inversion, and ClassDiffusion. The results demonstrate that personalization guidance significantly enhances subject fidelity (DINO and CLIP-I scores) while maintaining text fidelity (CLIP-T score). The ablation studies on ω show that adjusting the weight interpolation scale allows for fine-grained control over the trade-off between subject and text fidelity.
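The summary does not define the three metrics. In the personalization literature they are commonly computed as cosine similarities between embeddings: DINO and CLIP-I compare generated images with reference images of the subject, and CLIP-T compares generated images with the text prompt. The sketch below assumes the embeddings have already been extracted by the relevant encoders; the function names are hypothetical and the toy vectors are made up for illustration.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("embeddings must be non-zero")
    return dot / (norm_a * norm_b)


def mean_pairwise_similarity(generated: Sequence[Sequence[float]],
                             references: Sequence[Sequence[float]]) -> float:
    """Average cosine similarity over all (generated, reference) pairs."""
    scores = [cosine_similarity(g, r) for g in generated for r in references]
    return sum(scores) / len(scores)


# Toy usage: one generated embedding against two reference embeddings.
toy_score = mean_pairwise_similarity([[1.0, 0.0]], [[1.0, 0.0], [0.0, 1.0]])
```

With image embeddings on both sides this corresponds to a subject-fidelity score; with image embeddings as `generated` and a single prompt embedding as `references` it corresponds to a text-fidelity score. The exact encoders and averaging protocol used in the paper are not stated in this summary.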

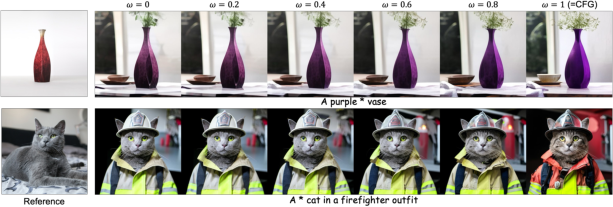

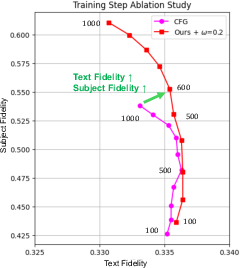

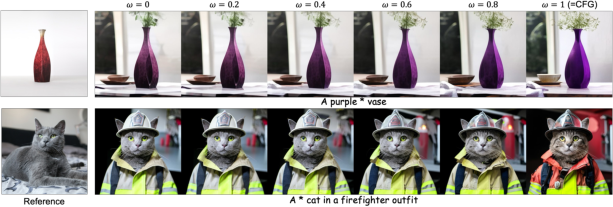

Figure 3: Ablation study on the weight interpolation scale ω∈[0.0,1.0] with measurements taken at intervals of 0.1. Here, we use DreamBooth (DB) LoRA based on SD 1.5, SD 2.1, and SANA.

Figure 3 presents an ablation study on the weight interpolation scale, showing that adjusting ω tunes the balance between subject fidelity and text fidelity.
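A sweep of this kind can be set up as follows. This is a hedged sketch: `scale_grid` and `sweep` are hypothetical helpers, and `evaluate` stands in for whatever routine generates images at a given scale and scores them, which the summary does not describe. The grid is built from integer steps to avoid accumulating floating-point error.

```python
from typing import Callable, Dict, List


def scale_grid(start: float = 0.0, stop: float = 1.0, step: float = 0.1) -> List[float]:
    """Evenly spaced scale values from start to stop, both inclusive."""
    n_steps = round((stop - start) / step)
    # Rounding removes artifacts such as 0.30000000000000004.
    return [round(start + i * step, 10) for i in range(n_steps + 1)]


def sweep(evaluate: Callable[[float], float], grid: List[float]) -> Dict[float, float]:
    """Run a (hypothetical) evaluation routine at every scale value in the grid."""
    return {value: evaluate(value) for value in grid}


omega_values = scale_grid()                 # omega in [0.0, 1.0], step 0.1
lambda_values = scale_grid(2.5, 5.0, 0.5)   # guidance scale range used in Figure 7

# Placeholder evaluation that just echoes the scale; a real one would return a metric.
results = sweep(lambda omega: omega, omega_values)
```

Here `omega_values` contains 11 values and `lambda_values` contains 6, matching the intervals stated in the captions of Figures 3 and 7.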

Comparative Analysis with Baselines

The paper compares personalization guidance with CFG, self-attention guidance (SAG), and AG. Quantitative results show that personalization guidance outperforms these baselines in subject fidelity while maintaining comparable text fidelity. Qualitative results likewise show that it preserves subject details better while generating high-quality images.

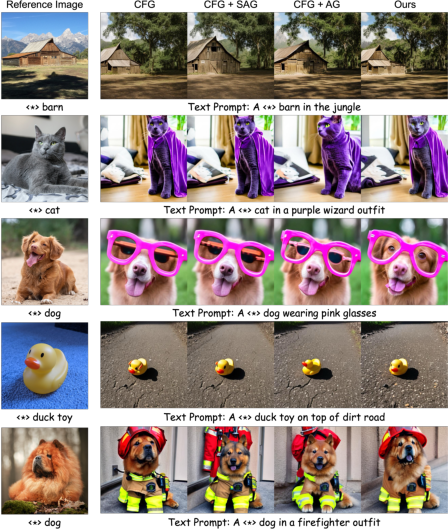

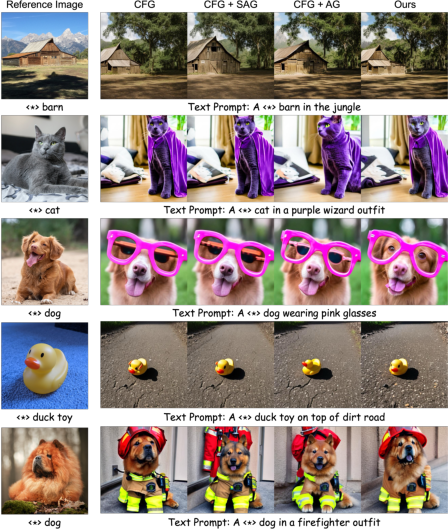

Figure 4: Comparison with other guidance techniques, including without guidance, CFG, CFG+SAG, and CFG+AG. These images are generated by the fine-tuned SD 2.1 using DB-LoRA and ClassDiffusion.

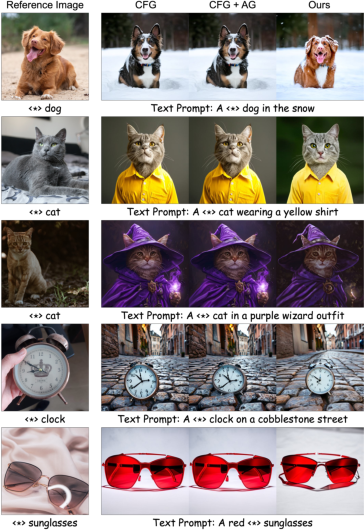

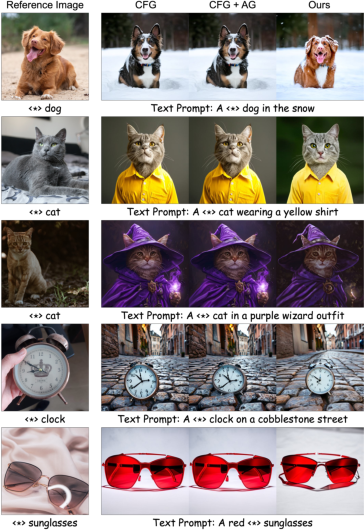

Figure 5: Comparison with other guidance techniques. These images are generated by fine-tuned SANA using DB-LoRA.

Figures 4 and 5 provide visual comparisons, highlighting the enhanced subject fidelity achieved by personalization guidance compared to CFG, SAG, and AG.

User Study and Subjective Evaluation

A user study was conducted to assess subjective preferences for subject and text fidelity. The results indicate that users significantly preferred images generated using personalization guidance over those generated using baseline methods.

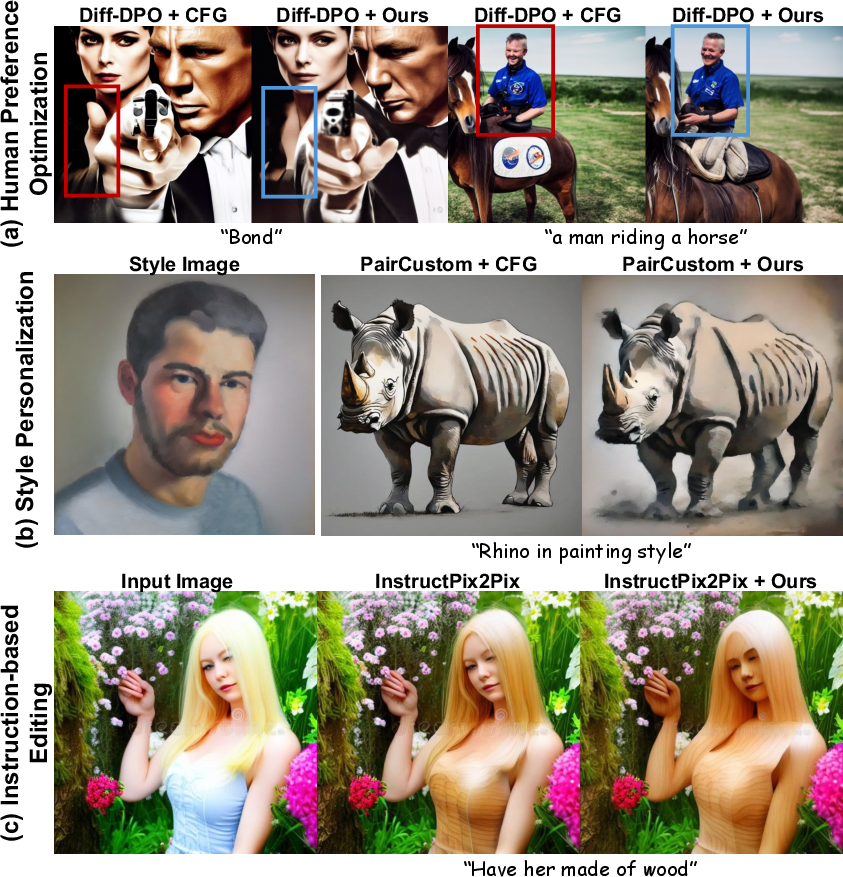

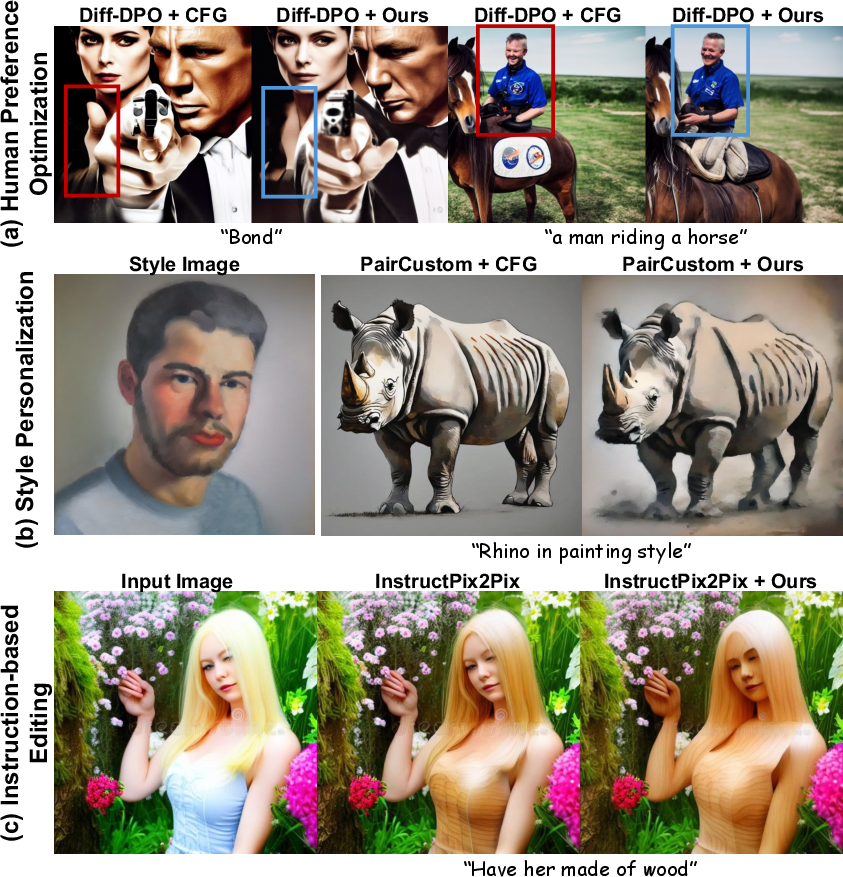

Generalization to Fine-Tuned Models

The paper explores the generalizability of personalization guidance to other fine-tuned diffusion models beyond personalization tasks, including human preference optimization (Diffusion-DPO), style personalization (PairCustom), and instruction-based editing (InstructPix2Pix). The results demonstrate that personalization guidance improves performance across these diverse tasks, suggesting its broad applicability.

Figure 6: Generated images by changing ω, where SANA with DB-LoRA is used.

Figure 6 visualizes the impact of ω on generation quality, demonstrating the ability to control the degree of subject and text fidelity.

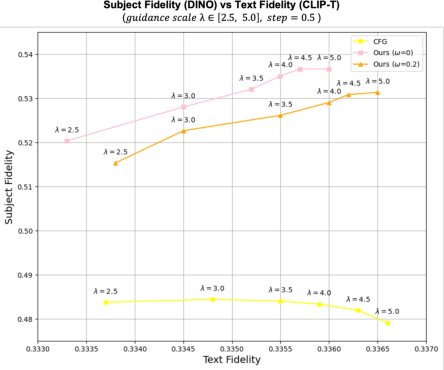

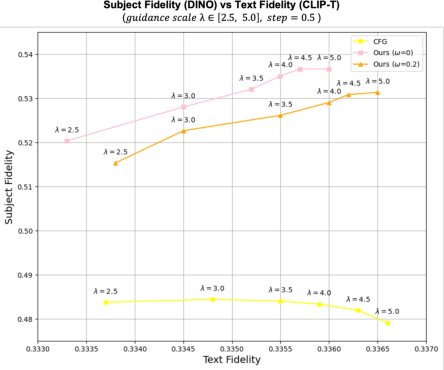

Figure 7: Ablation study on the guidance scale λ∈[2.5,5.0], measuring performance at 0.5 intervals using DB-LoRA based on SANA.

Figure 7 presents an ablation study on the guidance scale, showing that personalization guidance improves both subject and text fidelity compared to CFG, regardless of the guidance scale.

Figure 8: Qualitative results of our guidance across diverse tasks.

Figure 8 shows qualitative results across diverse tasks, demonstrating the broad applicability of personalization guidance.

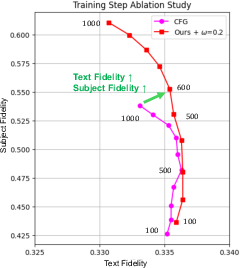

Figure 9: Ablation study on the number of training steps. Notably, our method achieves superior subject fidelity with fewer steps, demonstrating enhanced efficiency.

Figure 9 illustrates that personalization guidance achieves superior subject fidelity with fewer training steps, demonstrating enhanced efficiency.

Figure 10: Comparison with other guidance techniques. These images are generated by fine-tuned SD 2.1 using DB-LoRA and ClassDiffusion.

Figure 11: Comparison with other guidance techniques. These images are generated by fine-tuned SANA.

Figures 10 and 11 provide additional visual comparisons, further supporting the effectiveness of personalization guidance.

Conclusion

The paper addresses the trade-off between subject and text fidelity in personalized text-to-image generation. The proposed personalization guidance technique, based on weight interpolation and an unlearned weak model, offers a simple way to steer the output toward a balanced latent space. The experimental results and user study support the method's performance gains and its applicability beyond subject personalization. The ability to adjust the degree of adaptation through weight interpolation at inference is a practical advantage over existing methods, and it points toward more controllable, higher-quality personalized generative models.