A Detailed Analysis of GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models

"GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models" presents a thorough exploration of employing diffusion models for text-conditional image synthesis and editing. The paper evaluates two primary guidance strategies: CLIP guidance and classifier-free guidance, ultimately favoring the latter for its superior performance in producing photorealistic and semantically accurate images. This essay provides an expert-level overview of the core methodologies, results, and implications presented in the paper.

Diffusion Models in Image Synthesis

Gaussian diffusion models, together with closely related score-matching approaches, have shown strong results in image generation. The authors build on the foundational work of \citet{dickstein,scorematching,ddpm}, in which Gaussian noise is progressively added to a data sample and a model is trained to reverse that process, so that new images can be generated by iteratively denoising from pure noise. GLIDE augments this process with classifier-free guidance, proposed by \citet{uncond}, which removes the need to train a separate classifier.
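The noising half of this process can be sketched in a few lines. The snippet below is a minimal illustration, not the paper's code: the linear schedule, its endpoints, the 1000-step horizon, and the helper names `linear_beta_schedule` and `q_sample` are all assumptions chosen for readability.

```python
import numpy as np

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances. The linear shape and endpoints are assumptions."""
    return np.linspace(beta_start, beta_end, num_steps)

def q_sample(x0, t, alphas_cumprod, rng):
    """Draw x_t ~ N(sqrt(abar_t) * x0, (1 - abar_t) * I) in a single step."""
    noise = rng.standard_normal(x0.shape)
    abar_t = alphas_cumprod[t]
    x_t = np.sqrt(abar_t) * x0 + np.sqrt(1.0 - abar_t) * noise
    return x_t, noise

betas = linear_beta_schedule(1000)
# Cumulative product of (1 - beta): the fraction of signal variance left at step t.
alphas_cumprod = np.cumprod(1.0 - betas)

rng = np.random.default_rng(0)
x0 = rng.uniform(-1.0, 1.0, size=(3, 8, 8))  # toy "image" scaled to [-1, 1]
x_early, _ = q_sample(x0, 10, alphas_cumprod, rng)   # still close to x0
x_late, _ = q_sample(x0, 999, alphas_cumprod, rng)   # close to pure noise
```

A denoising network is then trained to predict `noise` from `x_t` and `t`; generation runs the learned reverse steps starting from a pure-noise sample.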

The paper demonstrates that a 3.5 billion parameter diffusion model conditioned on text descriptions can produce photorealistic samples. A text encoder supplies a representation of the caption to the denoising network throughout the denoising process, so the generated images follow the provided prompt closely.

Guidance Strategies: CLIP vs. Classifier-Free

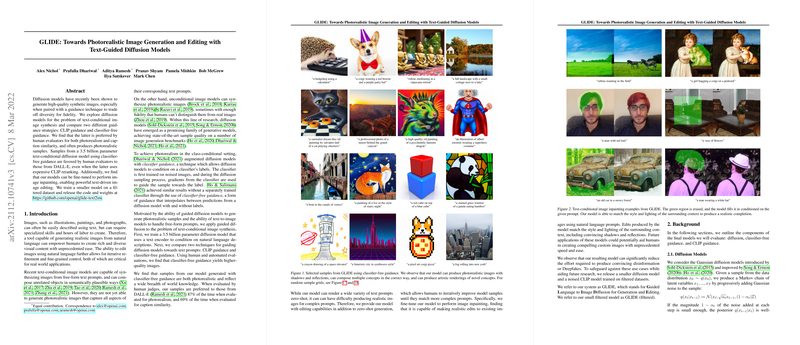

Two guidance strategies are compared: CLIP guidance and classifier-free guidance. CLIP, introduced by \citet{clip}, is trained with a contrastive loss that aligns image and text embeddings. CLIP guidance uses the gradient of the image-caption similarity with respect to the noisy image to steer each denoising step toward the prompt. In the paper's comparisons, however, CLIP guidance falls short of classifier-free guidance in photorealism.
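As a rough guide to how CLIP steers sampling, the guided reverse-process mean can be written as follows. The notation is an assumption made for this summary: $f$ and $g$ denote the CLIP image and caption encoders, $\mu_\theta$ and $\Sigma_\theta$ the mean and variance predicted by the diffusion model, $c$ the caption, and $s$ a guidance scale.

```latex
\hat{\mu}_\theta(x_t \mid c)
  = \mu_\theta(x_t \mid c)
  + s \cdot \Sigma_\theta(x_t \mid c)\,
    \nabla_{x_t} \bigl( f(x_t) \cdot g(c) \bigr)
```

The mean of each denoising step is shifted in the direction that increases the similarity between the current noisy image and the caption, and larger values of $s$ push harder toward the prompt.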

Classifier-free guidance uses only the diffusion model itself: the same network predicts the noise with and without the text condition, and sampling extrapolates from the unconditional prediction in the direction of the conditional one. This simplifies guidance and draws on the model's own knowledge of images and text, without relying on a separately trained classifier.
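The combination step of classifier-free guidance is small enough to show directly. This is a minimal sketch under stated assumptions: the function name, the toy three-element "predictions", and the scale of 3.0 are illustrative and not taken from the paper; in practice both predictions come from the same network, evaluated once with the caption and once with an empty caption.

```python
import numpy as np

def classifier_free_guidance(eps_cond, eps_uncond, guidance_scale):
    """Combine conditional and unconditional noise predictions.

    guidance_scale = 0 gives the unconditional prediction, 1 gives the
    conditional one, and values above 1 extrapolate past the conditional
    prediction, away from the unconditional one.
    """
    return eps_uncond + guidance_scale * (eps_cond - eps_uncond)

# Toy stand-ins for the two outputs of the denoising network.
eps_uncond = np.array([0.0, 1.0, -1.0])
eps_cond = np.array([1.0, 1.0, 0.0])

guided = classifier_free_guidance(eps_cond, eps_uncond, guidance_scale=3.0)
print(guided)  # [3. 1. 2.]
```

Raising the scale generally trades sample diversity for fidelity to the prompt, which is the trade-off the paper's quantitative analysis examines.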

Human evaluators consistently preferred images generated with classifier-free guidance over those produced with CLIP guidance, judging them higher on both photorealism and semantic alignment with the text prompts. Because this preference comes from direct human comparisons rather than automated metrics alone, it is the paper's main evidence for the efficacy of classifier-free guidance.

Text-Guided Image Editing and Inpainting

Beyond generation, GLIDE supports text-driven image editing. Once the diffusion model has been fine-tuned for inpainting, it can fill a masked region of an existing image according to a text prompt, adding new elements or modifying existing ones so that they fit the surrounding context. Applying such edits repeatedly allows iterative refinement of an image, which is useful in professional and creative workflows.

The paper's examples show that GLIDE can match the style and lighting of the surrounding image during editing tasks, producing convincing modifications that blend naturally into the original context. Such capabilities point towards potential applications in content creation, design, and other fields that benefit from high-quality image manipulation.
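One way to picture the inpainting setup described above is as extra conditioning channels stacked onto the network input. The sketch below is a hypothetical illustration only: the helper name `make_inpainting_input`, the channel ordering, and the mask convention (1 for known pixels, 0 for the region to regenerate) are assumptions, not details confirmed by this summary.

```python
import numpy as np

def make_inpainting_input(x_t, image, mask):
    """Stack the noisy sample with the masked original image and the mask.

    x_t:   (3, H, W) noisy sample at the current diffusion step
    image: (3, H, W) original image scaled to [-1, 1]
    mask:  (1, H, W) with 1 = known pixel, 0 = region to regenerate
    """
    masked_image = image * mask  # erase the region the model must fill in
    return np.concatenate([x_t, masked_image, mask], axis=0)  # (7, H, W)

rng = np.random.default_rng(0)
image = rng.uniform(-1.0, 1.0, size=(3, 8, 8))
x_t = rng.standard_normal((3, 8, 8))

mask = np.ones((1, 8, 8))
mask[:, 2:6, 2:6] = 0.0  # square hole to be filled from the text prompt

model_input = make_inpainting_input(x_t, image, mask)
print(model_input.shape)  # (7, 8, 8)
```

Because the network sees the untouched pixels at every step, it can condition the filled region on the surrounding content as well as on the text prompt.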

Quantitative and Qualitative Analysis

Quantitatively, GLIDE is competitive with established models such as DALL-E. In experiments on MS-COCO, GLIDE achieves favorable FID scores even in the zero-shot setting, that is, without being trained explicitly on MS-COCO. The paper also examines the trade-off between fidelity and diversity of the generated images and finds that classifier-free guidance comes close to the optimal trade-off.

Qualitatively, the paper shows diverse images generated from a wide variety of prompts, including complex multi-object scenes and artistically stylized renditions. The visual examples in the supplementary materials support the model’s ability to generalize and to compose intricate, novel scenes coherently.

Safety and Ethical Considerations

The authors analyze the potential misuse risks associated with GLIDE. The ease of generating photorealistic images raises concerns about disinformation and deepfakes. To mitigate these risks, a smaller version of the diffusion model, GLIDE (filtered), is trained on a filtered dataset from which sensitive content, such as images of humans, violence, and hate symbols, has been removed. This release strategy aims to balance advancing research with safeguarding against misuse.

Future Directions

The research points towards several future developments. Its techniques and findings open avenues for making diffusion models more efficient, which could eventually enable real-time applications. Interdisciplinary research on ethical implications and bias mitigation remains imperative as these models are integrated into public-facing applications. Continued improvements in conditional generation and further exploration of guidance techniques will likely drive future advances in the field.

In conclusion, "GLIDE: Towards Photorealistic Image Generation and Editing with Text-Guided Diffusion Models" makes significant strides in text-conditional image synthesis and editing. Its methodological innovations, coupled with rigorous evaluations, pave the way for broader applications and further research in diffusion-based generative models. The balance between technical prowess and ethical considerations underscores the importance of responsible AI development in the pursuit of advanced capabilities.