Seed-Prover: Deep and Broad Reasoning for Automated Theorem Proving (2507.23726v2)

Abstract: LLMs have demonstrated strong mathematical reasoning abilities by leveraging reinforcement learning with long chain-of-thought, yet they continue to struggle with theorem proving due to the lack of clear supervision signals when solely using natural language. Dedicated domain-specific languages like Lean provide clear supervision via formal verification of proofs, enabling effective training through reinforcement learning. In this work, we propose Seed-Prover, a lemma-style whole-proof reasoning model. Seed-Prover can iteratively refine its proof based on Lean feedback, proved lemmas, and self-summarization. To solve IMO-level contest problems, we design three test-time inference strategies that enable both deep and broad reasoning. Seed-Prover proves 78.1% of formalized past IMO problems, saturates MiniF2F, and achieves over 50% on PutnamBench, outperforming the previous state-of-the-art by a large margin. To address the lack of geometry support in Lean, we introduce a geometry reasoning engine Seed-Geometry, which outperforms previous formal geometry engines. We use these two systems to participate in IMO 2025 and fully prove 5 out of 6 problems. This work represents a significant advancement in automated mathematical reasoning, demonstrating the effectiveness of formal verification with long chain-of-thought reasoning.

Summary

- The paper introduces a lemma-centric, iterative proof generation method that uses formal Lean verification to achieve state-of-the-art performance on mathematical benchmarks.

- It trains the model with multi-stage reinforcement learning and diversified prompts, and at inference time refines proofs iteratively using Lean feedback, proved lemmas, and self-summarization.

- The integration of Seed-Geometry overcomes Lean’s geometry limitations, providing a neuro-symbolic engine that speeds up proof generation and enhances domain coverage.

Introduction and Motivation

The paper introduces Seed-Prover, a formal reasoning system that leverages LLMs for automated theorem proving (ATP) in Lean 4, with a particular focus on deep and broad mathematical reasoning. The work addresses the limitations of natural language-based LLMs in mathematical domains, where the absence of verifiable supervision signals impedes reinforcement learning (RL) and reliable proof generation. By utilizing formal languages such as Lean, Seed-Prover enables precise, compiler-verified feedback, facilitating effective RL and scalable proof search. The system is complemented by Seed-Geometry, a neuro-symbolic geometry engine designed to overcome Lean's historical deficiencies in geometry support.

System Architecture and Methodology

Lemma-Style Whole-Proof Generation

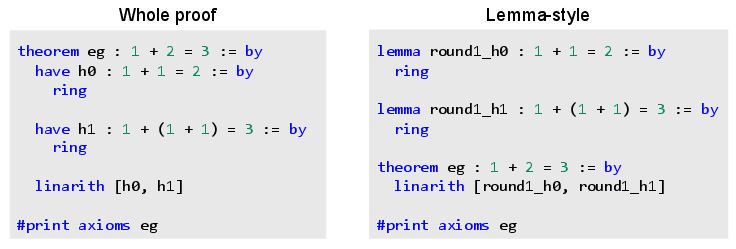

Seed-Prover departs from prior step-level and whole-proof LLM provers by adopting a lemma-centric proof paradigm. Instead of generating a monolithic proof, the model first proposes and proves intermediate lemmas, which are then composed to construct the main theorem. This modular approach enables independent verification, reuse, and combination of lemmas across different inference trajectories, enhancing both proof robustness and search efficiency.

Figure 1: An example of whole proof and lemma-style proof in Lean 4.
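
To make the contrast concrete, here is a small illustrative pair in Lean 4. It is not taken from the paper: the statement, the lemma names, and the choice of tactics are assumptions, and it presumes a project with Mathlib available. The first theorem is proved in one block; the second version first states two standalone lemmas and then composes them.

```lean
import Mathlib

-- Whole-proof style: one monolithic proof of the main statement.
theorem two_mul_le_sq_add_sq (a b : ℝ) : 2 * a * b ≤ a ^ 2 + b ^ 2 := by
  nlinarith [sq_nonneg (a - b)]

-- Lemma style: small facts are stated and checked independently...
lemma sq_sub_nonneg (a b : ℝ) : 0 ≤ (a - b) ^ 2 :=
  sq_nonneg (a - b)

lemma sq_sub_expand (a b : ℝ) : (a - b) ^ 2 = a ^ 2 + b ^ 2 - 2 * a * b := by
  ring

-- ...and the main theorem only combines them.
theorem two_mul_le_sq_add_sq' (a b : ℝ) : 2 * a * b ≤ a ^ 2 + b ^ 2 := by
  have h_nonneg := sq_sub_nonneg a b
  have h_expand := sq_sub_expand a b
  linarith
```

Because each lemma compiles on its own, a failed main proof does not discard the lemmas that were already verified.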

The lemma pool, a central data structure, stores all generated lemmas, their proofs, dependencies, and difficulty metrics. This facilitates retrieval, sampling, and recombination during iterative proof refinement.
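
A minimal sketch of such a pool is shown below. The paper does not publish its data structure, so the class names, the fields, and the use of proof rate as a difficulty proxy are all assumptions made for illustration.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Lemma:
    """One pool entry; every field name here is illustrative."""
    name: str
    statement: str                      # Lean statement text
    proof: Optional[str] = None         # set once Lean accepts a proof
    dependencies: List[str] = field(default_factory=list)
    attempts: int = 0                   # proof attempts so far
    successes: int = 0                  # attempts that Lean accepted

    @property
    def proof_rate(self) -> float:
        """Fraction of successful attempts; a crude difficulty proxy."""
        return self.successes / self.attempts if self.attempts else 0.0


class LemmaPool:
    def __init__(self) -> None:
        self._lemmas: Dict[str, Lemma] = {}

    def add(self, lemma: Lemma) -> None:
        # Keep the first copy if the same lemma name is proposed twice.
        self._lemmas.setdefault(lemma.name, lemma)

    def record_attempt(self, name: str, proof: Optional[str]) -> None:
        """Log one attempt; `proof` is None when Lean rejected it."""
        lemma = self._lemmas[name]
        lemma.attempts += 1
        if proof is not None:
            lemma.successes += 1
            if lemma.proof is None:
                lemma.proof = proof

    def proved(self) -> List[Lemma]:
        return [l for l in self._lemmas.values() if l.proof is not None]

    def hardest_proved(self, k: int) -> List[Lemma]:
        """Proved lemmas, lowest proof rate (hardest) first."""
        return sorted(self.proved(), key=lambda l: l.proof_rate)[:k]

    def sample_proved(self, k: int, seed: Optional[int] = None) -> List[Lemma]:
        """Uniform sample of proved lemmas to place in a prompt."""
        rng = random.Random(seed)
        candidates = self.proved()
        return rng.sample(candidates, min(k, len(candidates)))
```

Keying the pool by lemma name keeps retrieval cheap; a real system would more likely key on a normalized statement so that differently named duplicates collapse into one entry.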

Iterative Proof Refinement and Conjecture Proposing

Seed-Prover employs an iterative refinement loop, where proof attempts are repeatedly updated based on Lean compiler feedback, previously proved lemmas, and self-summarization. The system is trained to propose conjectures (potentially useful properties or subgoals) before attempting the main proof, enabling broad exploration of the problem space. This conjecture pool is dynamically expanded and filtered based on proof success rates and semantic relevance.
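
The refinement loop can be sketched as follows. This is a schematic under stated assumptions rather than the authors' implementation: `generate`, `verify`, and `summarize` are hypothetical callables standing in for the model, the Lean compiler, and the self-summarization step, and the toy versions exist only so the loop can run without either.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical interfaces standing in for the model and the Lean compiler.
Generate = Callable[[str, List[str], str, str], str]   # -> candidate proof
Verify = Callable[[str], Tuple[bool, str]]             # -> (accepted, feedback)
Summarize = Callable[[str, str], str]                  # -> short summary


def refine(
    problem: str,
    generate: Generate,
    verify: Verify,
    summarize: Summarize,
    proved_lemmas: List[str],
    max_rounds: int = 8,
) -> Optional[str]:
    """Regenerate a proof until the verifier accepts it or rounds run out."""
    feedback, summary = "", ""
    for _ in range(max_rounds):
        # Each round sees the last compiler feedback and the last summary.
        attempt = generate(problem, proved_lemmas, feedback, summary)
        accepted, feedback = verify(attempt)
        if accepted:
            return attempt
        summary = summarize(attempt, feedback)
    return None


# Toy stand-ins so the loop can be exercised without a model or Lean.
def toy_generate(problem: str, lemmas: List[str], feedback: str, summary: str) -> str:
    return "fixed proof" if feedback else "first draft"


def toy_verify(attempt: str) -> Tuple[bool, str]:
    ok = attempt == "fixed proof"
    return ok, "" if ok else "error: unsolved goals"


def toy_summarize(attempt: str, feedback: str) -> str:
    return f"'{attempt}' failed with: {feedback}"
```

With the toy stand-ins, `refine("p", toy_generate, toy_verify, toy_summarize, [])` fails on the first draft, feeds the error back, and returns the second attempt.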

Multi-Stage RL Training and Diverse Prompting

The model is trained via multi-stage, multi-task RL, using VAPO as the RL backbone. The reward structure is binary, based on successful formal proof verification, with additional penalties to enforce lemma-first proof structure. The training corpus is a mixture of open-source and in-house formalized problems, augmented with easier variants generated by the model itself. Prompts are diversified to include natural language hints, failed attempts, summaries, and Lean feedback, enhancing the model's adaptability and robustness.
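
As a rough illustration of this reward shape, the sketch below combines a binary verification reward with a formatting penalty. The penalty magnitude, the function names, and the line-based check for "lemmas before the main theorem" are assumptions; the summary does not give the actual values or checks.

```python
def lemmas_precede_theorem(lean_source: str) -> bool:
    """True if at least one `lemma` appears and none follows a `theorem`."""
    seen_lemma = False
    seen_theorem = False
    for line in lean_source.splitlines():
        stripped = line.lstrip()
        if stripped.startswith("theorem "):
            seen_theorem = True
        elif stripped.startswith("lemma "):
            if seen_theorem:
                return False  # a lemma declared after the main theorem
            seen_lemma = True
    return seen_lemma and seen_theorem


def reward(lean_accepts: bool, lean_source: str, format_penalty: float = 0.1) -> float:
    """Binary verification reward minus an assumed formatting penalty."""
    base = 1.0 if lean_accepts else 0.0
    penalty = 0.0 if lemmas_precede_theorem(lean_source) else format_penalty
    return base - penalty
```

Keeping the penalty small relative to the binary term means a verified proof always scores above an unverified one, whatever its layout.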

Test-Time Inference Strategies

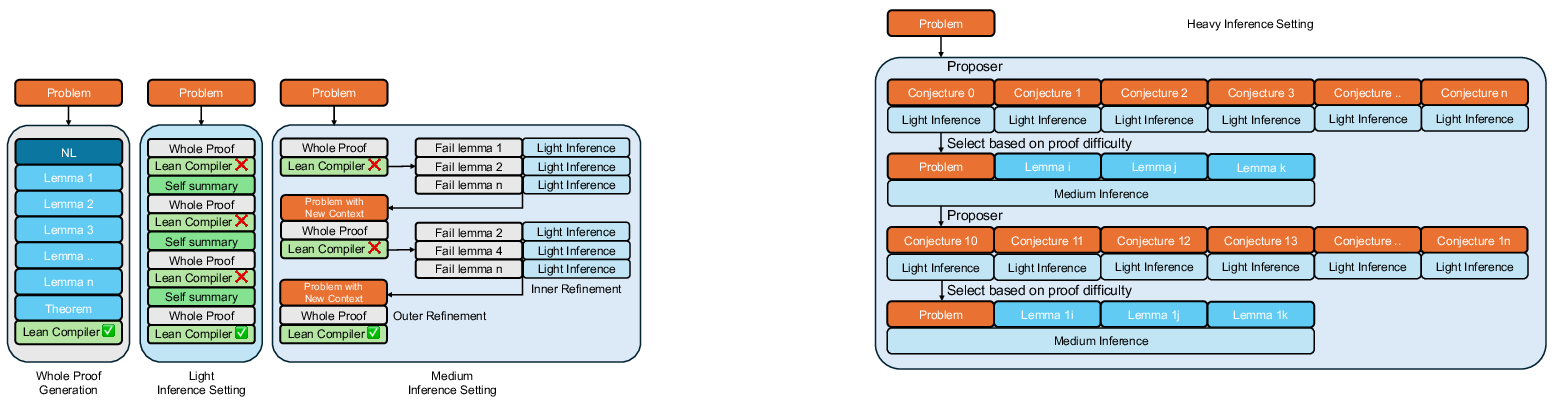

Seed-Prover introduces a three-tiered inference strategy to balance depth and breadth of reasoning, adapting to problem difficulty and available computational budget:

- Light Setting: Iterative refinement of single-pass proofs, leveraging Lean feedback and self-summarization. Each attempt is refined up to 8–16 times, yielding significant improvements over naive Pass@k sampling.

- Medium Setting: Nested refinement, where difficult lemmas generated during outer refinement are themselves refined using the light setting. This enables handling of lengthy, structurally complex proofs.

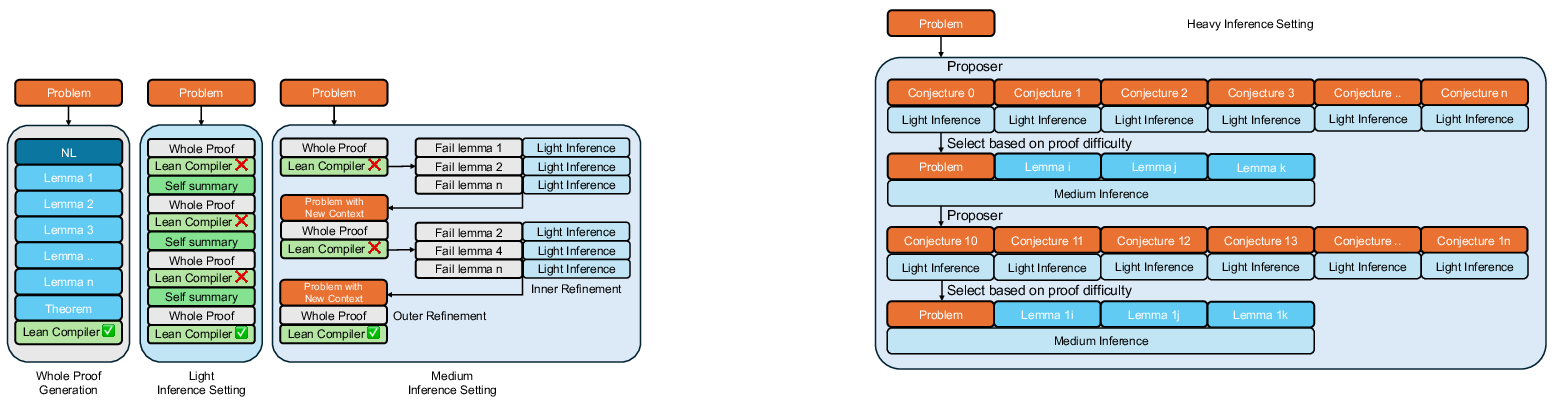

- Heavy Setting: Large-scale conjecture generation and proof attempts, with thousands of conjectures proposed and filtered. The lemma pool is populated with nontrivial facts, which are then integrated into the main proof using the medium setting.

Figure 2: The workflows of single-pass whole proof generation, light, and medium inference settings.

Figure 3: The workflow of heavy inference setting.
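
The nesting in the medium setting can be sketched as an outer loop that delegates unproved lemmas to an inner prover. The sketch below is an assumption-laden simplification: `outer_step` and `inner_prove` are hypothetical callables (the latter standing in for a light-setting refinement run on a single lemma), and the toy versions only exercise the control flow.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical interfaces. The outer step returns a verified main proof
# (or None) together with the lemma statements it still needs proved.
OuterStep = Callable[[Dict[str, str]], Tuple[Optional[str], List[str]]]
# The inner prover stands in for light-setting refinement on one lemma.
InnerProve = Callable[[str], Optional[str]]


def medium_setting(
    outer_step: OuterStep,
    inner_prove: InnerProve,
    max_outer_rounds: int = 4,
) -> Tuple[Optional[str], Dict[str, str]]:
    """Outer refinement of the main proof, inner refinement of open lemmas."""
    proved: Dict[str, str] = {}
    for _ in range(max_outer_rounds):
        main_proof, open_lemmas = outer_step(proved)
        if main_proof is not None:
            return main_proof, proved
        for statement in open_lemmas:
            if statement not in proved:
                proof = inner_prove(statement)
                if proof is not None:
                    proved[statement] = proof  # reused in the next outer round
    return None, proved


# Toy stand-ins: the main proof closes once two lemmas are available.
def toy_outer_step(proved: Dict[str, str]) -> Tuple[Optional[str], List[str]]:
    missing = [s for s in ("lemma_a", "lemma_b") if s not in proved]
    if missing:
        return None, missing
    return "main proof using lemma_a and lemma_b", []


def toy_inner_prove(statement: str) -> Optional[str]:
    return f"proof of {statement}"
```

In the toy run the first outer round fails and proves both lemmas through the inner prover, and the second outer round succeeds by reusing them.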

Seed-Geometry: Fast and Scalable Geometry Reasoning

Seed-Geometry is a neuro-symbolic geometry engine that addresses Lean's lack of geometry support. It features:

- An extended domain-specific language (DSL) for concise geometric constructions, including composite actions for common but complex constructions.

- A C++ backend for the reasoning engine, yielding a 100x speedup over previous Python implementations.

- A high-performing Seed-family LLM, trained on 38B tokens of geometry data, using step-by-step beam search in a distributed setup.

- Efficient, distributed search with asynchronous reasoning and inference, supporting large-scale problem generation and solution.
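
Step-by-step beam search of the kind mentioned above can be sketched generically. This is a textbook beam search and not Seed-Geometry's code: `propose` is a hypothetical stand-in for the LLM suggesting scored auxiliary constructions, `is_solved` stands in for the symbolic engine deciding whether the goal is now derivable, and the construction names in the toy example are invented.

```python
import heapq
from typing import Callable, List, Optional, Tuple

State = Tuple[str, ...]  # auxiliary constructions added so far
Propose = Callable[[State], List[Tuple[float, State]]]  # (log-prob, next state)
IsSolved = Callable[[State], bool]


def beam_search(
    initial: State,
    propose: Propose,
    is_solved: IsSolved,
    beam_width: int = 4,
    max_steps: int = 8,
) -> Optional[State]:
    """Keep the `beam_width` best partial constructions at every step."""
    beam: List[Tuple[float, State]] = [(0.0, initial)]
    for _ in range(max_steps):
        candidates: List[Tuple[float, State]] = []
        for score, state in beam:
            if is_solved(state):
                return state
            for log_prob, nxt in propose(state):
                candidates.append((score + log_prob, nxt))
        if not candidates:
            return None
        beam = heapq.nlargest(beam_width, candidates, key=lambda c: c[0])
    # Check the states produced by the final expansion.
    for _, state in beam:
        if is_solved(state):
            return state
    return None


# Toy stand-ins with invented construction names and scores.
TOY_MOVES = [("midpoint", -0.2), ("reflection", -0.5), ("random_point", -2.0)]


def toy_propose(state: State) -> List[Tuple[float, State]]:
    return [(lp, state + (name,)) for name, lp in TOY_MOVES if name not in state]


def toy_is_solved(state: State) -> bool:
    return {"midpoint", "reflection"} <= set(state)
```

In a distributed setup the `propose` and `is_solved` calls are the natural units to run asynchronously, since model inference and symbolic deduction have very different latencies.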

Empirical Results

Seed-Prover and Seed-Geometry achieve state-of-the-art results across multiple formal mathematics benchmarks:

- IMO 2025: Fully proved 5 out of 6 problems, with 4/6 within the official contest deadline.

- Past IMO Problems: 78.1% success rate (121/155), with strong performance across all difficulty levels and subject areas.

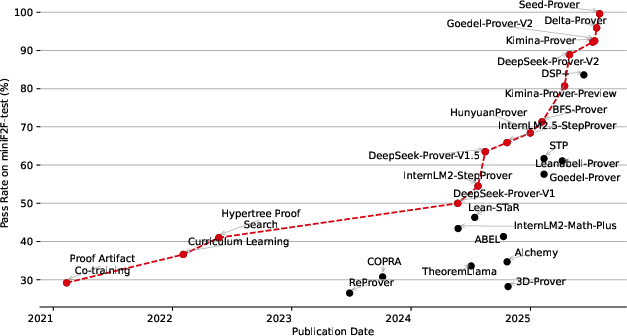

- MiniF2F: 100% on MiniF2F-valid and 99.6% on MiniF2F-test, surpassing previous SOTA by a significant margin.

Figure 4: Growth in MiniF2F-Test performance over time.

- PutnamBench: 331/657 problems solved, a nearly 4x improvement over previous SOTA.

- CombiBench: 30% success rate, tripling the previous best, though combinatorics remains a relative weakness.

- MiniCTX-v2: 81.8% success rate, demonstrating strong generalization to context-rich, real-world formalization tasks.

Seed-Geometry outperforms AlphaGeometry 2 on both standard IMO and IMO shortlist geometry problems, solving 43/50 and 22/39 respectively, and solves the IMO 2025 geometry problem in under 2 seconds.
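
The percentages quoted in this section follow directly from the solved/total counts given above. A short check, using only those counts (the dictionary keys are just labels chosen here):

```python
# (solved, total) pairs as reported in this section.
results = {
    "Past IMO problems": (121, 155),
    "PutnamBench": (331, 657),
    "Seed-Geometry, IMO geometry": (43, 50),
    "Seed-Geometry, IMO shortlist geometry": (22, 39),
}


def solve_rate(solved: int, total: int) -> float:
    """Solve rate as a percentage, rounded to one decimal place."""
    return round(100.0 * solved / total, 1)


rates = {name: solve_rate(*counts) for name, counts in results.items()}

for name, rate in rates.items():
    print(f"{name}: {rate}%")
```

The first two values match the 78.1% and "over 50%" figures in the abstract; the geometry counts correspond to 86.0% and 56.4%.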

Implementation Considerations and Trade-offs

- Resource Requirements: The heavy inference setting is computationally intensive, requiring days of distributed inference and large-scale beam search. The light and medium settings are more tractable, completing in hours.

- Scalability: The modular lemma pool and distributed search architecture enable scaling to harder problems and larger search spaces, but memory and compute constraints remain significant for the heaviest settings.

- Formal Verification: By relying on Lean's compiler for proof checking, the system ensures correctness and avoids the pitfalls of unverifiable natural language proofs.

- Prompt Engineering: The diverse prompting strategy is critical for robustness but increases the complexity of both training and inference pipelines.

- Geometry Support: The integration of Seed-Geometry is essential for full coverage of mathematical domains, as Lean's native geometry capabilities are insufficient for IMO-level problems.

Theoretical and Practical Implications

The results demonstrate that formal language-based LLMs, when combined with lemma-centric reasoning, iterative refinement, and scalable search, can achieve high levels of performance on challenging mathematical benchmarks. The modularity of lemma-style proofs facilitates knowledge reuse and compositionality, which are essential for scaling ATP to more complex domains. The success of Seed-Geometry highlights the importance of domain-specific neuro-symbolic systems for areas where formal libraries are underdeveloped.

The strong empirical results, particularly fully proving 5/6 IMO 2025 problems and saturating MiniF2F, underscore the viability of LLM-based formal provers as practical tools for mathematical research and education. The system's reliance on formal verification ensures reliability and trustworthiness, addressing a key limitation of natural language-based approaches.

Future Directions

Potential avenues for further research include:

- Integration of Natural Language and Formal Reasoning: Bridging the gap between informal mathematical discourse and formal proof generation.

- Automated Formalization: Extending the system to automatically translate natural language problem statements into formal Lean code.

- Open Conjectures: Applying the system to open mathematical problems, leveraging the compositionality and scalability of the lemma pool.

- Resource Optimization: Developing more efficient search and refinement strategies to reduce computational overhead, particularly in the heavy inference setting.

- Enhanced Domain Coverage: Expanding the formal libraries and neuro-symbolic engines to cover additional mathematical domains beyond geometry.

Conclusion

Seed-Prover and Seed-Geometry represent a significant advancement in automated formal reasoning, combining LLMs, formal verification, and scalable search to achieve state-of-the-art performance on a range of mathematical benchmarks. The lemma-style, whole-proof paradigm, iterative refinement, and domain-specific neuro-symbolic engines collectively enable deep and broad reasoning capabilities. The demonstrated results on IMO 2025 and other benchmarks establish a new standard for LLM-based ATP systems, with substantial implications for the future of AI-assisted mathematics.
