- The paper presents an open-source LLM that achieves state-of-the-art performance in automated formal proof generation using Lean 4.

- It introduces innovative formalizers to convert natural language mathematical problems into formal expressions and leverages iterative expert refinement.

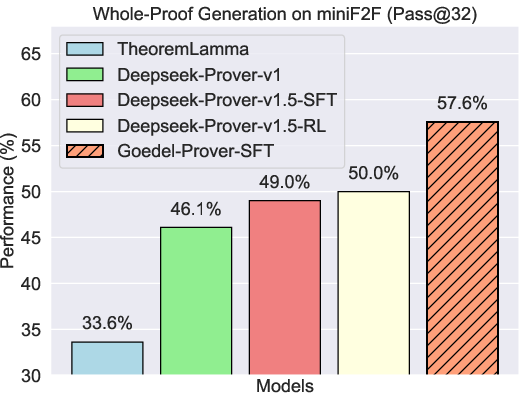

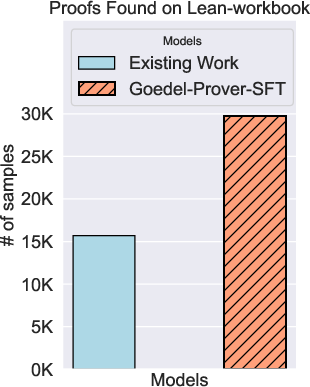

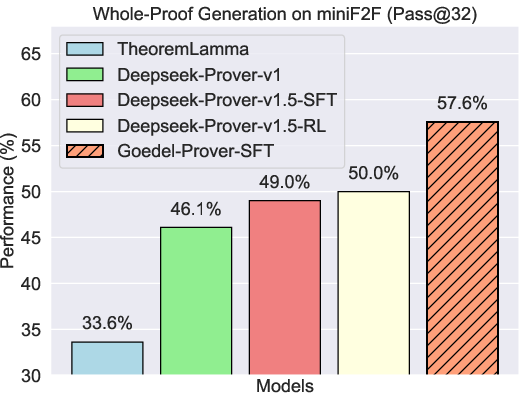

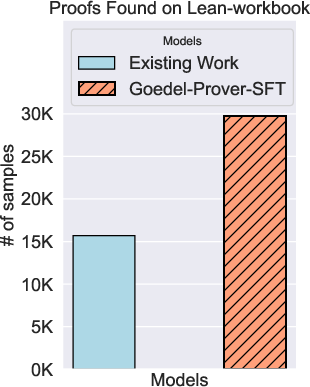

- Results demonstrate significant gains, including a 57.6% Pass@32 success rate on miniF2F and nearly twice as many proofs found in Lean Workbook as previous models.

Goedel-Prover: A Frontier Model for Open-Source Automated Theorem Proving

Introduction

The paper introduces Goedel-Prover, an open-source LLM that achieves state-of-the-art (SOTA) performance in automated formal proof generation for mathematical problems. It addresses the challenge of scarce formalized math statements and proofs by developing statement formalizers to translate natural language problems from the extensive Numina dataset into formal language expressions in Lean 4. Moreover, the paper implements an expert iteration method with a series of provers to improve theorem-solving performance through iterative data enrichment and model refinement. Goedel-Prover demonstrates significant advancement in automated theorem proving, surpassing existing state-of-the-art models like DeepSeek-Prover-V1.5.

Figure 1: (Left) Performance of Pass@32 in whole-proof generation on the miniF2F benchmark. (Middle) Comparison of Goedel-Prover-SFT and DeepSeek-Prover-V1.5 in miniF2F performance across various inference budgets. (Right) Goedel-Prover-SFT solves 29.7K problems in Lean Workbook, nearly doubling the previous best.

Methodology

The scarcity of formalized mathematical statements and proofs makes it difficult to train LLMs for theorem proving in formal languages. The paper addresses this by developing two statement formalizers, "Formalizer A" and "Formalizer B," which translate informal mathematical statements from the Numina and AOPS datasets into formal Lean 4 statements.

Figure 2: The training of the formalizers using Formal and Informal (F-I) statement pairs from various sources.
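
To make the translation target concrete, here is a hypothetical informal statement together with one possible Lean 4 formalization and proof. The example is purely illustrative: it is not taken from Numina, AOPS, or the paper, the theorem name is invented, and it assumes Mathlib is available.

```lean
import Mathlib

/-- Informal statement (hypothetical): for all real numbers a and b,
    the sum a^2 + b^2 is nonnegative. -/
theorem informal_example_sum_sq_nonneg (a b : ℝ) : 0 ≤ a ^ 2 + b ^ 2 := by
  -- Each square is nonnegative, and a sum of nonnegative terms is nonnegative.
  exact add_nonneg (sq_nonneg a) (sq_nonneg b)
```

A formalizer only needs to produce the statement (everything before `:= by`); finding the proof is the prover's job.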

Key steps in the statement formalization include:

- Training of Formalizers: Two formalizers are trained rather than one so that the resulting Lean statements vary in formalization style, which makes the dataset more comprehensive and robust (Table 1).

- Quality Assessment: Each formalized statement must pass two tests: the Compiling Correctness (CC) Test, which confirms that the statement conforms to Lean syntax, and the Faithfulness and Completeness (FC) Test, which uses LLMs such as Qwen2.5-72B-Instruct to judge whether the formal statement matches the original problem.

- Data Augmentation: The process includes data from the Numina dataset and the Art of Problem Solving (AOPS) repositories, resulting in a substantial dataset of 1.78 million formal statements for training.
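
The two-stage quality check above can be sketched as a simple filter. This is a minimal sketch, not the paper's implementation: `Candidate`, `filter_statements`, and the two toy checkers are hypothetical names, and the toy checkers merely stand in for a real Lean compiler call (CC) and an LLM judge (FC).

```python
from dataclasses import dataclass
from typing import Callable, Iterable, List


@dataclass(frozen=True)
class Candidate:
    informal: str  # natural-language problem
    formal: str    # candidate Lean 4 statement produced by a formalizer


def filter_statements(
    candidates: Iterable[Candidate],
    compiles: Callable[[str], bool],
    is_faithful: Callable[[str, str], bool],
) -> List[Candidate]:
    """Keep only candidates that pass the CC check and then the FC check."""
    kept = []
    for cand in candidates:
        if not compiles(cand.formal):                      # CC test
            continue
        if not is_faithful(cand.informal, cand.formal):    # FC test
            continue
        kept.append(cand)
    return kept


# Toy stand-ins (assumptions): a real pipeline would invoke Lean and an LLM judge.
def toy_compiles(formal: str) -> bool:
    return formal.startswith("theorem ") and formal.endswith(":= by sorry")


def toy_is_faithful(informal: str, formal: str) -> bool:
    # Toy rule: a problem about real numbers must be stated over Real.
    return ("real" not in informal.lower()) or ("Real" in formal)


problem = "For every real number x, x^2 >= 0."
candidates = [
    Candidate(problem, "theorem t1 (x : Real) : 0 <= x ^ 2 := by sorry"),
    Candidate(problem, "theorem t2 (x : Nat) : 0 <= x ^ 2 := by sorry"),  # fails FC
    Candidate(problem, "theorm t3 (x : Real) : 0 <= x ^ 2"),              # fails CC
]
kept = filter_statements(candidates, toy_compiles, toy_is_faithful)
```

Running the cheap compile check first means the more expensive judge is only consulted for statements that are at least well-formed.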

Expert Iteration Process

Expert iteration is the core of Goedel-Prover's training: each prover generates proofs that, once verified, become training data for the next prover.

Figure 3: The expert iteration process involves collecting new proofs using the iter-k prover and updating the training data, leading to iter-(k+1) provers.

Key Steps in Expert Iteration:

- Initial Proof Candidate Generation: The process begins with DeepSeek-Prover-V1.5-RL, which generates multiple proof candidates per formal statement in the dataset.

- Verification and Fine-Tuning: The generated proofs are verified using the Lean compiler. Valid proofs are used for supervised fine-tuning of the next prover, iteratively improving the prover model.

- Multi-Iterations: A total of eight iterations are conducted, and performance improves consistently from one iteration to the next (see the per-iteration performance figure).
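
The loop described by these steps can be sketched as follows. This is a minimal sketch under stated assumptions, not the authors' code: `expert_iteration`, `make_toy_prover`, `toy_verify`, and `toy_train` are hypothetical names, the toy functions stand in for an LLM prover, the Lean compiler, and supervised fine-tuning, and keeping one proof per statement while skipping already-solved statements is a simplification made here.

```python
from typing import Callable, Dict, List, Sequence, Tuple

Prover = Callable[[str, int], List[str]]  # (statement, n_samples) -> candidate proofs


def expert_iteration(
    statements: Sequence[str],
    initial_prover: Prover,
    verify: Callable[[str, str], bool],
    train: Callable[[List[Tuple[str, str]]], Prover],
    num_iterations: int,
    samples_per_statement: int,
) -> Tuple[Prover, Dict[str, str]]:
    """Alternate between collecting verified proofs and training the next prover."""
    prover = initial_prover
    proofs: Dict[str, str] = {}
    for _ in range(num_iterations):
        for statement in statements:
            if statement in proofs:  # assumption: skip statements already solved
                continue
            for candidate in prover(statement, samples_per_statement):
                if verify(statement, candidate):  # stand-in for the Lean compiler
                    proofs[statement] = candidate
                    break
        # Train the iter-(k+1) prover on every verified proof collected so far.
        prover = train(sorted(proofs.items()))
    return prover, proofs


# Toy stand-ins: statements are difficulty levels; a prover solves levels <= skill.
def make_toy_prover(skill: int) -> Prover:
    def prover(statement: str, n_samples: int) -> List[str]:
        good = int(statement) <= skill
        return [f"proof-of-{statement}" if good else "bad"] * n_samples
    return prover


def toy_verify(statement: str, proof: str) -> bool:
    return proof == f"proof-of-{statement}"


def toy_train(pairs: List[Tuple[str, str]]) -> Prover:
    # More verified training data yields a slightly stronger toy prover.
    return make_toy_prover(len(pairs) + 1)


final_prover, solved = expert_iteration(
    ["1", "2", "3", "4"], make_toy_prover(1), toy_verify, toy_train,
    num_iterations=3, samples_per_statement=2,
)
```

In the toy run each iteration solves one more statement than the last, which mirrors the qualitative behaviour the summary describes: a better prover yields more verified proofs, which in turn yield a better prover.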

Figure 3: Performance of Goedel-Prover model on four datasets at each iteration.

Results

On the miniF2F benchmark, Goedel-Prover demonstrated significant improvements in whole-proof generation. It achieved a Pass@32 success rate of 57.6%, surpassing the best previously available open-source model by 7.6 percentage points (Table 2 and Figure 1).

Table 1: Whole-proof generation performance on the miniF2F benchmark highlights the improvement of Goedel-Prover over previous state-of-the-art models.
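
For readers unfamiliar with the metric, the sketch below computes an empirical Pass@N, assuming the usual convention that a problem counts as solved if at least one of its first N sampled whole proofs is verified. The function name `pass_at_n` and the toy outcomes are hypothetical and are not results from the paper.

```python
from typing import Dict, List


def pass_at_n(outcomes: Dict[str, List[bool]], n: int) -> float:
    """Fraction of problems with at least one verified proof among the first n samples."""
    if not outcomes:
        raise ValueError("no problems to evaluate")
    solved = sum(1 for samples in outcomes.values() if any(samples[:n]))
    return solved / len(outcomes)


# Toy verification outcomes per problem (True = the sampled proof compiled).
toy_outcomes = {
    "p1": [False, True, False],
    "p2": [False, False, False],
    "p3": [True, False, False],
    "p4": [False, False, True],
}
rate_1 = pass_at_n(toy_outcomes, 1)
rate_2 = pass_at_n(toy_outcomes, 2)
rate_3 = pass_at_n(toy_outcomes, 3)
```

Because a larger budget can only add solved problems, the rate is non-decreasing in N, which is why comparisons between provers are made at a fixed inference budget.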

In Lean Workbook, Goedel-Prover generated 29.7K formal proofs, nearly doubling the 15.7K produced by earlier work. Furthermore, on the PutnamBench benchmark, it solved 7 problems with a Pass@512 inference budget, securing a top position on the leaderboard (Table 3).
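
As a quick check on the headline numbers, reading the 7.6 margin as percentage points gives the implied prior best on miniF2F, and the Lean Workbook counts give the "nearly doubling" ratio:

$$
57.6\% - 7.6\% = 50.0\%, \qquad \frac{29.7\text{K}}{15.7\text{K}} \approx 1.89
$$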

Figure 4: Performance on four datasets at each iteration, showing incremental improvements from data scaling and iterative training.

Discussion

Implications and Future Directions

The findings underscore the potential of expert iteration for improving LLM-based theorem provers. Adapting LLMs for whole-proof generation yields substantial gains in performance and resource efficiency on large theorem datasets.

Notably, the style of formalization strongly affects the effectiveness of the final prover, as shown by the differing results obtained with different formalizers (Table 2). Balancing cost and efficacy remains an important concern. Although whole-proof and stepwise provers both have their merits, future systems might combine the two in hybrid approaches.

Algorithmic Innovations: The use of expert iteration in refining provers by generating novel proofs is noteworthy, suggesting a promising direction for further improving automated proving systems. Moreover, integrating automated theorem provers with external tools like SymPy for enhanced simplification capacities may unlock additional performance improvements.

Figure 5: Correlation of model performance across four datasets with ProofNet showing a notably low correlation with other datasets.

Conclusion

Goedel-Prover marks a substantial advancement in automated theorem proving, combining novel data synthesis with iterative training to achieve state-of-the-art performance among open-source models. By generating almost twice as many formal proofs in Lean Workbook as the previous state of the art, it represents a significant milestone in open-source automated theorem proving. Future directions include refining formalization styles, implementing search strategies, and exploring the integration of external symbolic computation tools. Open-sourcing the model and the newly generated proofs provides a robust foundation for further academic work aimed at closing the gap between informal reasoning abilities and the requirements of automated formal reasoning systems.