- The paper presents a novel experimental framework in which humans interact with LLM-driven agents, making it possible to identify causal links between platform features and perceived polarization.

- It combines agent-based simulation with LLM-driven agents to emulate opinionated online discourse and to assess how recommendation bias affects user perception and engagement.

- Results show that polarized discourse increases perceived emotionality, group identity salience, and platform bias, and that it alters engagement patterns without producing major opinion shifts.

Understanding Online Polarization Dynamics via Human Interaction with LLM-Driven Synthetic Social Networks

Introduction

The growing role of social media in public discourse has renewed attention to polarization: the clustering of individuals into ideologically homogeneous groups with limited cross-group interaction. Observational studies and theoretical opinion dynamics models have advanced our understanding, but a major gap remains. Causal evidence linking specific platform features to users' perceptions and engagement is scarce, because controlled experiments are infeasible on mainstream platforms and classic simulation models are highly abstract. This paper addresses that gap by developing and validating a novel experimental framework in which human participants interact with LLM-based agents within a synthetic social network. The setup allows tightly controlled manipulation of environmental factors (discourse polarization, recommendation regime) while retaining the ecological complexity of open-ended textual interaction.

Methodological Framework

The simulation system combines agent-based modeling (ABM) principles with GPT-4o-mini-driven agents that generate posts, comments, and social interactions. Each agent is parameterized by a continuous opinion value $o_i \in [-1, 1]$, personality attributes, a profile, and a local interaction history. Both agent generation and message generation use detailed LLM prompts that incorporate the explicit opinion value, persona, and social context, so that the degree and expression of polarization can be controlled. Two regimes are implemented. The polarized regime has a bimodal distribution of $o_i$, extreme certainty, affective language, and antagonism. The moderate regime has $o_i$ centered near zero, hedging, inclusive language, and expressed uncertainty.
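The following sketches the agent parameterization and the two regimes. It is a minimal illustration, not the authors' implementation. The `Agent` fields, the bimodal mixture locations (±0.8), the spreads, and the prompt wording are all hypothetical choices. The call to GPT-4o-mini itself is omitted.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Agent:
    """Hypothetical agent record with the attributes listed in the text."""
    agent_id: int
    opinion: float                               # o_i in [-1, 1]
    persona: str                                 # personality and profile summary
    history: list = field(default_factory=list)  # local interaction history


def clip(x: float, lo: float = -1.0, hi: float = 1.0) -> float:
    """Keep sampled opinions inside the admissible interval."""
    return max(lo, min(hi, x))


def sample_opinion(regime: str, rng: random.Random) -> float:
    """Draw o_i from a bimodal (polarized) or centered (moderate) distribution.

    Mixture locations and spreads are illustrative assumptions.
    """
    if regime == "polarized":
        center = rng.choice([-0.8, 0.8])        # two opposing camps
        return clip(rng.gauss(center, 0.15))
    if regime == "moderate":
        return clip(rng.gauss(0.0, 0.25))       # mass concentrated near zero
    raise ValueError(f"unknown regime: {regime!r}")


def build_post_prompt(agent: Agent, regime: str, topic: str) -> str:
    """Assemble a hypothetical generation prompt encoding opinion, persona, and regime style."""
    if regime == "polarized":
        style = "Write with strong certainty, emotional language, and hostility toward the other side."
    else:
        style = "Write with hedging, inclusive language, and openly acknowledged uncertainty."
    return (
        f"You are {agent.persona}. Your opinion on {topic} is {agent.opinion:+.2f} "
        "on a scale from -1 (strongly against) to +1 (strongly for). "
        f"{style} Write one short social media post."
    )


rng = random.Random(42)
agents = [Agent(i, sample_opinion("polarized", rng), "a retired teacher") for i in range(5)]
print(build_post_prompt(agents[0], "polarized", "Universal Basic Income"))
```

Putting the numeric opinion directly into the prompt keeps the stance explicit and controllable. The regime-specific style line then governs how that stance is expressed.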

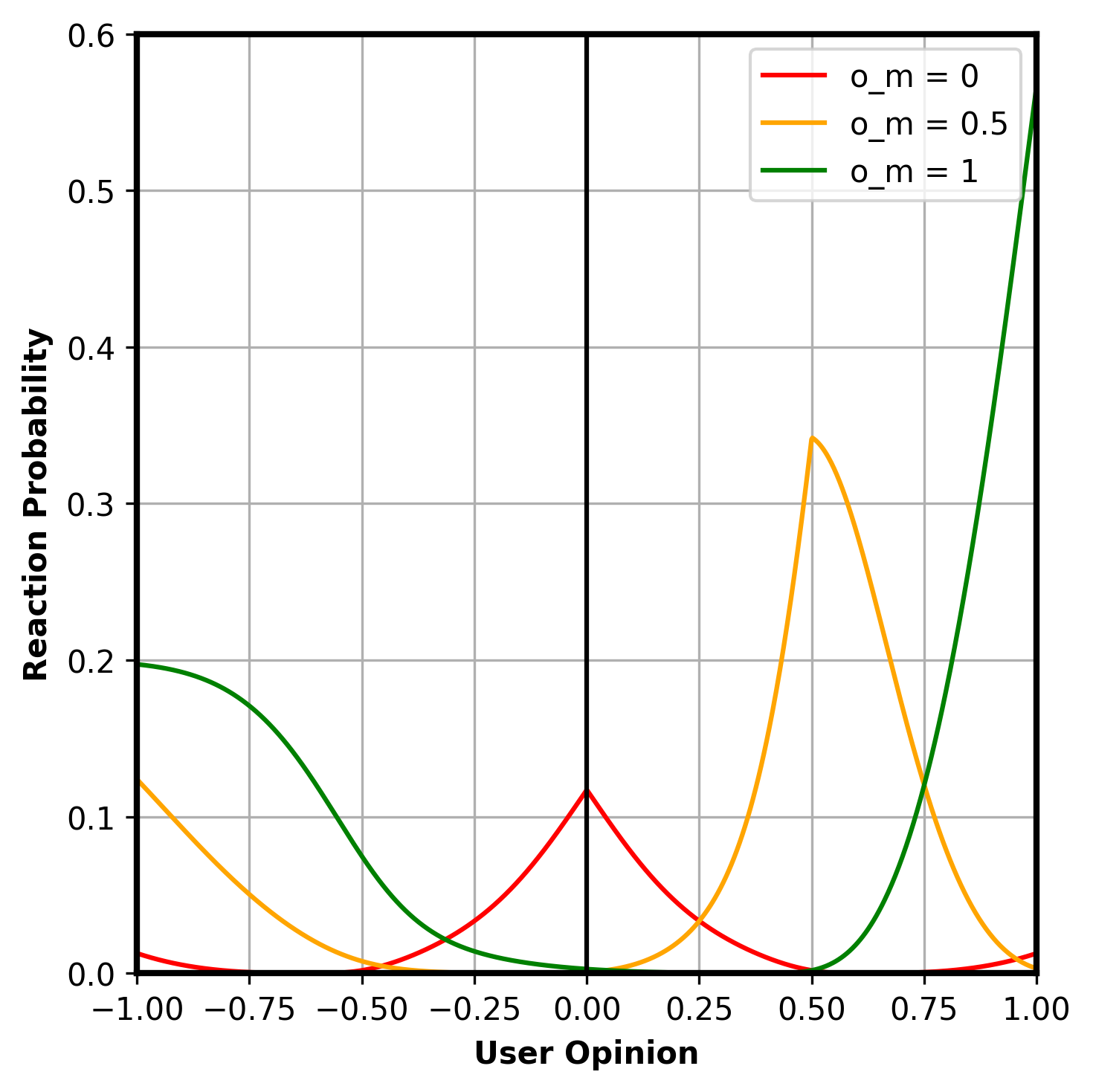

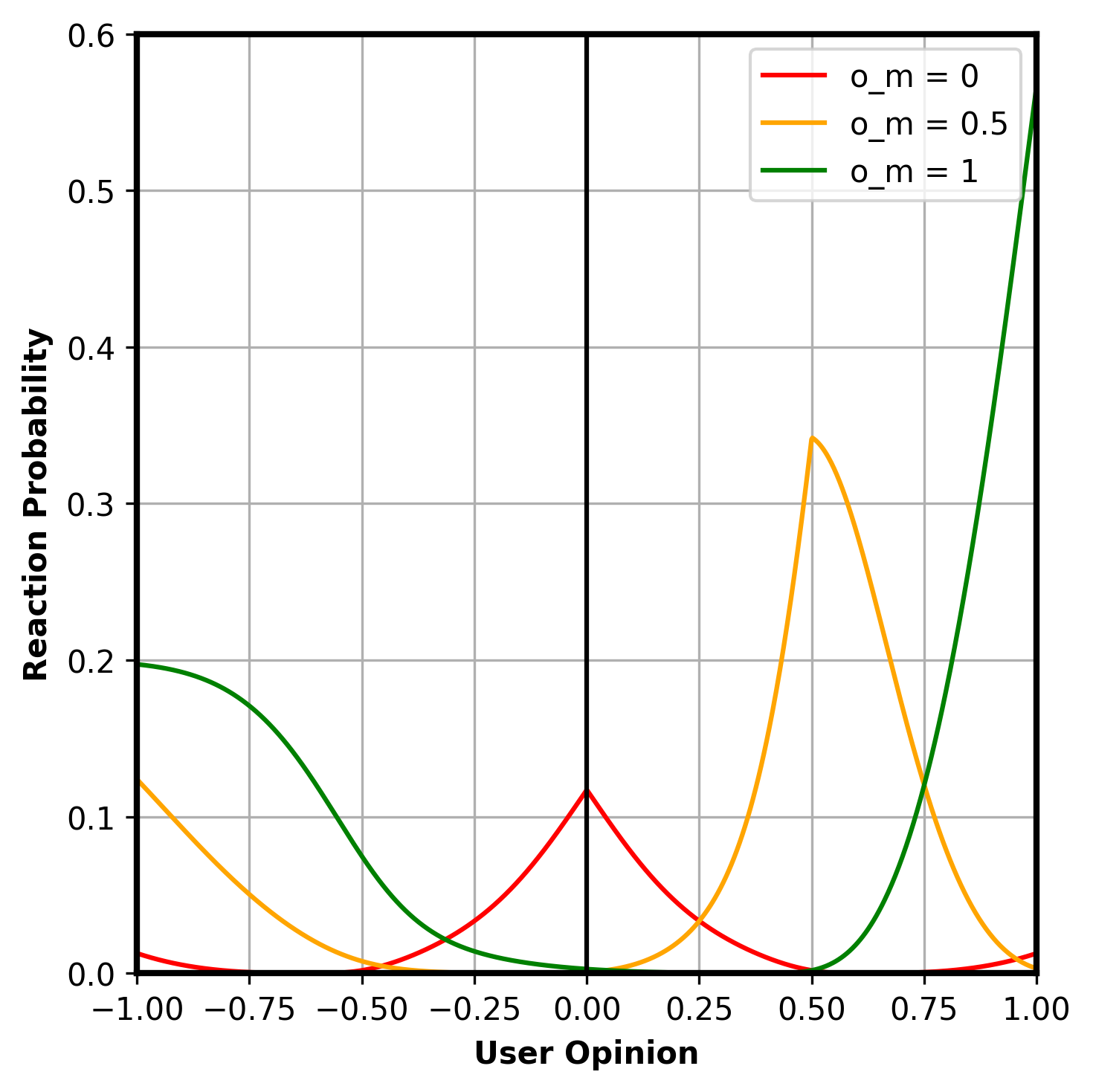

Interactions (likes, comments, reposts, and follows) are modeled probabilistically to reflect confirmation bias and asymmetric reactivity to cross-ideological content. The probability function scales a base reactivity by the agent's opinion strength and yields a U-shaped response. Agents are most likely to interact with content that echoes their own stance. More "active" reactions also carry a controlled probability of engaged disagreement. Together, these capture both homophilic and heterophilic interaction patterns.

Figure 1: Reaction probability functions for different message opinion values $o_m$, showing peak likelihood under ideological alignment and a controlled level of cross-ideological engagement.
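Below is a minimal sketch of a reaction-probability function with these properties. The paper's exact functional form is not given here, so everything in it is an assumption. This includes the opinion-strength multiplier `0.5 + 0.5 * |o_i|` and the quadratic alignment term. It also includes `disagree_weight`, which gives "active" reactions a second peak at maximal disagreement (the U shape in ideological distance).

```python
def opinion_strength(o_agent: float) -> float:
    """Hypothetical multiplier: more extreme agents react more often (range 0.5 to 1)."""
    return 0.5 + 0.5 * abs(o_agent)


def reaction_probability(o_agent: float, o_msg: float, base: float,
                         active: bool, disagree_weight: float = 0.6) -> float:
    """Probability that an agent with opinion o_agent reacts to a message with opinion o_msg.

    Passive reactions decay monotonically with ideological distance (confirmation bias).
    Active reactions add a disagreement term, making the curve U-shaped in distance.
    """
    if not (0.0 <= base <= 1.0 and 0.0 <= disagree_weight <= 1.0):
        raise ValueError("base and disagree_weight must lie in [0, 1]")
    d = abs(o_agent - o_msg) / 2.0          # normalized distance in [0, 1]
    alignment = (1.0 - d) ** 2              # peaks when the message echoes the agent
    shape = alignment + disagree_weight * d ** 2 if active else alignment
    return min(1.0, base * opinion_strength(o_agent) * shape)


for o_msg in (1.0, 0.0, -1.0):
    print(o_msg, round(reaction_probability(1.0, o_msg, base=0.5, active=True), 3))
```

Consider an agent at $o_i = 1$ with `base=0.5`. The active-reaction probability is 0.5 for an aligned message, 0.2 for a neutral one, and 0.3 for a maximally opposed one. This reproduces the qualitative pattern of a high peak for alignment and a smaller rise for engaged disagreement.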

The synthetic social graph represents follow relationships as a directed network built with homophily and influencer amplification, and it updates adaptively as participants interact. Edge formation and dissolution reflect selective exposure and network plasticity. Platform recommendations are governed by a controlled mixture of three components: influence-based popularity, explicit recommendation bias (pro, contra, or balanced framing), and mild stochasticity.
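The recommendation mixture can be sketched as a weighted score per post. The weights (`w_pop`, `w_bias`, `w_noise`), the follower-count popularity proxy, and the linear stance-to-bias mapping are hypothetical. In particular, "balanced" is modeled here simply as no stance preference, which is only one possible reading of balanced framing.

```python
import random
from dataclasses import dataclass


@dataclass
class Post:
    post_id: int
    stance: float          # opinion value o_m of the post, in [-1, 1]
    author_followers: int  # hypothetical proxy for author influence


def bias_score(stance: float, regime: str) -> float:
    """Map a post's stance to a bias bonus in [0, 1] under each recommendation regime."""
    if regime == "pro":
        return (1.0 + stance) / 2.0   # favors pro-UBI (positive) stances
    if regime == "contra":
        return (1.0 - stance) / 2.0   # favors contra-UBI (negative) stances
    if regime == "balanced":
        return 0.5                    # no stance preference
    raise ValueError(f"unknown regime: {regime!r}")


def rank_feed(posts, regime, w_pop=0.4, w_bias=0.5, w_noise=0.1, rng=None):
    """Order posts by a weighted mix of popularity, stance bias, and random noise."""
    if not posts:
        return []
    if rng is None:
        rng = random.Random()
    max_followers = max(p.author_followers for p in posts) or 1

    def score(p: Post) -> float:
        popularity = p.author_followers / max_followers
        return (w_pop * popularity
                + w_bias * bias_score(p.stance, regime)
                + w_noise * rng.random())   # mild stochasticity

    # sorted() evaluates the key once per post, so each post gets one noise draw.
    return sorted(posts, key=score, reverse=True)


feed = [Post(1, -0.9, 120), Post(2, 0.1, 800), Post(3, 0.9, 60)]
print([p.post_id for p in rank_feed(feed, "contra", rng=random.Random(7))])
```

Keeping the noise weight small relative to the bias weight means the manipulated regime dominates the ordering. Popularity and randomness still keep the feed from looking mechanically sorted.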

Experimental Study Protocol

A 2×3 factorial user experiment was conducted (N=122, balanced across cells). It manipulated (a) the polarization of the agent population (polarized vs. moderate) and (b) recommendation bias (pro-UBI, balanced, contra-UBI), with Universal Basic Income (UBI) as the focal controversial issue. Each participant entered an independent instance of the platform: a Twitter/X-like interface prepopulated with LLM agent content and network structure. Participants could freely post, like, comment, or follow for 10 minutes. Because no content from other human participants was present, exposure was precisely controlled.

Figure 2: Screenshot of the simulated platform interface showing the Newsfeed, comment, and follow/like mechanics.

Detailed pre/post questionnaires measured changes in policy opinion, perceived polarization, affective tone, group identity salience, perceived uncertainty, and perceived recommendation bias. The platform additionally logged fine-grained engagement activity.
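Permuted-block randomization is one way to obtain balanced cells in a 2×3 between-subjects design, sketched below. The source states only that cells were balanced, so the blocking scheme and `assign_balanced` are assumptions. With N = 122 and six cells, blocks of six yield two cells of 21 and four cells of 20.

```python
import itertools
import random
from collections import Counter

POLARIZATION = ("polarized", "moderate")
RECOMMENDATION = ("pro-UBI", "balanced", "contra-UBI")
CELLS = list(itertools.product(POLARIZATION, RECOMMENDATION))  # the 6 cells of the 2x3 design


def assign_balanced(n_participants: int, seed: int = 0) -> list:
    """Permuted-block randomization: each block of 6 contains every cell once."""
    rng = random.Random(seed)
    schedule = []
    while len(schedule) < n_participants:
        block = CELLS[:]
        rng.shuffle(block)            # random order within each block
        schedule.extend(block)
    return schedule[:n_participants]  # cell sizes differ by at most one


counts = Counter(assign_balanced(122))
for cell, n in sorted(counts.items()):
    print(cell, n)
```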

Results

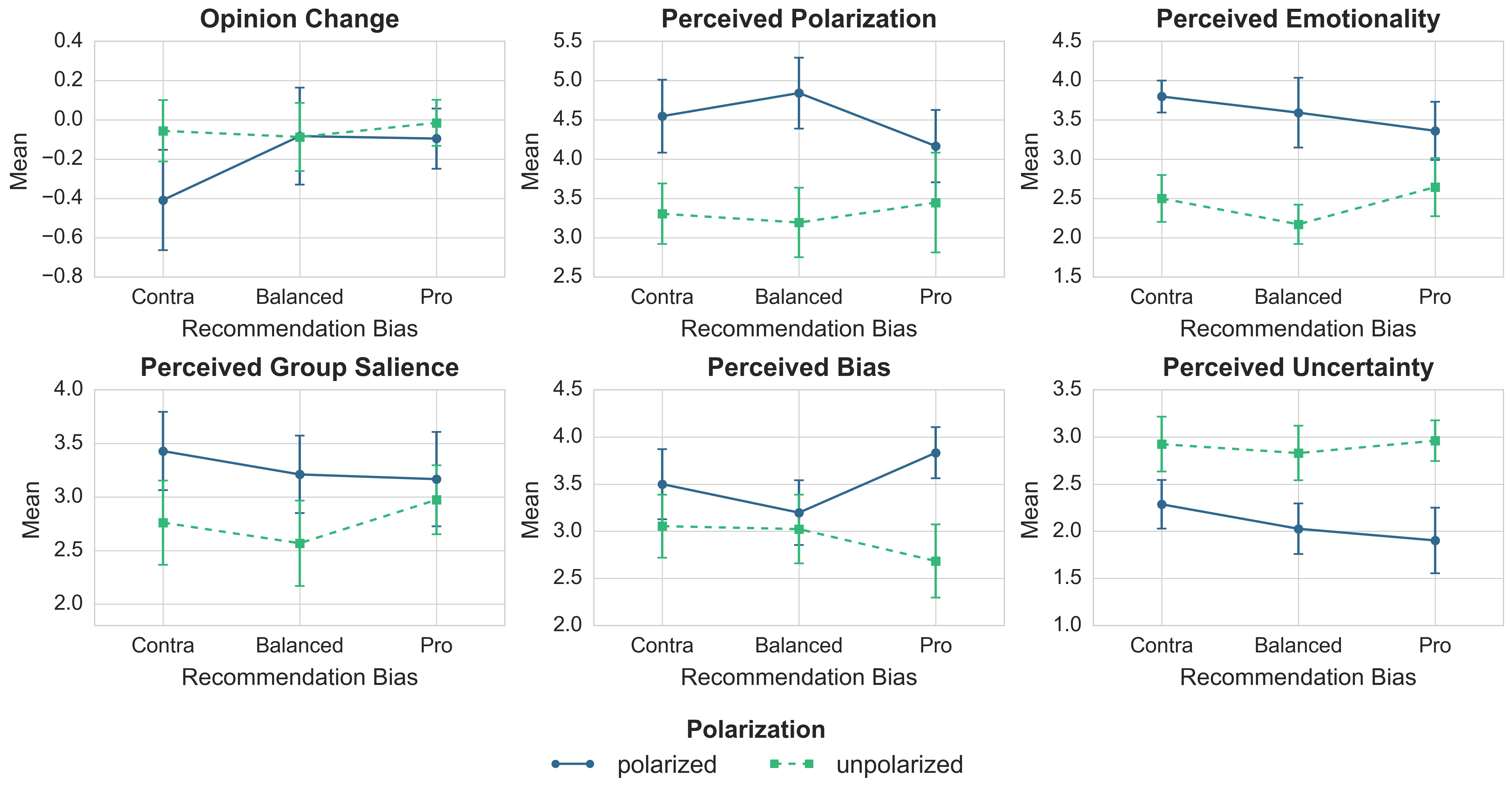

Discourse Perception and Psychological Response

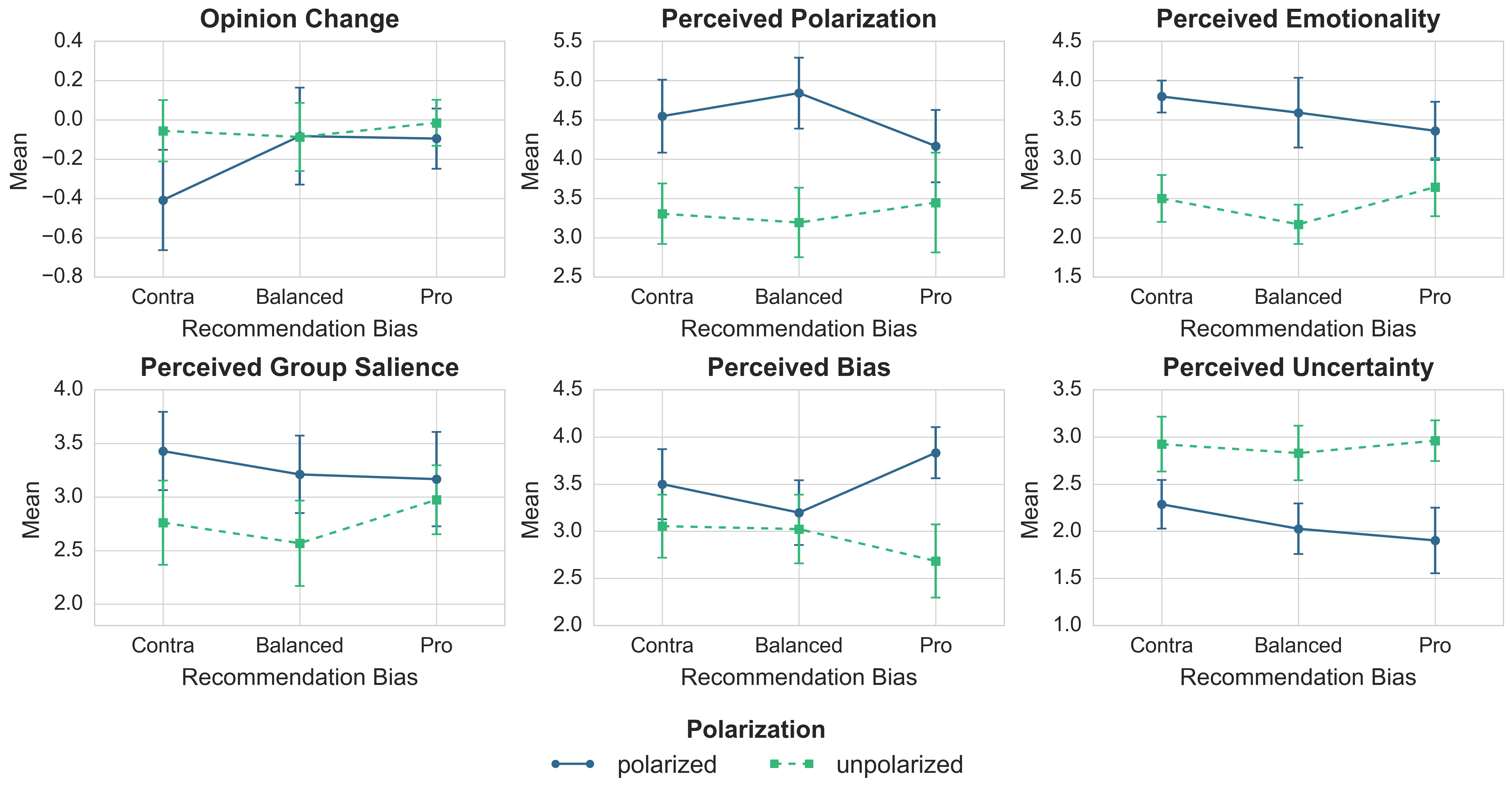

The main effects of the polarized discussion environment on user perceptions are strong and robust. Participants in the polarized regime exhibited significantly elevated perceptions of:

- Polarization (standardized mean difference $g = 1.36$)

- Emotionality ($g = 1.53$; $\eta_p^2 = 0.388$)

- Group Identity Salience ($g = 0.73$)

- Perceived Platform Bias ($g = 0.69$)

Conversely, perceived uncertainty (the extent to which participants observed hedging and epistemic humility) dropped substantially in the polarized condition ($g = -1.26$; $\eta_p^2 = 0.296$).

Figure 3: Interaction plots showing the effects of polarization and recommendation type on dependent variables; blue=polarized, green=unpolarized.
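The sketch below shows how the two effect-size measures reported above can be computed. It assumes that $g$ denotes Hedges' $g$ (Cohen's $d$ with the usual small-sample correction) and that $\eta_p^2$ denotes partial eta squared from the factorial ANOVA. In a fully between-subjects 2×3 ANOVA with N = 122, the error degrees of freedom would be 122 − 6 = 116. The numbers used below are toy inputs, not a reanalysis of the study.

```python
import math
from statistics import mean, variance


def hedges_g(x, y) -> float:
    """Standardized mean difference of x minus y with small-sample correction."""
    n1, n2 = len(x), len(y)
    # Pooled standard deviation from the two sample variances (n - 1 denominators).
    s_pooled = math.sqrt(((n1 - 1) * variance(x) + (n2 - 1) * variance(y)) / (n1 + n2 - 2))
    d = (mean(x) - mean(y)) / s_pooled           # Cohen's d
    j = 1.0 - 3.0 / (4.0 * (n1 + n2) - 9.0)      # approximate bias correction factor
    return j * d


def partial_eta_squared(ss_effect: float, ss_error: float) -> float:
    """Share of effect-plus-error variance attributable to the effect."""
    return ss_effect / (ss_effect + ss_error)


def partial_eta_squared_from_f(f_value: float, df_effect: int, df_error: int) -> float:
    """Equivalent form using the F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


print(hedges_g([2, 4, 6], [1, 3, 5]))
print(partial_eta_squared_from_f(10.0, 1, 116))
```

The sign of `hedges_g` follows the order of its arguments. Passing the polarized group first therefore yields a negative value when that group's mean is lower, as for perceived uncertainty.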

Recommendation bias had a more limited influence. The exceptions were its interaction effects with polarization on perceived bias and group identity salience, which were most pronounced under pro-UBI recommendation bias.

Importantly, although these perceptual shifts are both statistically significant and substantively large, direct measures of opinion change were not similarly affected. This aligns with recent evidence that short-term exposure shifts perception and affect more than explicit stance, and that longer or repeated exposure may be required for beliefs to update.

User Engagement Patterns

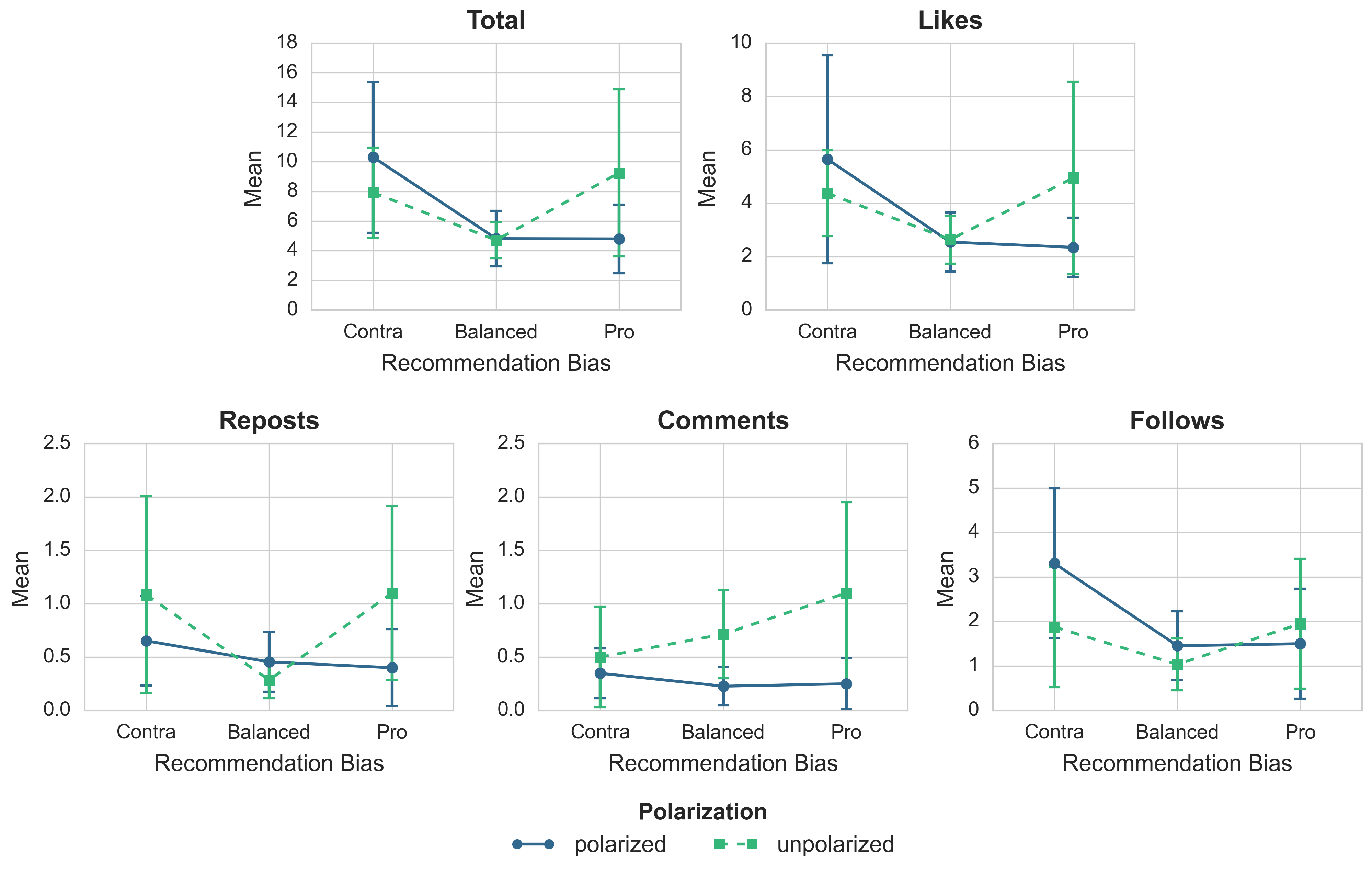

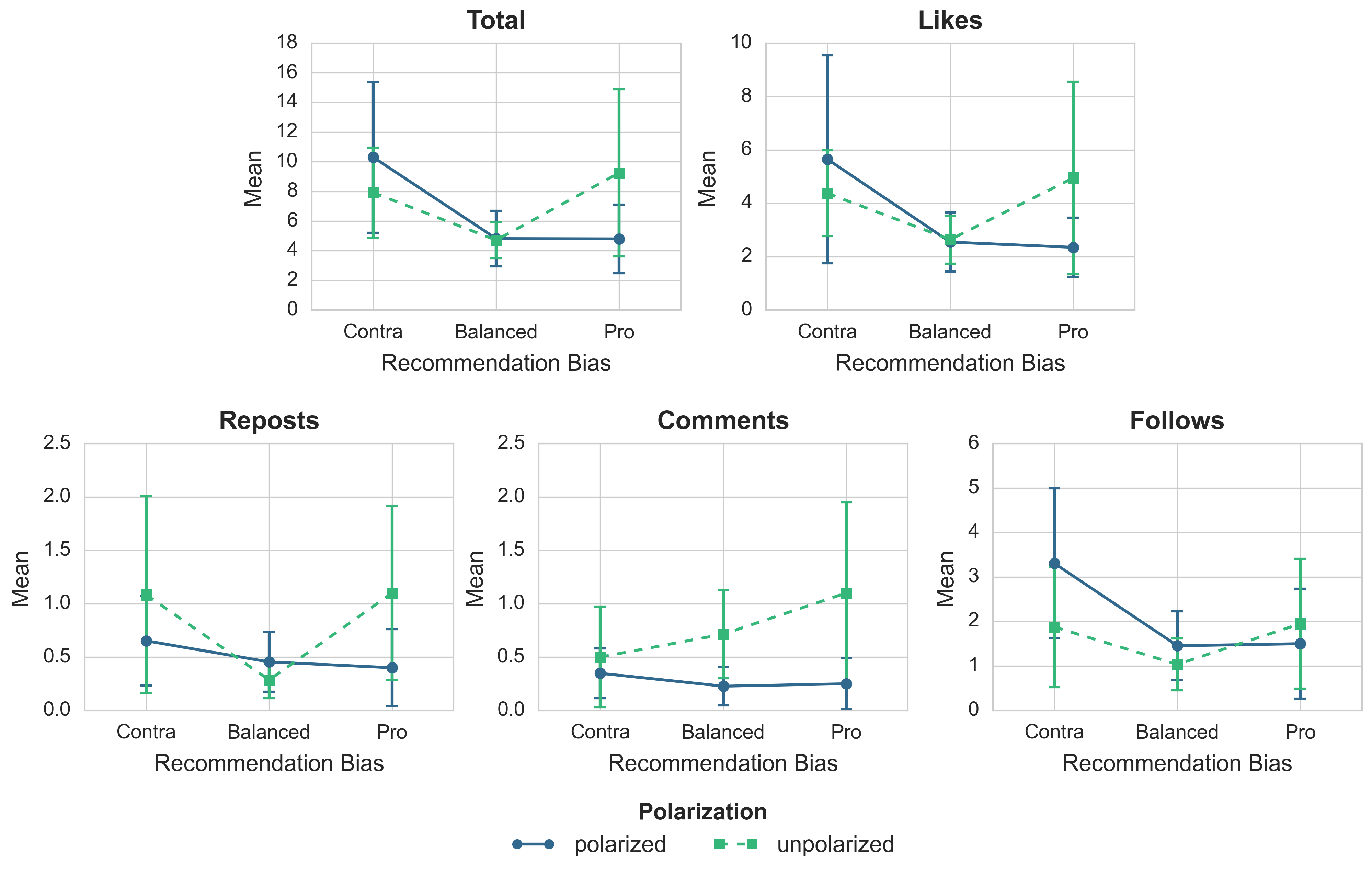

Analysis of engagement reveals several notable points:

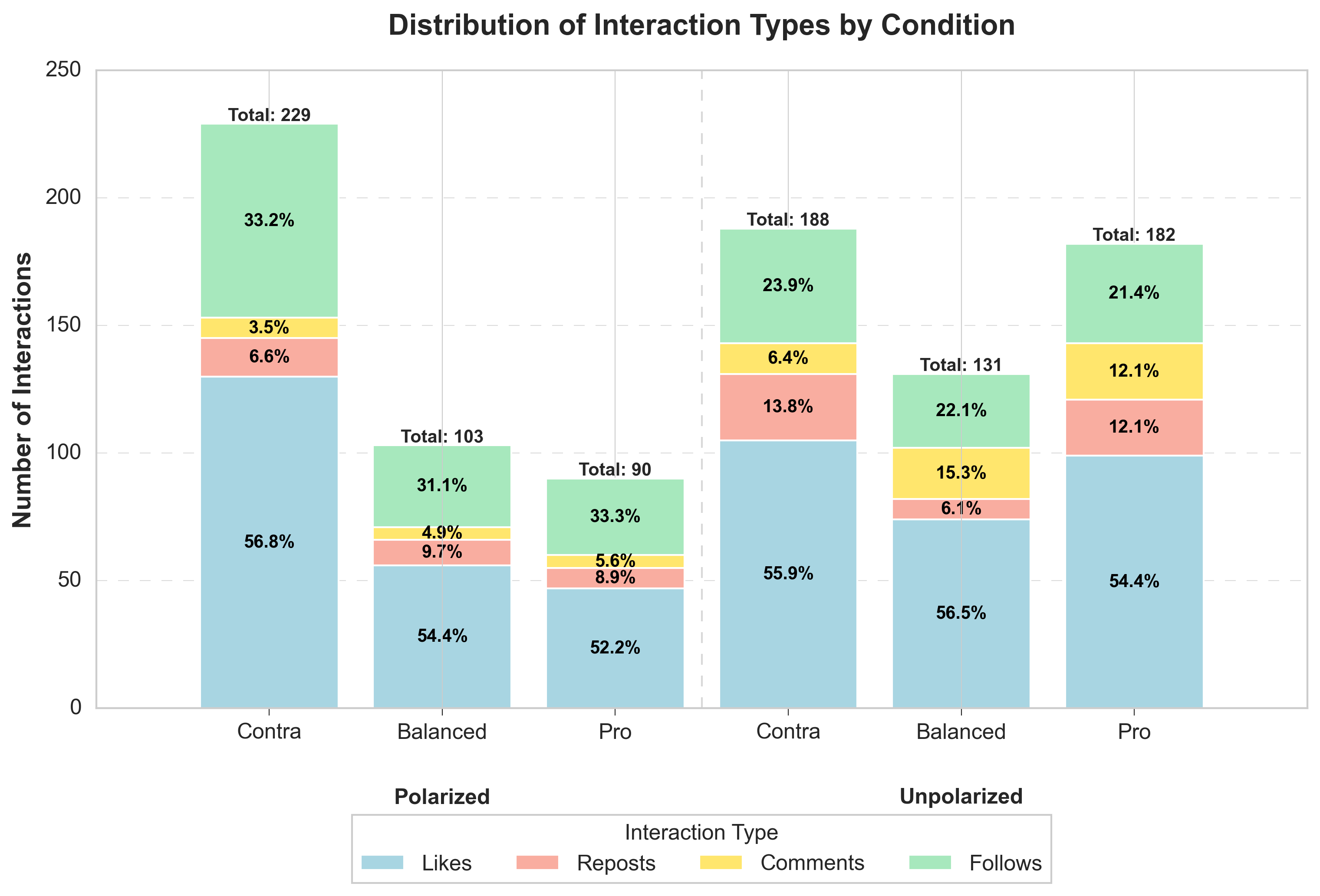

- Likes constitute the majority of interactions, with follows, reposts, and comments progressively less frequent, reflecting the typical effort hierarchy of social media.

- Engagement was highest in the polarized + contra-recommendation condition. This held for the total interaction count, and the same condition also showed the largest mean opinion shifts, although those shifts did not reach significance.

- Polarized environments reduced commenting, particularly among participants holding predominantly moderate views. When participants were confronted with challenging recommendations, however, polarized environments increased both passive interactions (liking, following) and more effortful ones.

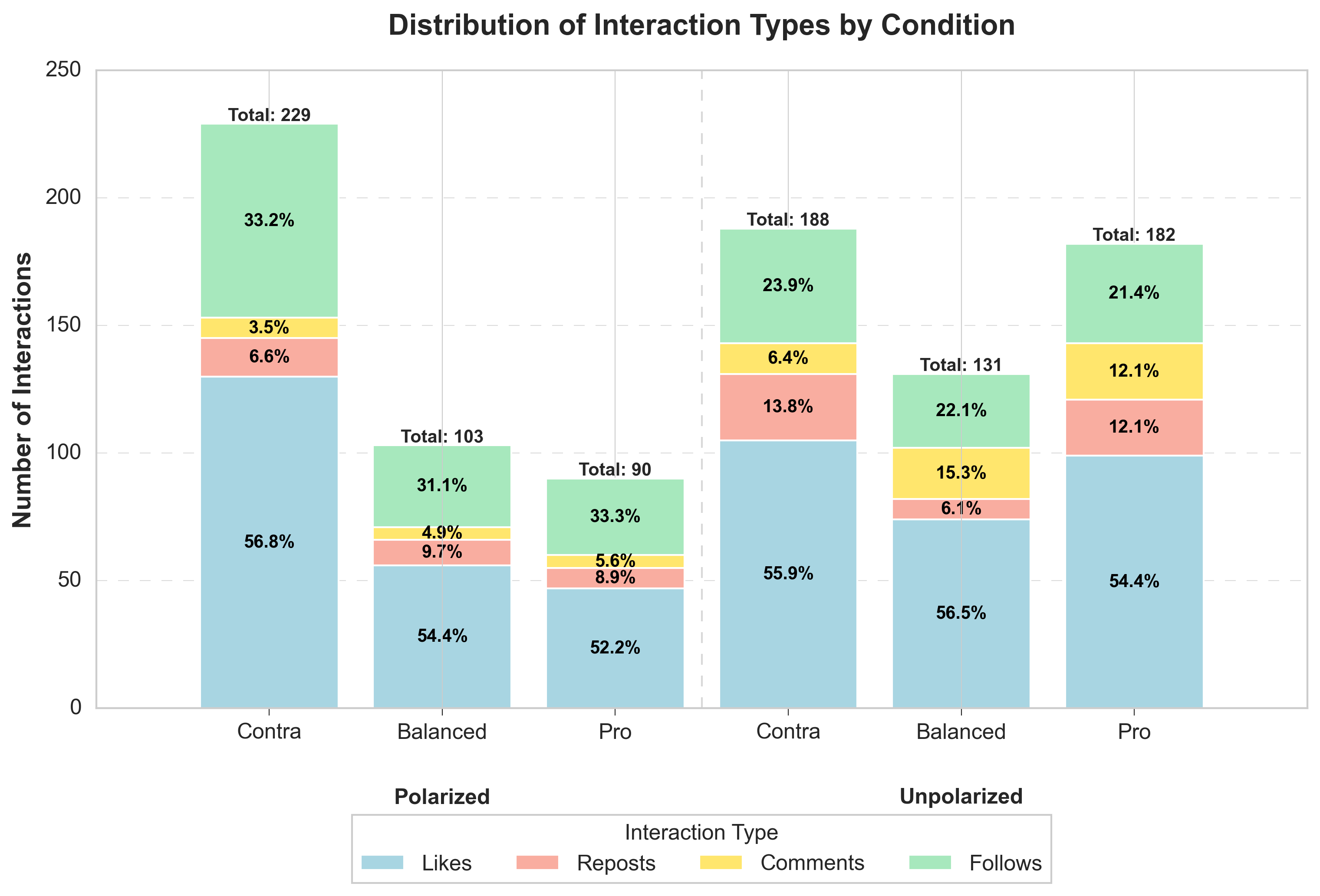

Figure 4: Interaction plots of polarization and recommendation type on user engagement. Blue=polarized, green=moderate; note engagement peaks with polarization plus contra-bias.

Figure 5: Distribution of interaction types across all polarization and recommendation conditions; likes predominate, with meaningful shifts in follows and comments by regime.
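Summaries like those in Figures 4 and 5 can be produced by tallying logged events per condition. The sketch below assumes a hypothetical event log of `(participant_id, condition, action)` tuples. The condition labels and the toy events are invented for illustration and are not study data.

```python
from collections import Counter, defaultdict

# Interaction types listed in the frequency order reported above.
EFFORT_ORDER = ("like", "follow", "repost", "comment")


def tabulate(events):
    """Count actions per condition from (participant_id, condition, action) tuples."""
    table = defaultdict(Counter)
    for _participant, condition, action in events:
        table[condition][action] += 1
    return table


def shares(counter: Counter) -> dict:
    """Proportion of each interaction type within one condition."""
    total = sum(counter.values())
    if total == 0:
        return {}
    return {action: counter[action] / total for action in EFFORT_ORDER}


# Toy log for illustration only (not study data).
events = [
    (1, "polarized/contra-UBI", "like"),
    (1, "polarized/contra-UBI", "like"),
    (1, "polarized/contra-UBI", "comment"),
    (2, "moderate/balanced", "like"),
    (2, "moderate/balanced", "follow"),
]
for condition, counts in tabulate(events).items():
    print(condition, shares(counts))
```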

Modeling Observed Dynamics

The paper demonstrates that properly parameterized LLM-based ABMs can generate content that, when presented to humans, elicits perceptual and behavioral responses consistent with established social identity and affective polarization theory [tajfel_integrative_1979, mason_i_2015, iyengar_affect_2012, tornberg_how_2022-2]. The U-shaped probabilistic engagement model captures the asymmetry between within- and cross-ideological interaction. Algorithmic recommendation bias, by contrast, exerts its effects mainly in interaction with discourse polarization, where they are amplified.

The findings show that strong cues of group antagonism and affective polarization not only skew perception but also alter patterns of engagement. Participants responded to platform polarization with increased bias detection and social categorization. The combination of "contra" recommendations and polarized style acted as a catalyst for engagement, especially for attention-demanding interactions.

Theoretical and Practical Implications

Empirical Causality and Theoretical Validity

This design closes a longstanding gap by providing causal evidence for the impact of platform-level features (environmental polarization, recommendation curation) on individual perception and engagement. Such evidence was previously available only through observational correlations or highly abstracted simulations [chitra_analyzing_2020-1, del_vicario_echo_2015, santos_link_2021-1, de_arruda_modelling_2022-1]. Given the systematic main effects and interaction patterns, theories of group identity, opinion dynamics, and algorithmic curation can be tested experimentally and parameterized from observed user response distributions. They need no longer be inferred only from real-world data that admit competing interpretations.

Synthetic Social Networks for Polarization Research

LLM-driven synthetic agent approaches allow rigorous, reproducible, and highly configurable testing of platform interventions (e.g., recommendation diversity, exposure control, influencer amplification). They complement field experiments that are impractical or unethical on real-world platforms [chuang_simulating_2024, breum_persuasive_2024, ohagi_polarization_2024, gao_large_2024]. Extending the framework to multiple topics, or letting network structure co-evolve with interaction and belief updating, would open a rich testbed for interface, policy, and moderation experiments.

Broad Implications and Future Directions

- Platform design: Findings underscore that algorithmic interventions to diversify exposure must simultaneously account for the affective and identity-consolidating nature of polarized discourse; simple content balancing may have counterintuitive effects depending on group identity and emotionality cues.

- Polarization mechanisms: Results reinforce that perceived polarization and affective cues—rather than policy attitude extremity per se—are primary levers through which platforms affect group cohesion and antagonism. Affective polarization and identity salience, not just positional divergence, are the principal outcomes of polarized online architectures.

- Methodology: The integration of LLMs into ABMs in a human-in-the-loop context advances the empirical grounding and ecological validity of social simulation, moving beyond "toy" interactions to capture open-ended debate and social signaling. This is a direct extension of recent research on generative agent-based modeling and collective opinion dynamics with LLMs [ghaffarzadegan_generative_2024, chuang_simulating_2024, gao_large_2024, cisneros-velarde_principles_2024].

Limitations and Prospects for AI Research

- Agent realism: Although participants generally rated the agents as realistic (though not universally so), stylistic limitations such as repetitive responses and insufficient diversity remain a challenge for LLM-driven agents.

- Temporal and structural generalizability: Effects are demonstrated in short sessions and with a single, moderately familiar issue (UBI); long-term dynamics, topic transfer, and exposure to "harder" entrenched debates must be further evaluated.

- Network co-evolution: The current implementation holds agent opinions fixed and keeps network structure largely passive. Integrating belief updating and dynamic network rewiring would further bridge the gap to real-world polarization and echo chamber formation [sasahara_social_2021-1, baumann_modeling_2020-2, ramaciotti_morales_inferring_2022].

- Bias and sampling: As in all synthetic environments, agent diversity, demographic realism, and emergent phenomena are constrained by model parameterization and prompting strategies; care must be taken to avoid reinforcing LLM biases or overfitting to artificial discourse styles [qu_performance_2024].
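The network co-evolution limitation can be illustrated with a common choice from the opinion-dynamics literature: bounded-confidence updating combined with unfollowing discordant accounts. The sketch below shows that generic technique, not the paper's model. The confidence bound `epsilon`, the convergence rate `mu`, and the rewiring probability `p_rewire` are hypothetical parameters.

```python
import random


def coevolution_step(opinions, following, epsilon=0.4, mu=0.2, p_rewire=0.3, rng=None):
    """One asynchronous update of a bounded-confidence model with unfollowing.

    opinions:  dict node -> opinion in [-1, 1]
    following: dict node -> set of nodes that node follows (directed edges)
    """
    if rng is None:
        rng = random.Random()
    i = rng.choice(sorted(opinions))
    if not following[i]:
        return
    j = rng.choice(sorted(following[i]))     # a followed account whose post i sees
    diff = opinions[j] - opinions[i]
    if abs(diff) < epsilon:
        # Bounded confidence: shift toward a sufficiently close opinion.
        opinions[i] += mu * diff
    elif rng.random() < p_rewire:
        # Selective exposure: unfollow the discordant account, follow a like-minded one.
        candidates = [k for k in sorted(opinions)
                      if k != i and k not in following[i]
                      and abs(opinions[k] - opinions[i]) < epsilon]
        if candidates:
            following[i].discard(j)
            following[i].add(rng.choice(candidates))


rng = random.Random(1)
opinions = {n: rng.uniform(-1, 1) for n in range(30)}
following = {n: set(rng.sample([m for m in range(30) if m != n], 3)) for n in range(30)}
for _ in range(5000):
    coevolution_step(opinions, following, rng=rng)
discordant = sum(abs(opinions[a] - opinions[b]) >= 0.4 for a in following for b in following[a])
print("discordant follow edges:", discordant)
```

Because each update is a convex step toward a nearby opinion (with `mu` in [0, 1]), opinions stay within $[-1, 1]$. Rewiring removes exactly one edge and adds one, so out-degrees are preserved.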

Future work includes extending simulation time scales, enabling opinion updating for both humans and agents, and systematically varying recommendation algorithms and moderation interventions. Integration with recent advances in reinforcement learning, graph-based recommendation, and longitudinal network analysis is also a promising direction [wu_graph_2022, fan_graph_2019-2, zhang_deep_2022].

Conclusion

The presented framework demonstrates that LLM-based synthetic networks can causally induce and measure core features of online polarization, mirroring both affective and group identity phenomena observed in real social platforms. Human participants exposed to polarized, emotionally charged, and group-marked content in LLM-driven microplatforms display robust shifts in perception, bias detection, and selective engagement, validating theoretical models and revealing actionable insights for platform design and intervention. As LLMs continue to advance in text generation, persona management, and social reasoning, their integration into controlled ABMs provides a scalable, reproducible, and ethically robust environment for dissecting the mechanisms and mitigation strategies for harmful online polarization.