Clean-Label Backdoor Attacks on Video Recognition Models

The paper "Clean-Label Backdoor Attacks on Video Recognition Models" investigates the vulnerabilities of deep neural networks (DNNs) to backdoor attacks. These malicious attacks can insert a trigger during the training of a model, allowing a perpetrator to control model predictions without detection when the trigger is present. Notably, most backdoor research has concentrated on image recognition, but this work significantly extends this to the more complex domain of video recognition models.

Problem and Contribution

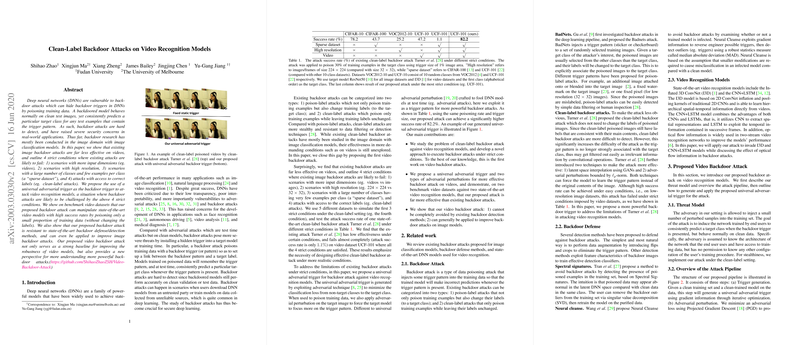

Backdoor attacks generally involve two types: poison-label attacks, which alter both data and labels, and clean-label attacks, which modify only the data with the original labels left unchanged. Clean-label attacks are stealthier, posing a tougher challenge in defense mechanisms due to the still intact data-label association. The paper studies such clean-label attacks in video contexts, where conventional image backdoor methods falter due to certain restrictive conditions: greater input dimensions, high-resolution data, large class numbers with sparse examples per class, and access to correct labels.

The authors propose a novel approach employing a universal adversarial trigger pattern to succeed under these conditions when attacking video recognition models. This approach achieves robust attack performance on state-of-the-art video datasets such as UCF-101 and HMDB-51 by poisoning a modest fraction of the training data without label alteration. The attack appears potent against cutting-edge models including Inflated 3D ConvNet (I3D) and CNN+LSTM, both noteworthy in the field of video recognition, achieving high attack success rates.

Key Findings and Results

Empirical findings demonstrate that existing backdoor methods perform poorly in video scenarios, underscoring the necessity of designing effective strategies within strict conditions. The proposed method surpasses these limitations, achieving significantly higher success rates compared to baseline approaches. It can cleverly exploit adversarial techniques to embed a persistent backdoor trigger, dramatically raising the attack's efficacy. For instance, the proposed attack achieved an 82.2% success rate on the UCF-101 dataset, compared to the baseline's negligible performance.

Furthermore, the method's evolution through different parameters like trigger size and poisoning rate reveals detailed analysis for optimal attack configurations, presenting improvement over the image-based approaches transferred to video domains.

Implications and Future Directions

The paper has dual significance in both advancing understanding of backdoor attacks and offering means for heightening model robustness against them. Attacks of this form represent a tangible threat to systems leveraging DNNs in autonomous systems, healthcare diagnostics, and security. As such, researchers and practitioners should note the implications for enhancing defense mechanisms, ensuring data and model integrity isn't compromised by undetected triggers.

Potential future directions may include expanding such attacks beyond single-stream video inputs to encompass multi-modal data scenarios, addressing two-stream networks that combine RGB input with optical flow information. Further, advancing defense technologies that can preemptively identify and thwart universal adversarial triggers is also a crucial avenue for exploration.

Conclusion

The research on clean-label backdoor attacks presents a compelling advancement in the understanding of threats to video recognition systems, offering innovative attack strategies substantially outperforming existing ones under challenging conditions. It draws necessary attention to developing more resilient defenses against such adversarial interventions while potentially informing related domains on similar methodologies applicable across diverse model architectures and data types.