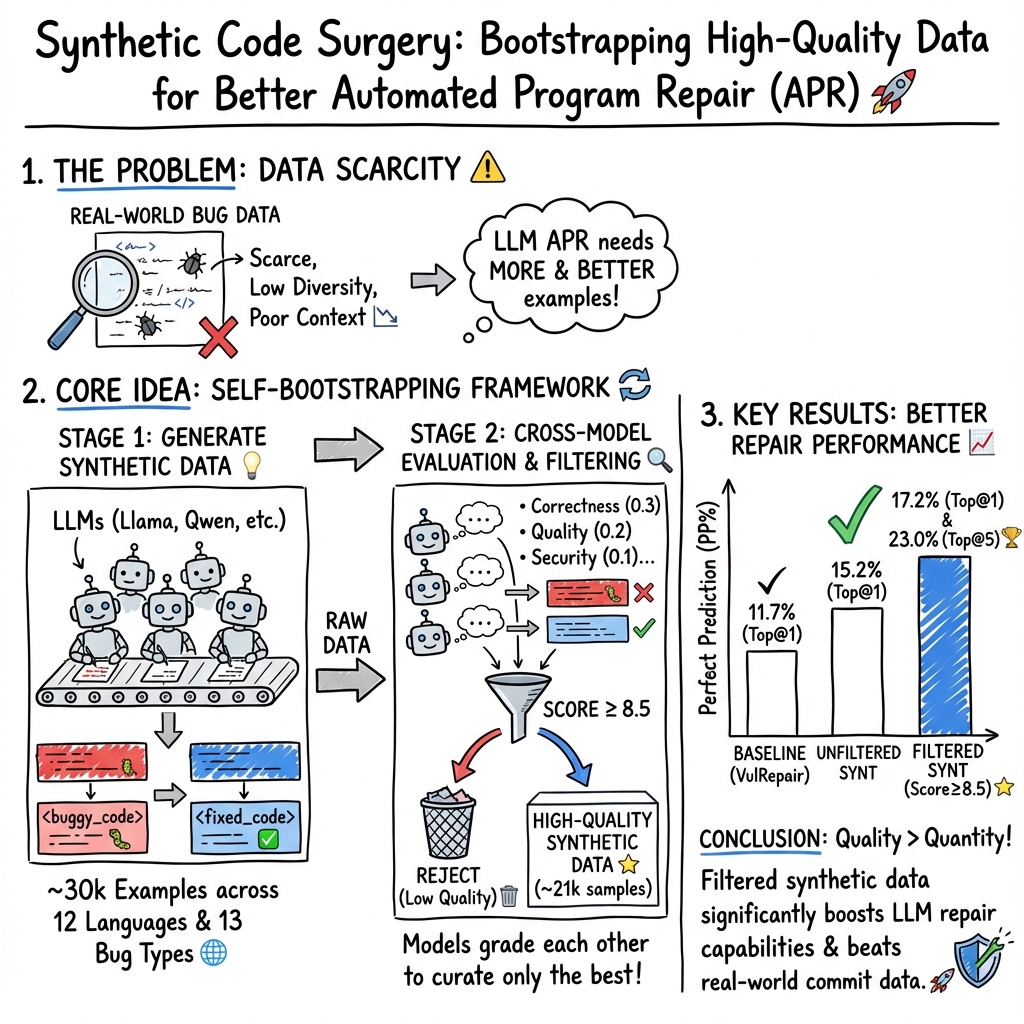

- The paper shows that quality-filtered synthetic data generated by LLMs can improve Automated Program Repair (APR), as measured by higher Perfect Prediction rates.

- It outlines a two-phase process of synthetic sample generation and rigorous evaluation across multiple programming languages and bug categories.

- The study employs statistical methods, including ANOVA and Tukey's HSD, to validate performance improvements and establish new benchmarks in APR.

Summary and Evaluation of "Synthetic Code Surgery: Repairing Bugs and Vulnerabilities with LLMs and Synthetic Data"

Introduction and Context

The paper "Synthetic Code Surgery: Repairing Bugs and Vulnerabilities with LLMs and Synthetic Data" (2505.07372) proposes a methodology for improving Automated Program Repair (APR) with synthetic data generated by LLMs. It targets a central limitation of current APR systems: the scarcity of high-quality training data covering diverse bug types across multiple programming languages. To ease this constraint, the authors propose a two-phase process: synthetic sample generation followed by quality assessment. They report that the approach surpasses existing benchmarks despite using a less computationally intensive decoding strategy.

Methodology

Synthetic Data Generation

The authors use state-of-the-art LLMs to generate approximately 30,000 pairs of buggy and fixed code across 12 programming languages and 13 bug categories. Each pair is evaluated on five criteria: correctness, code quality, security, performance, and completeness. A quality threshold is then applied to filter the dataset so that only high-scoring samples remain. The filtered dataset forms the basis for fine-tuning and training APR models.
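The filtering step described above can be sketched as follows. This is a minimal illustration, not the authors' pipeline: the `Sample` record, the 1-10 scoring scale, the unweighted mean, and the 8.0 cutoff are all assumptions introduced here, since this summary does not state how the five scores are combined or where the threshold sits.

```python
from dataclasses import dataclass
from statistics import mean

# The five criteria named in the text. The 1-10 scale and the 8.0 cutoff used
# below are illustrative assumptions, not values taken from the paper.
CRITERIA = ("correctness", "code_quality", "security", "performance", "completeness")


@dataclass
class Sample:
    """Hypothetical record for one synthetic buggy/fixed pair."""
    language: str
    bug_category: str
    buggy_code: str
    fixed_code: str
    scores: dict[str, float]  # one score per criterion


def overall_score(sample: Sample) -> float:
    """Combine per-criterion scores (an unweighted mean is an assumption)."""
    return mean(sample.scores[c] for c in CRITERIA)


def filter_by_quality(samples: list[Sample], threshold: float = 8.0) -> list[Sample]:
    """Keep only samples whose overall score meets the threshold."""
    return [s for s in samples if overall_score(s) >= threshold]


samples = [
    Sample("python", "off_by_one", "for i in range(n + 1): use(a[i])",
           "for i in range(n): use(a[i])", dict.fromkeys(CRITERIA, 9.0)),
    Sample("c", "null_dereference", "return p->len;",
           "return p ? p->len : 0;", dict.fromkeys(CRITERIA, 6.0)),
]
kept = filter_by_quality(samples)
print([s.language for s in kept])
```

A stricter variant could require every individual criterion to clear the threshold rather than the mean, which would stop a strong score on one criterion from masking a weak one on another.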

Evaluation and Validation

The authors validate the effect of the synthetic data statistically. Multiple training configurations are tested on the VulRepair test set, with improvements measured by Perfect Prediction rates under various conditions. ANOVA followed by post-hoc Tukey's HSD analysis is used to confirm that the observed differences between configurations are statistically significant, which strengthens confidence in the results.
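To make the first step of that analysis concrete, the sketch below computes the one-way ANOVA F statistic by hand using only the standard library. The group names and numbers are made up for illustration and are not results from the paper. Turning F into a p-value, and running the Tukey's HSD pairwise comparisons, requires the F and studentized range distributions; SciPy provides both as `scipy.stats.f_oneway` and `scipy.stats.tukey_hsd`.

```python
from statistics import mean


def one_way_anova_f(groups: list[list[float]]) -> tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = mean(x for g in groups for x in g)

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between, df_within = k - 1, n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Made-up scores for three hypothetical training configurations,
# e.g. one value per random seed. Not data from the paper.
runs = {
    "config_a": [1.0, 2.0, 3.0],
    "config_b": [2.0, 3.0, 4.0],
    "config_c": [5.0, 6.0, 7.0],
}
f_stat, df_b, df_w = one_way_anova_f(list(runs.values()))
print(f"F({df_b}, {df_w}) = {f_stat:.2f}")
```

A large F only says that at least one configuration differs from the others; the post-hoc Tukey's HSD step is what identifies which pairs of configurations differ.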

Experimental Results

The results underscore the effectiveness of quality-filtered synthetic data. Models trained with it showed a substantial increase in Perfect Prediction rates, which points to data quality mattering more than quantity. Specifically, the best-performing configurations that included quality-filtered synthetic data exceeded baseline systems, and performance remained notable when the synthetic data was combined with real-world data such as CommitPackFT. These findings suggest that synthetic data can serve as a substitute for, or a complement to, traditional datasets.
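For readers unfamiliar with the metric, the sketch below shows one common way a Perfect Prediction rate is computed: a test case counts as a hit when any generated candidate patch matches the reference fix exactly. The whitespace normalization and the function names are assumptions made for this illustration; this summary does not specify the exact matching rule the paper uses.

```python
def normalize(code: str) -> str:
    """Collapse runs of whitespace so formatting differences are ignored (assumption)."""
    return " ".join(code.split())


def perfect_prediction_rate(candidates: list[list[str]], references: list[str]) -> float:
    """Fraction of test cases where some candidate equals the reference fix."""
    if len(candidates) != len(references):
        raise ValueError("need one candidate list per reference")
    if not references:
        return 0.0
    hits = sum(
        any(normalize(c) == normalize(ref) for c in cands)
        for cands, ref in zip(candidates, references)
    )
    return hits / len(references)


# Toy inputs: each inner list holds the candidates generated for one test case.
candidates = [["return a + b;"], ["x = 1", "x  =  2"], ["free(p);"], []]
references = ["return a + b;", "x = 2", "free(p); p = NULL;", "y"]
rate = perfect_prediction_rate(candidates, references)
print(f"Perfect Prediction rate: {rate:.0%}")
```

Because the match is exact, a semantically correct patch that differs textually from the reference still counts as a miss, so the metric is a conservative estimate of repair ability.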

Discussion and Implications

The research provides evidence that rigorously filtered synthetic data can significantly enhance APR capabilities. The paper emphasizes the need for high-quality data, which it obtains through consensus-based evaluation by multiple LLMs. This approach improves both patch accuracy and the diversity of generated patches, two properties that matter for real-world software repair. The paper also contributes methodologically by applying a statistical framework often absent in APR research, setting a higher bar for empirical validation.
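One way to picture consensus-based evaluation is a rule that accepts a sample only when several judge models score it highly and roughly agree with each other. The sketch below is a hypothetical rule written for this summary, not the paper's procedure: the judge names, the median aggregation, the 8.0 threshold, and the maximum allowed spread are all assumptions.

```python
from statistics import median


def consensus_accept(
    judge_scores: dict[str, float],
    threshold: float = 8.0,   # assumed cutoff on an assumed 1-10 scale
    max_spread: float = 2.0,  # assumed limit on disagreement between judges
) -> bool:
    """Accept a sample when the median score is high and the judges agree."""
    scores = list(judge_scores.values())
    if not scores:
        return False
    agrees = max(scores) - min(scores) <= max_spread
    return median(scores) >= threshold and agrees


print(consensus_accept({"judge_a": 9.0, "judge_b": 8.5, "judge_c": 8.0}))
print(consensus_accept({"judge_a": 10.0, "judge_b": 9.0, "judge_c": 5.0}))
```

Using the median keeps one outlying judge from deciding the outcome alone, while the spread check rejects samples the judges disagree on even when the median looks acceptable.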

The results suggest that synthetic data generation could alleviate data scarcity in other software engineering tasks as well. The self-bootstrapping setup, in which models generate and evaluate their own training data, is a step toward more autonomous and adaptable AI systems for code maintenance.

Conclusion

Overall, the paper presents a well-structured argument, backed by extensive empirical evidence, that synthetic data generation with quality control can significantly improve the performance of APR systems. The statistical analysis strengthens the findings, and the work invites further study of how to optimize the evaluation criteria, which could open new research directions for synthetic data in other software engineering applications.