Can DeepSeek Reason Like a Surgeon? An Empirical Evaluation for Vision-Language Understanding in Robotic-Assisted Surgery (2503.23130v3)

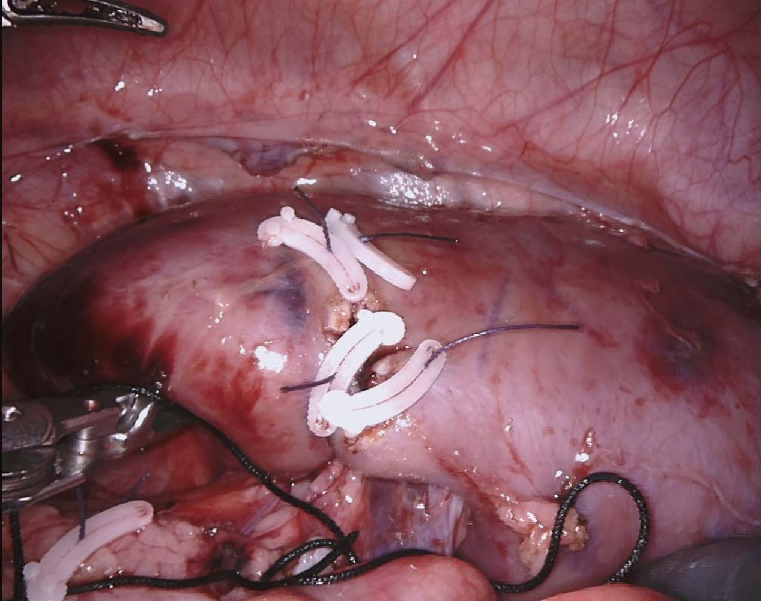

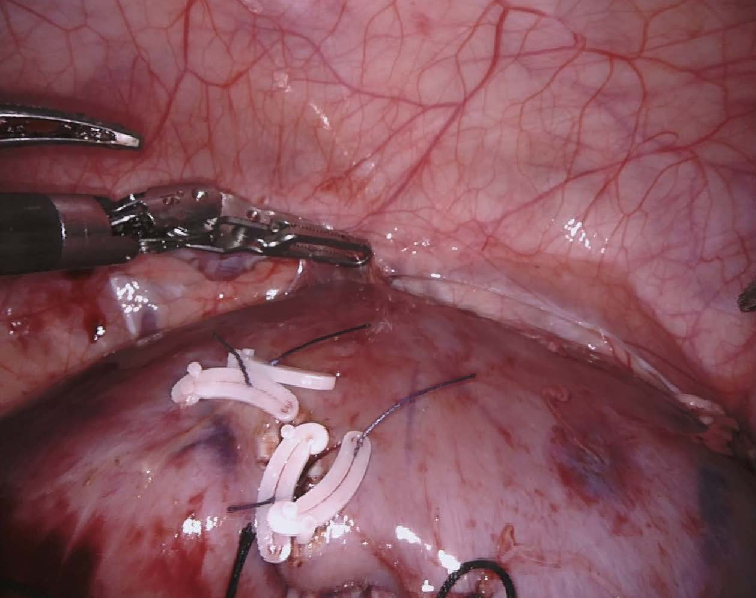

Abstract: The DeepSeek models have shown exceptional performance in general scene understanding, question-answering (QA), and text generation tasks, owing to their efficient training paradigm and strong reasoning capabilities. In this study, we investigate the dialogue capabilities of the DeepSeek model in robotic surgery scenarios, focusing on tasks such as Single Phrase QA, Visual QA, and Detailed Description. The Single Phrase QA tasks further include sub-tasks such as surgical instrument recognition, action understanding, and spatial position analysis. We conduct extensive evaluations using publicly available datasets, including EndoVis18 and CholecT50, along with their corresponding dialogue data. Our empirical study shows that, compared to existing general-purpose multimodal LLMs, DeepSeek-VL2 performs better on complex understanding tasks in surgical scenes. Additionally, although DeepSeek-V3 is purely an LLM, we find that when image tokens are input directly, the model demonstrates better performance on single-sentence QA tasks. However, overall, the DeepSeek models still fall short of meeting the clinical requirements for understanding surgical scenes. Under general prompts, DeepSeek models lack the ability to effectively analyze global surgical concepts and fail to provide detailed insights into surgical scenarios. Based on our observations, we argue that the DeepSeek models are not ready for vision-language tasks in surgical contexts without fine-tuning on surgery-specific datasets.

Explain it Like I'm 14

Overview

This paper asks a simple but important question: Can a powerful AI model called DeepSeek-V3 look at surgery images and “think” like a surgeon? The authors test how well DeepSeek-V3 understands what’s happening during robotic-assisted surgery by asking it different kinds of questions about surgical scenes.

What Questions Did the Researchers Ask?

In easy terms, they wanted to know:

- Can DeepSeek-V3 correctly name tools and organs in surgery images?

- Can it tell what a tool is doing (like cutting or holding)?

- Can it figure out where things are in the image (top-left, bottom-right, etc.)?

- Can it answer short, natural questions about the scene like a surgeon would?

- Can it write a clear, detailed description of what’s going on?

How Did They Study It?

Think of this paper like giving two students the same set of tests. The “students” are:

- DeepSeek-V3 (an open-source AI model)

- GPT-4o (a strong, closed-source AI model)

They used two public “photo albums” of surgery scenes:

- EndoVis18

- CholecT50

They tested the models in three ways (like three types of exams):

1) Single Phrase QA (multiple-choice)

- The AI is shown a surgery image and asked a very specific question, like “What organ is being operated on?” or “What is the tool doing?”

- It must pick one answer from a short list (for example: “kidney,” “grasping,” “cutting,” “left-top”).

- Analogy: It’s like a multiple-choice question where clear instructions make it easier to guess correctly.

2) Visual QA (short, clear answers)

- The AI gets a normal question about the image, such as “What tools are operating on the organ?” or “What procedure is this?”

- It must give a concise, helpful answer.

- Analogy: It’s like a short-answer quiz—you don’t have choices, but you still have a focused question.

3) Detailed Description (describe the picture)

- The AI is asked to describe the image in depth: name the organ, tools, what the tools are doing, where things are, and the overall context.

- Prompts are general, like “Describe the image in detail.”

- Analogy: It’s like writing a paragraph describing everything you see in a picture—no hints.
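To make the first exam type concrete, here is a minimal Python sketch of how a Single Phrase QA prompt could be assembled and graded. It is an illustration only, not the authors' code: the function names, the prompt wording, and the exact-match scoring rule are all assumptions made for this example.

```python
def build_single_phrase_prompt(question, options):
    """Format a question plus a fixed answer list as one prompt string.

    The wording is hypothetical; the paper's actual prompts may differ.
    """
    option_text = ", ".join(options)
    return f"{question} Answer with exactly one of: {option_text}."


def normalize(answer):
    """Strip whitespace and trailing punctuation, then lowercase."""
    return answer.strip().strip(".!?").lower()


def accuracy(predictions, references):
    """Fraction of predictions that equal the reference after normalization."""
    if len(predictions) != len(references):
        raise ValueError("predictions and references must have equal length")
    if not references:
        return 0.0
    hits = sum(normalize(p) == normalize(r) for p, r in zip(predictions, references))
    return hits / len(references)


prompt = build_single_phrase_prompt(
    "What is the tool doing?", ["grasping", "cutting", "idle"]
)
score = accuracy(["Kidney.", "cutting"], ["kidney", "grasping"])
```

Giving the model a closed answer list is what makes this exam the easiest to grade: the answer can be compared directly with the label, with no need to judge free-form text.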

They then “graded” the answers with standard scoring methods used in AI research (names like BLEU, ROUGE, METEOR, CIDEr—these check how well the AI’s words match the correct answers). You can think of these like different rubrics that judge accuracy, coverage, and clarity. They also used a separate AI (GPT-3.5) to judge how well the answers matched the truth.
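As a rough intuition for how word-overlap scoring works, the sketch below computes unigram precision, recall, and F1 between a model answer and a reference answer. This is a deliberately simplified stand-in, not the real BLEU, ROUGE, METEOR, or CIDEr (those add longer n-grams, length penalties, stemming or synonym matching, and term weighting). The function name and the example sentences are made up for illustration.

```python
from collections import Counter


def unigram_overlap(candidate, reference):
    """Return (precision, recall, f1) based on shared words.

    Words are lowercased and split on whitespace; repeated words are
    counted at most as often as they appear in both texts.
    """
    cand_tokens = candidate.lower().split()
    ref_tokens = reference.lower().split()
    # Counter intersection keeps the minimum count of each shared word.
    overlap = sum((Counter(cand_tokens) & Counter(ref_tokens)).values())
    precision = overlap / len(cand_tokens) if cand_tokens else 0.0
    recall = overlap / len(ref_tokens) if ref_tokens else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical answer and reference, not taken from the datasets.
p, r, f = unigram_overlap(
    "forceps grasping kidney tissue", "forceps grasping tissue"
)
```

Precision asks how much of the model's answer appears in the reference, while recall asks how much of the reference the model covered. A vague answer about "patterns" or "balance" would score low on both.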

What Did They Find, and Why Is It Important?

Here are the key takeaways from the experiments:

- DeepSeek-V3 is good at naming things with clear options.

  - When given a specific list (like “choose one answer”), DeepSeek-V3 does well at recognizing surgical tools and organs (for example, identifying a “kidney” or “forceps”).

  - Why it matters: With good prompts and choices, the model can quickly help identify objects in surgery scenes.

- Both models struggle with motion and exact locations from a single image.

  - Understanding what a tool is doing (cutting, grasping) is hard from a still picture; motion is easier to see in video.

  - Figuring out precise spatial positions (like “top-left”) is tricky for DeepSeek-V3.

  - Why it matters: In real surgery, knowing actions and positions is essential for safety and guidance.

- DeepSeek-V3 handles short, guided questions better than open-ended ones.

  - In Visual QA (short answers), DeepSeek-V3 often performed well, especially when the prompt was clear and focused.

  - But in Detailed Description (long, open-ended), DeepSeek-V3 tended to give vague, abstract answers (like describing “patterns” or “balance”) instead of concrete surgical details.

  - GPT-4o did better at long, general descriptions without much prompting.

  - Why it matters: In the operating room, AI must give clear, specific information. Vague answers aren’t useful.

- Common tools are easier than tissues.

  - Both models found it easier to recognize surgical tools than to analyze surgical tissues.

  - Likely reason: Tools appear more often in general training data than specialized surgical tissue does.

  - Why it matters: To be truly helpful in surgery, AI needs strong, domain-specific knowledge, especially about human anatomy and tissue states.

Overall conclusion:

- DeepSeek-V3 can act a bit like a beginner assistant when given very clear instructions or choices.

- However, it is not yet ready to reliably understand and describe complex surgical scenes on its own, especially without special training on surgery data.

What Does This Mean for the Future?

- Better prompts help a lot.

  - Clear, specific instructions make DeepSeek-V3 more accurate. Designing good prompts is important for safe, useful AI assistance in surgery.

- Specialized training is needed.

  - The model should be fine-tuned (tutored) on large, high-quality surgery datasets so it learns the language, actions, and look of surgical scenes.

  - Methods like reinforcement learning (teaching the model through trial and reward), and techniques to make models cheaper and faster (like Mixture of Experts or distillation), could make deployment in hospitals more practical.

- Not ready for real surgical use yet.

  - Until models like DeepSeek-V3 are better at understanding actions, positions, and full-scene context, they shouldn’t be relied on in the operating room without careful supervision.

In short, DeepSeek-V3 shows promise, especially with clear questions and choices, but it needs more specialized training and better prompting to truly “reason like a surgeon.”
