- The paper introduces PromptGFM, a framework that fully integrates LLMs within GNN workflows to enhance graph-text alignment.

- It replaces traditional node embeddings with textual attributes and uses prompt-based techniques for neighbor sampling and aggregation updates.

- Experiments on node classification, link prediction, and cross-domain transfer show that it outperforms existing GNN-LLM methods.

LLM as GNN: Graph Vocabulary Learning for Text-Attributed Graph Foundation Models

Introduction

This paper introduces PromptGFM, a framework that integrates LLMs with Graph Neural Networks (GNNs) to handle Text-Attributed Graphs (TAGs). Its central idea is to implement the LLM directly as a GNN. In doing so, it aims to address challenges in graph-text alignment and to provide a unified model that generalizes across diverse graphs and tasks. The authors target two limitations of prior work: decoupled architectures, and the inefficiency of assigning out-of-vocabulary tokens to graph nodes.

Methodology

Integration Paradigms

The paper identifies three integration paradigms: GNN for LLM, LLM for GNN, and LLM as GNN. The first two rely on decoupled architectures, which leads to suboptimal alignment between graph structure and semantic information:

- GNN for LLM uses GNNs to produce structure-aware embeddings that are then consumed by LLMs.

- LLM for GNN uses LLMs to enrich the semantic information on which GNNs are trained.

PromptGFM sets itself apart by fully integrating LLMs within the GNN workflow to facilitate seamless graph-text alignment, functioning entirely in the text space instead of embedding space.

Figure 1: Overview of three GNN-LLM integration paradigms for graph-text alignment.

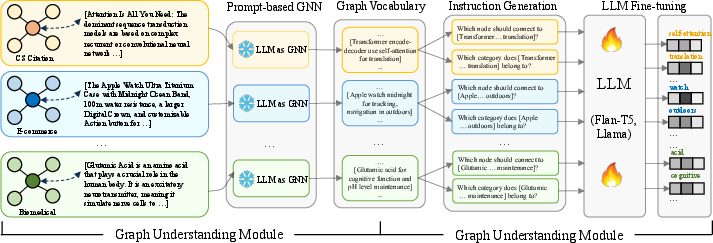

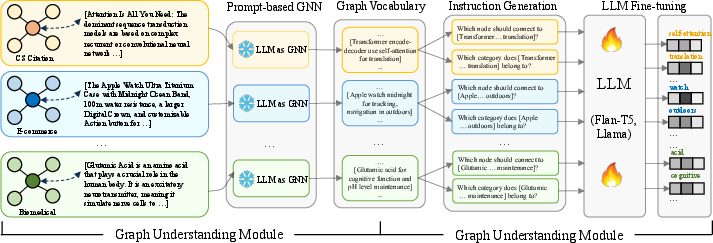

Graph Understanding and Inference Modules

Graph Understanding Module: This component prompts LLMs to replicate classical GNN workflows within the text space. It replaces traditional node representations with textual attributes, utilizes a prompt-based method for neighbor sampling, and designs aggregation-update mechanisms through LLM interactions.

Figure 2: LLM-driven replication of the GNN workflow. We achieve fine-grained alignment between traditional embedding-based GNN and our prompt-based GNN.
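
The text-space workflow described for the Graph Understanding Module can be illustrated with a minimal sketch. Everything named here is an assumption made for illustration and is not taken from the paper: the function names, the prompt wording, uniform neighbor sampling, and `toy_llm`, a deterministic stand-in for a real LLM call that simply merges the words it is shown.

```python
import random
from typing import Callable, Dict, List


def sample_neighbors(graph: Dict[str, List[str]], node: str, k: int,
                     rng: random.Random) -> List[str]:
    """Pick at most k one-hop neighbors (uniform sampling is an assumption)."""
    neighbors = sorted(graph.get(node, []))
    if len(neighbors) <= k:
        return neighbors
    return sorted(rng.sample(neighbors, k))


def build_prompt(center_text: str, neighbor_texts: List[str]) -> str:
    """Hypothetical aggregation-update prompt; the paper's wording may differ."""
    lines = [f"Center node: {center_text}"]
    lines += [f"Neighbor: {text}" for text in neighbor_texts]
    lines.append("Rewrite the center node description so that it also "
                 "reflects its neighbors.")
    return "\n".join(lines)


def toy_llm(prompt: str) -> str:
    """Stand-in for an LLM: returns the sorted unique words of all node texts."""
    words = set()
    for line in prompt.splitlines():
        if line.startswith(("Center node: ", "Neighbor: ")):
            words.update(line.split(": ", 1)[1].split())
    return " ".join(sorted(words))


def text_message_passing(graph: Dict[str, List[str]], texts: Dict[str, str],
                         llm: Callable[[str], str], num_layers: int = 2,
                         k: int = 2, seed: int = 0) -> Dict[str, str]:
    """Run num_layers rounds of prompt-based aggregation and update.

    Node states are strings rather than embedding vectors. Each round
    reads the previous round's texts, mirroring synchronous GNN layers.
    """
    rng = random.Random(seed)
    current = dict(texts)
    for _ in range(num_layers):
        updated = {}
        for node in graph:
            neighbors = sample_neighbors(graph, node, k, rng)
            prompt = build_prompt(current[node], [current[n] for n in neighbors])
            updated[node] = llm(prompt)
        current = updated
    return current


graph = {"a": ["b", "c"], "b": ["a"], "c": ["a"]}
texts = {"a": "graph neural networks", "b": "language models", "c": "prompt tuning"}
refined = text_message_passing(graph, texts, toy_llm, num_layers=2)
```

With two rounds, node `c` picks up words from node `b` even though the two are not directly connected, which is the text-space analogue of a two-layer GNN reaching two-hop neighbors. A real deployment would replace `toy_llm` with an actual model call.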

Graph Inference Module: It establishes a universal graph vocabulary of language-based IDs for node representations, facilitating seamless transferability across graphs and tasks. This module supports LLM fine-tuning across multiple domains and provides task-oriented prompt templates to enable effective cross-graph and cross-task knowledge transfer.

Figure 3: The pipeline of PromptGFM. It includes the graph understanding module and the graph inference module.
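
To make the idea of a graph vocabulary of language-based IDs more concrete, the following is a rough sketch under stated assumptions. It treats each node's refined text as the basis for a short, unique, human-readable ID and plugs those IDs into task prompts. The truncation length, the numeric suffix used to break ties, and both template strings are hypothetical and are not the paper's actual design.

```python
from typing import Dict, List


def build_vocabulary(refined_texts: Dict[str, str],
                     max_words: int = 6) -> Dict[str, str]:
    """Map each node to a unique language-based ID made of ordinary words.

    Assumption: an ID is the first max_words words of the node's refined
    text, with a numeric suffix appended when two nodes would collide.
    """
    vocab: Dict[str, str] = {}
    used = set()
    for node in sorted(refined_texts):
        base = " ".join(refined_texts[node].lower().split()[:max_words])
        candidate, suffix = base, 2
        while candidate in used:
            candidate = f"{base} {suffix}"
            suffix += 1
        used.add(candidate)
        vocab[node] = candidate
    return vocab


# Hypothetical task-oriented templates that share the same vocabulary.
NODE_CLS_TEMPLATE = (
    "Node: {node_id}\n"
    "Which category does this node belong to? Options: {labels}"
)
LINK_PRED_TEMPLATE = (
    "Node: {node_id}\n"
    "Candidates: {candidates}\n"
    "Which candidate is most likely linked to this node?"
)


def node_classification_prompt(vocab: Dict[str, str], node: str,
                               labels: List[str]) -> str:
    return NODE_CLS_TEMPLATE.format(node_id=vocab[node], labels=", ".join(labels))


def link_prediction_prompt(vocab: Dict[str, str], node: str,
                           candidates: List[str]) -> str:
    candidate_ids = "; ".join(vocab[c] for c in candidates)
    return LINK_PRED_TEMPLATE.format(node_id=vocab[node], candidates=candidate_ids)
```

Because the IDs are built from ordinary words, the same prompts can be reused on a new graph without adding node-specific tokens to the LLM's vocabulary, which is the property the module relies on for transfer.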

Experimental Evaluation

Experiments across multiple datasets show that PromptGFM improves performance on node classification and link prediction. The framework also transfers well, generalizing in both intra-domain and inter-domain settings. It consistently outperforms existing GNN-LLM methods across benchmarks, which supports its effectiveness and scalability.

Node Classification: Results show substantial improvements over existing baselines. Combining textual data with GNN-like message passing yields precise, semantically enriched node representations.

Link Prediction: The system predicts unseen links effectively. Controlled decoding restricts outputs to language-based IDs, which avoids hallucinated nodes.
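
One common way to realize this kind of controlled decoding is to restrict generation to a prefix trie built from the valid IDs. The sketch below shows that general technique, not the paper's specific mechanism. It assumes whitespace-separated words as tokens, greedy selection, and a hypothetical `score(prefix, token)` callable standing in for the LLM's next-token scores.

```python
from typing import Callable, Dict, List, Sequence

END = "<end>"  # marker for "a complete ID ends here"; assumed not to be a real word


def build_trie(ids: Sequence[str]) -> Dict[str, dict]:
    """Build a nested-dict prefix trie over whitespace-tokenized IDs."""
    root: Dict[str, dict] = {}
    for language_id in ids:
        node = root
        for token in language_id.split():
            node = node.setdefault(token, {})
        node[END] = {}
    return root


def constrained_greedy_decode(trie: Dict[str, dict],
                              score: Callable[[List[str], str], float]) -> str:
    """Greedily pick the best-scoring token among those the trie allows.

    Tokens outside the trie are never considered, so the result is
    always one of the IDs used to build the trie.
    """
    if not trie:
        raise ValueError("the vocabulary is empty")
    prefix: List[str] = []
    node = trie
    while True:
        allowed = sorted(node)  # sorted so that ties break deterministically
        best = max(allowed, key=lambda token: score(prefix, token))
        if best == END:
            return " ".join(prefix)
        prefix.append(best)
        node = node[best]


ids = ["graph neural networks", "graph language models", "prompt tuning"]
# Toy scores: the unconstrained favorite "transformers" is not a valid ID token.
preferences = {"transformers": 5.0, "graph": 1.0, "language": 0.9, "neural": 0.5}
decoded = constrained_greedy_decode(
    build_trie(ids), lambda prefix, token: preferences.get(token, 0.0)
)
```

Here the highest-scoring token overall, `transformers`, is never emitted because no valid ID starts with it, and `decoded` is guaranteed to be a member of `ids`.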

Cross-Domain Transfer: Evaluations show strong generalization, with superior performance particularly in zero-shot settings, which points to the framework's potential for open-world graph learning.

Implications and Conclusions

The proposed architecture introduces a new paradigm in which LLMs perform GNN tasks directly in the text space. This approach opens a path toward robust and scalable Graph Foundation Models (GFMs) that adapt to a wide range of tasks and domains. The language-based graph vocabulary improves both semantic and structural understanding, and it provides a basis for future work on applying such models across domains.

By integrating LLMs as GNNs, the study sets a precedent for TAG models that are expressive, transferable, and scalable. It also points to future work on improving semantic alignment across varied domains, which could lead to more universal models that bring natural language processing to graph-based settings.